Hello @Shivasai Bommaraveni,

Thanks for the question and for using the MS Q&A platform.

You may check out the options below to increase write performance:

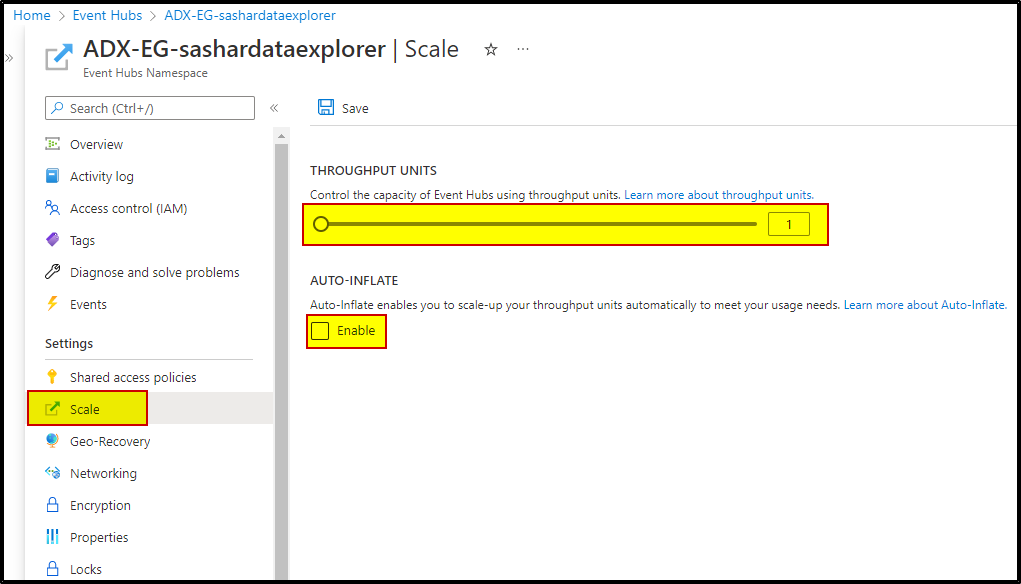

Option 1: Increase the throughput unit capacity or enable the Auto-Inflate feature.

Auto-Inflate automatically scales the number of Throughput Units assigned to your Standard Tier Event Hubs Namespace when your traffic exceeds the capacity of the Throughput Units assigned to it. You can specify a limit to which the Namespace will automatically scale.
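As a rough sizing aid, you can estimate how many throughput units (TUs) your traffic needs and compare that with the Auto-Inflate limit. This is a minimal sketch, assuming the published Standard tier limits per TU of 1 MB/s or 1,000 events/s for ingress and 2 MB/s or 4,096 events/s for egress (please verify against the current Event Hubs quotas page). `estimate_throughput_units` and `max_tus` are hypothetical names; `max_tus` stands in for the Auto-Inflate limit you configure.

```python
import math

# Assumed Standard tier limits per throughput unit (TU); verify against current docs.
INGRESS_MB_PER_TU = 1.0
INGRESS_EVENTS_PER_TU = 1000
EGRESS_MB_PER_TU = 2.0
EGRESS_EVENTS_PER_TU = 4096


def estimate_throughput_units(ingress_mb_s, ingress_events_s,
                              egress_mb_s, egress_events_s, max_tus):
    """Return (needed, granted): TUs needed for the given traffic, and TUs
    granted once capped at the hypothetical Auto-Inflate limit `max_tus`."""
    needed = max(
        math.ceil(ingress_mb_s / INGRESS_MB_PER_TU),
        math.ceil(ingress_events_s / INGRESS_EVENTS_PER_TU),
        math.ceil(egress_mb_s / EGRESS_MB_PER_TU),
        math.ceil(egress_events_s / EGRESS_EVENTS_PER_TU),
        1,  # a namespace always has at least one TU
    )
    return needed, min(needed, max_tus)


# Example: 3.5 MB/s and 2,500 events/s in, read once by a single consumer.
needed, granted = estimate_throughput_units(3.5, 2500, 3.5, 2500, max_tus=10)
print(needed, granted)  # 4 4
```

If `needed` is larger than `granted`, traffic beyond the capped capacity is throttled, so raising the Auto-Inflate limit (or the fixed TU count) is the lever Option 1 refers to.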

Option 2: Set up the `maxEventsPerTrigger` option in your query.

This option sets a rate limit on the maximum number of events processed per trigger interval. The specified total number of events is split proportionally across partitions of different volume.
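To make the proportional split concrete, here is a minimal sketch, assuming each partition's share is weighted by how many events are waiting in it. It illustrates the documented behavior only and is not the connector's actual implementation; `split_events_per_trigger` and the backlog figures are hypothetical.

```python
def split_events_per_trigger(max_events, backlog):
    """Split `max_events` across partitions in proportion to each partition's
    backlog (events waiting), never assigning more than a partition holds."""
    total = sum(backlog.values())
    if total <= max_events:
        # Everything fits in one trigger, so each partition reads its full backlog.
        return dict(backlog)
    shares = {}
    for partition, waiting in backlog.items():
        # Integer floor keeps the total at or below max_events.
        shares[partition] = max_events * waiting // total
    return shares


# Partition "0" is busier than "1" and "2", so it gets the largest share.
print(split_events_per_trigger(1000, {"0": 6000, "1": 3000, "2": 1000}))
# {'0': 600, '1': 300, '2': 100}
```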

After adding the code below to the Databricks job, it consumed more Event Hubs records per trigger and throughput improved.

`.option("maxEventsPerTrigger", 5000000)`
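Because `maxEventsPerTrigger` caps each micro-batch, it also caps the sustained read rate of the query. A quick back-of-the-envelope check shows whether that cap or the namespace's egress capacity is the tighter limit. This sketch assumes a fixed trigger interval with batches that finish within it, and an assumed Standard tier egress limit of 4,096 events/s per throughput unit; the 60-second interval, the 10 TUs, and `max_read_rate` are hypothetical, not taken from the original job.

```python
EGRESS_EVENTS_PER_TU = 4096  # assumed Standard tier egress limit per TU


def max_read_rate(max_events_per_trigger, trigger_seconds, throughput_units):
    """Return the effective upper bound on events/s read by the query: the
    lower of the per-trigger cap and the namespace egress capacity."""
    trigger_cap = max_events_per_trigger / trigger_seconds
    egress_cap = throughput_units * EGRESS_EVENTS_PER_TU
    return min(trigger_cap, egress_cap)


# 5,000,000 events per 60 s trigger allows about 83,333 events/s, but 10 TUs
# can only deliver 40,960 events/s, so egress is the bottleneck here.
print(max_read_rate(5_000_000, 60, 10))  # 40960
```

If the egress capacity comes out lower, raising `maxEventsPerTrigger` alone will not help much, which is why Option 1 and Option 2 are often combined.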

For more information, refer to Azure Databricks – Event Hubs.

Hope this helps. Please let us know if you have any further queries.
