Hi @Andres Esteban ,

Thankyou for using Microsoft Q&A platform and posting your query.

As I understand your question here, you are trying to copy data from ADLS to Postgre SQL and the data stored in the ADLS is in the format - 'store name/container name/0/year/month/day/hour/minute/20.avro'. You want to process only the new data which arrives in a newly created folder and copy the same to PostGre SQL table.

So , you want to know how to fetch the latest and greatest data from the new folder. Please correct me if my understanding is incorrect.

To achieve this, you can use one of the following approaches:

Option1:

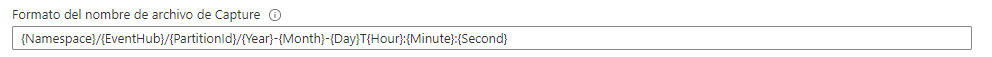

- Keep the source ADLS folder structure as storeName/containerName/0/Year-Month-DayTHour:Minute_20.avro so now the format of files would be:

storeName/containerName/0/2022-04-13T09:36_20.avro

storeName/containerName/0/2022-04-14T08:36_20.avro

....

storeName/containerName/0/2022-05-01T01:36_20.avro

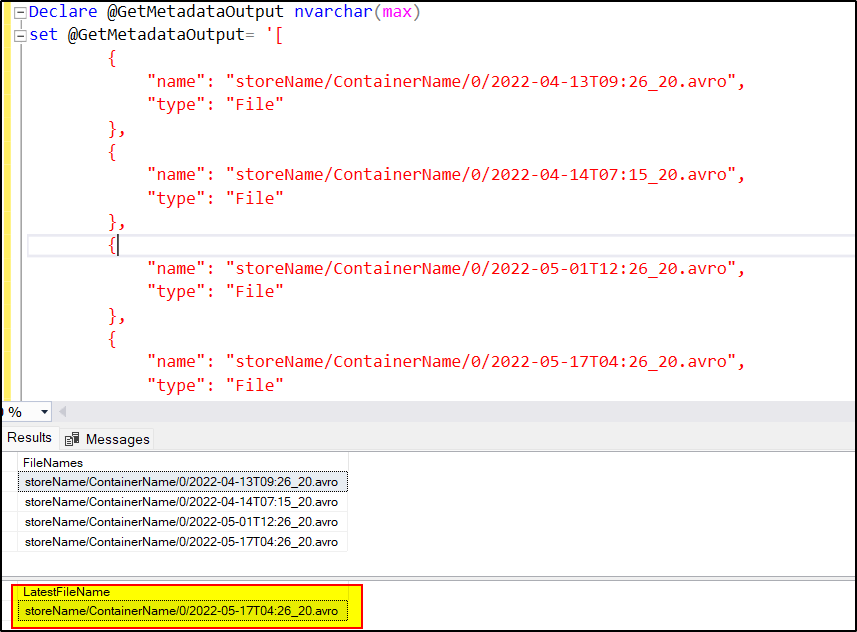

Create an ADF pipeline and use Get Metadata activity to fetch all the files present in ContainerName/0 folder and then use LookUp activity having dataset pointing to PostGreSQL /Azure SQL and write SQL query to fetch the max value of the fileName. Example:

Declare @GetMetadataOutput nvarchar(max)

set @GetMetadataOutput= '@{activity('Get Metadata1').output.childItems}'

Select * FROM OPENJSON(@GetMetadataOutput) WITH(FileNames NVARCHAR(200) '$.name')

select max(fileNames) as LatestFileName from (

Select * FROM OPENJSON(@GetMetadataOutput) WITH(FileNames NVARCHAR(200) '$.name'))a

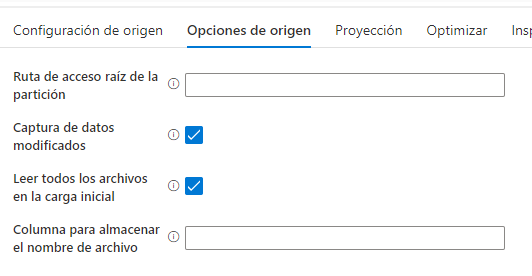

- Call dataFlow after the lookUp and pass the LatestFileName as the parameter in dataflow and use the same filePath in the source dataset.

Option2:

- Write some custom code using Azure function or Azure Databricks to iterate over recent folder and then loop through the subfolders and get the latest file and pass the same in your dataflow. Please refer to this thread for more help: https://learn.microsoft.com/en-us/answers/questions/795517/get-latest-date.html

Hope this will help. Please let us know if any further queries.

- Please don't forget to click on

or upvote

or upvote  button whenever the information provided helps you.

button whenever the information provided helps you.

Original posters help the community find answers faster by identifying the correct answer. Here is how - Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and help shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators

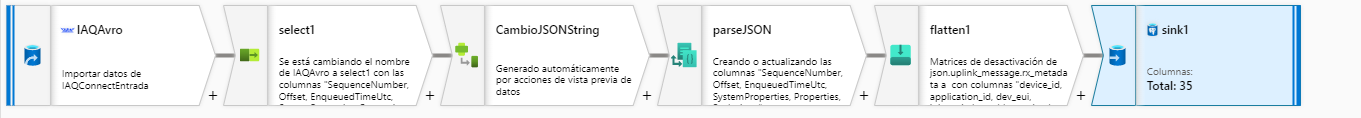

This is the Dataflow taking as source only one record to make the tests

This is the Dataflow taking as source only one record to make the tests  And this is the Pipeline

And this is the Pipeline