Hello @Quentin Chiffoleau,

Thanks for the question and for using the MS Q&A platform.

As we understand it, the ask here is to read a Parquet file that is stored in ADLS Gen2. Please do let us know if that's not accurate.

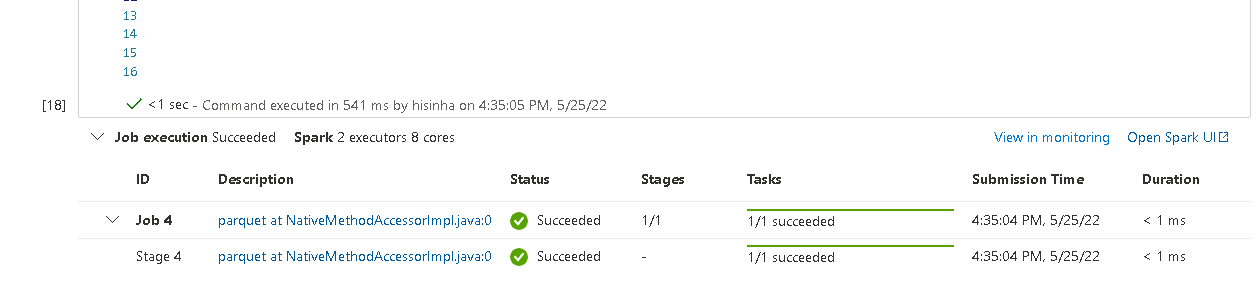

I have tried the code below and it works fine for me. I am not sure whether you are using a SAS key or an access key; I am using the access key below.

You will have to update the account key, account name, container name and relative path for this to work.
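
If you are using a SAS token instead of the access key, the Hadoop ABFS driver can be pointed at a fixed SAS token through three settings per storage account. The sketch below only builds those config entries. It assumes your Spark runtime's hadoop-azure version ships `FixedSASTokenProvider` (older runtimes may not), and `sas_token_conf` is a hypothetical helper name, not part of any library.

```python
# Sketch: ABFS config entries for SAS-token authentication on one storage account.
# Assumption: the runtime's hadoop-azure includes
# org.apache.hadoop.fs.azurebfs.sas.FixedSASTokenProvider.


def sas_token_conf(account_name: str, sas_token: str) -> dict:
    """Return the (config key -> value) pairs for a fixed SAS token (hypothetical helper)."""
    suffix = "%s.dfs.core.windows.net" % account_name
    return {
        # Switch this account from shared-key auth to SAS auth
        "fs.azure.account.auth.type.%s" % suffix: "SAS",
        # Provider class that hands the same token to every request
        "fs.azure.sas.token.provider.type.%s" % suffix:
            "org.apache.hadoop.fs.azurebfs.sas.FixedSASTokenProvider",
        # The SAS token itself
        "fs.azure.sas.fixed.token.%s" % suffix: sas_token,
    }


if __name__ == "__main__":
    # Placeholder values for illustration only
    for key, value in sas_token_conf("accountName", "<sas-token>").items():
        print(key, "=", value)
```

Each entry would then be applied with `spark.conf.set(key, value)` in place of the `fs.azure.account.key...` line, before calling `spark.read.parquet`.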

```python
from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("PySpark Read Parquet").getOrCreate()

account_name = 'accountName'  # fill in your storage account name
container_name = 'himanshu'  # fill in your container name
relative_path = 'NYCTaxi/PassengerCountStats.parquet/part-00000-21161a2b-1c65-4a76-9999-0b2403785f46-c000.snappy.parquet'  # fill in your relative folder path

# Resulting URI: abfss://<container>@<account>.dfs.core.windows.net/<relative path>
adls_path = 'abfss://%s@%s.dfs.core.windows.net/%s' % (container_name, account_name, relative_path)

# Authenticate with the storage account access key (replace "accesskey" with your key)
spark.conf.set('fs.azure.account.key.%s.dfs.core.windows.net' % account_name, "accesskey")

# Load the Parquet file into a DataFrame
df1 = spark.read.parquet(adls_path)
```
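
The relative path above points at a single part file inside the `PassengerCountStats.parquet` folder. `spark.read.parquet` also accepts the folder itself and then reads every part file in it. The sketch below builds the URI for either case and reads the access key from an environment variable instead of pasting it into the notebook. `abfss_uri`, `account_key_conf` and the `ADLS_ACCOUNT_KEY` variable are hypothetical names chosen for illustration.

```python
import os


def abfss_uri(container_name: str, account_name: str, relative_path: str = "") -> str:
    """Build an abfss:// URI for a file or folder in an ADLS Gen2 container."""
    # Strip a leading '/' so the URI does not contain '//' after the host name
    return "abfss://%s@%s.dfs.core.windows.net/%s" % (
        container_name, account_name, relative_path.lstrip("/"))


def account_key_conf(account_name: str, env_var: str = "ADLS_ACCOUNT_KEY") -> tuple:
    """Return the (config key, access key) pair, taking the key from an environment variable.

    ADLS_ACCOUNT_KEY is a hypothetical variable name; use whatever your environment provides.
    """
    key = os.environ.get(env_var)
    if not key:
        raise KeyError("environment variable %s is not set" % env_var)
    return ("fs.azure.account.key.%s.dfs.core.windows.net" % account_name, key)


# Usage inside the Spark session from the answer (not executed here):
#   spark.conf.set(*account_key_conf(account_name))
#   folder = abfss_uri(container_name, account_name, "NYCTaxi/PassengerCountStats.parquet")
#   df_all = spark.read.parquet(folder)  # reads all part files in the folder
```

Reading the folder rather than one `part-...` file is usually the safer choice, since the part file names change every time the data is rewritten.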

Please do let me know if you have any queries.

Thanks

Himanshu

- Please don't forget to click on "Accept Answer" or the upvote button whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is how
- Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and helping shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators