Hello @Shaun Bailey and welcome to Microsoft Q&A.

As I understand, the goal is to push each record of an enormous file, one by one into a stored procedure. However due to the size, you wish to first break up that large JSON into multiple smaller CSV's.

Wait, did I get the JSON and CSV swapped?

Anyway, there are several limitations to note.

As you have discovered, you are not allowed to put one loop inside another loop. However, you can put an Execute Pipeline inside a loop, and then in the child pipeline put another loop.

What you might not know, is that putting a Set Variable inside a forEach loop can be dangerous. When you have multiple instances of the loop running at the same time, the Set Variables will try to write to the same place. This causes confusion when one instance later tries to read the variable, only to find what a different instance wrote! This is why you should try to minimize Set Variable inside a loop, unless it runs in sequence, not parallel.

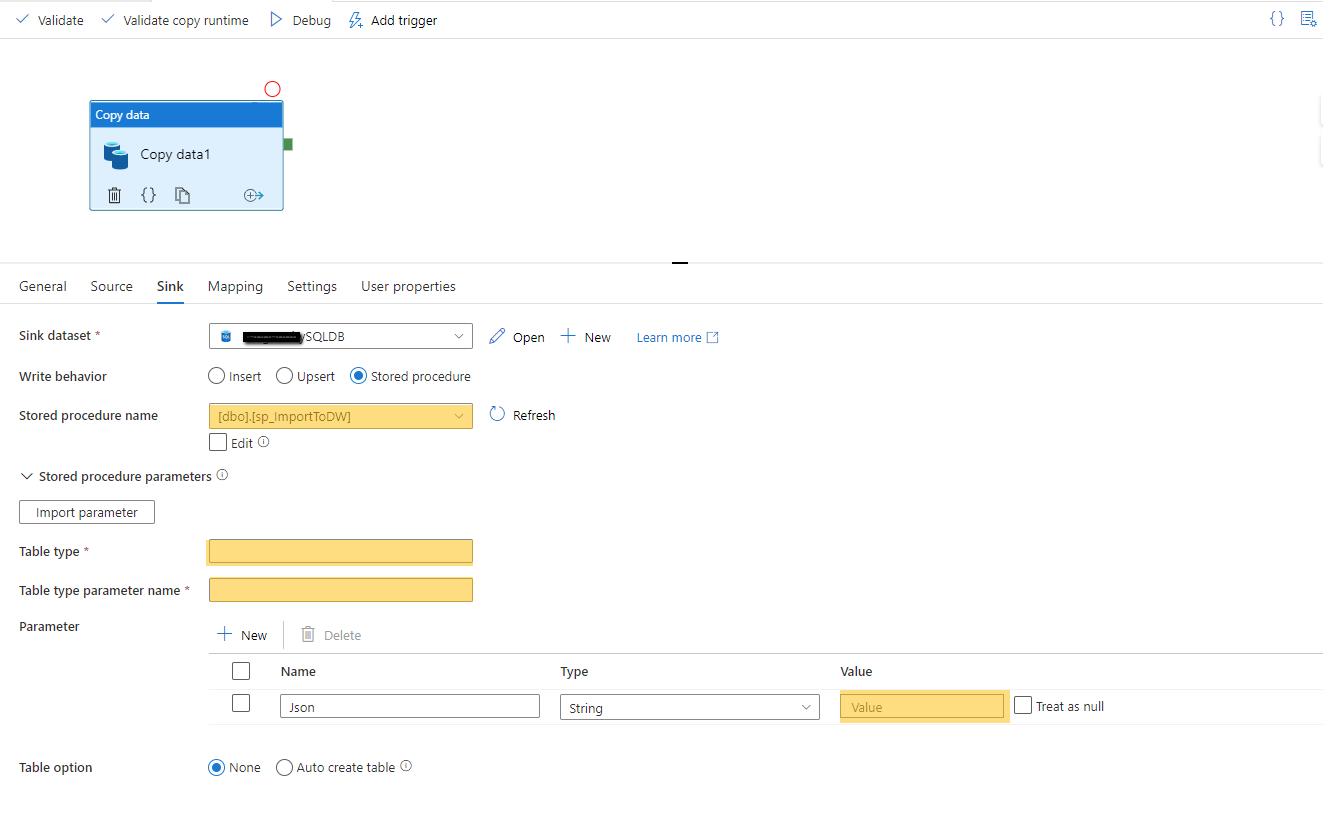

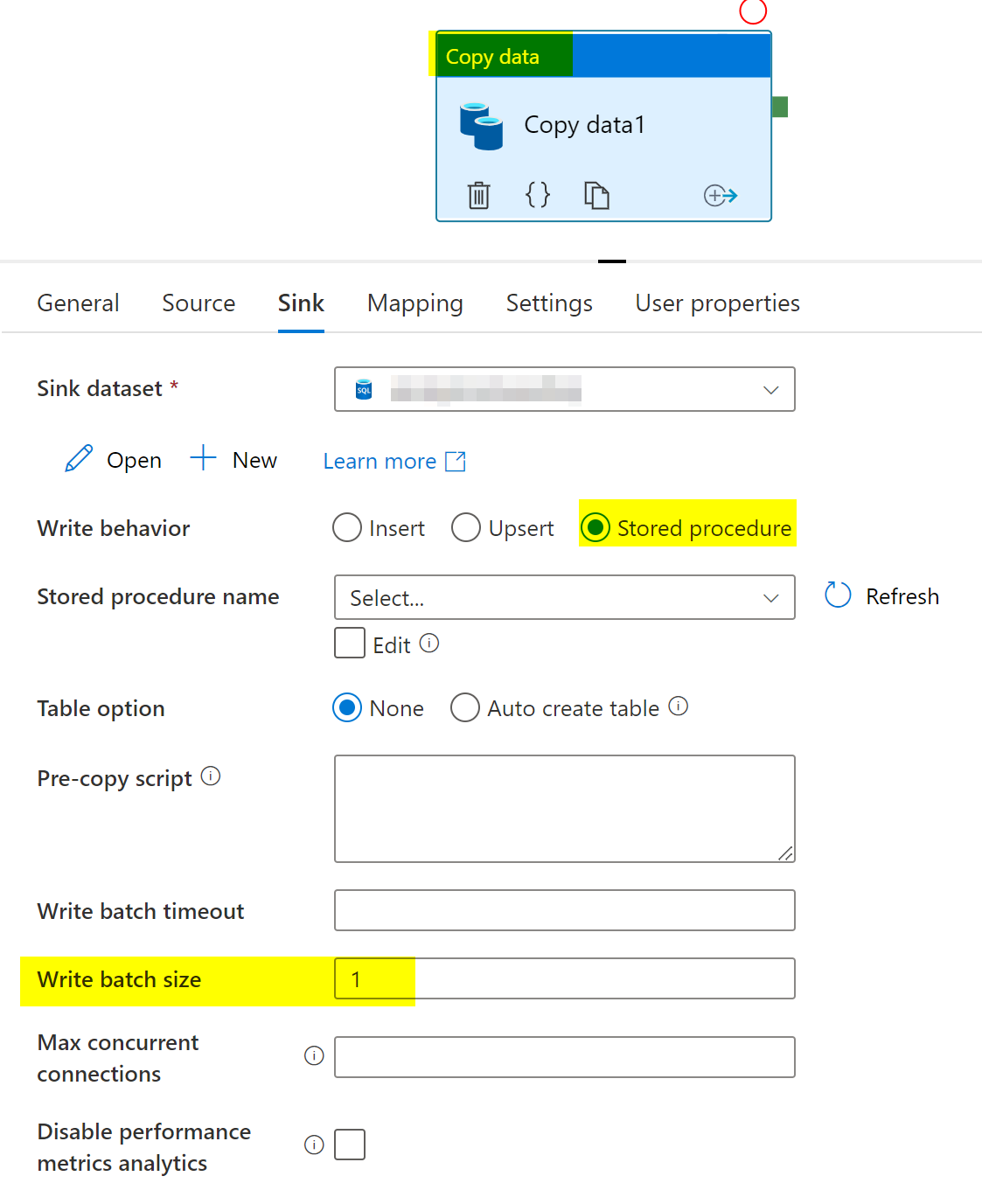

There are 3 activities which can support Stored Procedure. Copy Activity. Lookup activity. Stored Proc activity. Which one you use depends upon whether you are expecting data to be returned to the Factory in response to the procedure running.

If you are expecting return data, use Lookup activity.

If the data/parameters you want to send is in a variable, use Stored Procedure activity. Also works when there is no parameters to send, and you just want to run the proc, like truncating a table.

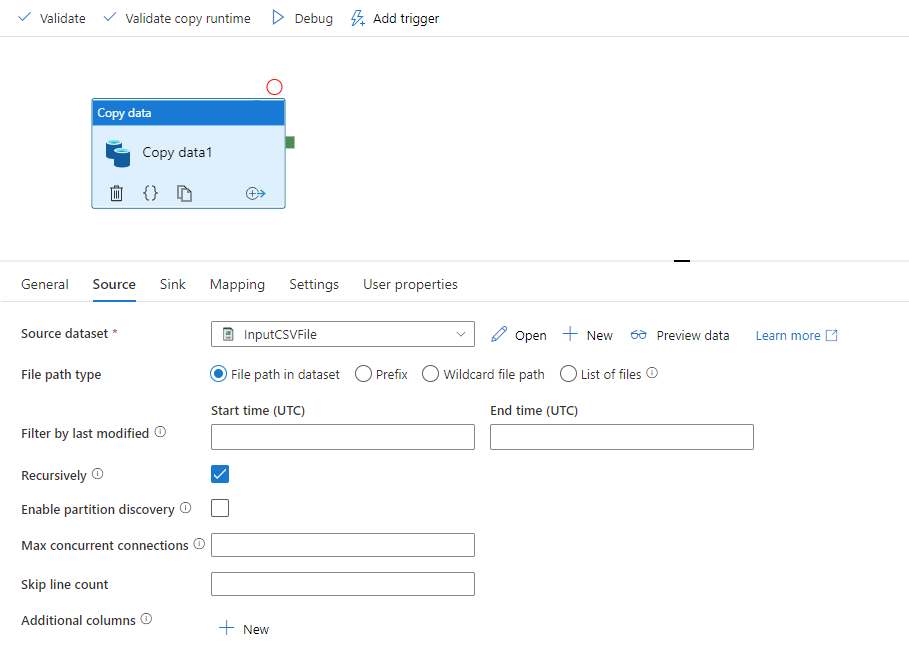

If the data you want to send is in a file, use Copy Activity.

You did specify sending 1 row per execution of stored procedure, as opposed to a batch of rows at a time. Normally CopyActivity does it in batches, however we can control the batch size! So, if we set the write batch size to 1, we end up sending 1 row at a time!

The reason I am leaning towards Copy Activity is because you would otherwise need to fetch all the records with a Lookup activity so you could feed them to Stored Proc or Lookup activities. The Lookup activity has a limitation on how many rows / bytes are returned. It is not always possible to work around this limitation.

So, you might not need 2 loops for this. One activity to divide up the file into smaller parts. One loop to go over each smaller part. Inside the loop one copy activity.

This leaves the implementation of the division.

When the sink is delimited text AND the target is a folder, you can choose the maximum number of rows per file. This effects breaking up the big file into smaller csv's. This feature is not available when the sink is JSON.

This is one reason I wanted to double check which end was CSV and which end was JSON. The other reason, is more use cases transform hierarchical JSON into CSV for easier loading into SQL. Going the other direction seemed a little odd, unless you are intentionally encoding a flat record into a hierarchical form and stuffing that into a single cell.

Even if we are going from CSV to JSON, that is no big deal. We just need an extra copy activity.

Big CSV ----> many small CSV ---> many small JSON

That is a copy activity to break up the big csv into many smaller csv's . Then a forEach loop on the many small csv's. Inside the loop, first a copy to change from small csv to small json. Then another copy to push json to SQL, using write batch size = 1. End loop.

The sink dataset of the Big csv -> little csv , can be the same as source dataset of little csv --> little json.

Please do let me if you have any queries.

Thanks

Martin

------------------------------------------

- Please don't forget to click on

or upvote

or upvote  button whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is how

button whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is how - Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and help shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators