Hello @arkiboys,

Thanks for the question and for using the MS Q&A platform.

There are a couple of ways to transfer notebooks from one workspace to another.

Method 1: Use the following Python code to migrate the sandboxed user environments, which include the nested folder structure and notebooks for each user.

Copy and save the following Python script to a file, and run it from a terminal on a machine where the Databricks CLI is installed and configured with a profile for each workspace ("primary" for the source, "secondary" for the target). For example: `python scriptname.py`.
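Optionally, before running the migration script that follows, you can confirm that both CLI profiles it relies on exist. This is a minimal pre-flight sketch, assuming the legacy Databricks CLI, which stores profiles as INI sections in `~/.databrickscfg` (for example, created with `databricks configure --token --profile secondary`). The helper name `missing_profiles` is hypothetical.

```python
import configparser
import os

# Hypothetical helper: report which Databricks CLI profiles are not configured.
# Assumes the legacy CLI config format: one INI section per profile with a "host" key.
def missing_profiles(profiles, config_path=os.path.expanduser("~/.databrickscfg")):
    """Return the profiles that have no section with a 'host' entry in config_path."""
    parser = configparser.ConfigParser()
    # read() silently skips a missing file, so every profile is then reported missing.
    parser.read(config_path)
    return [p for p in profiles if not (parser.has_section(p) and parser.has_option(p, "host"))]

if __name__ == "__main__":
    missing = missing_profiles(["primary", "secondary"])
    if missing:
        print("Missing Databricks CLI profiles: " + ", ".join(missing))
    else:
        print("Both profiles are configured")
```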

```python
import os
from subprocess import call, check_output

# Databricks CLI profiles for the source (export) and target (import) workspaces.
EXPORT_PROFILE = "primary"
IMPORT_PROFILE = "secondary"

# Get a list of all user folders under /Users in the source workspace.
user_list_out = check_output(["databricks", "workspace", "ls", "/Users", "--profile", EXPORT_PROFILE])
user_list = user_list_out.decode(encoding="utf-8").splitlines()
print(user_list)

# Export the sandboxed environment (folders, notebooks) for each user and import it into the new workspace.
# Libraries are not included with these APIs / commands.
for user in user_list:
    print("Trying to migrate workspace for user " + user)

    # Local directory that holds this user's exported notebooks.
    os.makedirs(user, exist_ok=True)

    export_exit_status = call(["databricks", "workspace", "export_dir",
                               "/Users/" + user, "./" + user, "--profile", EXPORT_PROFILE])
    if export_exit_status == 0:
        print("Export Success")
        import_exit_status = call(["databricks", "workspace", "import_dir",
                                   "./" + user, "/Users/" + user, "--profile", IMPORT_PROFILE])
        if import_exit_status == 0:
            print("Import Success")
        else:
            print("Import Failure")
    else:
        print("Export Failure")

print("All done")
```
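If you want to see exactly what the script will run before touching the target workspace, a dry-run sketch like the following builds the same export/import argument lists for each user and prints them instead of executing them. `migration_commands` and `preview` are hypothetical helper names. The `export_dir`/`import_dir` spellings match the legacy Databricks CLI used in the script; newer CLI releases may spell these subcommands differently, so check `databricks workspace --help` for your version.

```python
import os
import shlex

# Same profile names as in the migration script.
EXPORT_PROFILE = "primary"
IMPORT_PROFILE = "secondary"

def migration_commands(user, local_root="."):
    """Return the (export, import) argument lists for one user's /Users folder."""
    local_dir = os.path.join(local_root, user)
    export_cmd = ["databricks", "workspace", "export_dir", "/Users/" + user, local_dir,
                  "--profile", EXPORT_PROFILE]
    import_cmd = ["databricks", "workspace", "import_dir", local_dir, "/Users/" + user,
                  "--profile", IMPORT_PROFILE]
    return export_cmd, import_cmd

def preview(user_list):
    """Print each command as a shell-quoted string without running anything."""
    for user in user_list:
        for cmd in migration_commands(user):
            print(shlex.join(cmd))

if __name__ == "__main__":
    # Hypothetical example user; in practice use the output of `databricks workspace ls /Users`.
    preview(["alice@contoso.com"])
```

Running `preview(["alice@contoso.com"])` prints one export line and one import line for that example user, which you can compare against the workspace paths you expect before doing the real migration.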

Method2: You can do it manually: Export as DBC file from one workspace and then import to another workspace.

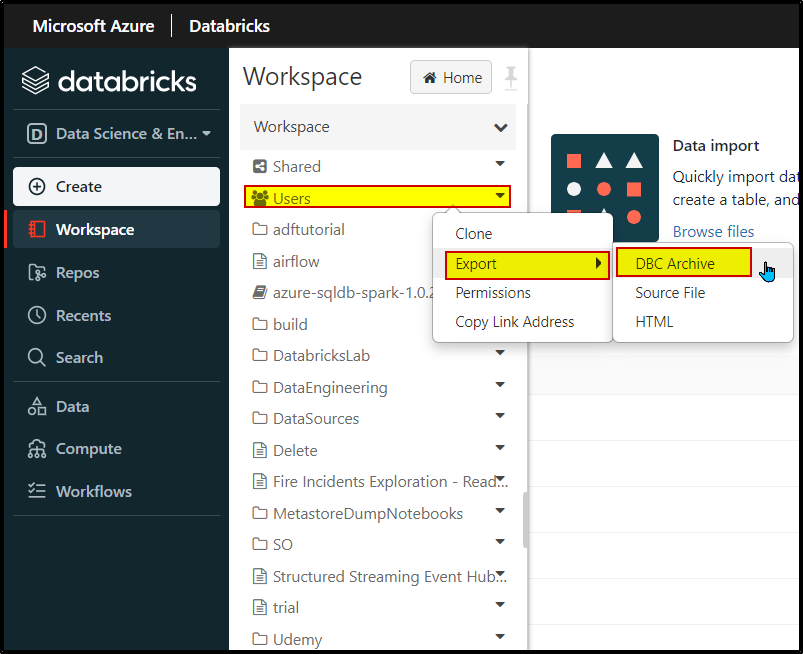

Step1: Export from one workspace.

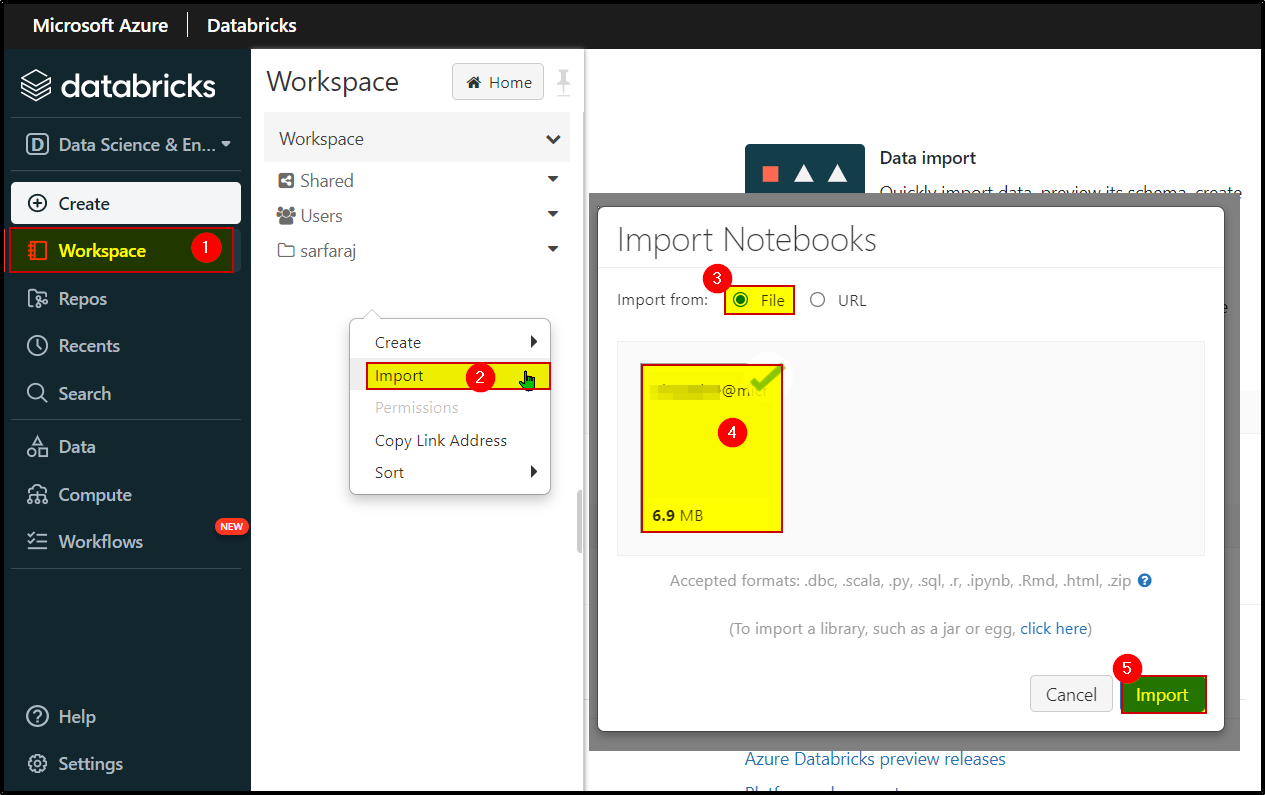

Step2: Import to another workspace.

For more details, refer to Regional disaster recovery for Azure Databricks clusters and Migrate the workspace folders and notebooks.

Hope this helps. Please let us know if you have any further questions.
