Short and to the point

- Use double for non-integer math where the most precise answer isn't necessary.

- Use decimal for non-integer math where precision is needed (e.g. money and currency).

In what kinds of situations should I use the decimal data type? I found that most likely I can use double instead. Could someone give me some hints on that? Thank you.

This is usually taught in a beginner's computer science class. On computers, floating point is done in base 2. Just as 1/3 is a repeating decimal in base 10, some decimal fractions (0.01, for example) cannot be expressed exactly in base 2.

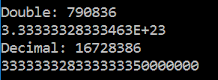

Simple example:

    double num = 0.0;

    for (var i = 0; i < 100; ++i)
    {
        num = num + 0.01;
    }

    Console.WriteLine(num); // 1.0000000000000007

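For this reason, when dealing with money (which you want to add up exactly), you use integer arithmetic with an implied decimal point. This is what the decimal data type is for: it stores an integer together with a power-of-ten scale, so values like 0.01 are represented exactly.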
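For comparison, here is a minimal sketch of the same loop accumulating in decimal instead of double. The variable name `total` is only illustrative, and the invariant culture is used so the output does not depend on regional settings.

```csharp
using System;
using System.Globalization;

// Same loop as the double example, but accumulating in decimal.
decimal total = 0m;

for (var i = 0; i < 100; ++i)
{
    total += 0.01m; // the m suffix makes the literal a decimal
}

Console.WriteLine(total.ToString(CultureInfo.InvariantCulture)); // 1.00
Console.WriteLine(total == 1m);                                   // True
```

Because 0.01 has an exact decimal representation, no error accumulates across the 100 additions.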

If the language you are using does not support a decimal data type, then for money you typically do the math in pennies (or mills if more precision is needed) and divide by 100 (or 1000 for mills) only when displaying the result.
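The pennies approach can be sketched as follows, written in C# only for consistency with the rest of the thread. The prices and variable names are made up for illustration, and the formatting assumes a non-negative total.

```csharp
using System;
using System.Globalization;

// Hypothetical prices held as whole cents: 19.99, 2.50 and 0.01.
long[] itemPricesInCents = { 1999, 250, 1 };

// Every addition is exact integer arithmetic.
long totalCents = 0;
foreach (long price in itemPricesInCents)
{
    totalCents += price;
}

// Split into whole units and cents only when formatting for display.
// Assumes totalCents is non-negative.
string display = string.Format(
    CultureInfo.InvariantCulture, "{0}.{1:D2}", totalCents / 100, totalCents % 100);

Console.WriteLine(display); // 22.50
```

Keeping the amount as an integer until the last step means the implied decimal point never takes part in the arithmetic.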

I found that most likely I can use double instead,

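The double data type stores an imprecise (binary floating-point) approximation of most decimal fractions, while decimal represents them exactly, within its range of roughly 28 significant digits.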

See https://learn.microsoft.com/en-us/dotnet/api/system.double?view=net-6.0

=> Precision

In this case, the floating-point value provides an imprecise representation of the number that it represents. Performing additional mathematical operations on the original floating-point value often tends to increase its lack of precision.
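A small sketch of what that imprecision looks like in practice: a single addition of two double values already fails an equality check that the decimal version passes. The `tolerance` value is an assumption chosen for illustration, not a recommended constant.

```csharp
using System;

double doubleSum = 0.1 + 0.2;
decimal decimalSum = 0.1m + 0.2m;

// Neither 0.1 nor 0.2 is exact in base 2, so the double sum is not exactly 0.3.
Console.WriteLine(doubleSum == 0.3);   // False
Console.WriteLine(decimalSum == 0.3m); // True

// With double, compare against a tolerance instead of testing for equality.
const double tolerance = 1e-9; // assumed value for this sketch
Console.WriteLine(Math.Abs(doubleSum - 0.3) < tolerance); // True
```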

If numbers must add up correctly or balance, use decimal. This includes any financial storage or calculations, scores, or other numbers that people might do by hand.

If the exact value of numbers is not important, use double for speed. This includes graphics, physics or other physical sciences computations where there is already a "number of significant digits".
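As a rough illustration of the "significant digits" point, the sketch below computes a free-fall distance in double and reports only as many digits as the inputs justify. The measured values and variable names are hypothetical.

```csharp
using System;
using System.Globalization;

// Hypothetical measured inputs, each known to about three significant digits.
double gravity = 9.81; // m/s^2
double seconds = 2.00; // s

// Distance fallen from rest: d = 1/2 * g * t^2
double distance = 0.5 * gravity * seconds * seconds;

// Report three significant digits; any error in the last bits of the double is irrelevant here.
Console.WriteLine(distance.ToString("G3", CultureInfo.InvariantCulture)); // 19.6
```

The measurement uncertainty is many orders of magnitude larger than the rounding error of double, so the faster type costs nothing in accuracy.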

For money, always decimal. That is why it was created.

Hi,

It's all about precision. Based on the precision need, you can use either double or decimal.

Use double for non-integer math where the most precise answer isn't necessary. Use decimal for non-integer math where precision is needed.

Example: