Hello @Saif Ahmad,

Thanks for the question and for using the MS Q&A platform.

This is expected behaviour in Azure HDInsight.

As a best practice, don't store files on the local disks of the cluster VMs. We recommend saving them to an Azure Storage account instead.

There are several ways you can access files in Azure Storage from an HDInsight cluster. The URI scheme provides unencrypted access (with the `wasb:` prefix) and TLS-encrypted access (with `wasbs:`). We recommend using `wasbs` wherever possible, even when accessing data that lives in the same Azure region.

- Using the fully qualified name. With this approach, you provide the full path to the file that you want to access:
  - `wasb://<containername>@<accountname>.blob.core.windows.net/<file.path>/`
  - `wasbs://<containername>@<accountname>.blob.core.windows.net/<file.path>/`
- Using the shortened path format. With this approach, you replace the path up to the cluster root with:
  - `wasb:///<file.path>/`
  - `wasbs:///<file.path>/`
- Using the relative path. With this approach, you only provide the relative path to the file that you want to access:
  - `/<file.path>/`
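To illustrate how the three forms relate, here is a minimal Python sketch that expands a shortened or relative path into the fully qualified form. It assumes the "cluster root" is the default container of the cluster's default storage account, and it assumes relative paths use `wasbs`; on a real cluster that scheme comes from the default file system setting (`fs.defaultFS`). The function names `fully_qualified` and `resolve` and the values `mycontainer`/`myaccount` are hypothetical. The code only manipulates strings and does not contact Azure Storage.

```python
from urllib.parse import urlsplit

BLOB_HOST = "blob.core.windows.net"


def fully_qualified(path: str, container: str, account: str, secure: bool = True) -> str:
    """Build a fully qualified URI: wasbs (TLS) by default, wasb if secure=False."""
    scheme = "wasbs" if secure else "wasb"
    return f"{scheme}://{container}@{account}.{BLOB_HOST}/{path.lstrip('/')}"


def resolve(uri: str, container: str, account: str, secure: bool = True) -> str:
    """Expand a shortened (wasb:///...) or relative (/...) path to a fully
    qualified URI, treating container/account as the cluster root (assumption)."""
    parts = urlsplit(uri)
    if parts.scheme in ("wasb", "wasbs"):
        if parts.netloc:
            return uri  # already fully qualified: return unchanged
        # Shortened form: empty authority, keep the scheme the caller chose
        return fully_qualified(parts.path, container, account, parts.scheme == "wasbs")
    if not parts.scheme and uri.startswith("/"):
        # Relative form: the real scheme comes from the cluster's default
        # file system; here it is assumed and controlled by `secure`.
        return fully_qualified(uri, container, account, secure)
    # Anything else (for example a local file:/// path) is rejected
    raise ValueError(f"not a wasb/wasbs or relative path: {uri!r}")


if __name__ == "__main__":
    for p in (
        "wasbs://mycontainer@myaccount.blob.core.windows.net/data/file.csv",
        "wasbs:///data/file.csv",
        "/data/file.csv",
    ):
        print(resolve(p, "mycontainer", "myaccount"))
```

With these example values, each call prints `wasbs://mycontainer@myaccount.blob.core.windows.net/data/file.csv`, showing that all three forms point at the same blob. A local path such as `file:///tmp/data.csv` raises `ValueError`, in line with the advice above to keep files in the storage account rather than on the VMs' local disks.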

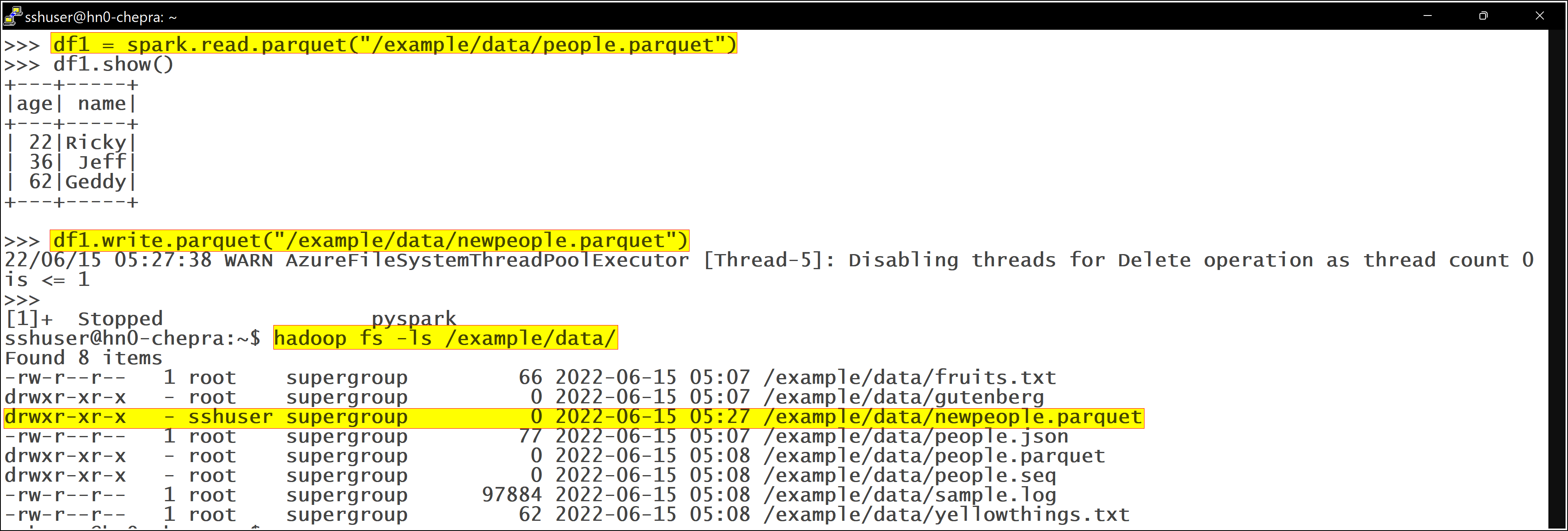

For more details, refer to Azure HDInsight - Access files from within cluster and Run Apache Spark from the Spark Shell.

Hope this helps. Please let us know if you have any further queries.
