Hi @Anonymous ,

Thank you for using the Microsoft Q&A platform and for posting your query.

As I understand your issue, you are trying to copy data from Azure Data Lake Storage to a dedicated SQL pool, but the copy performs poorly for larger files. Please let me know if my understanding is incorrect.

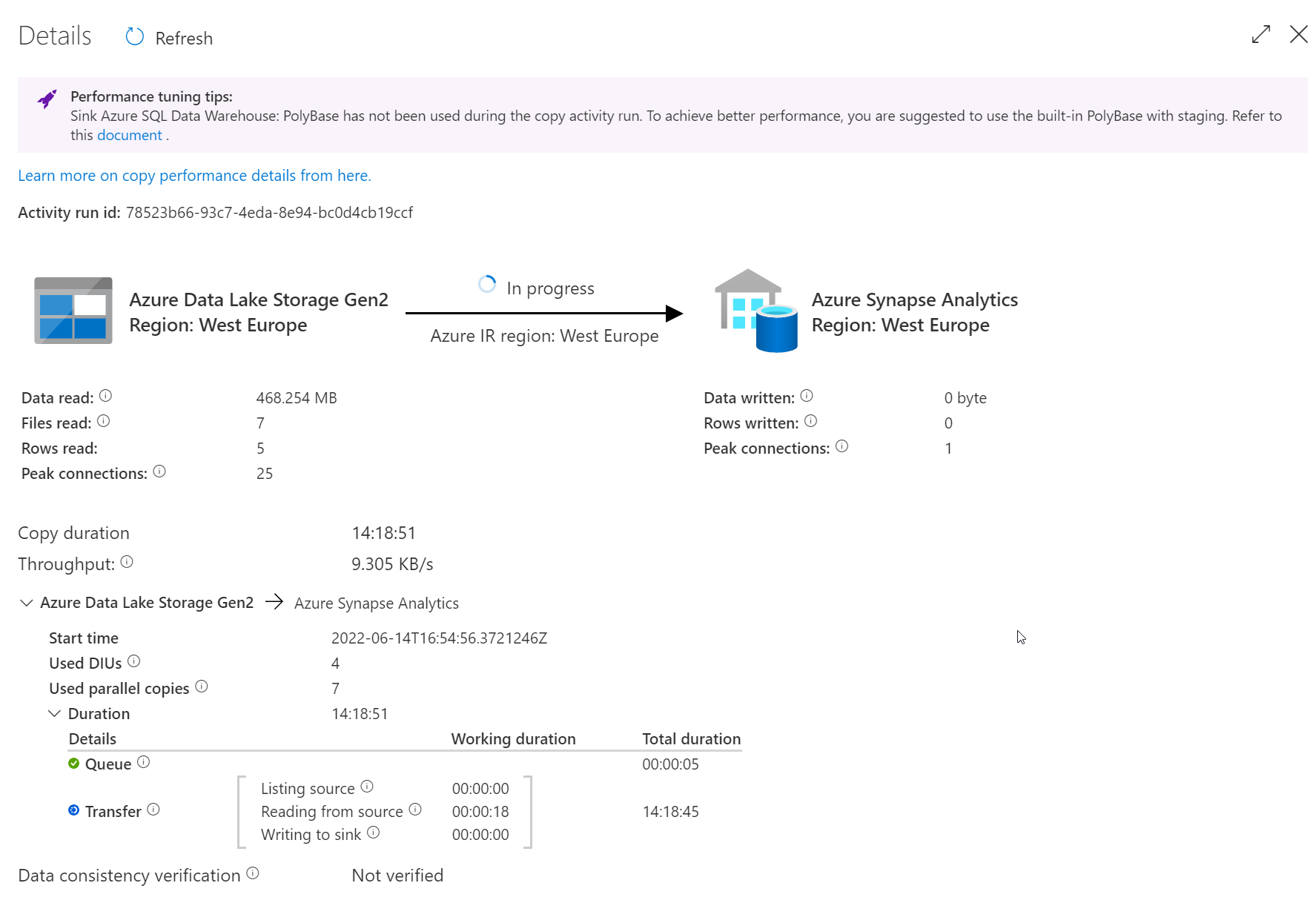

As can be seen in the screenshot, the suggestion is to use PolyBase when copying data to Azure Synapse Analytics.

Please refer to the following articles for more details: "Use PolyBase to load data into Azure Synapse Analytics" and "Staged copy by using PolyBase".

Using PolyBase is an efficient way to load a large amount of data into Azure Synapse Analytics with high throughput. You'll see a large gain in the throughput by using PolyBase instead of the default BULKINSERT mechanism.
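As a rough illustration, here is a minimal Python sketch that builds the part of a Copy activity definition that turns on PolyBase, optionally with staged copy. The property names (`allowPolyBase`, `polyBaseSettings`, `enableStaging`, `stagingSettings`) are the ones used in the Copy activity JSON; the helper functions, the linked service name `MyStagingBlobStorage`, and the staging path are hypothetical placeholders, so please adjust them to your own pipeline.

```python
import json


def polybase_sink(reject_value: int = 0, use_type_default: bool = True) -> dict:
    """Build the sink section of a Copy activity that loads through PolyBase."""
    return {
        "type": "SqlDWSink",
        "allowPolyBase": True,
        "polyBaseSettings": {
            # Fail the load once more than `reject_value` rows are rejected.
            "rejectType": "value",
            "rejectValue": reject_value,
            "useTypeDefault": use_type_default,
        },
    }


def staged_copy_settings(staging_linked_service: str, staging_path: str) -> dict:
    """Build the staging settings used when the source is not directly PolyBase-compatible."""
    return {
        "enableStaging": True,
        "stagingSettings": {
            "linkedServiceName": {
                "referenceName": staging_linked_service,  # hypothetical name
                "type": "LinkedServiceReference",
            },
            "path": staging_path,  # hypothetical container/folder
        },
    }


if __name__ == "__main__":
    # Merge the sink and staging settings into the activity's typeProperties.
    type_properties = {
        "sink": polybase_sink(),
        **staged_copy_settings("MyStagingBlobStorage", "staging-container/polybase"),
    }
    print(json.dumps(type_properties, indent=2))
```

The printed JSON can be compared against the `typeProperties` of your own Copy activity. Staged copy is only needed when the source data or format does not meet the requirements for direct PolyBase copy.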

You can also consider increasing the Data Integration Units (DIUs) and the degree of copy parallelism (parallel copies) to improve the performance of the copy activity.

Kindly refer to these articles for more details: "Data Integration Units" and "Parallel copy".
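For reference, both settings live in the Copy activity's `typeProperties` as `dataIntegrationUnits` and `parallelCopies`. The sketch below is only an illustration: the helper `tuned_copy_properties` and the values 32 and 8 are hypothetical starting points, not recommendations, so please test with your own workload.

```python
import json


def tuned_copy_properties(diu: int, parallel_copies: int) -> dict:
    """Return the Copy activity properties that control DIUs and parallel copies."""
    for name, value in (("diu", diu), ("parallel_copies", parallel_copies)):
        # Both settings must be positive whole numbers.
        if not isinstance(value, int) or value < 1:
            raise ValueError(f"{name} must be a positive integer, got {value!r}")
    return {
        "dataIntegrationUnits": diu,
        "parallelCopies": parallel_copies,
    }


if __name__ == "__main__":
    # Hypothetical starting values; raise them step by step and compare run times.
    print(json.dumps(tuned_copy_properties(diu=32, parallel_copies=8), indent=2))
```

If you leave these properties out, the service chooses the values automatically, so it is worth comparing a tuned run against the default run in the monitoring view.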

Hope this helps. Please let us know if you have any further queries.

------------------------------

- Please don't forget to accept the answer or upvote whenever the information provided helps you.

- Original posters help the community find answers faster by identifying the correct answer. Here is how
- Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the Volunteer Moderators (VM) program and helping shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators