Hi @Lidia Sánchez,

Thank you for using the Microsoft Q&A platform and for posting your query.

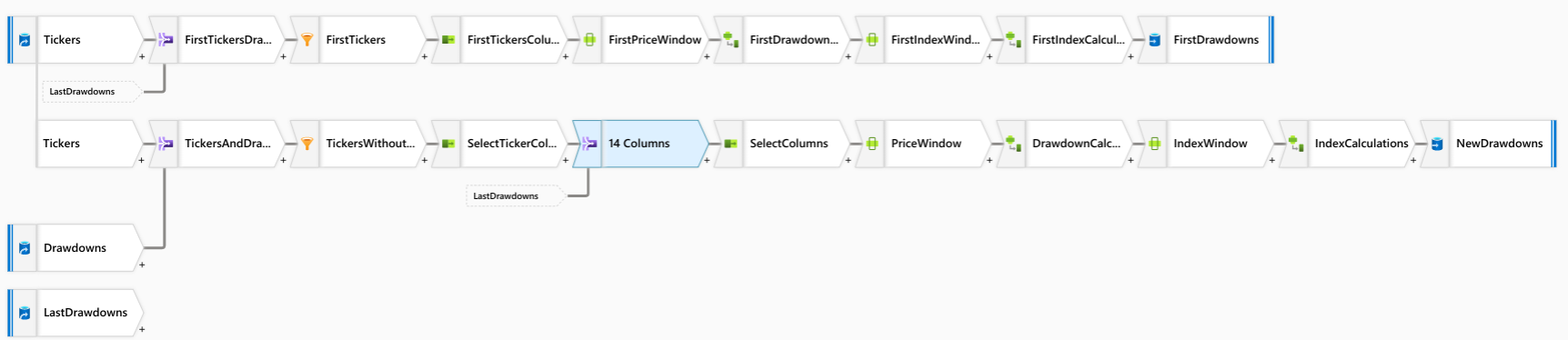

From the details in your query, it seems the data flow is timing out while executing the join transformation. Please let me know if that's not the case. The error might occur because the stream chosen for broadcast is too large to produce its data within the broadcast timeout limit.

- Check the Optimize tab on your data flow transformations for activities like join, exists, and lookup. The default option for broadcast is Auto. If Auto is set, or if you're manually setting the left or right side to broadcast under Fixed, you can either set a larger Azure integration runtime (IR) configuration or turn off broadcast. For the best performance in data flows, we recommend that you allow Spark to broadcast by using Auto and use a memory-optimized Azure IR.

- If you're running the data flow in a debug test execution from a debug pipeline run, you might run into this condition more frequently. That's because Azure Data Factory throttles the broadcast timeout to 60 seconds to maintain a faster debugging experience. You can extend the timeout to the 300-second timeout of a triggered run. To do so, you can use the Debug > Use Activity Runtime option to use the Azure IR defined in your Execute Data Flow pipeline activity.
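The checks in the two bullets above can be summarized as a small decision helper. This is a hypothetical Python sketch, not an Azure Data Factory API: the names `BroadcastOption`, `broadcast_timeout_seconds`, and `suggest_fixes` are illustrative only, and the 60-second and 300-second values are the ones quoted in this answer. In the actual service, these settings are changed in the ADF UI (the Optimize tab of the transformation and the Debug menu).

```python
from enum import Enum


class BroadcastOption(Enum):
    """Hypothetical model of the Optimize-tab broadcast settings (not an ADF API)."""
    AUTO = "auto"
    FIXED_LEFT = "fixed_left"
    FIXED_RIGHT = "fixed_right"
    OFF = "off"


# Timeouts quoted in the answer: debug runs are throttled to 60 s, while
# triggered runs (or debug with "Use Activity Runtime") get 300 s.
DEBUG_TIMEOUT_S = 60
TRIGGERED_TIMEOUT_S = 300


def broadcast_timeout_seconds(debug_run: bool, use_activity_runtime: bool = False) -> int:
    """Return the broadcast timeout that applies to a data flow run."""
    if debug_run and not use_activity_runtime:
        return DEBUG_TIMEOUT_S
    return TRIGGERED_TIMEOUT_S


def suggest_fixes(option: BroadcastOption, debug_run: bool,
                  use_activity_runtime: bool = False) -> list[str]:
    """List the remediation steps from the answer that apply to this setup."""
    if option is BroadcastOption.OFF:
        # Broadcast is already off, so the broadcast timeout does not apply.
        return []
    fixes = [
        "Use a larger Azure IR configuration (memory-optimized recommended)",
        "Turn off broadcast on the join/exists/lookup Optimize tab",
    ]
    if debug_run and not use_activity_runtime:
        # Debug sessions get the shorter 60 s limit unless the activity IR is used.
        fixes.append("Enable Debug > Use Activity Runtime to get the 300 s timeout")
    return fixes


if __name__ == "__main__":
    print(broadcast_timeout_seconds(debug_run=True))                             # 60
    print(broadcast_timeout_seconds(debug_run=True, use_activity_runtime=True))  # 300
    for step in suggest_fixes(BroadcastOption.AUTO, debug_run=True):
        print("-", step)
```

The helper only encodes the order of checks described above: if broadcast is enabled (Auto or Fixed), first consider a larger or memory-optimized IR or disabling broadcast, and if the failure happens in a debug session, switch to the activity runtime to get the longer timeout.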

Please check the following article for troubleshooting this timeout and other data flow issues: Error code: DF-Executor-BroadcastTimeout

Hope this helps. Please let us know if you have any further queries.
