Hello @Ömer Faruk Özsakarya ,

Thanks for the question and using MS Q&A platform.

Since you are using Copy activity of ADF and the source is Cosmos DB API for Mongo DB, I believe your mapping is hierarchical source to tabular sink. And your source have array object from which you would want to iterate and extract the data. Please correct if my understanding is not accurate.

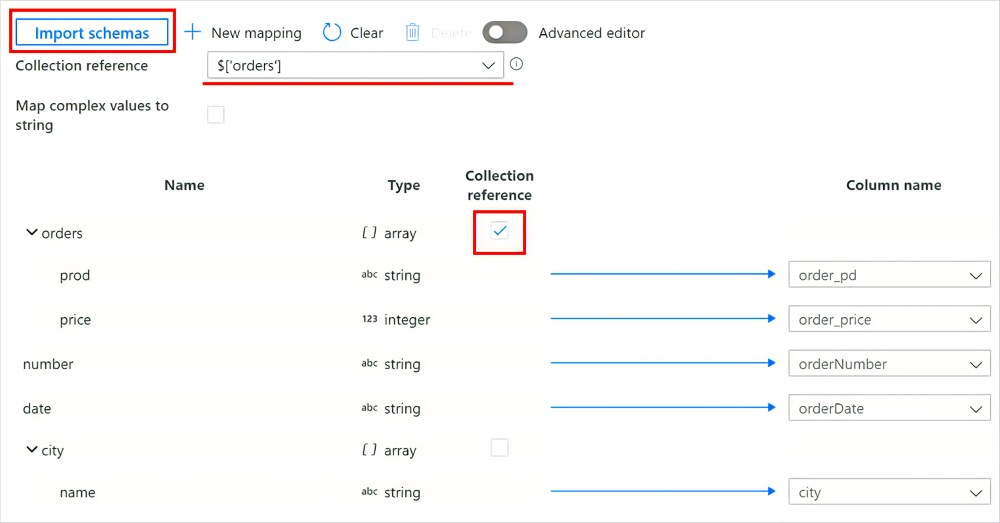

Since you are using Copy activity you can utilize Collection reference under mapping section and select the array for which you would like to iterate and copy the data. But using the feature, only single array is supported for such operation.

For more info please refer to this doc: ADF schema mapping in copy activity - Hierarchical source to tabular sink

In case if you have nested/complex arrays then copy activity doesn't fit for your solution and as per the ADF product team it is recommended to use Mapping data flow and use Flatten transformation. But currently ADF mapping data flow doesn't support Cosmos DB API for Mongo DB as source. Currently only Cosmos DB (SQL API) is supported as source for Mapping data flows.

If you have any feature request suggestion, please feel free to log it in IDEAS Forum here: https://feedback.azure.com/d365community/forum/1219ec2d-6c26-ec11-b6e6-000d3a4f032c.

All the feedback shared in this forum are actively monitored and reviewed by ADF engineering team. Please do share the feedback link once it is posted so that we can pass the feedback to respective product teams.

As an alternative, I would recommend having a look at Azure Synapse Link for Azure Cosmos DB and see if fits your business requirement.

With Synapse Link, you can now directly connect to your Azure Cosmos DB containers from Azure Synapse Analytics and access the analytical store with no separate connectors. Azure Synapse Analytics currently supports Synapse Link with Synapse Apache Spark and serverless SQL pool.

Hope this will info helps.

------------------------------

- Please don't forget to click on

or upvote

or upvote  button whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is how

button whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is how - Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and help shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators