Hello @Maulik Dave,

Thanks for the question and for using the Microsoft Q&A platform.

As I understand it, you have a web API that uses a service principal token to call an Azure Databricks (ADB) job, which runs Python code. That code needs to access a storage container or its folders. You would like to know whether you need to explicitly configure credentials in your ADB notebook's Python code to access the storage account. Please correct me if my understanding is not accurate.

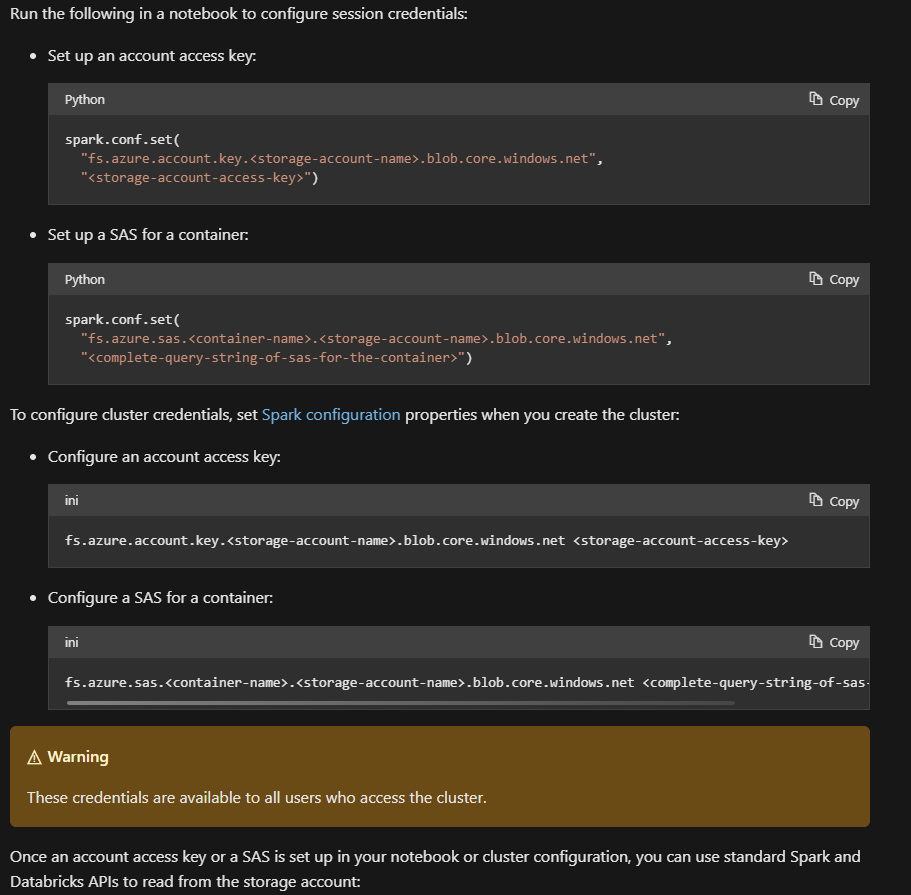

You can read data from public storage accounts without any additional settings. For private storage accounts, however, you must configure credentials (a Shared Key or a Shared Access Signature (SAS)) before you can access data in Azure Blob storage. You can set them either as session credentials (in the notebook) or as cluster credentials (in the cluster configuration).
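As a rough illustration of the session-credential option, here is a minimal sketch that assumes the WASB (`wasbs://`) driver and its documented Spark configuration keys. The helper names `blob_session_conf` and `wasbs_path`, and the account and container names, are hypothetical and only for illustration.

```python
from typing import Optional, Tuple


def blob_session_conf(
    account: str,
    container: Optional[str] = None,
    account_key: Optional[str] = None,
    sas_token: Optional[str] = None,
) -> Tuple[str, str]:
    """Return the (key, value) pair to pass to spark.conf.set.

    Exactly one of account_key (Shared Key) or sas_token (SAS) must be given.
    """
    host = f"{account}.blob.core.windows.net"
    if (account_key is None) == (sas_token is None):
        raise ValueError("Provide exactly one of account_key or sas_token")
    if account_key is not None:
        # Shared Key: applies to the whole storage account.
        return f"fs.azure.account.key.{host}", account_key
    if container is None:
        raise ValueError("A SAS credential needs the container name")
    # SAS: applies to a single container.
    return f"fs.azure.sas.{container}.{host}", sas_token


def wasbs_path(account: str, container: str, directory: str = "") -> str:
    """Build the wasbs:// URI used to read from the container."""
    return f"wasbs://{container}@{account}.blob.core.windows.net/{directory}"


# In a Databricks notebook, where `spark` is predefined (not run here):
# key, value = blob_session_conf("mystorageacct", account_key="<access-key>")
# spark.conf.set(key, value)
# df = spark.read.text(wasbs_path("mystorageacct", "mycontainer", "folder"))
```

The helpers only build strings, so you can check them outside Databricks. The commented lines show where `spark.conf.set` would apply the credential for the current session.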

For more information, please refer to these articles:

- Access your blob container from Azure Databricks workspace

- Access Azure Blob storage directly

- Accessing Azure Blob Storage from Azure Databricks

- YouTube video - Connecting Azure Databricks to Azure Blob Storage

To handle credentials safely in Azure Databricks, we recommend following the Secret management user guide, as shown in Mount an Azure Blob storage container.
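For example, a mount that reads the access key from a secret scope instead of hard-coding it might look like the sketch below. It assumes a secret scope and key already exist in your workspace. The helper name `mount_args` and all placeholder values are hypothetical, while `dbutils.secrets.get` and `dbutils.fs.mount` are the notebook utilities that Databricks provides.

```python
from typing import Any, Dict


def mount_args(
    account: str, container: str, mount_point: str, account_key: str
) -> Dict[str, Any]:
    """Build keyword arguments for dbutils.fs.mount using a Shared Key."""
    host = f"{account}.blob.core.windows.net"
    return {
        "source": f"wasbs://{container}@{host}",
        "mount_point": mount_point,
        # The key is passed in by the caller, ideally read from a secret scope.
        "extra_configs": {f"fs.azure.account.key.{host}": account_key},
    }


# In a Databricks notebook, where `dbutils` is predefined (not run here):
# key = dbutils.secrets.get(scope="<scope-name>", key="<key-name>")
# dbutils.fs.mount(**mount_args(
#     "<storage-account-name>", "<container-name>", "/mnt/<mount-name>", key))
```

Because the key comes from `dbutils.secrets.get`, it never appears in the notebook source.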

Hope this info helps.
