Hello @Sukumar Vinnakota ,

Thanks for the question and for using the MS Q&A platform.

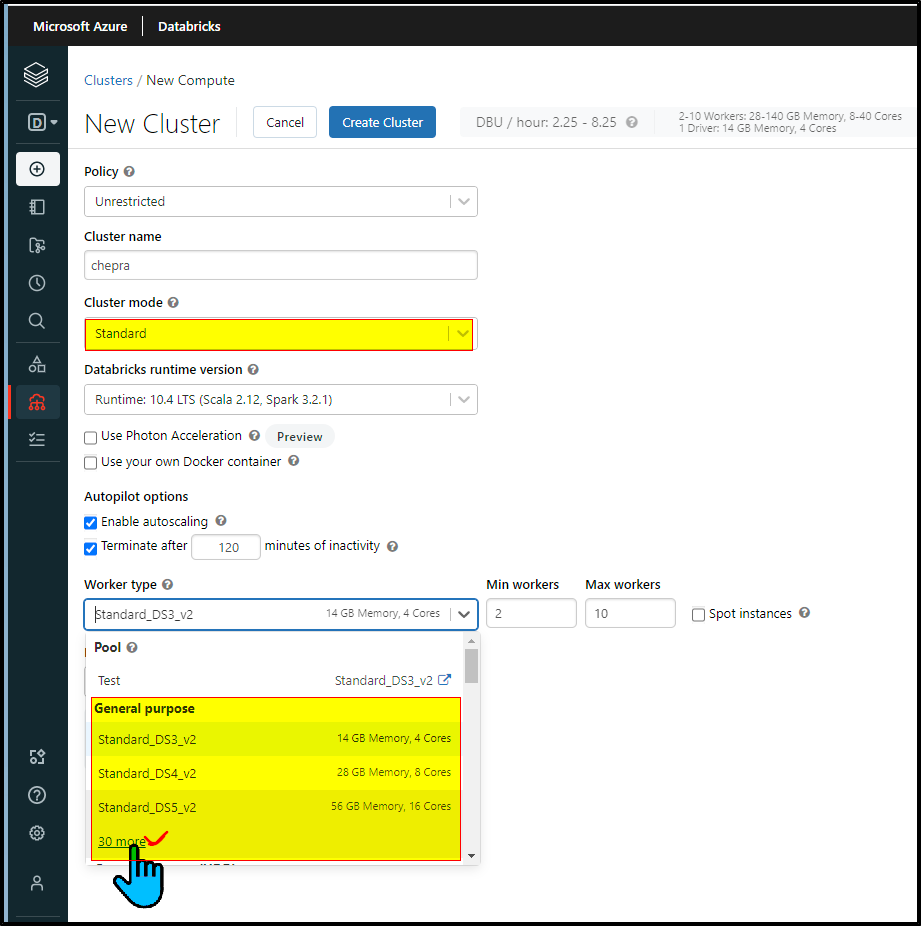

Azure Databricks - Node Sizes

Azure Databricks offers three distinct workloads on several VM instances tailored for your data analytics workflow. The Jobs Compute and Jobs Light Compute workloads make it easy for data engineers to build and execute jobs, and the All-Purpose Compute workload makes it easy for data scientists to explore, visualise, manipulate and share data and insights interactively.

Note: In Standard cluster mode, you have 33 instances available under General purpose type. You can pick any instance as per your requirement.

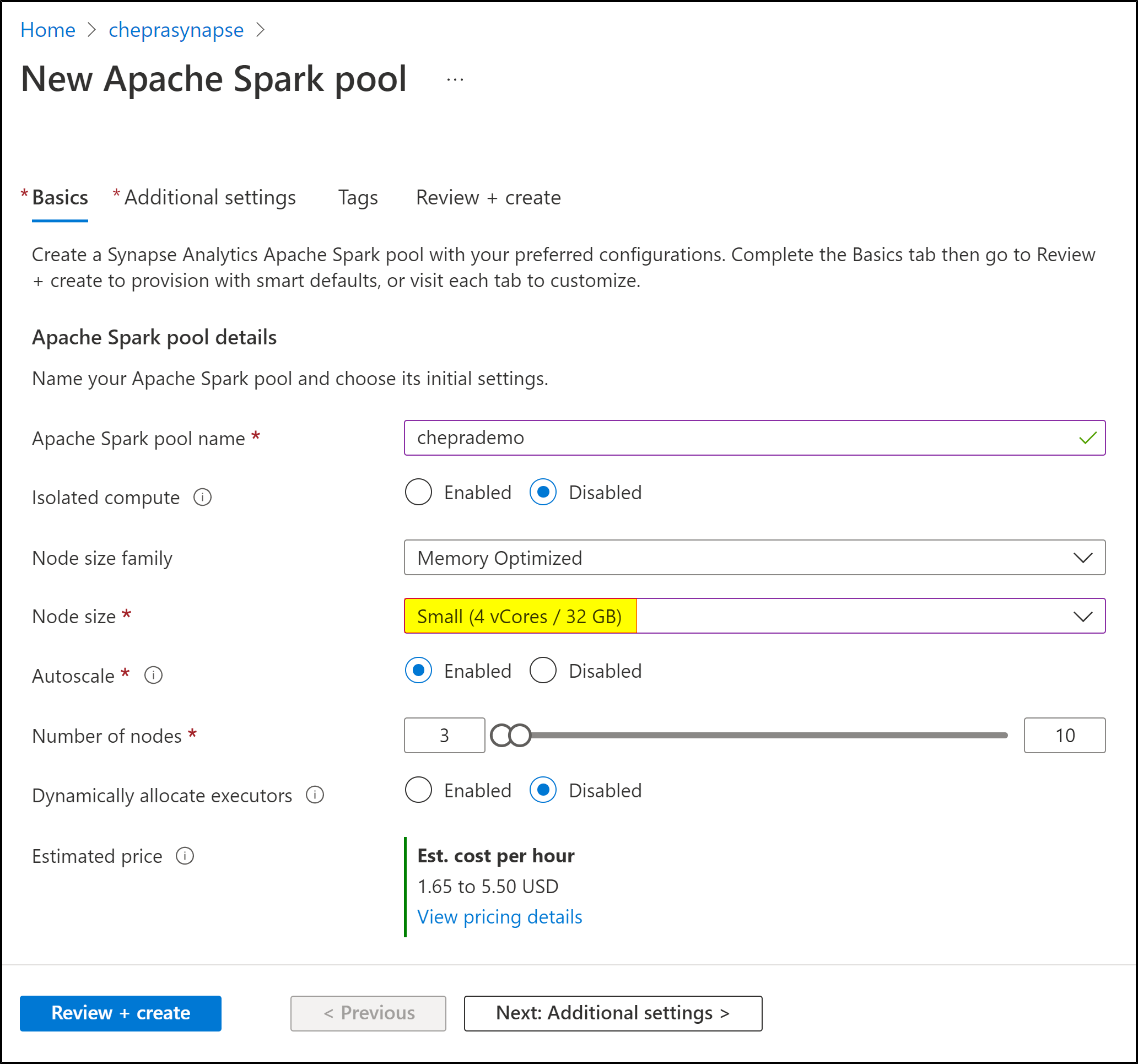

Azure Synapse Analytics - Node Sizes

A Spark pool can be defined with node sizes that range from a Small compute node with 4 vCores and 32 GB of memory up to an XXLarge compute node with 64 vCores and 512 GB of memory per node. Node sizes can be altered after pool creation, although the instance may need to be restarted.
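
If it helps with sizing, here is a rough Python sketch that multiplies the per-node figures above by a node count to estimate raw pool capacity. The names `NODE_SIZES` and `pool_capacity` and the node counts are hypothetical, chosen only for illustration. Only the Small and XXLarge specs quoted above are included, and the totals ignore any overhead reserved by the service.

```python
# Per-node specs as quoted in this answer. Intermediate sizes are
# intentionally left out because they are not listed above.
NODE_SIZES = {
    "Small": {"vcores": 4, "memory_gb": 32},
    "XXLarge": {"vcores": 64, "memory_gb": 512},
}


def pool_capacity(node_size: str, node_count: int) -> dict:
    """Return raw total vCores and memory for a pool of identical nodes."""
    if node_count < 1:
        raise ValueError("node_count must be at least 1")
    spec = NODE_SIZES[node_size]
    return {
        "vcores": spec["vcores"] * node_count,
        "memory_gb": spec["memory_gb"] * node_count,
    }


# Hypothetical example: a pool of three Small nodes.
print(pool_capacity("Small", 3))
```

This is only arithmetic on the published node sizes, so treat the result as an upper bound when comparing it with a Databricks cluster of similar VM instances.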

Hope this helps. Please let us know if you have any further queries.

------------------------------

- Please don't forget to accept the answer or upvote whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer.

- Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and help shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators