Hello @Anonymous,

Welcome to the Microsoft Q&A platform, and thanks for posting your query.

Are you seeing any errors when you experience high memory usage? Here are a few things to check on your end.

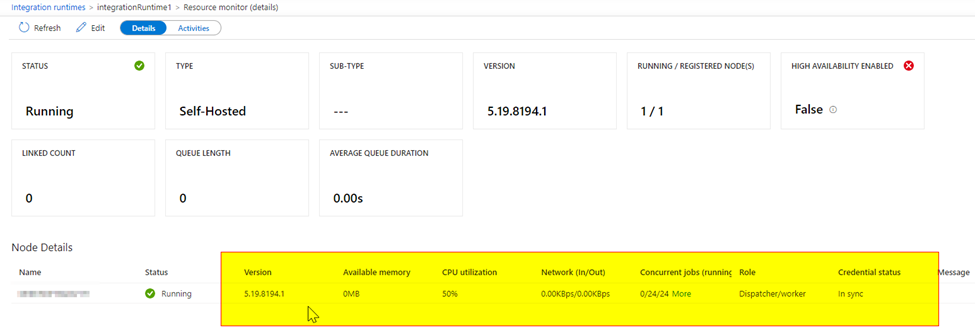

If memory and CPU usage are consistently high, it could be because too many pipelines are running on the self-hosted IR. If you see only momentary spikes in memory usage, they could be caused by a large volume of concurrent activity.
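To tell those two cases apart, you could look at a series of memory-usage readings from the IR node. The sketch below is a hypothetical helper, not part of Azure Data Factory: it assumes you have collected usage samples as percentages, and the 80% threshold and 80% "sustained" fraction are illustrative defaults you would tune yourself.

```python
def classify_memory_usage(samples, threshold=80.0, sustained_fraction=0.8):
    """Classify memory-usage samples (percent) taken from a self-hosted IR node.

    threshold and sustained_fraction are hypothetical defaults, not ADF settings.
    """
    if not samples:
        raise ValueError("need at least one sample")
    high = sum(1 for s in samples if s >= threshold)
    if high / len(samples) >= sustained_fraction:
        # Consistently high: possibly too many pipelines running on this IR.
        return "sustained"
    if high > 0:
        # Only some readings are high: possibly a burst of concurrent activity.
        return "spike"
    return "normal"
```

For example, `classify_memory_usage([85, 90, 88, 92, 87])` returns `"sustained"`, while `classify_memory_usage([40, 45, 95, 42, 41])` returns `"spike"`.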

1) Check the 'Limit concurrent jobs' setting. The maximum limit is 96.

2) Check the network throughput.

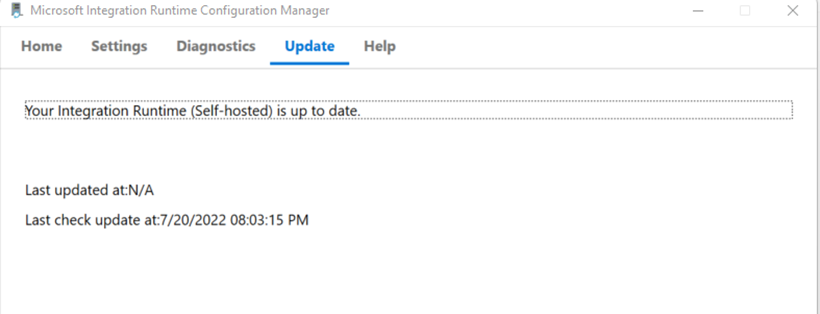

3) Check that you have the latest version of the self-hosted IR installed.

4) If you are using a single node for the self-hosted IR, consider adding a second node.

5) Check the resource usage and concurrent activity execution on the IR node. Adjust the interval and trigger times of activity runs so that too many executions don't land on a single IR node at the same time.

6) Consider adding more memory to the IR node machine.
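For step 5, one simple way to avoid many runs starting at the same moment is to spread their trigger start times evenly across a window. The sketch below is a hypothetical planning helper; it does not call any Azure Data Factory API. The pipeline names and the 60-minute window are assumptions for illustration, and you would apply the resulting offsets to your triggers' start times yourself.

```python
def stagger_offsets(pipeline_names, window_minutes=60):
    """Spread trigger start offsets (in minutes) evenly across a window.

    Hypothetical helper: pipeline names and window size are illustrative only.
    """
    if not pipeline_names:
        return {}
    step = window_minutes / len(pipeline_names)
    # Each pipeline starts `step` minutes after the previous one.
    return {name: int(i * step) for i, name in enumerate(pipeline_names)}
```

For example, `stagger_offsets(["load_a", "load_b", "load_c", "load_d"])` gives offsets of 0, 15, 30 and 45 minutes, so the four runs no longer start together on the same node.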

Here is the general self-hosted integration runtime troubleshooting document.

When setting the 'Limit concurrent jobs' value, consider the number of CPU cores and the amount of RAM on the machine. The more cores and memory a node has, the higher you can set this value.

You can also scale out by increasing the number of nodes. With multiple nodes, the total concurrent jobs limit is the sum of the limits set on all available nodes.

For example, if you add 3 nodes to your SHIR and set 'Limit concurrent jobs' to 10 on each node, you get a maximum of 30 concurrent jobs.
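The same arithmetic as a small sketch: the total is just the sum of the per-node limits. Treating 96 as a per-node cap is an assumption based on the maximum quoted in step 1, and the function name is hypothetical.

```python
# Assumption: the maximum of 96 quoted in this answer applies per node.
MAX_CONCURRENT_JOBS_PER_NODE = 96


def total_concurrent_jobs(per_node_limits):
    """Return the total concurrent jobs across all SHIR nodes (hypothetical helper)."""
    for limit in per_node_limits:
        if not 1 <= limit <= MAX_CONCURRENT_JOBS_PER_NODE:
            raise ValueError(f"limit {limit} outside 1..{MAX_CONCURRENT_JOBS_PER_NODE}")
    # The total capacity is the sum of each node's own limit.
    return sum(per_node_limits)
```

With three nodes each set to 10, `total_concurrent_jobs([10, 10, 10])` returns 30, matching the example above.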

There is no specific recommended number; it depends on your workload and on the CPU and memory of your nodes.

One more advantage of adding another node is high availability.

Please let me know if you have any further questions.