Hi @Srihari Adabala,

Welcome to the Microsoft Q&A platform, and thanks for posting your question.

As I understand your query, you are trying to process a 7 MB file using a mapping data flow, but you are getting the following error: "DF-Executor-OutOfMemoryError". Please let me know if my understanding is incorrect.

As the error message suggests, the cause of this error is that "The cluster is running out of memory."

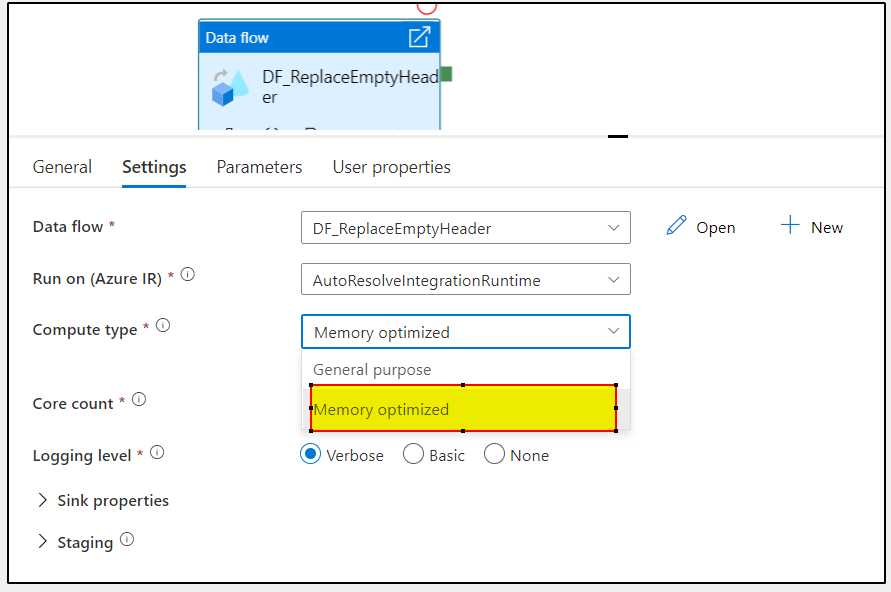

The recommended solution is to retry using an integration runtime with a higher core count and/or the memory optimized compute type.

There are two available options for the type of Spark cluster to utilize: general purpose & memory optimized.

General purpose clusters are the default selection and will be ideal for most data flow workloads. These tend to be the best balance of performance and cost.

If your data flow has many joins and lookups, you may want to use a memory optimized cluster. Memory optimized clusters can store more data in memory and will minimize any out-of-memory errors you may get. Memory optimized clusters have the highest price point per core, but they also tend to result in more successful pipelines. If you experience any out-of-memory errors when executing data flows, switch to a memory optimized Azure IR configuration.
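As a rough illustration of switching to a memory optimized configuration, here is a minimal Python sketch that builds the JSON definition for an Azure IR. The property names (`computeProperties`, `dataFlowProperties`, `computeType`, `coreCount`, `timeToLive`) follow the Azure integration runtime JSON definition as I understand it, so please verify them against the current documentation. The helper function, the IR name, the core count of 16, and the 10-minute time to live are assumptions chosen only for illustration.

```python
import json

# Compute types discussed in this answer (assumed spellings for the JSON definition).
ALLOWED_COMPUTE_TYPES = {"General", "MemoryOptimized"}


def build_azure_ir_definition(name, compute_type="MemoryOptimized",
                              core_count=16, time_to_live_minutes=10):
    """Hypothetical helper: return an Azure IR definition as a plain dict.

    It only builds the JSON document; it does not call any Azure API.
    """
    if compute_type not in ALLOWED_COMPUTE_TYPES:
        raise ValueError(f"Unsupported compute type: {compute_type}")
    return {
        "name": name,
        "properties": {
            "type": "Managed",
            "typeProperties": {
                "computeProperties": {
                    "location": "AutoResolve",
                    # Settings for the Spark cluster that runs data flows.
                    "dataFlowProperties": {
                        "computeType": compute_type,
                        "coreCount": core_count,
                        "timeToLive": time_to_live_minutes,
                    },
                }
            },
        },
    }


# Illustrative name and sizes; adjust to your own factory and workload.
definition = build_azure_ir_definition("MemoryOptimizedDataFlowIR")
print(json.dumps(definition, indent=2))
```

You can set the same values in the portal when you create or edit the Azure IR under its data flow runtime settings, and then select that IR on the data flow activity in your pipeline.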

Kindly check the following troubleshooting documents: DF-Executor-OutOfMemoryError and Cluster type.

Hope this helps. Please let us know if you have any further queries.
