Hi @Anonymous ,

Thankyou for using Microsoft Q&A platform and asking your query here.

As I understand your ask , you want to load data of a table which has columns with datatype as 'money' and 'numeric' from SQL server to Delta lake and you want to know how to preserve the datatype till the target database. Please let me know if my understanding is incorrect.

I tried to repro your case , for that I performed following steps:

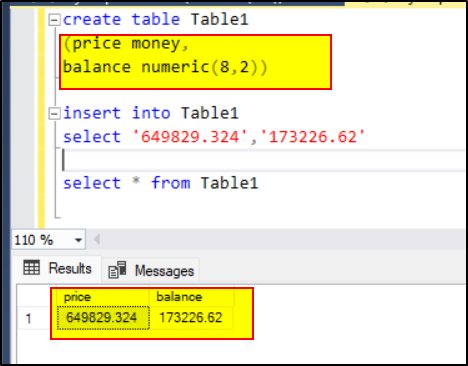

1. I created a table in my Azure SQL database (Note: Dataflow doesnt support self hosted IR, so you cant use on premise SQL server) with columns having datatype as money and numeric(8,2)

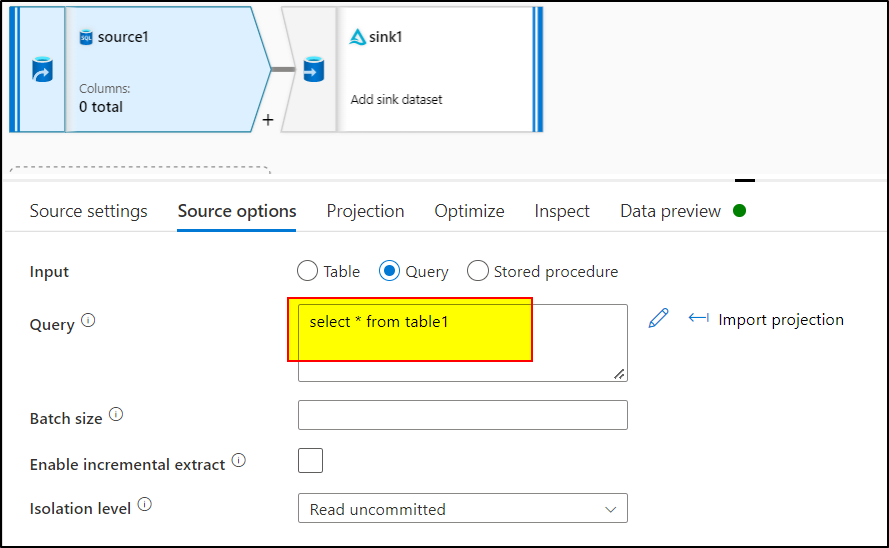

2. Inside the dataflow , I created a dataset pointing to the above SQL db and selected the same in the source transformation.

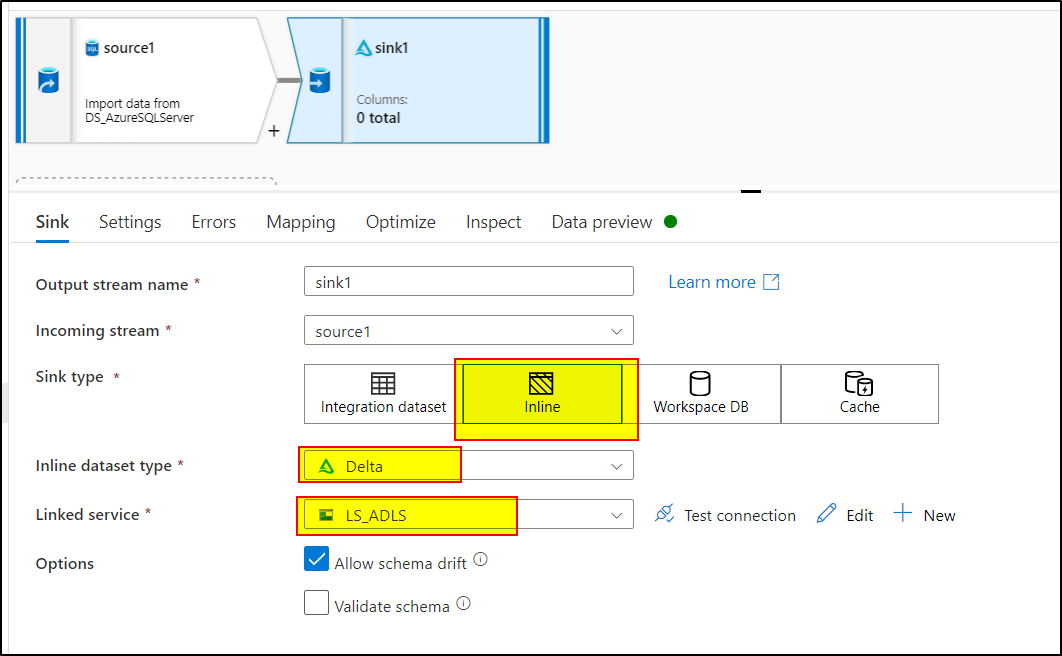

3. In the sink transformation, use inline dataset and select Delta format , select the linked service which would eventually store the data in the ADLS in .parquet format.

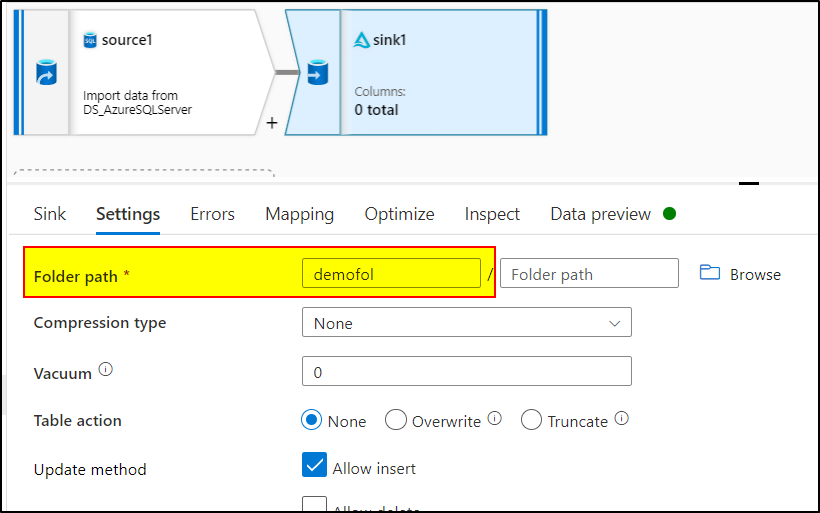

In the sink settings, provide the folder path for creation of the file.

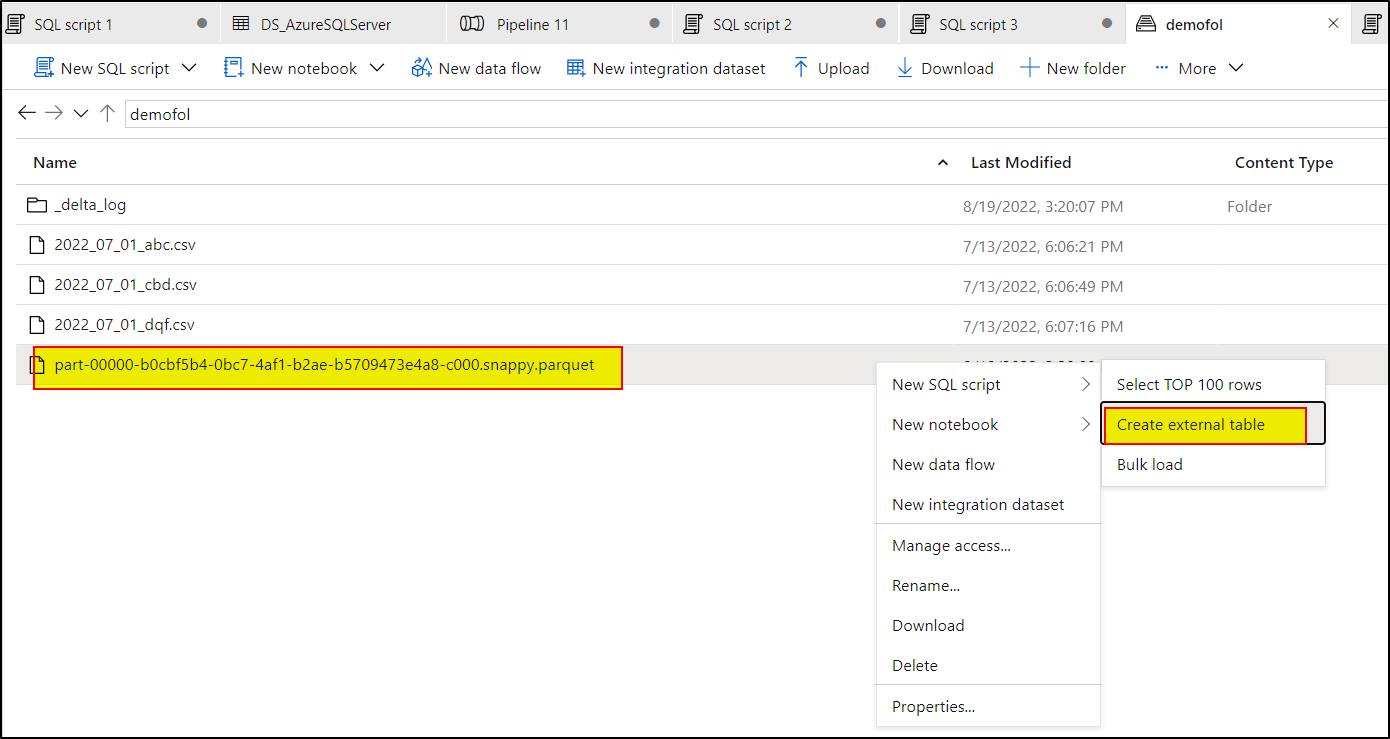

4. After running the pipeline calling the dataflow, it created a .parquet file inside the folder. Right click on the same and select 'Create external table' using sql script.

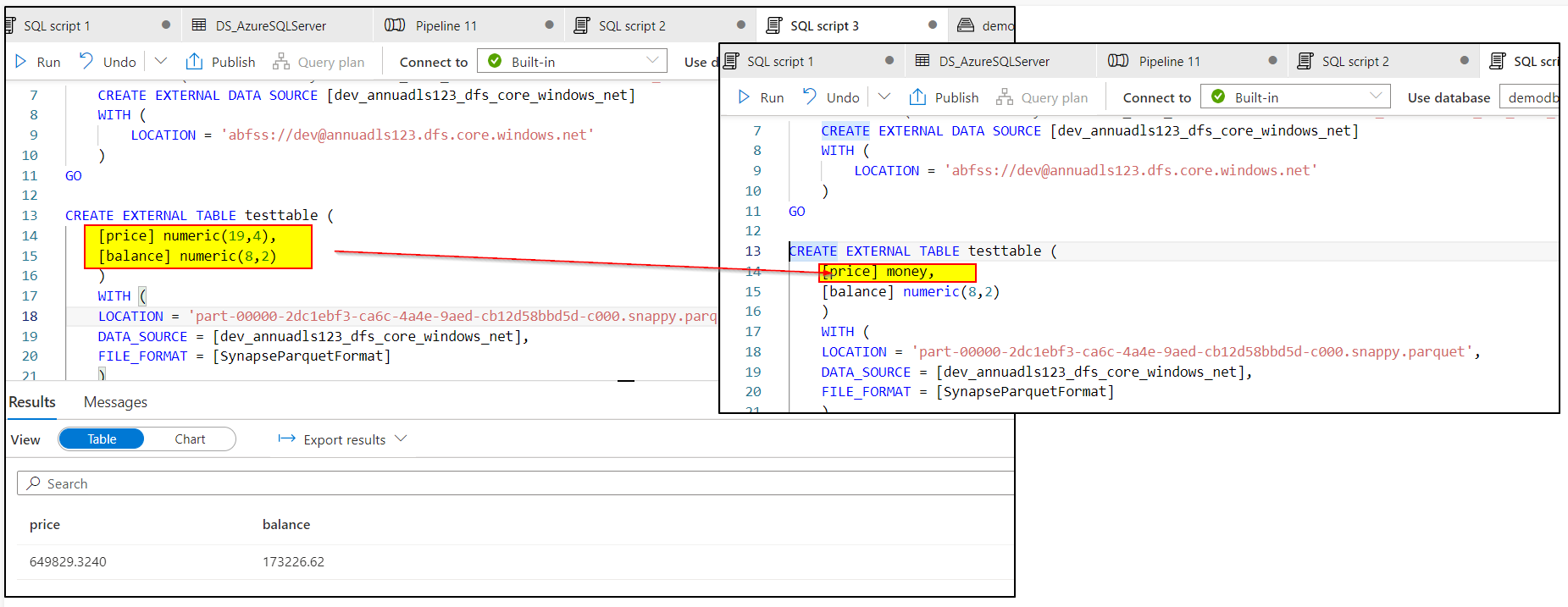

The generated sql script preserves the numeric datatype but 'money' is replaced by numeric , kindly change it back to money explicitly

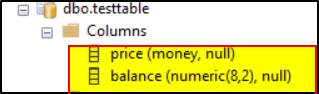

5. In the external table , check the table schema, it should be reflecting same datatype as expected

Hope this will help. Please let us know if any further queries.

------------------------------

- Please don't forget to click on

or upvote

or upvote  button whenever the information provided helps you.

button whenever the information provided helps you.

Original posters help the community find answers faster by identifying the correct answer. Here is how - Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and help shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators