Hello @gajanan choure,

Thanks for the question and for using the MS Q&A platform.

As we understand it, the ask here is why the schema of the table on the Synapse side is getting changed. Please do let us know if that is not accurate.

I tried the steps below and I do not see this happening.

This is what I tried:

- Created a Delta table in Azure Databricks (ADB)

%sql
CREATE TABLE student101 (name VARCHAR(64), address VARCHAR(64), student_id tinyint)
USING DELTA PARTITIONED BY (student_id);

- Inserted data

%sql
INSERT INTO student101 VALUES
('Amy Smith', '123 Park Ave, San Jose', 11);

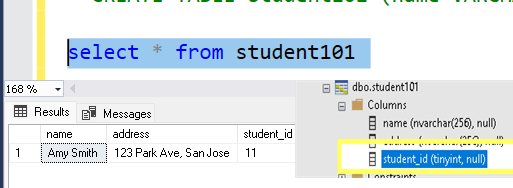

- Created a table on the Synapse side

CREATE TABLE student101 (name VARCHAR(64), address VARCHAR(64), student_id tinyint )

Please note that student_id is tinyint here as well.

- Created a DataFrame by reading the Delta table

new_df = spark.sql("select * from student101")
new_df.show()

- Set the storage account keys used for the staging (tempDir) location

sc._jsc.hadoopConfiguration().set(
  "fs.azure.account.key.XXXX.blob.core.windows.net",
  "YYY")

spark.conf.set(
  "fs.azure.account.key.XXX.blob.core.windows.net",
  "ZZZ")

- Wrote the DataFrame to the Azure Synapse table

new_df.write \
  .mode("overwrite") \
  .format("com.databricks.spark.sqldw") \
  .option("url", "jdbc:sqlserver://analyticsshared.database.windows.net:1433;database=synapse;user=CCCC@X;password=XXXXX;encrypt=true;trustServerCertificate=false;hostNameInCertificate=*.database.windows.net;loginTimeout=30;") \
  .option("tempDir", "wasbs://data@xxxxxxxxxxxxx.blob.core.windows.net/input") \
  .option("forwardSparkAzureStorageCredentials", "true") \
  .option("dbTable", "student101") \
  .save()
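If you want to confirm on your side whether the column types differ after the write, one option is to compare the DataFrame's column types before the write with the types you get when reading the table back from Synapse. Below is a minimal sketch of such a comparison, not something the connector provides. The helper name `diff_schemas` and the sample values are made up for illustration; in a notebook you would pass `new_df.dtypes` and the `dtypes` of the DataFrame read back from Synapse instead.

```python
from typing import Dict, List, Optional, Tuple

# Same shape as PySpark's DataFrame.dtypes: a list of (column, type) pairs.
Schema = List[Tuple[str, str]]


def diff_schemas(
    expected: Schema, actual: Schema
) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Return {column: (expected_type, actual_type)} for every mismatch.

    Names and types are compared case-insensitively. A column that is
    missing on one side is reported with None for that side.
    """
    exp = {name.lower(): dtype.lower() for name, dtype in expected}
    act = {name.lower(): dtype.lower() for name, dtype in actual}
    diffs = {}
    for col in sorted(set(exp) | set(act)):
        if exp.get(col) != act.get(col):
            diffs[col] = (exp.get(col), act.get(col))
    return diffs


# Hypothetical sample values, only to show the output format.
# In a notebook: before = new_df.dtypes, after = <DataFrame read back>.dtypes
before = [("name", "string"), ("address", "string"), ("student_id", "tinyint")]
after = [("name", "string"), ("address", "string"), ("student_id", "smallint")]

print(diff_schemas(before, after))   # {'student_id': ('tinyint', 'smallint')}
print(diff_schemas(before, before))  # {}
```

An empty result means Spark sees the same column types on both sides. Anything else tells you exactly which column changed, which would help us narrow down the issue.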

Please do let me know if you have any queries.

Thanks

Himanshu

- Please don't forget to accept the answer or upvote whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer.

- Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and help shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators