Hi @pankaj chaturvedi ,

Thank you for using the Microsoft Q&A platform and for posting your query.

As I understand your query, you are trying to find the latest modified file in each of two folders: TEST1 and TEST2. Please let me know if that's not the requirement.

The solution you are following works well when you want to copy the latest file from a single folder, but not from multiple folders. For your requirement, we need to introduce a parent pipeline that loops through the two folders and executes your existing pipeline (the child pipeline) once for each folder.

There are a couple of changes you need to make in the child pipeline as well. I will point them out below.

For the parent pipeline (and the related child pipeline changes), follow these steps:

- Use Get metadata activity and use

Child Itemsin the field list . In the dataset, don't provide any file path. Keep it blank so that it would fetch all the folder names present in the storage account. - Use Filter activity and provide

@activity('Get Metadata1').output.childItemsin the Items and@or(equals(item().name,'TEST1'),equals(item().name,'TEST2'))in the condition . (Note: In my case , in below gif I am checking for folders having 'adls' in the foldername) - Use ForEach activity and provide

@activity('Filter1').output.valuein the Items. - Inside ForEach activity, use Execute Pipeline to invoke the child pipeline . In the child pipeline, Create a parameter called

SinkFileNameand in settings tab of execute pipeline activity of parent pipeline , provide the value for the parameter as@item().name - In the child pipeline, in the last copy activity parameterize the sink dataset by creating a parameter called

FileNameand use that in the filePath of connection tab as@concat(dataset().FileName,'.csv'). In the sink tab of copy activity, provide the value for the parameter as

@pipeline().parameters.SinkFileName - In the first Getmetadata activity of child pipeline, kindly parameterize the dataset by creating parameter called

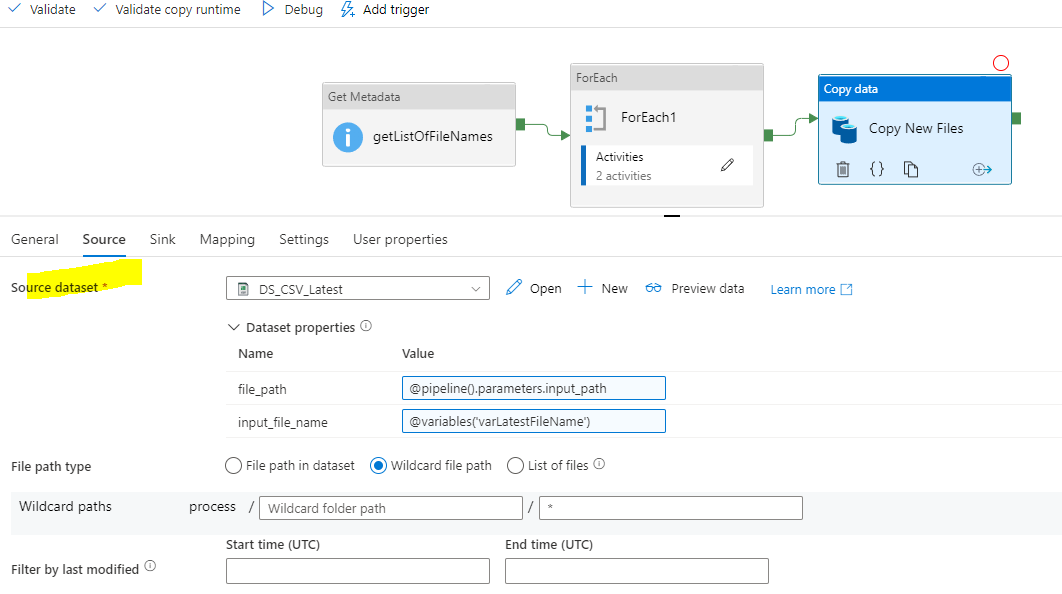

FolderNameand provide value as@pipeline().parameters.SinkFileName. - In the sink dataset of child pipeline , you are using wildcard file path which is not correct. You should be using file path in dataset .
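The parameter flow in these steps can be traced with a small Python simulation, assuming the same in-memory dictionary stands in for the storage account and that the child pipeline picks the latest modified file. The function names (`get_metadata_child_items`, `filter1`, `child_pipeline`, `parent_pipeline`) are hypothetical and only mirror the activities; each comment shows the Data Factory expression that the line imitates. The key point is that the folder name passed as `SinkFileName` drives both the source folder (`FolderName`) and the sink file name (`FileName`).

```python
from datetime import datetime

# Hypothetical in-memory stand-in for the storage account:
# folder name -> {file name: last modified time}
Storage = dict[str, dict[str, datetime]]


def get_metadata_child_items(storage: Storage) -> list[dict[str, str]]:
    # Get Metadata with "Child Items" and a blank file path: one entry per folder
    return [{"name": name, "type": "Folder"} for name in storage]


def filter1(child_items: list[dict[str, str]]) -> list[dict[str, str]]:
    # Condition: @or(equals(item().name,'TEST1'),equals(item().name,'TEST2'))
    return [i for i in child_items if i["name"] == "TEST1" or i["name"] == "TEST2"]


def child_pipeline(storage: Storage, sink_file_name: str) -> tuple[str, str]:
    folder_name = sink_file_name  # FolderName = @pipeline().parameters.SinkFileName
    files = storage[folder_name]
    latest = max(files, key=files.get)  # assumed child logic: latest modified file
    file_name = sink_file_name  # FileName = @pipeline().parameters.SinkFileName
    sink_path = file_name + ".csv"  # file path: @concat(dataset().FileName,'.csv')
    return latest, sink_path


def parent_pipeline(storage: Storage) -> list[tuple[str, str]]:
    results = []
    # ForEach over @activity('Filter1').output.value
    for item in filter1(get_metadata_child_items(storage)):
        # Execute Pipeline activity: SinkFileName = @item().name
        results.append(child_pipeline(storage, item["name"]))
    return results


storage: Storage = {
    "TEST1": {"a.csv": datetime(2023, 1, 1), "b.csv": datetime(2023, 3, 1)},
    "TEST2": {"c.csv": datetime(2023, 2, 1)},
    "OTHER": {"d.csv": datetime(2023, 4, 1)},
}
print(parent_pipeline(storage))  # [('b.csv', 'TEST1.csv'), ('c.csv', 'TEST2.csv')]
```

Because the sink file name is derived from the folder name, each folder's latest file lands in its own output file rather than overwriting a single fixed path.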

Parent Pipeline:

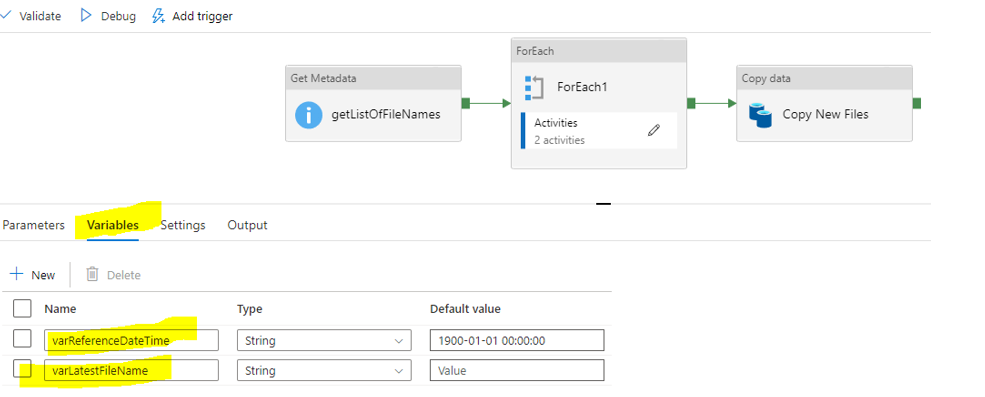

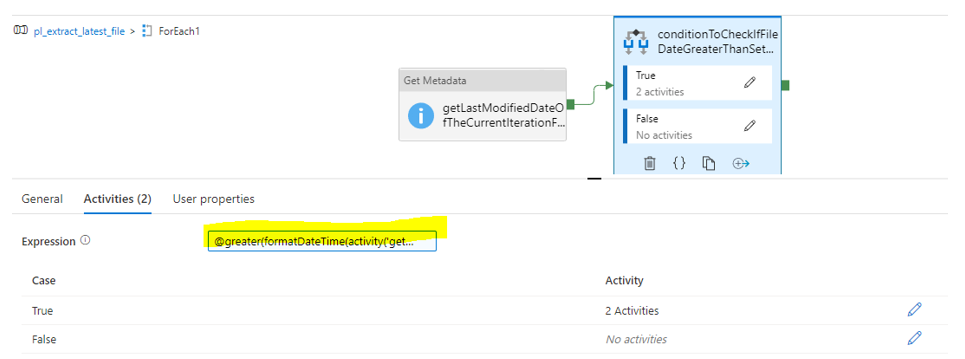

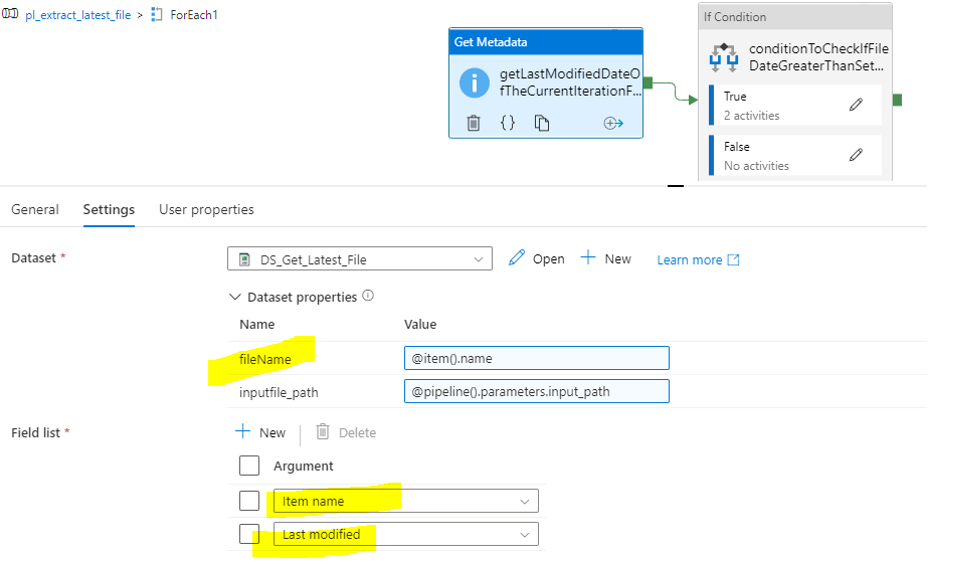

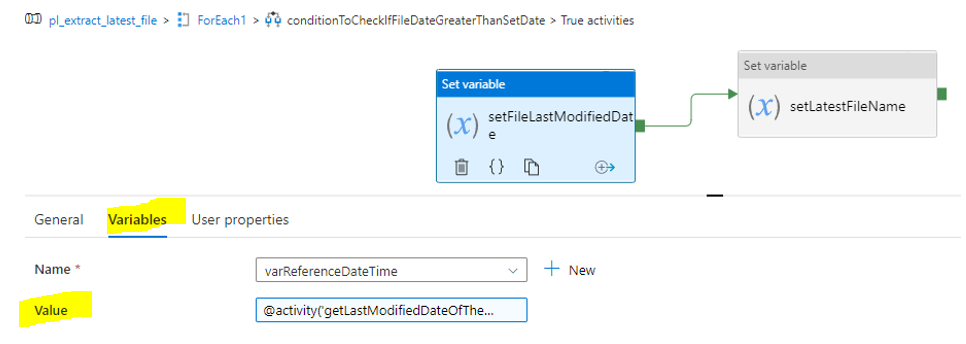

Child Pipeline:

Hope this helps. Please let us know if you have any further queries.
