Hi @Goutham Vutukuri ,

Thankyou for using Microsoft Q&A platform and thanks for posting your question here.

As I understand your requirement you want to copy complex JSON into Azure SQL table.

First of all the JSON that you have shared is an incomplete JSON , there are few missing braces at the end. Here I am pasting the valid JSON:

[

{

"sitesEnergy": {

"timeUnit": "DAY",

"unit": "Wh",

"count": 5,

"siteEnergyList": [

{

"siteId": 2248407,

"energyValues": {

"measuredBy": "METER",

"values": [

{

"date": "2022-09-01 00:00:00",

"value": 1520734.0

},

{

"date": "2022-09-02 00:00:00",

"value": 1630062.0

},

{

"date": "2022-09-03 00:00:00",

"value": 1495343.0

},

{

"date": "2022-09-04 00:00:00",

"value": 1363594.0

},

{

"date": "2022-09-05 00:00:00",

"value": 146063.0

}

]

}

},

{

"siteId": 1485192,

"energyValues": {

"measuredBy": "METER",

"values": [

{

"date": "2022-09-01 00:00:00",

"value": 265781.0

},

{

"date": "2022-09-02 00:00:00",

"value": 281640.0

},

{

"date": "2022-09-03 00:00:00",

"value": 239422.0

},

{

"date": "2022-09-04 00:00:00",

"value": 227063.0

},

{

"date": "2022-09-05 00:00:00",

"value": 45094.0

}

]

}

},

{

"siteId": 1449188,

"energyValues": {

"measuredBy": "METER",

"values": [

{

"date": "2022-09-01 00:00:00",

"value": 1410626.0

},

{

"date": "2022-09-02 00:00:00",

"value": 1387875.0

},

{

"date": "2022-09-03 00:00:00",

"value": 1349688.0

},

{

"date": "2022-09-04 00:00:00",

"value": 1206687.0

},

{

"date": "2022-09-05 00:00:00",

"value": 308375.0

}

]

}

}

]

}

}

]

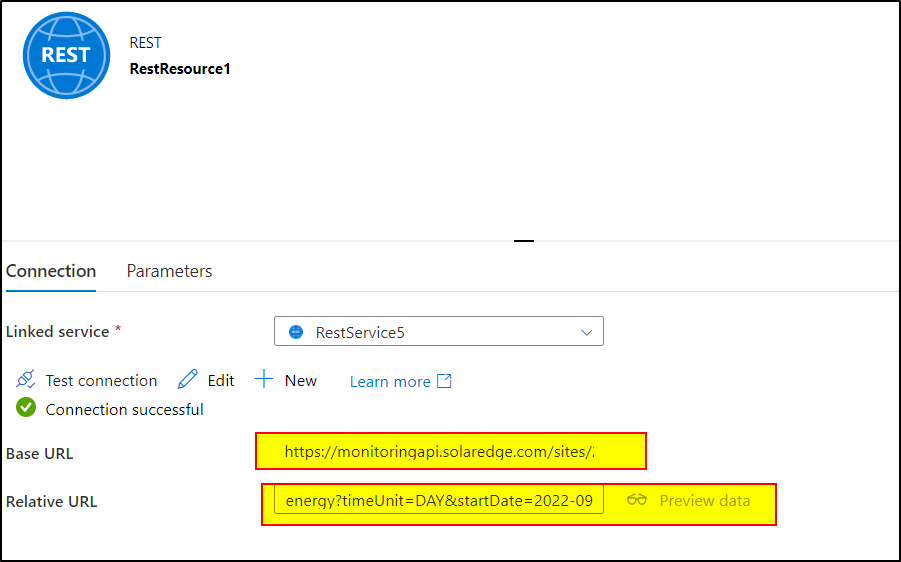

Now, your requirement can't be achieved by using Copy activity simply because it's a complex nested json which needs to be flattened before copying it to the relational database. Here are the steps you can follow :

- Create a dataset pointing to the source .json file and use the same in the source transformation . In the source settings option, select 'Array of document' in the JSON settings option.

- Use Flatten transformation to unroll by 'siteEnergyList[]' and use the same in the unroll root option. Now, Select 'timeUnit','unit','count','siteId','value[]' as the source columns .

- Use another Flatten transformation and provide 'value[]' in the unroll by option and select 'date' and 'value' as the source columns and preview the data

- Use Sink transformation and select a dataset pointing to the Azure SQL table and call the dataflow in ADF pipeline and execute it.

Check the below gif for reference:

Hope this will help. Please let us know if any further queries.

------------------------------

- Please don't forget to click on

or upvote

or upvote  button whenever the information provided helps you.

button whenever the information provided helps you.

Original posters help the community find answers faster by identifying the correct answer. Here is how - Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and help shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators