
This article helps you deploy a private link-based AKS cluster. If you want to create an AKS cluster that doesn't require a private link or tunnel, see Create an Azure Kubernetes Service (AKS) cluster with API Server VNet integration.

Overview of private clusters in AKS

In a private cluster, the control plane or API server has internal IP addresses, as defined in RFC 1918, Address Allocation for Private Internets. By using a private cluster, you can ensure that network traffic between your API server and your node pools remains only on the private network.
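To check this in practice, you can test whether the address your API server FQDN resolves to falls in one of the three RFC 1918 blocks: 10.0.0.0/8, 172.16.0.0/12, and 192.168.0.0/16. The following is a minimal POSIX shell sketch, not an AKS tool. The function name `is_rfc1918` is hypothetical, and the sketch assumes a well-formed dotted-quad IPv4 address as input.

```bash
# Hypothetical helper: returns 0 if an IPv4 address is in an RFC 1918 range.
# Assumes a well-formed dotted-quad address; no input validation is performed.
is_rfc1918() {
    _o1=${1%%.*}          # first octet
    _rest=${1#*.}
    _o2=${_rest%%.*}      # second octet
    # 10.0.0.0/8
    [ "$_o1" -eq 10 ] && return 0
    # 172.16.0.0/12 spans 172.16.x.x through 172.31.x.x
    [ "$_o1" -eq 172 ] && [ "$_o2" -ge 16 ] && [ "$_o2" -le 31 ] && return 0
    # 192.168.0.0/16
    [ "$_o1" -eq 192 ] && [ "$_o2" -eq 168 ] && return 0
    return 1
}

# Example with a placeholder address; substitute the IP your API server FQDN resolves to.
if is_rfc1918 10.224.0.4; then echo "private"; else echo "public"; fi   # prints "private"
```

Only the first two octets matter here, because each RFC 1918 block is at least a /12.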

The control plane or API server is in an AKS-managed Azure resource group, and your cluster or node pool is in your resource group. The server and the cluster or node pool can communicate with each other through the Azure Private Link service in the API server virtual network and a private endpoint exposed on the subnet of your AKS cluster.

When you create a private AKS cluster, AKS creates both private and public fully qualified domain names (FQDNs) with corresponding DNS zones by default. For detailed DNS configuration options, see Configure a private DNS zone, private DNS subzone, or custom subdomain.

Region availability

Private clusters are available in public regions, Azure Government, and Microsoft Azure operated by 21Vianet regions where AKS is supported.

Prerequisites for private AKS clusters

- The Azure CLI version 2.28.0 or higher. Run `az --version` to find the version, and run `az upgrade` to upgrade the version. If you need to install or upgrade, see Install Azure CLI.

- If using Azure Resource Manager (ARM) or the Azure REST API, the AKS API version must be 2021-05-01 or higher.

- To use a custom DNS server, configure the Azure public IP address 168.63.129.16 as the upstream DNS server on your custom DNS server, and make sure this IP address is listed as the first DNS server. For more information about the Azure IP address, see What is IP address 168.63.129.16?

- Forward the cluster's DNS zone to 168.63.129.16. You can find more information on zone names in Azure services DNS zone configuration.

- Existing AKS clusters enabled with API Server VNet integration can have private cluster mode enabled. For more information, see Enable or disable private cluster mode on an existing cluster with API Server VNet integration.
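If you script cluster creation, you can stop early when the installed Azure CLI is older than the 2.28.0 minimum. This is a minimal sketch using POSIX `awk`. The function name `version_ge` is hypothetical, and the sketch assumes dot-separated numeric version strings.

```bash
# Hypothetical helper: returns 0 if version $1 is greater than or equal to version $2.
# Compares dot-separated numeric components left to right; missing components count as 0.
version_ge() {
    awk -v have="$1" -v need="$2" 'BEGIN {
        n = split(have, h, ".")
        m = split(need, r, ".")
        len = (n > m) ? n : m
        for (i = 1; i <= len; i++) {
            hv = (i <= n) ? h[i] + 0 : 0
            rv = (i <= m) ? r[i] + 0 : 0
            if (hv > rv) exit 0
            if (hv < rv) exit 1
        }
        exit 0   # versions are equal
    }'
}

# Example with a placeholder installed version.
version_ge 2.60.0 2.28.0 && echo "Azure CLI version meets the 2.28.0 minimum"
```

If the Azure CLI is installed, `az version --query '"azure-cli"' --output tsv` should print only the CLI version. You could then run a check such as `version_ge "$(az version --query '"azure-cli"' --output tsv)" 2.28.0 || echo "Run az upgrade"`.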

Important

As of November 30, 2025, Azure Kubernetes Service (AKS) no longer supports or provides security updates for Azure Linux 2.0. The Azure Linux 2.0 node image is frozen at the 202512.06.0 release. Beginning March 31, 2026, node images will be removed, and you'll be unable to scale your node pools. Migrate to a supported Azure Linux version by upgrading your node pools to a supported Kubernetes version or migrating to osSku AzureLinux3. For more information, see [Retirement] Azure Linux 2.0 node pools on AKS.

Limitations and considerations for private AKS clusters

- You can't apply authorized IP ranges to the private API server endpoint; they only apply to the public API server.

- Azure Private Link service limitations apply to private clusters.

- Azure DevOps Microsoft-hosted agents aren't supported with private clusters. Consider using self-hosted agents.

- If you need to enable Azure Container Registry on a private AKS cluster, set up a private link for the container registry in the cluster virtual network (VNet) or set up peering between the container registry's virtual network and the private cluster's virtual network.

- Deleting or modifying the private endpoint in the customer subnet causes the cluster to stop functioning.

- Azure Private Link service is supported on Standard Azure Load Balancer only. Basic Azure Load Balancer isn't supported.

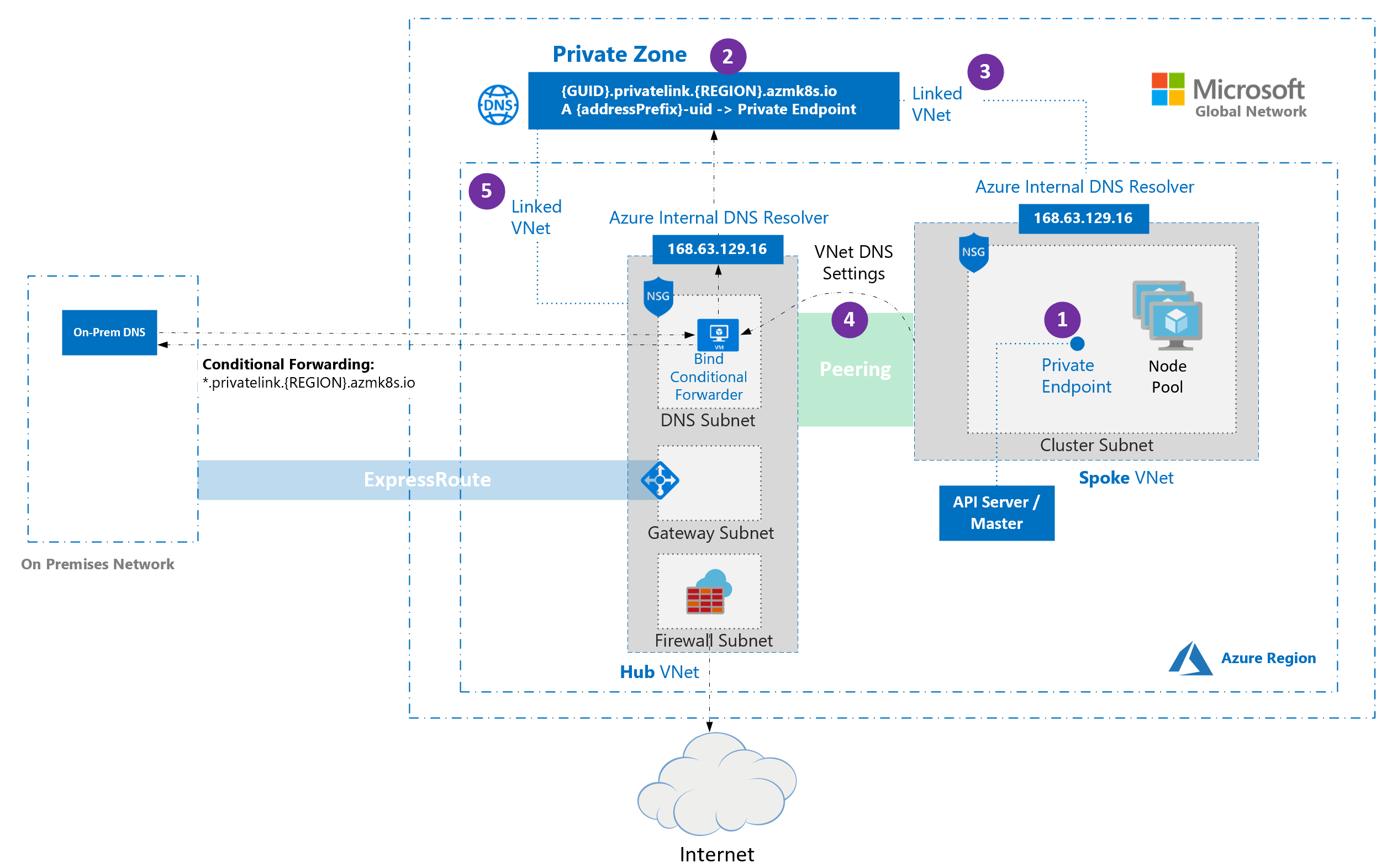

Hub and spoke with custom DNS for private AKS clusters

Hub and spoke architectures are commonly used to deploy networks in Azure. In many of these deployments, DNS settings in the spoke VNets are configured to reference a central DNS forwarder to allow for on-premises and Azure-based DNS resolution.
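Consider a central forwarder that runs BIND 9 in a VNet linked to the cluster's private DNS zone. It can use a conditional forwarder to send queries for the cluster's zone to Azure DNS (168.63.129.16), and this sketch prints one possible stanza for that. The function name `bind_forward_zone` is hypothetical, and the region in the example zone name is an assumption. Other DNS servers, such as Windows Server DNS, use different configuration.

```bash
# Hypothetical helper: prints a BIND 9 named.conf stanza that forwards
# queries for one DNS zone to Azure DNS (168.63.129.16).
# The zone name is supplied by the caller; no validation is performed.
bind_forward_zone() {
    zone=$1
    cat <<EOF
zone "$zone" {
    type forward;
    forward only;
    forwarders { 168.63.129.16; };
};
EOF
}

# Example: zone name in the privatelink.<region>.azmk8s.io format (region assumed).
bind_forward_zone "privatelink.eastus.azmk8s.io"
```

`forward only` stops BIND from falling back to recursive resolution for this zone. Without that setting, a failed forward could leak the query to public resolvers.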

Keep the following considerations in mind when deploying private AKS clusters in hub and spoke architectures with custom DNS:

When a private cluster is created, a private endpoint (1) and a private DNS zone (2) are created in the cluster-managed resource group by default. The cluster uses an `A` record in the private zone to resolve the IP of the private endpoint for communication to the API server.

The private DNS zone is linked only to the VNet that the cluster nodes are attached to (3), which means that the private endpoint can only be resolved by hosts in that linked VNet. In the default scenario, where no custom DNS is configured on the VNet, resolution works without issue: hosts point at 168.63.129.16 for DNS, which can resolve records in the private DNS zone because of the link.

If you keep the default private DNS zone behavior, AKS tries to link the zone directly to the spoke VNet that hosts the cluster even when the zone is already linked to a hub VNet.

In spoke VNets that use custom DNS servers, this action can fail if the cluster's managed identity lacks the Network Contributor role on the spoke VNet.

To prevent the failure, choose one of the following supported configurations:

- Custom private DNS zone: Provide a precreated private zone and set `privateDNSZone`/`--private-dns-zone` to its resource ID. Link that zone to the appropriate VNet (for example, the hub VNet) and set `publicDNS` to `false`/use `--disable-public-fqdn`.

- Public DNS only: Disable private zone creation by setting `privateDNSZone`/`--private-dns-zone` to `none`, and leave `publicDNS` at its default value (`true`)/don't use `--disable-public-fqdn`.

If you're using a bring your own (BYO) route table with kubenet and BYO DNS with private clusters, cluster creation fails. To make the creation succeed, associate the `RouteTable` in the node resource group with the subnet after the cluster creation fails.

Keep the following limitations in mind when using custom DNS with private AKS clusters:

- Setting `privateDNSZone`/`--private-dns-zone` to `none` and `publicDNS: false`/`--disable-public-fqdn` at the same time isn't supported.

- Conditional forwarding doesn't support subdomains.
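A deployment script can check its DNS settings before it calls Azure. The sketch below encodes the two supported hub and spoke configurations and rejects the unsupported combination listed above. It only prints `az aks create` flags and doesn't call Azure. The function names `check_dns_options` and `hub_spoke_dns_flags` are hypothetical.

```bash
# Hypothetical pre-flight check.
# $1: value you plan to pass to --private-dns-zone (system, none, or a resource ID)
# $2: "true" if you plan to pass --disable-public-fqdn, otherwise "false"
check_dns_options() {
    if [ "$1" = "none" ] && [ "$2" = "true" ]; then
        echo "unsupported: --private-dns-zone none together with --disable-public-fqdn" >&2
        return 1
    fi
    return 0
}

# Hypothetical helper: prints the az aks create DNS flags for the two supported
# hub and spoke configurations.
# $1: "custom" (precreated private zone linked to, for example, the hub VNet) or "public"
# $2: resource ID of the precreated private DNS zone (custom only)
hub_spoke_dns_flags() {
    case $1 in
        custom) printf '%s\n' "--private-dns-zone $2 --disable-public-fqdn" ;;
        public) printf '%s\n' "--private-dns-zone none" ;;
        *) echo "unknown configuration: $1" >&2; return 1 ;;
    esac
}

check_dns_options none true || echo "choose a different combination"
hub_spoke_dns_flags public   # prints "--private-dns-zone none"
```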

Create a private AKS cluster with default basic networking

Create a resource group using the `az group create` command. You can also use an existing resource group for your AKS cluster.

```bash
az group create \
    --name <private-cluster-resource-group> \
    --location <location>
```

Create a private cluster with default basic networking using the `az aks create` command with the `--enable-private-cluster` flag.

Key parameters in this command:

- `--enable-private-cluster`: Enables private cluster mode.

```bash
az aks create \
    --name <private-cluster-name> \
    --resource-group <private-cluster-resource-group> \
    --load-balancer-sku standard \
    --enable-private-cluster \
    --generate-ssh-keys
```

Create a private AKS cluster with advanced networking

Create a resource group using the `az group create` command. You can also use an existing resource group for your AKS cluster.

```bash
az group create \
    --name <private-cluster-resource-group> \
    --location <location>
```

Create a private cluster with advanced networking using the `az aks create` command.

Key parameters in this command:

- `--enable-private-cluster`: Enables private cluster mode.
- `--network-plugin azure`: Specifies the Azure CNI networking plugin.
- `--vnet-subnet-id`: The resource ID of an existing subnet in a virtual network.
- `--dns-service-ip`: An available IP address within the Kubernetes service address range to use for the cluster DNS service.
- `--service-cidr`: A CIDR notation IP range from which to assign service cluster IPs.

```bash
az aks create \
    --resource-group <private-cluster-resource-group> \
    --name <private-cluster-name> \
    --load-balancer-sku standard \
    --enable-private-cluster \
    --network-plugin azure \
    --vnet-subnet-id <subnet-id> \
    --dns-service-ip 10.2.0.10 \
    --service-cidr 10.2.0.0/24 \
    --generate-ssh-keys
```
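`--dns-service-ip` must fall within the `--service-cidr` range, so you might check the pair before running `az aks create`. This is a minimal POSIX shell sketch. The function names `ip_to_int` and `ip_in_cidr` are hypothetical. The code assumes well-formed IPv4 input with no leading zeros in any octet, because shell arithmetic would read those as octal.

```bash
# Hypothetical helper: converts a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    _ip=$1
    _a=${_ip%%.*}; _ip=${_ip#*.}
    _b=${_ip%%.*}; _ip=${_ip#*.}
    _c=${_ip%%.*}; _d=${_ip#*.}
    echo $(( ((_a * 256 + _b) * 256 + _c) * 256 + _d ))
}

# Hypothetical helper: returns 0 if IPv4 address $1 lies inside CIDR block $2.
ip_in_cidr() {
    _net=${2%/*}
    _len=${2#*/}
    _ipn=$(ip_to_int "$1")
    _netn=$(ip_to_int "$_net")
    # Number of addresses in the block; the addresses share a network prefix
    # exactly when integer division by the block size gives the same result.
    _size=$(( 1 << (32 - _len) ))
    [ $(( _ipn / _size )) -eq $(( _netn / _size )) ]
}

# Example using the values from the command above.
ip_in_cidr 10.2.0.10 10.2.0.0/24 && echo "dns-service-ip is inside service-cidr"
```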

Use custom domains with private AKS clusters

If you want to configure custom domains that can only be resolved internally, see Use custom domains.

Disable a public FQDN on a private AKS cluster

Disable a public FQDN on a new cluster

Disable a public FQDN when creating a private AKS cluster using the `az aks create` command with the `--disable-public-fqdn` flag.

```bash
az aks create \
    --name <private-cluster-name> \
    --resource-group <private-cluster-resource-group> \
    --load-balancer-sku standard \
    --enable-private-cluster \
    --assign-identity <resource-id> \
    --private-dns-zone <private-dns-zone-mode> \
    --disable-public-fqdn \
    --generate-ssh-keys
```

Disable a public FQDN on an existing cluster

Disable a public FQDN on an existing AKS cluster using the `az aks update` command with the `--disable-public-fqdn` flag.

```bash
az aks update \
    --name <private-cluster-name> \
    --resource-group <private-cluster-resource-group> \
    --disable-public-fqdn
```

Configure a private DNS zone, private DNS subzone, or custom subdomain for a private AKS cluster

You can configure private DNS settings for a private AKS cluster using the Azure CLI (with the --private-dns-zone parameter) or an Azure Resource Manager (ARM) template (with the privateDNSZone property). The following table outlines the options available for the --private-dns-zone parameter / privateDNSZone property:

| Setting | Description |
|---|---|
| `system` | The default value when configuring a private DNS zone. If you omit `--private-dns-zone` / `privateDNSZone`, AKS creates a private DNS zone in the node resource group. |
| `none` | If you set `--private-dns-zone` / `privateDNSZone` to `none`, AKS doesn't create a private DNS zone. |
| `<custom-private-dns-zone-resource-id>` | To use this parameter, you need to create a private DNS zone in the following format for Azure global cloud: `privatelink.<region>.azmk8s.io` or `<subzone>.privatelink.<region>.azmk8s.io`. You need the resource ID of the private DNS zone for future use. You also need a user-assigned identity or service principal with the Private DNS Zone Contributor and Network Contributor roles. For clusters using API Server VNet integration, a private DNS zone supports the naming format of `private.<region>.azmk8s.io` or `<subzone>.private.<region>.azmk8s.io`. Don't change or delete this resource after creating the cluster; doing so can cause performance issues and cluster upgrade failures. You can use `--fqdn-subdomain <subdomain>` with `<custom-private-dns-zone-resource-id>` only to provide subdomain capabilities to `privatelink.<region>.azmk8s.io`. If you're specifying a subzone, there's a 32-character limit for the `<subzone>` name. |
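Before you create a custom zone, you can check its name against the formats in the preceding table. This minimal sketch uses `grep -E`. The function name `valid_private_dns_zone` is hypothetical. The allowed characters are assumptions, not rules stated in this article: lowercase letters and digits for `<region>`, and lowercase letters, digits, and hyphens for `<subzone>`.

```bash
# Hypothetical helper: returns 0 if $1 matches one of the Azure global cloud formats
#   <prefix>.<region>.azmk8s.io  or  <subzone>.<prefix>.<region>.azmk8s.io
# where <prefix> is "privatelink" (default) or "private" (API Server VNet integration,
# passed as $2) and <subzone> is at most 32 characters.
# Character classes for <region> and <subzone> are assumptions.
valid_private_dns_zone() {
    _prefix=${2:-privatelink}
    printf '%s\n' "$1" |
        grep -Eq "^([a-z0-9-]{1,32}\.)?${_prefix}\.[a-z0-9]+\.azmk8s\.io\$"
}

# Example with a placeholder subzone and region.
valid_private_dns_zone team1.privatelink.eastus.azmk8s.io && echo "zone name looks valid"
```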

Keep the following considerations in mind when configuring private DNS for a private AKS cluster:

- If the private DNS zone is in a different subscription than the AKS cluster, you need to register the `Microsoft.ContainerService` Azure provider in both subscriptions.

- If your AKS cluster is configured with an Active Directory service principal, AKS doesn't support using a system-assigned managed identity with a custom private DNS zone. The cluster must use user-assigned managed identity authentication.

Create a private AKS cluster with a private DNS zone

Create a private AKS cluster with a private DNS zone using the `az aks create` command.

Key parameters in this command:

- `--enable-private-cluster`: Enables private cluster mode.
- `--private-dns-zone [system|none]`: Configures the private DNS zone for the cluster. The default value is `system`.
- `--assign-identity <resource-id>`: The resource ID of a user-assigned managed identity with the Private DNS Zone Contributor and Network Contributor roles.

```bash
az aks create \
    --name <private-cluster-name> \
    --resource-group <private-cluster-resource-group> \
    --load-balancer-sku standard \
    --enable-private-cluster \
    --assign-identity <resource-id> \
    --private-dns-zone [system|none] \
    --generate-ssh-keys
```

Create a private AKS cluster with a custom private DNS zone or private DNS subzone

Create a private AKS cluster with a custom private DNS zone or subzone using the `az aks create` command.

Key parameters in this command:

- `--enable-private-cluster`: Enables private cluster mode.
- `--private-dns-zone <custom-private-dns-zone-resource-id>|<custom-private-dns-subzone-resource-id>`: The resource ID of a precreated private DNS zone or subzone in one of the following formats for Azure global cloud: `privatelink.<region>.azmk8s.io` or `<subzone>.privatelink.<region>.azmk8s.io`.
- `--assign-identity <resource-id>`: The resource ID of a user-assigned managed identity with the Private DNS Zone Contributor and Network Contributor roles.

```bash
# The custom private DNS zone name should be in one of the following formats: "privatelink.<region>.azmk8s.io" or "<subzone>.privatelink.<region>.azmk8s.io"
az aks create \
    --name <private-cluster-name> \
    --resource-group <private-cluster-resource-group> \
    --load-balancer-sku standard \
    --enable-private-cluster \
    --assign-identity <resource-id> \
    --private-dns-zone [<custom-private-dns-zone-resource-id>|<custom-private-dns-subzone-resource-id>] \
    --generate-ssh-keys
```
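`--private-dns-zone` takes the zone's full Azure resource ID, not its name. This sketch builds that ID from its parts using the standard Azure Resource Manager ID layout for `Microsoft.Network/privateDnsZones`. The function name `private_dns_zone_id` and the example values are hypothetical placeholders.

```bash
# Hypothetical helper: assembles a private DNS zone resource ID.
# $1: subscription ID, $2: resource group of the zone, $3: zone name
private_dns_zone_id() {
    printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.Network/privateDnsZones/%s\n' \
        "$1" "$2" "$3"
}

# Example values are placeholders; substitute your own.
zone_id=$(private_dns_zone_id "00000000-0000-0000-0000-000000000000" "dns-rg" "team1.privatelink.eastus.azmk8s.io")
echo "$zone_id"
```

Alternatively, `az network private-dns zone show --resource-group <rg> --name <zone-name> --query id --output tsv` returns the ID of an existing zone, which you can pass to `--private-dns-zone`.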

Create a private AKS cluster with a custom private DNS zone and custom subdomain

Create a private AKS cluster with a custom private DNS zone and subdomain using the `az aks create` command.

Key parameters in this command:

- `--enable-private-cluster`: Enables private cluster mode.
- `--private-dns-zone <custom-private-dns-zone-resource-id>`: The resource ID of a precreated private DNS zone in the following format for Azure global cloud: `privatelink.<region>.azmk8s.io`.
- `--fqdn-subdomain <subdomain>`: The subdomain to use for the cluster FQDN within the custom private DNS zone.
- `--assign-identity <resource-id>`: The resource ID of a user-assigned managed identity with the Private DNS Zone Contributor and Network Contributor roles.

```bash
# The custom private DNS zone name should be in the following format: "privatelink.<region>.azmk8s.io"
az aks create \
    --name <private-cluster-name> \
    --resource-group <private-cluster-resource-group> \
    --load-balancer-sku standard \
    --enable-private-cluster \
    --assign-identity <resource-id> \
    --private-dns-zone <custom-private-dns-zone-resource-id> \
    --fqdn-subdomain <subdomain> \
    --generate-ssh-keys
```

Update an existing private AKS cluster from a private DNS zone to public

You can only update from `byo` (bring your own) or `system` to `none`. No other combination of update values is supported.

Warning

When you update a private cluster from byo or system to none, the agent nodes change to use a public FQDN. In an AKS cluster that uses Azure Virtual Machine Scale Sets, a node image upgrade is performed to update your nodes with the public FQDN.

Update a private cluster from `byo` or `system` to `none` using the `az aks update` command with the `--private-dns-zone` parameter set to `none`.

```bash
az aks update \
    --name <private-cluster-name> \
    --resource-group <private-cluster-resource-group> \
    --private-dns-zone none
```
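If you automate this change, a small guard can reject unsupported transitions before calling `az aks update`. This sketch encodes only the rule stated above. The function name `dns_zone_update_supported` is hypothetical, and `byo` stands for a custom (bring your own) private DNS zone.

```bash
# Hypothetical guard: succeeds only for the supported --private-dns-zone updates,
# byo -> none and system -> none. "byo" means a custom (bring your own) zone.
dns_zone_update_supported() {
    case "$1:$2" in
        byo:none|system:none) return 0 ;;
        *) return 1 ;;
    esac
}

current=system   # placeholder: the cluster's current private DNS zone mode
if dns_zone_update_supported "$current" none; then
    echo "update to none is supported"
else
    echo "unsupported update from $current" >&2
fi
```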

Configure kubectl to connect to a private AKS cluster

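To manage a Kubernetes cluster, use the Kubernetes command-line client, kubectl. kubectl is already installed if you use Azure Cloud Shell. To install kubectl locally, use the `az aks install-cli` command. Because the API server has only a private IP address, run kubectl from a machine that has network access to the cluster's virtual network.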

Configure `kubectl` to connect to your Kubernetes cluster using the `az aks get-credentials` command. This command downloads credentials and configures the Kubernetes CLI to use them.

```bash
az aks get-credentials --resource-group <private-cluster-resource-group> --name <private-cluster-name>
```

Verify the connection to your cluster using the `kubectl get` command. This command returns a list of the cluster nodes.

```bash
kubectl get nodes
```

The command returns output similar to the following example output:

```
NAME                                STATUS   ROLES   AGE    VERSION
aks-nodepool1-12345678-vmss000000   Ready    agent   3h6m   v1.15.11
aks-nodepool1-12345678-vmss000001   Ready    agent   3h6m   v1.15.11
aks-nodepool1-12345678-vmss000002   Ready    agent   3h6m   v1.15.11
```
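In a script, you might want the check to pass only when every node reports `Ready`. This minimal sketch reads `kubectl get nodes` output on standard input and uses `awk` to compare the STATUS column. The function name `all_nodes_ready` is hypothetical. The example feeds it the sample output shown above rather than a live cluster.

```bash
# Hypothetical helper: reads `kubectl get nodes` output on stdin, prints a summary,
# and returns 0 only if there is at least one node and every node's STATUS is "Ready".
all_nodes_ready() {
    awk 'NR > 1 && NF > 0 { total++; if ($2 == "Ready") ready++ }
         END {
             printf "%d/%d nodes Ready\n", ready, total
             status = (total > 0 && ready == total) ? 0 : 1
             exit status
         }'
}

# Example using the sample output from this article; prints "3/3 nodes Ready".
all_nodes_ready <<'EOF'
NAME                                STATUS   ROLES   AGE    VERSION
aks-nodepool1-12345678-vmss000000   Ready    agent   3h6m   v1.15.11
aks-nodepool1-12345678-vmss000001   Ready    agent   3h6m   v1.15.11
aks-nodepool1-12345678-vmss000002   Ready    agent   3h6m   v1.15.11
EOF
```

Against a live cluster, you would pipe the command into the function, for example `kubectl get nodes | all_nodes_ready`.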