You can interact with workspace files stored in Azure Databricks programmatically. This enables tasks such as:

- Storing small data files alongside notebooks and code.

- Writing log files to directories synced with Git.

- Importing modules using relative paths.

- Creating or modifying an environment specification file.

- Writing output from notebooks.

- Writing output from execution of libraries such as TensorBoard.

You can programmatically create, edit, rename, and delete workspace files in Databricks Runtime 11.3 LTS and above. This functionality is supported for notebooks in Databricks Runtime 16.2 and above, and serverless environment 2 and above.
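To decide in code whether the running compute supports these operations, you could compare the runtime version against the thresholds above. The following is a minimal sketch. It assumes that the DATABRICKS_RUNTIME_VERSION environment variable is set on classic clusters in a "major.minor" form such as "14.3". The helper names are hypothetical. The sketch returns False for any version string it can't parse, so it does not cover serverless environments.

```python
import os


def parse_runtime_version(version):
    """Return (major, minor) from a string such as "14.3", or None if it isn't numeric."""
    parts = version.split(".")
    try:
        return int(parts[0]), int(parts[1])
    except (IndexError, ValueError):
        return None


def supports_file_writes(version):
    """Create, edit, rename, and delete workspace files: 11.3 LTS and above."""
    parsed = parse_runtime_version(version)
    return parsed is not None and parsed >= (11, 3)


def supports_notebook_writes(version):
    """The same operations on notebooks: 16.2 and above."""
    parsed = parse_runtime_version(version)
    return parsed is not None and parsed >= (16, 2)


# Assumption: classic clusters expose the runtime version in this variable.
runtime = os.environ.get("DATABRICKS_RUNTIME_VERSION")
if runtime:
    print(supports_file_writes(runtime), supports_notebook_writes(runtime))
```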

Note

To disable writing to workspace files, set the cluster environment variable WSFS_ENABLE_WRITE_SUPPORT=false. For more information, see Environment variables.
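If code needs to know whether writes are disabled before attempting them, one option is to inspect the process environment. This sketch assumes that a cluster environment variable set this way is visible to Python on the driver through os.environ. The helper name is hypothetical, and it checks only for the exact value false shown above.

```python
import os


def workspace_writes_disabled(env=os.environ):
    """Return True when WSFS_ENABLE_WRITE_SUPPORT is set to exactly "false"."""
    return env.get("WSFS_ENABLE_WRITE_SUPPORT", "").strip() == "false"


if workspace_writes_disabled():
    print("Writing to workspace files is disabled on this cluster.")
```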

Note

In Databricks Runtime 14.0 and above, the default current working directory (CWD) for code executed locally is the directory containing the notebook or script being run. This is a change in behavior from Databricks Runtime 13.3 LTS and below. See What is the default current working directory?
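Relative paths resolve against the CWD, so the same relative path can point to different files depending on the runtime version. A quick way to check where a relative path lands is to print the CWD and resolve the path against it. The file name below is hypothetical.

```python
import os

# Directory that relative paths are resolved against.
cwd = os.getcwd()
print(cwd)

# Resolve a hypothetical relative path to the absolute path Python will actually open.
target = os.path.abspath("data/example.csv")
print(target)
```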

Read the locations of files

Use shell commands to read the locations of files, for example, in a repo or in the local filesystem.

To determine the location of files, enter the following:

%sh ls

- Files aren't in a repo: The command returns the filesystem /databricks/driver.
- Files are in a repo: The command returns a virtualized repo such as /Workspace/Repos/name@domain.com/public_repo_2/repos_file_system.
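From Python, you can get similar information without a shell magic. The sketch below lists the entries in the CWD and labels the location using the path prefixes described for the ls output. The classify_location helper and its labels are hypothetical. Note that os.listdir also returns hidden entries, which plain ls omits.

```python
import os


def classify_location(path):
    """Hypothetical helper: label a directory using the prefixes described above."""
    if path.startswith("/Workspace/Repos/"):
        return "repo"
    if path == "/databricks/driver" or path.startswith("/databricks/driver/"):
        return "driver filesystem"
    return "other"


cwd = os.getcwd()
print(cwd, classify_location(cwd))

# Roughly equivalent to `%sh ls`: names of the entries in the current directory.
for name in sorted(os.listdir(cwd)):
    print(name)
```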

Read data from workspace files

You can programmatically read small data files such as .csv or .json files from code in your notebooks. The following example uses pandas to read a file stored in a /data directory relative to the root of the project repo:

import pandas as pd

# Relative path, resolved against the current working directory
df = pd.read_csv("./data/winequality-red.csv")
df
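If pandas isn't needed, Python's standard csv module can read the same kind of small file. This sketch swaps in csv.DictReader instead of pandas. To stay self-contained, it writes a tiny hypothetical data/sample.csv into a temporary directory and reads it back. In a notebook you would pass a path relative to the CWD instead, such as "./data/sample.csv".

```python
import csv
import os
import tempfile


def read_small_csv(path):
    """Read a small CSV file into a list of dicts keyed by the header row."""
    with open(path, newline="") as f:
        return list(csv.DictReader(f))


# Self-contained demo: write a hypothetical data/sample.csv, then read it back.
with tempfile.TemporaryDirectory() as tmp:
    os.makedirs(os.path.join(tmp, "data"))
    sample = os.path.join(tmp, "data", "sample.csv")
    with open(sample, "w", newline="") as f:
        f.write("name,value\na,1\nb,2\n")
    rows = read_small_csv(sample)

print(rows)  # values are strings; csv does no type conversion
```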

You can use Spark to read data files. You must provide Spark with the fully qualified path.

- Workspace files in Git folders use the path file:/Workspace/Repos/<user-folder>/<repo-name>/path/to/file.
- Workspace files in your personal directory use the path file:/Workspace/Users/<user-folder>/path/to/file.
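The two path layouts for Spark can be assembled from their parts. The following sketch builds a fully qualified path from a user folder, an optional repo name, and a path inside that folder. The helper name and the example values are hypothetical.

```python
def workspace_file_uri(user_folder, relative_path, repo_name=None):
    """Build a fully qualified Spark path for a workspace file.

    With repo_name: file:/Workspace/Repos/<user-folder>/<repo-name>/<path>
    Without it:     file:/Workspace/Users/<user-folder>/<path>
    """
    relative_path = relative_path.lstrip("/")
    if repo_name is not None:
        return f"file:/Workspace/Repos/{user_folder}/{repo_name}/{relative_path}"
    return f"file:/Workspace/Users/{user_folder}/{relative_path}"


# Hypothetical user folder, repo, and file names.
print(workspace_file_uri("name@domain.com", "data/my_data.csv", repo_name="my_repo"))
print(workspace_file_uri("name@domain.com", "data/my_data.csv"))
```

In a notebook, you could pass the result to spark.read.format("csv").load(...).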

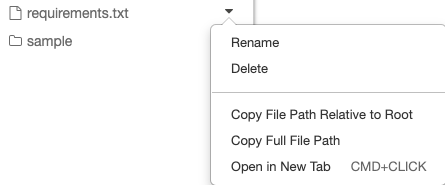

You can copy the absolute or relative path to a file from the dropdown menu next to the file:

The following example uses os.getcwd() to build the full path.

import os

# spark is the SparkSession that Databricks notebooks create automatically
spark.read.format("csv").load(f"file:{os.getcwd()}/my_data.csv")

Note

In workspaces where DBFS root and mounts are disabled, you can also use dbfs:/Workspace to access workspace files with Databricks utilities. This requires Databricks Runtime 13.3 LTS or above. See Disable access to DBFS root and mounts in your existing Azure Databricks workspace.
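When using Databricks utilities in such a workspace, a path written for Spark with the file: scheme can be rewritten to the dbfs:/Workspace form. This is a minimal string-conversion sketch. The helper name is hypothetical, and it only handles paths that begin with file:/Workspace/.

```python
def to_dbfs_workspace_path(file_uri):
    """Rewrite 'file:/Workspace/...' to 'dbfs:/Workspace/...' (hypothetical helper)."""
    prefix = "file:/Workspace/"
    if not file_uri.startswith(prefix):
        raise ValueError(f"expected a path starting with {prefix!r}: {file_uri}")
    return "dbfs:/Workspace/" + file_uri[len(prefix):]


path = to_dbfs_workspace_path("file:/Workspace/Users/name@domain.com/data/my_data.csv")
print(path)  # dbfs:/Workspace/Users/name@domain.com/data/my_data.csv

# In a notebook, with DBFS root and mounts disabled as described above:
# dbutils.fs.head(path)
```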

To learn more about files on Azure Databricks, see Work with files on Azure Databricks.

Programmatically create, update, and delete files and directories

You can programmatically manipulate workspace files in Azure Databricks, similar to how you work with files in any standard file system.

Note

In Databricks Runtime 16.2 and above, and serverless environment 2 and above, all programmatic interactions with files are also available for notebooks. For information about converting a file to a notebook, see Convert a file to a notebook.

The following examples use standard Python packages and functionality to create and manipulate files and directories.

import os

# Create a new directory
os.mkdir('dir1')

# Create a new file and write to it
with open('dir1/new_file.txt', "w") as f:
    f.write("new content")

# Append to a file
with open('dir1/new_file.txt', "a") as f:
    f.write(" continued")

# Delete a file
os.remove('dir1/new_file.txt')

# Delete a directory (it must be empty)
os.rmdir('dir1')

import shutil

# Copy a dashboard
shutil.copy("my-dashboard.lvdash.json", "my-dashboard-copy.lvdash.json")

# Move a query to a shared folder (the shared-queries/ directory must already exist)
shutil.move("test-query.dbquery", "shared-queries/")
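Renaming is listed among the supported operations but isn't shown in the examples. The sketch below runs a similar create, append, rename, and delete lifecycle with pathlib, including a rename through Path.rename. To keep it runnable anywhere, it works inside a temporary directory. In a notebook you would use paths relative to the CWD or under /Workspace instead. The directory and file names are hypothetical.

```python
import tempfile
from pathlib import Path

with tempfile.TemporaryDirectory() as tmp:
    base = Path(tmp) / "dir1"
    base.mkdir()                              # create a directory

    original = base / "new_file.txt"
    original.write_text("new content")        # create the file and write to it
    with original.open("a") as f:             # append to the file
        f.write(" continued")

    renamed = base / "renamed_file.txt"
    original.rename(renamed)                  # rename within the same directory
    content = renamed.read_text()
    original_still_exists = original.exists()

    renamed.unlink()                          # delete the file
    base.rmdir()                              # delete the now-empty directory
    dir_removed = not base.exists()

print(content)
```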