Events

Mar 17, 11 PM - Mar 21, 11 PM

Join the meetup series to build scalable AI solutions based on real-world use cases with fellow developers and experts.

Register nowThis browser is no longer supported.

Upgrade to Microsoft Edge to take advantage of the latest features, security updates, and technical support.

This article shows you how to evaluate a chat app's answers against a set of correct or ideal answers (known as ground truth). Whenever you change your chat application in a way that affects the answers, run an evaluation to compare the changes. This demo application offers tools that you can use today to make it easier to run evaluations.

By following the instructions in this article, you:

Note

This article uses one or more AI app templates as the basis for the examples and guidance in the article. AI app templates provide you with well-maintained reference implementations that are easy to deploy. They help to ensure a high-quality starting point for your AI apps.

Key components of the architecture include:

When you deploy this evaluation to Azure, the Azure OpenAI Service endpoint is created for the GPT-4 model with its own capacity. When you evaluate chat applications, it's important that the evaluator has its own Azure OpenAI resource by using GPT-4 with its own capacity.

An Azure subscription. Create one for free.

Complete the previous chat app procedure to deploy the chat app to Azure. This resource is required for the evaluations app to work. Don't complete the "Clean up resources" section of the previous procedure.

You need the following Azure resource information from that deployment, which is referred to as the chat app in this article:

azd up process.Search service during the azd up process.The Chat API URL allows the evaluations to make requests through your backend application. The Azure AI Search information allows the evaluation scripts to use the same deployment as your backend, loaded with the documents.

After you have this information collected, you shouldn't need to use the chat app development environment again. This article refers to it later, several times, to indicate how the evaluations app uses the chat app. Don't delete the chat app resources until you finish the entire procedure in this article.

A development container environment is available with all the dependencies that are required to complete this article. You can run the development container in GitHub Codespaces (in a browser) or locally by using Visual Studio Code.

Begin now with a development environment that has all the dependencies installed to complete this article. Arrange your monitor workspace so that you can see this documentation and the development environment at the same time.

This article was tested with the switzerlandnorth region for the evaluation deployment.

GitHub Codespaces runs a development container managed by GitHub with Visual Studio Code for the Web as the user interface. For the most straightforward development environment, use GitHub Codespaces so that you have the correct developer tools and dependencies preinstalled to complete this article.

Important

All GitHub accounts can use GitHub Codespaces for up to 60 hours free each month with two core instances. For more information, see GitHub Codespaces monthly included storage and core hours.

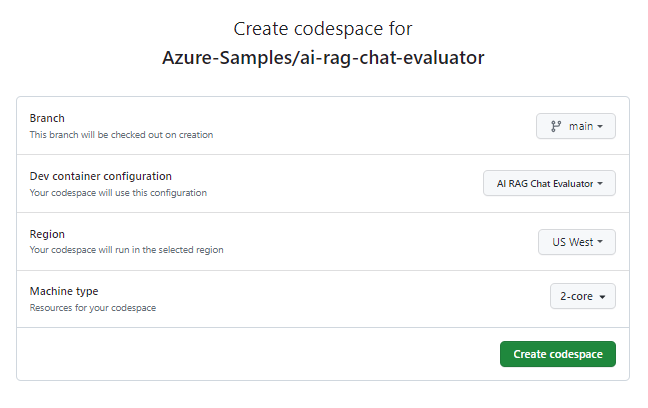

Start the process to create a new GitHub codespace on the main branch of the Azure-Samples/ai-rag-chat-evaluator GitHub repository.

To display the development environment and the documentation available at the same time, right-click the following button, and select Open link in new window.

On the Create codespace page, review the codespace configuration settings, and then select Create new codespace.

Wait for the codespace to start. This startup process can take a few minutes.

In the terminal at the bottom of the screen, sign in to Azure with the Azure Developer CLI:

azd auth login --use-device-code

Copy the code from the terminal and then paste it into a browser. Follow the instructions to authenticate with your Azure account.

Provision the required Azure resource, Azure OpenAI Service, for the evaluations app:

azd up

This AZD command doesn't deploy the evaluations app, but it does create the Azure OpenAI resource with a required GPT-4 deployment to run the evaluations in the local development environment.

The remaining tasks in this article take place in the context of this development container.

The name of the GitHub repository appears in the search bar. This visual indicator helps you distinguish the evaluations app from the chat app. This ai-rag-chat-evaluator repo is referred to as the evaluations app in this article.

Update the environment values and configuration information with the information you gathered during Prerequisites for the evaluations app.

Create a .env file based on .env.sample.

cp .env.sample .env

Run this command to get the required values for AZURE_OPENAI_EVAL_DEPLOYMENT and AZURE_OPENAI_SERVICE from your deployed resource group. Paste those values into the .env file.

azd env get-value AZURE_OPENAI_EVAL_DEPLOYMENT

azd env get-value AZURE_OPENAI_SERVICE

Add the following values from the chat app for its Azure AI Search instance to the .env file, which you gathered in the Prerequisites section.

AZURE_SEARCH_SERVICE="<service-name>"

AZURE_SEARCH_INDEX="<index-name>"

The chat app and the evaluations app both implement the Microsoft AI Chat Protocol specification, an open-source, cloud, and language-agnostic AI endpoint API contract that's used for consumption and evaluation. When your client and middle-tier endpoints adhere to this API specification, you can consistently consume and run evaluations on your AI backends.

Create a new file named my_config.json and copy the following content into it:

{

"testdata_path": "my_input/qa.jsonl",

"results_dir": "my_results/experiment<TIMESTAMP>",

"target_url": "http://localhost:50505/chat",

"target_parameters": {

"overrides": {

"top": 3,

"temperature": 0.3,

"retrieval_mode": "hybrid",

"semantic_ranker": false,

"prompt_template": "<READFILE>my_input/prompt_refined.txt",

"seed": 1

}

}

}

The evaluation script creates the my_results folder.

The overrides object contains any configuration settings that are needed for the application. Each application defines its own set of settings properties.

Use the following table to understand the meaning of the settings properties that are sent to the chat app.

| Settings property | Description |

|---|---|

semantic_ranker |

Whether to use semantic ranker, a model that reranks search results based on semantic similarity to the user's query. We disable it for this tutorial to reduce costs. |

retrieval_mode |

The retrieval mode to use. The default is hybrid. |

temperature |

The temperature setting for the model. The default is 0.3. |

top |

The number of search results to return. The default is 3. |

prompt_template |

An override of the prompt used to generate the answer based on the question and search results. |

seed |

The seed value for any calls to GPT models. Setting a seed results in more consistent results across evaluations. |

Change the target_url value to the URI value of your chat app, which you gathered in the Prerequisites section. The chat app must conform to the chat protocol. The URI has the following format: https://CHAT-APP-URL/chat. Make sure the protocol and the chat route are part of the URI.

To evaluate new answers, they must be compared to a ground truth answer, which is the ideal answer for a particular question. Generate questions and answers from documents that are stored in Azure AI Search for the chat app.

Copy the example_input folder into a new folder named my_input.

In a terminal, run the following command to generate the sample data:

python -m evaltools generate --output=my_input/qa.jsonl --persource=2 --numquestions=14

The question-and-answer pairs are generated and stored in my_input/qa.jsonl (in JSONL format) as input to the evaluator that's used in the next step. For a production evaluation, you would generate more question-and-answer pairs. More than 200 are generated for this dataset.

Note

Ony a few questions and answers are generated per source so that you can quickly complete this procedure. It isn't meant to be a production evaluation, which should have more questions and answers per source.

Edit the my_config.json configuration file properties.

| Property | New value |

|---|---|

results_dir |

my_results/experiment_refined |

prompt_template |

<READFILE>my_input/prompt_refined.txt |

The refined prompt is specific about the subject domain.

If there isn't enough information below, say you don't know. Do not generate answers that don't use the sources below. If asking a clarifying question to the user would help, ask the question.

Use clear and concise language and write in a confident yet friendly tone. In your answers, ensure the employee understands how your response connects to the information in the sources and include all citations necessary to help the employee validate the answer provided.

For tabular information, return it as an html table. Do not return markdown format. If the question is not in English, answer in the language used in the question.

Each source has a name followed by a colon and the actual information. Always include the source name for each fact you use in the response. Use square brackets to reference the source, e.g. [info1.txt]. Don't combine sources, list each source separately, e.g. [info1.txt][info2.pdf].

In a terminal, run the following command to run the evaluation:

python -m evaltools evaluate --config=my_config.json --numquestions=14

This script created a new experiment folder in my_results/ with the evaluation. The folder contains the results of the evaluation.

| File name | Description |

|---|---|

config.json |

A copy of the configuration file used for the evaluation. |

evaluate_parameters.json |

The parameters used for the evaluation. Similar to config.json but includes other metadata like time stamp. |

eval_results.jsonl |

Each question and answer, along with the GPT metrics for each question-and-answer pair. |

summary.json |

The overall results, like the average GPT metrics. |

Edit the my_config.json configuration file properties.

| Property | New value |

|---|---|

results_dir |

my_results/experiment_weak |

prompt_template |

<READFILE>my_input/prompt_weak.txt |

That weak prompt has no context about the subject domain.

You are a helpful assistant.

In a terminal, run the following command to run the evaluation:

python -m evaltools evaluate --config=my_config.json --numquestions=14

Use a prompt that allows for more creativity.

Edit the my_config.json configuration file properties.

| Existing | Property | New value |

|---|---|---|

| Existing | results_dir |

my_results/experiment_ignoresources_temp09 |

| Existing | prompt_template |

<READFILE>my_input/prompt_ignoresources.txt |

| New | temperature |

0.9 |

The default temperature is 0.7. The higher the temperature, the more creative the answers.

The ignore prompt is short.

Your job is to answer questions to the best of your ability. You will be given sources but you should IGNORE them. Be creative!

The configuration object should look like the following example, except that you replaced results_dir with your path:

{

"testdata_path": "my_input/qa.jsonl",

"results_dir": "my_results/prompt_ignoresources_temp09",

"target_url": "https://YOUR-CHAT-APP/chat",

"target_parameters": {

"overrides": {

"temperature": 0.9,

"semantic_ranker": false,

"prompt_template": "<READFILE>my_input/prompt_ignoresources.txt"

}

}

}

In a terminal, run the following command to run the evaluation:

python -m evaltools evaluate --config=my_config.json --numquestions=14

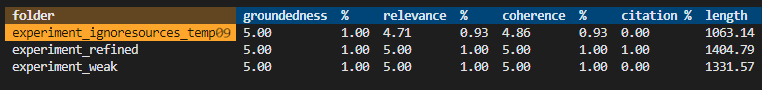

You performed three evaluations based on different prompts and app settings. The results are stored in the my_results folder. Review how the results differ based on the settings.

Use the review tool to see the results of the evaluations.

python -m evaltools summary my_results

The results look something like:

Each value is returned as a number and a percentage.

Use the following table to understand the meaning of the values.

| Value | Description |

|---|---|

| Groundedness | Checks how well the model's responses are based on factual, verifiable information. A response is considered grounded if it's factually accurate and reflects reality. |

| Relevance | Measures how closely the model's responses align with the context or the prompt. A relevant response directly addresses the user's query or statement. |

| Coherence | Checks how logically consistent the model's responses are. A coherent response maintains a logical flow and doesn't contradict itself. |

| Citation | Indicates if the answer was returned in the format requested in the prompt. |

| Length | Measures the length of the response. |

The results should indicate that all three evaluations had high relevance while the experiment_ignoresources_temp09 had the lowest relevance.

Select the folder to see the configuration for the evaluation.

Enter Ctrl + C to exit the app and return to the terminal.

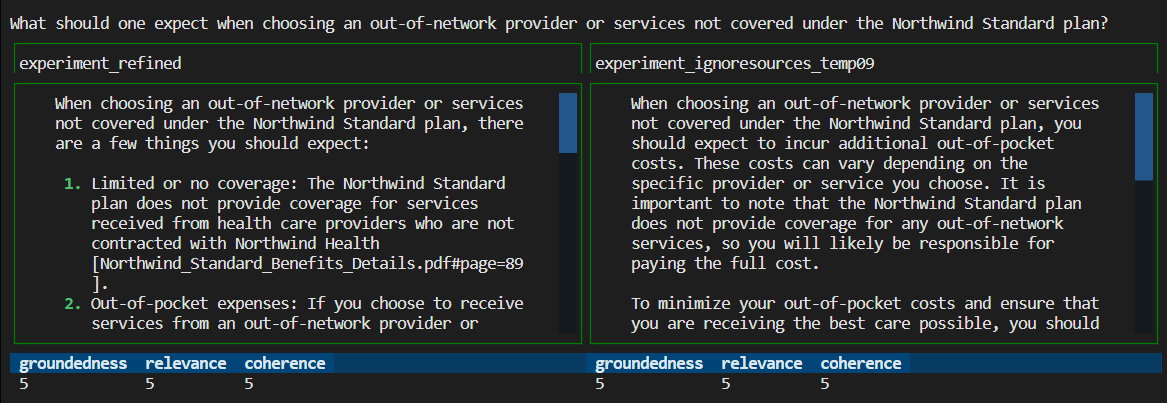

Compare the returned answers from the evaluations.

Select two of the evaluations to compare, and then use the same review tool to compare the answers.

python -m evaltools diff my_results/experiment_refined my_results/experiment_ignoresources_temp09

Review the results. Your results might vary.

Enter Ctrl + C to exit the app and return to the terminal.

my_input to tailor the answers such as subject domain, length, and other factors.my_config.json file to change the parameters such as temperature, and semantic_ranker and rerun experiments.Please answer in about 3 sentences.The following steps walk you through the process of cleaning up the resources you used.

The Azure resources created in this article are billed to your Azure subscription. If you don't expect to need these resources in the future, delete them to avoid incurring more charges.

To delete the Azure resources and remove the source code, run the following Azure Developer CLI command:

azd down --purge

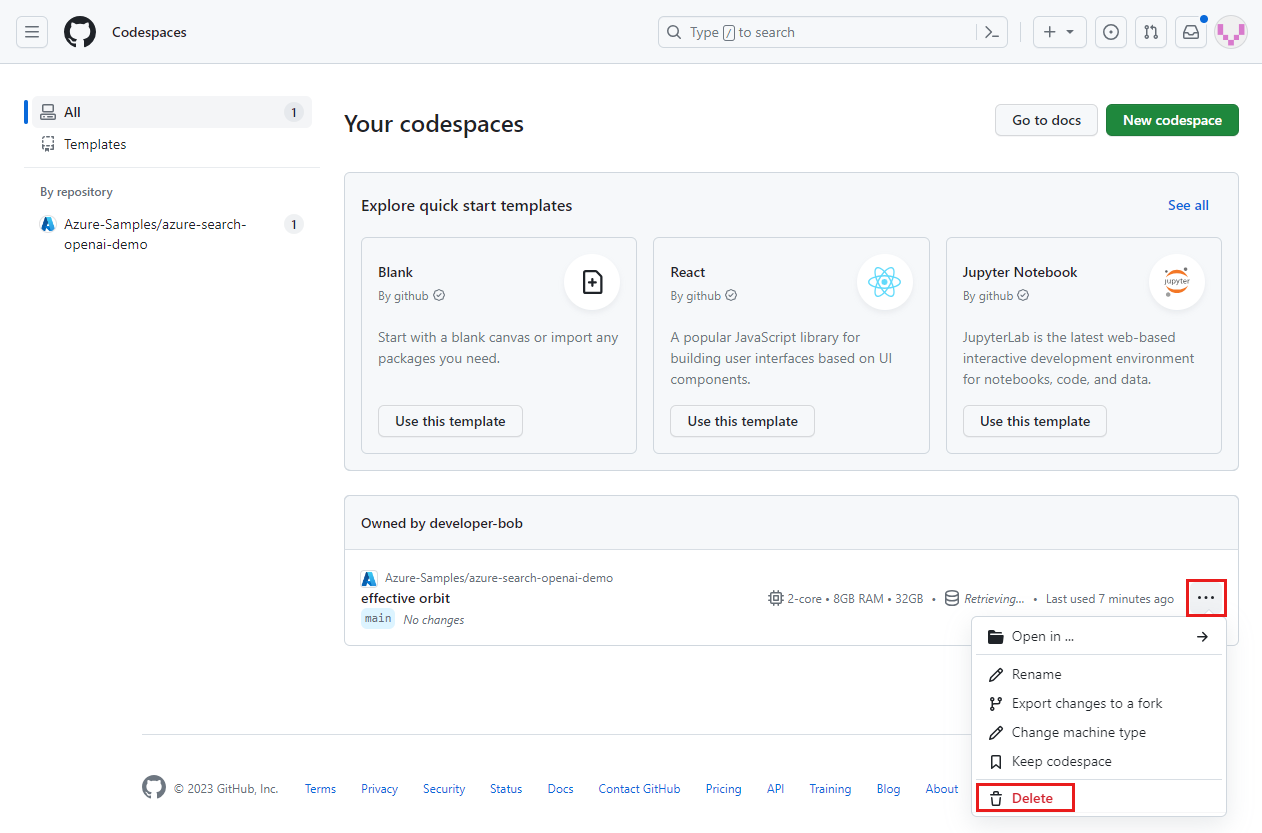

Deleting the GitHub Codespaces environment ensures that you can maximize the amount of free per-core hours entitlement that you get for your account.

Important

For more information about your GitHub account's entitlements, see GitHub Codespaces monthly included storage and core hours.

Sign in to the GitHub Codespaces dashboard.

Locate your currently running codespaces that are sourced from the Azure-Samples/ai-rag-chat-evaluator GitHub repository.

Open the context menu for the codespace, and then select Delete.

Return to the chat app article to clean up those resources.

Events

Mar 17, 11 PM - Mar 21, 11 PM

Join the meetup series to build scalable AI solutions based on real-world use cases with fellow developers and experts.

Register nowTraining

Module

Run evaluations and generate synthetic datasets - Training

Learn how to run evaluations and generate synthetic datasets with the Azure AI Evaluation SDK.

Certification

Microsoft Certified: Azure AI Engineer Associate - Certifications

Design and implement an Azure AI solution using Azure AI services, Azure AI Search, and Azure Open AI.