

In this article, you will find information regarding the newest release for Azure DevOps Server.

To learn more about installing or upgrading an Azure DevOps Server deployment, see Azure DevOps Server Requirements.

To download Azure DevOps Server products, visit the Azure DevOps Server Downloads page.

Direct upgrade to Azure DevOps Server 2022 is supported from Azure DevOps Server 2019 or Team Foundation Server 2015 or newer. If your TFS deployment is on TFS 2013 or earlier, you need to perform some interim steps before upgrading to Azure DevOps Server 2022. Please see the Install page for more information.

Azure DevOps Server 2022 Update 0.1 Patch 5 Release Date: November 14, 2023

Note

Azure DevOps Server patches are cumulative. If you didn't install Patch 3, this patch includes updates to the Azure Pipelines agent. The new version of the agent after installing Patch 5 will be 3.225.0.

| File | SHA-256 Hash |
|---|---|
| devops2022.0.1patch5.exe | DC4C7C3F9AF1CC6C16F7562DB4B2295E1318C1A180ADA079D636CCA47A6C1022 |
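To check a downloaded installer against the hash listed in the table, compare its SHA-256 digest with the published value. The Python sketch below is one way to do this (on Windows, the built-in PowerShell Get-FileHash cmdlet gives the same digest). The helper names sha256_of and verify are illustrative and are not part of any Azure DevOps tooling.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the uppercase hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as stream:
        # Read in 1 MiB chunks so large installers don't need to fit in memory.
        for chunk in iter(lambda: stream.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest().upper()


def verify(path: Path, expected: str) -> bool:
    """Compare a file's digest with a published hash, ignoring case and whitespace."""
    return sha256_of(path) == expected.strip().upper()
```

For example, verify(Path("devops2022.0.1patch5.exe"), "DC4C7C3F9AF1CC6C16F7562DB4B2295E1318C1A180ADA079D636CCA47A6C1022") returns True only when the downloaded file matches the published hash.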

We have released a patch for Azure DevOps Server 2022 Update 0.1 that includes fixes for the following.

- Extended the PowerShell tasks' allowed list of characters for the Enable shell tasks arguments parameter validation.

- Fixed an issue that caused service connection edits to persist after the Cancel button was selected.

Azure DevOps Server 2022 Update 0.1 Patch 4 Release Date: October 10, 2023

Note

Azure DevOps Server patches are cumulative. If you didn't install Patch 3, this patch includes updates to the Azure Pipelines agent. The new version of the agent after installing Patch 4 will be 3.225.0.

We have released a patch for Azure DevOps Server 2022 Update 0.1 that includes fixes for the following.

- Upgraded the pipeline execution model to fix a bug that caused pipelines to get stuck.

- Fixed a bug where the "Analysis Owner" identity showed as an Inactive Identity on machines upgraded with a patch.

- The build cleanup job contains many tasks, each of which deletes an artifact for a build. If any of these tasks failed, none of the subsequent tasks ran. We changed this behavior to ignore task failures and clean up as many artifacts as we can.

Azure DevOps Server 2022 Update 0.1 Patch 3 Release Date: September 12, 2023

Note

This patch includes updates to the Azure Pipelines agent. The new version of the agent after installing Patch 3 will be 3.225.0.

We have released a patch for Azure DevOps Server 2022 Update 0.1 that includes fixes for the following.

- CVE-2023-33136: Azure DevOps Server Remote Code Execution Vulnerability.

- CVE-2023-38155: Azure DevOps Server and Team Foundation Server Elevation of Privilege Vulnerability.

Azure DevOps Server 2022 Update 0.1 Patch 2 Release Date: August 8, 2023

We have released a patch for Azure DevOps Server 2022 Update 0.1 that includes fixes for the following.

- CVE-2023-36869: Azure DevOps Server Spoofing Vulnerability.

- Fixed a bug in SOAP calls where an ArithmeticException could be raised for large metadata XML responses.

- Implemented changes to the service connections editor so that endpoint state flushes on component dismiss.

- Addressed an issue with relative links not working in Markdown files.

- Fixed a performance issue where the application tier took longer than normal to start up when a large number of tags were defined.

- Addressed TF400367 errors on the Agent Pools page.

- Fixed a bug where the Analysis Owner identity showed as an Inactive Identity.

- Fixed an infinite loop bug in CronScheduleJobExtension.

Azure DevOps Server 2022 Update 0.1 Patch 1 Release Date: June 13, 2023

We have released a patch for Azure DevOps Server 2022 Update 0.1 that includes fixes for the following.

- CVE-2023-21565: Azure DevOps Server Spoofing Vulnerability.

- CVE-2023-21569: Azure DevOps Server Spoofing Vulnerability.

- Fixed a bug with the service connections editor. Draft endpoint state now flushes on component dismiss.

- Fixed a bug where detaching or attaching a collection failed with the following error: 'TF246018: The database operation exceeded the timeout limit and has been cancelled.'

Azure DevOps Server 2022 Update 0.1 Release Date: May 9, 2023

Azure DevOps Server 2022.0.1 is a rollup of bug fixes. It includes all the fixes in the Azure DevOps Server 2022.0.1 RC previously released. You can directly install Azure DevOps Server 2022.0.1 or upgrade from Azure DevOps Server 2022 or Team Foundation Server 2015 or newer.

Azure DevOps Server 2022 Update 0.1 RC Release Date: April 11, 2023

Azure DevOps Server 2022.0.1 RC is a rollup of bug fixes. It includes all fixes in the Azure DevOps Server 2022 patches previously released. You can directly install Azure DevOps Server 2022.0.1 or upgrade from Azure DevOps Server 2022 or Team Foundation Server 2015 or newer.

This release includes fixes for the following bugs:

- Upgraded Git Virtual File System (GVFS) to v2.39.1.1-micorosoft.2 to address a security vulnerability.

- Test data was not being deleted, causing the database to grow. With this fix, we updated build retention to prevent creating new orphan test data.

- Updated the AnalyticCleanupJob: the job status previously showed Stopped and now reports Succeeded.

- Fixed “tfx extension publish” command failing with “The given key was not present in the dictionary” error.

- Implemented a workaround to address an error while accessing the Team Calendar extension.

- CVE-2023-21564: Azure DevOps Server Cross-Site Scripting Vulnerability

- CVE-2023-21553: Azure DevOps Server Remote Code Execution Vulnerability

- Updated MSBuild and VSBuild tasks to support Visual Studio 2022.

- Updated the methodology for loading reauthentication to prevent an XSS attack vector.

- Fixed an issue where Azure DevOps Server 2022 Proxy reported the following error: VS800069: This service is only available in on-premises Azure DevOps.

- Fixed a shelvesets accessibility issue in the web UI.

- Addressed an issue that required restarting the tfsjobagent service and the Azure DevOps Server application pool after updating SMTP-related settings in the Azure DevOps Server Management Console.

- Fixed an issue where notifications were not sent seven days before a personal access token (PAT) expired.

Azure DevOps Server 2022 Patch 4 Release Date: June 13, 2023

We have released a patch for Azure DevOps Server 2022 that includes fixes for the following.

- CVE-2023-21565: Azure DevOps Server Spoofing Vulnerability.

- CVE-2023-21569: Azure DevOps Server Spoofing Vulnerability.

- Fixed a bug with the service connections editor. Draft endpoint state now flushes on component dismiss.

- Fixed a bug where detaching or attaching a collection failed with the following error: 'TF246018: The database operation exceeded the timeout limit and has been cancelled.'

Azure DevOps Server 2022 Patch 3 Release Date: March 21, 2023

We have released a patch (19.205.33506.1) for Azure DevOps Server 2022 that includes fixes for the following.

- Addressed an issue that required restarting the tfsjobagent service and the Azure DevOps Server application pool after updating SMTP-related settings in the Azure DevOps Server Management Console.

- Endpoint state is now copied to the Service Endpoint edit panel instead of being passed by reference.

- Previously, when editing service connections, the edits persisted in the UI after selecting the Cancel button. This patch fixes that issue.

- Fixed an issue in the Notification SDK for when a team has notification delivery set to Do not deliver. In this scenario, if the notification subscription is configured with the Team preference delivery option, team members won’t receive the notifications, and there is no need to expand the identities under the team further to check the members’ preferences.

Azure DevOps Server 2022 Patch 2 Release Date: February 14, 2023

We have released a patch for Azure DevOps Server 2022 that includes fixes for the following.

- CVE-2023-21564: Azure DevOps Server Cross-Site Scripting Vulnerability

- Updated MSBuild and VSBuild tasks to support Visual Studio 2022.

- Updated the methodology for loading reauthentication to prevent a possible XSS attack vector.

- Fixed an issue where Azure DevOps Server 2022 Proxy reported the following error: VS800069: This service is only available in on-premises Azure DevOps.

Azure DevOps Server 2022 Patch 1 Release Date: January 24, 2023

We have released a patch for Azure DevOps Server 2022 that includes fixes for the following.

- Test data was not being deleted, causing the database to grow. With this fix, we updated build retention to prevent creating new orphan test data.

- Updated the AnalyticCleanupJob: the job status previously showed Stopped and now reports Succeeded.

- Fixed "tfx extension publish" command failing with "The given key was not present in the dictionary" error.

- Implemented a workaround to address an error while accessing the Team Calendar extension.

Azure DevOps Server 2022 Release Date: December 6, 2022

Azure DevOps Server 2022 is a rollup of bug fixes. It includes all features in the Azure DevOps Server 2022 RC2 and RC1 previously released.

Azure DevOps Server 2022 RC2 Release Date: October 25, 2022

Azure DevOps Server 2022 RC2 is a rollup of bug fixes. It includes all features in the Azure DevOps Server 2022 RC1 previously released.

Note

New SSH RSA Algorithms Enabled

RSA public key support has been improved to support SHA2 public key types in addition to the SHA1 SSH-RSA we previously supported.

Supported public key types now include:

- SSH-RSA

- RSA-SHA2-256

- RSA-SHA2-512

Action Required

If you implemented the workaround to enable SSH-RSA by explicitly specifying it in your .ssh/config file, you will need to either remove the PubkeyAcceptedTypes line or modify it to use RSA-SHA2-256, RSA-SHA2-512, or both. If you are still prompted for your password and GIT_SSH_COMMAND="ssh -v" git fetch shows no mutual signature algorithm, you can find details about what to do in the documentation here.

Elliptic curve key support has not been added yet and remains a highly requested feature on our backlog.

Azure DevOps Server 2022 RC1 Release Date: August 9, 2022

Summary of What's New in Azure DevOps Server 2022

Important

The Warehouse and Analysis Service was deprecated in the previous version of Azure DevOps Server (2020). In Azure DevOps Server 2022 the Warehouse and Analysis Service has been removed from the product. Analytics now provides the in-product reporting experience.

Azure DevOps Server 2022 introduces many new features. Some of the highlights include:

- Delivery Plans

- Display correct persona on commit links

- New controls for environment variables in pipelines

- Generate unrestricted token for fork builds

- New TFVC pages

- Group By Tags available in chart widgets

You can also jump to individual sections to see all the new features for each service:

Boards

Delivery Plans

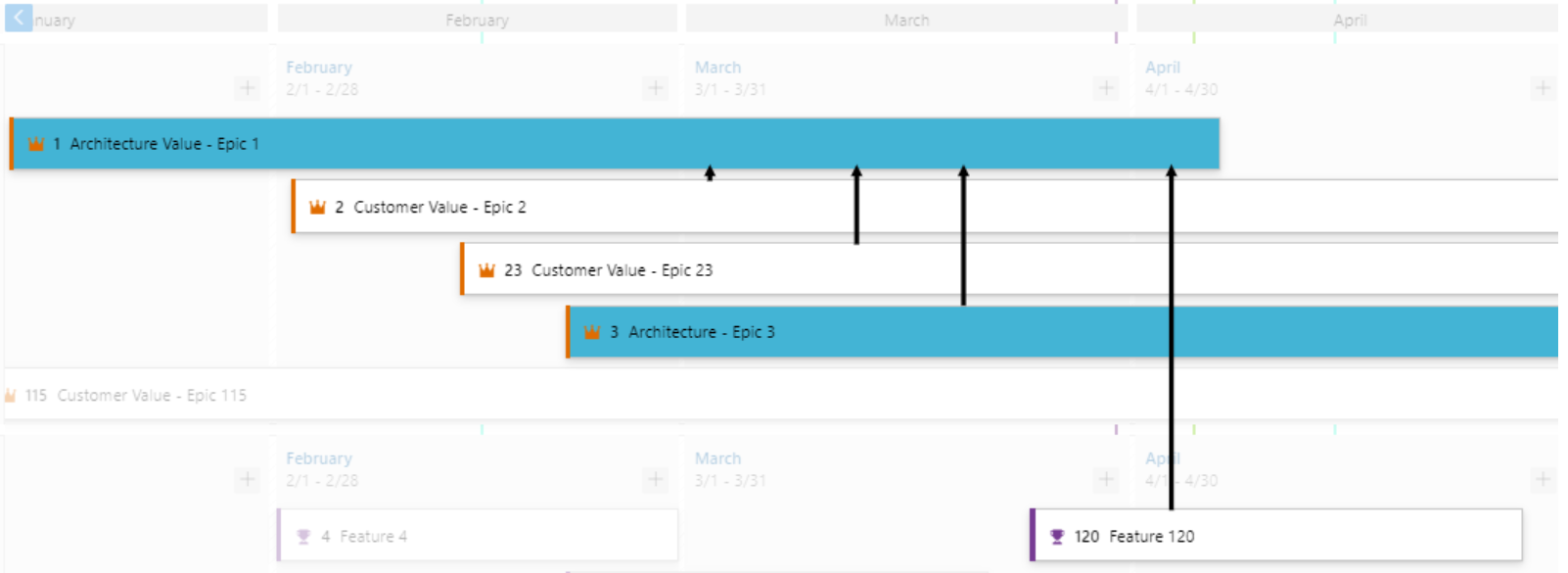

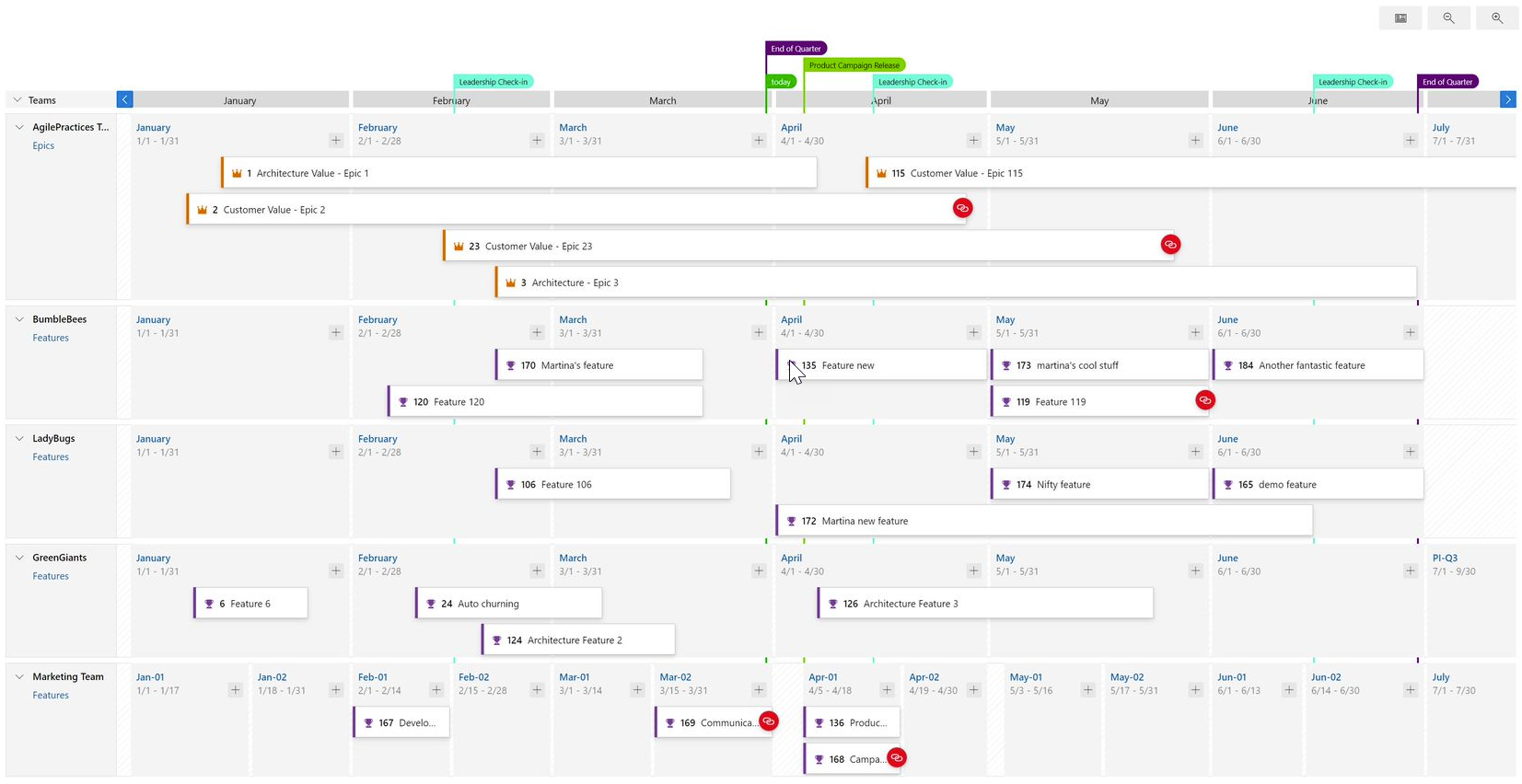

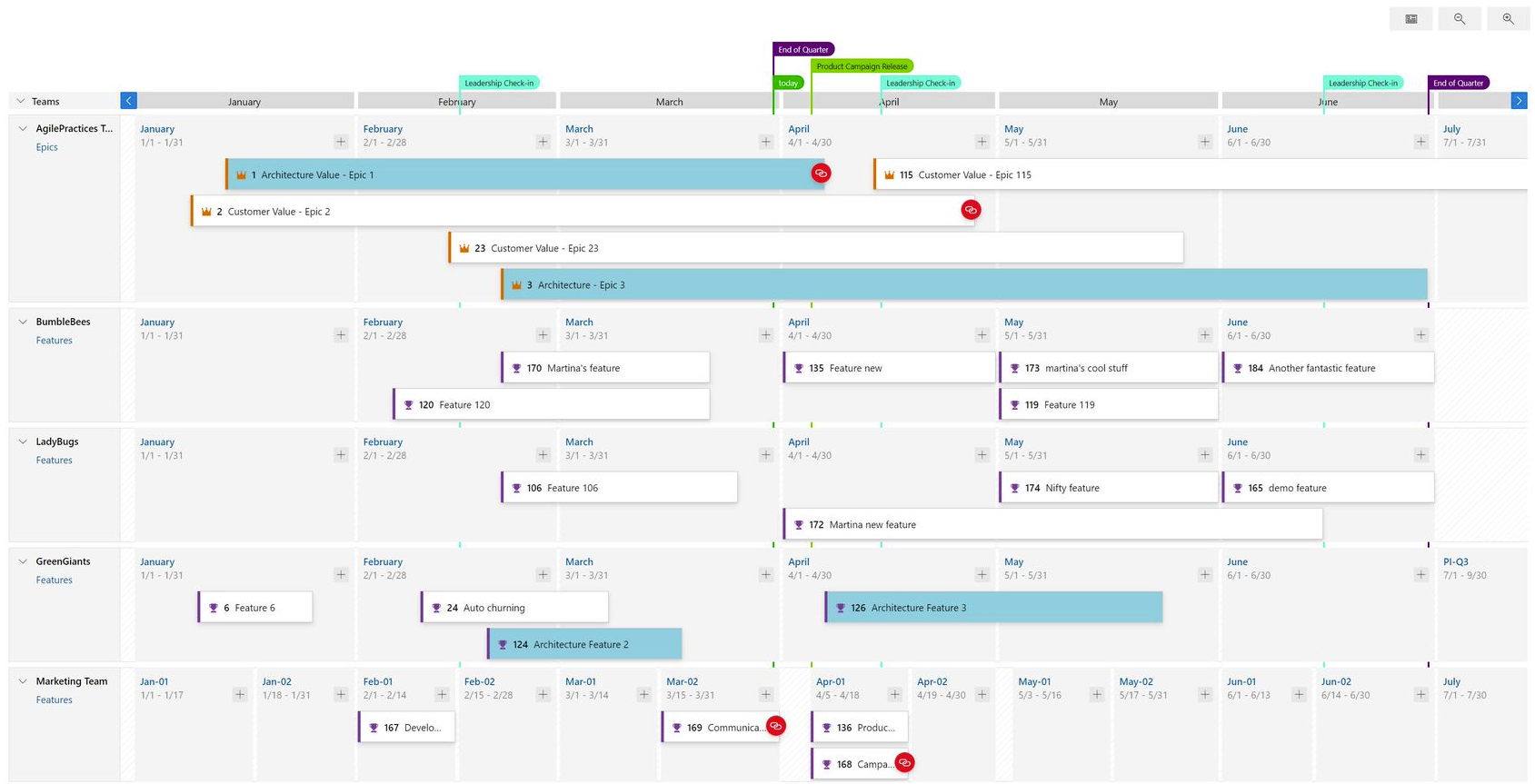

We are excited to announce that Delivery Plans is now included in Azure DevOps Server. Delivery Plans delivers on three key scenarios:

- A timeline view of the plan

- Progress of the work

- Dependency Tracking

Below are the main features. Filtering, Markers and Field Criteria are also part of Delivery Plans.

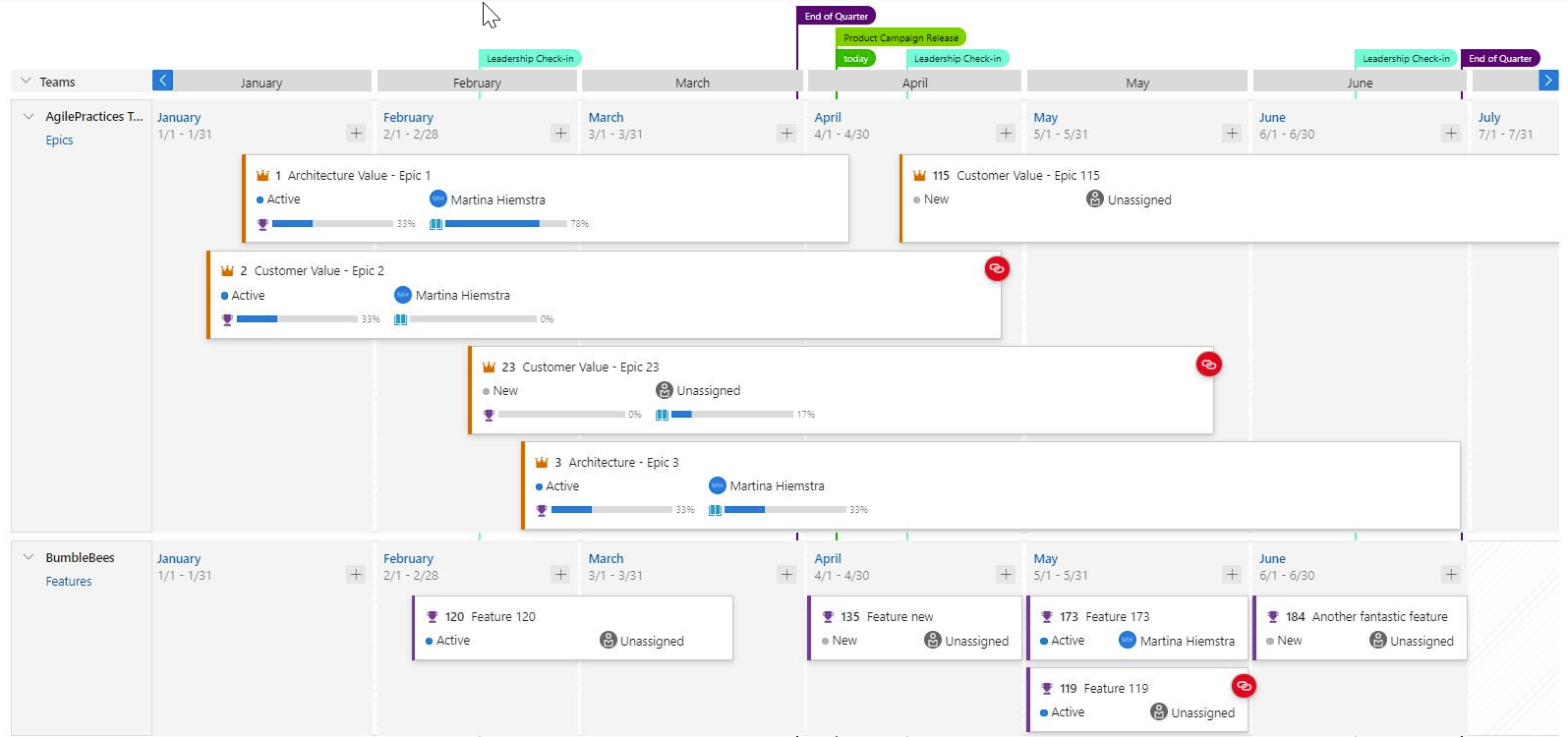

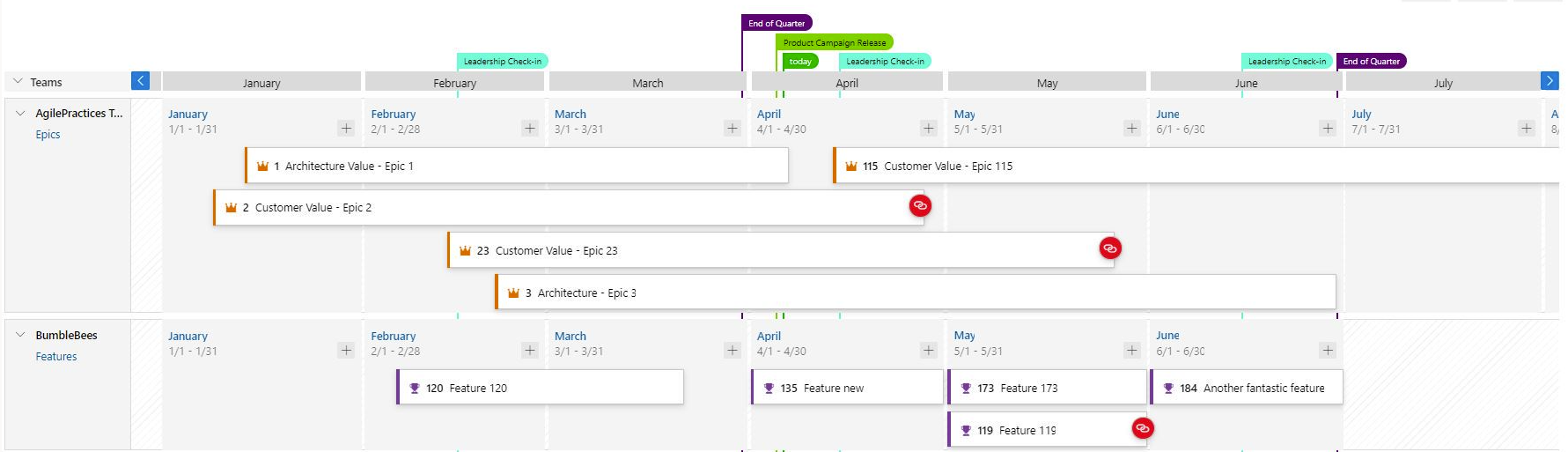


Delivery Plans 2.0 enables viewing all the work items in your plan on a timeline, using start and target dates or iteration dates. The order of precedence is start and target dates, followed by iteration dates. This lets you add portfolio-level work items, like Epics, which often are not assigned to an iteration.
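The order of precedence described above can be expressed as a small function. This is a minimal sketch assuming that start and target dates are used only when both are set; the WorkItem fields and the timeline_span name are hypothetical and do not mirror the Azure Boards data model.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional, Tuple


@dataclass
class WorkItem:
    # Hypothetical fields for illustration only.
    title: str
    start_date: Optional[date] = None
    target_date: Optional[date] = None
    iteration_start: Optional[date] = None
    iteration_end: Optional[date] = None


def timeline_span(item: WorkItem) -> Optional[Tuple[date, date]]:
    """Pick the dates used to place a card on the plan timeline (assumed rule)."""
    # Start and target dates take precedence when both are set.
    if item.start_date is not None and item.target_date is not None:
        return item.start_date, item.target_date
    # Otherwise fall back to the dates of the item's iteration.
    if item.iteration_start is not None and item.iteration_end is not None:
        return item.iteration_start, item.iteration_end
    # No usable dates: the item cannot be placed on the timeline.
    return None
```

Under this rule, an Epic with start and target dates but no iteration still appears on the timeline, which is the portfolio-level scenario the paragraph describes.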


There are two main views the condensed view and expanded view. You can also zoom in and out of the plan by clicking on the magnifying glass in the right-hand side of the plan.

Condensed View

The condensed view shows all work item cards collapsed, meaning that not all card information is shown. This view is useful for an overall view of the work in the plan. To collapse the card fields, click on the card icon next to the magnifying glass icons in the right-hand side of the plan.

Here’s an example of a plan toggling between the condensed and expanded views.

Expanded View

The expanded view shows the progress of a work item by counting the number of child and linked items and showing the percentage complete. Currently progress is determined by work item count.
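Because progress is determined by work item count, the percentage is the share of child and linked items that are complete. The sketch below assumes a hypothetical set of "complete" state names (Done and Closed); the states that actually count as complete depend on your process configuration.

```python
from typing import FrozenSet, Iterable

# Hypothetical states treated as complete; your process may use others.
DEFAULT_DONE_STATES: FrozenSet[str] = frozenset({"Done", "Closed"})


def percent_complete(
    child_states: Iterable[str],
    done_states: FrozenSet[str] = DEFAULT_DONE_STATES,
) -> int:
    """Return the percentage of child/linked items that are complete, by count."""
    states = list(child_states)
    if not states:
        return 0  # nothing to count, so no progress to report
    done = sum(1 for state in states if state in done_states)
    return round(100 * done / len(states))
```

For example, a Feature with four children in the states Done, Active, Closed, and New would show 50% complete. Counting items rather than effort means a small task and a large one move the bar by the same amount.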

Here is an example of a plan using an expanded view. Note the progress bars and percentage complete.

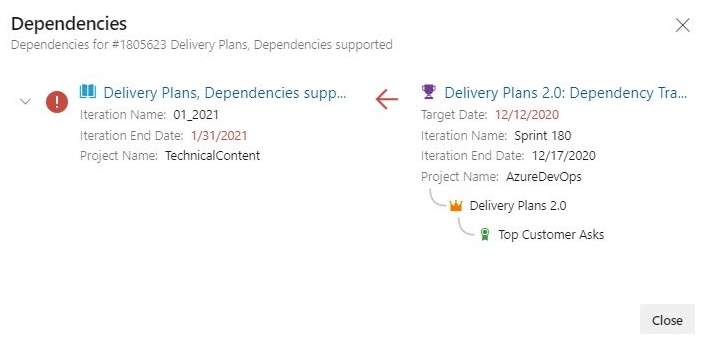

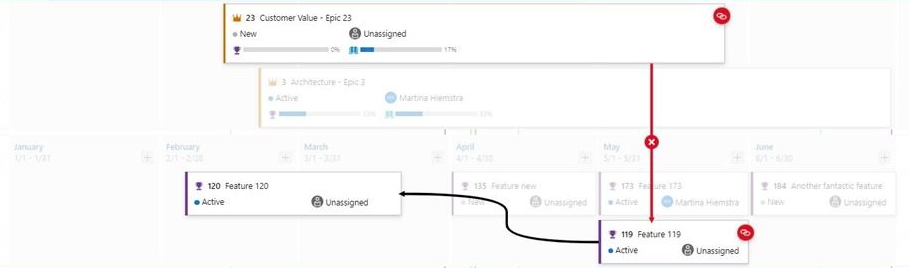

Dependency Tracking

Dependency tracking is based on predecessor and successor links being defined in work items. If those links are not defined, then no dependency lines will be displayed. When there is a dependency issue with a work item, the dependency link icon is colored red.

Viewing Dependencies

Specific dependencies are viewed through the dependency panel which shows all the dependencies for that work item, including the direction. A red exclamation mark indicates a dependency problem. To bring up the panel simply click on the dependency link icon in the upper right corner of the card. Here are examples of dependencies.

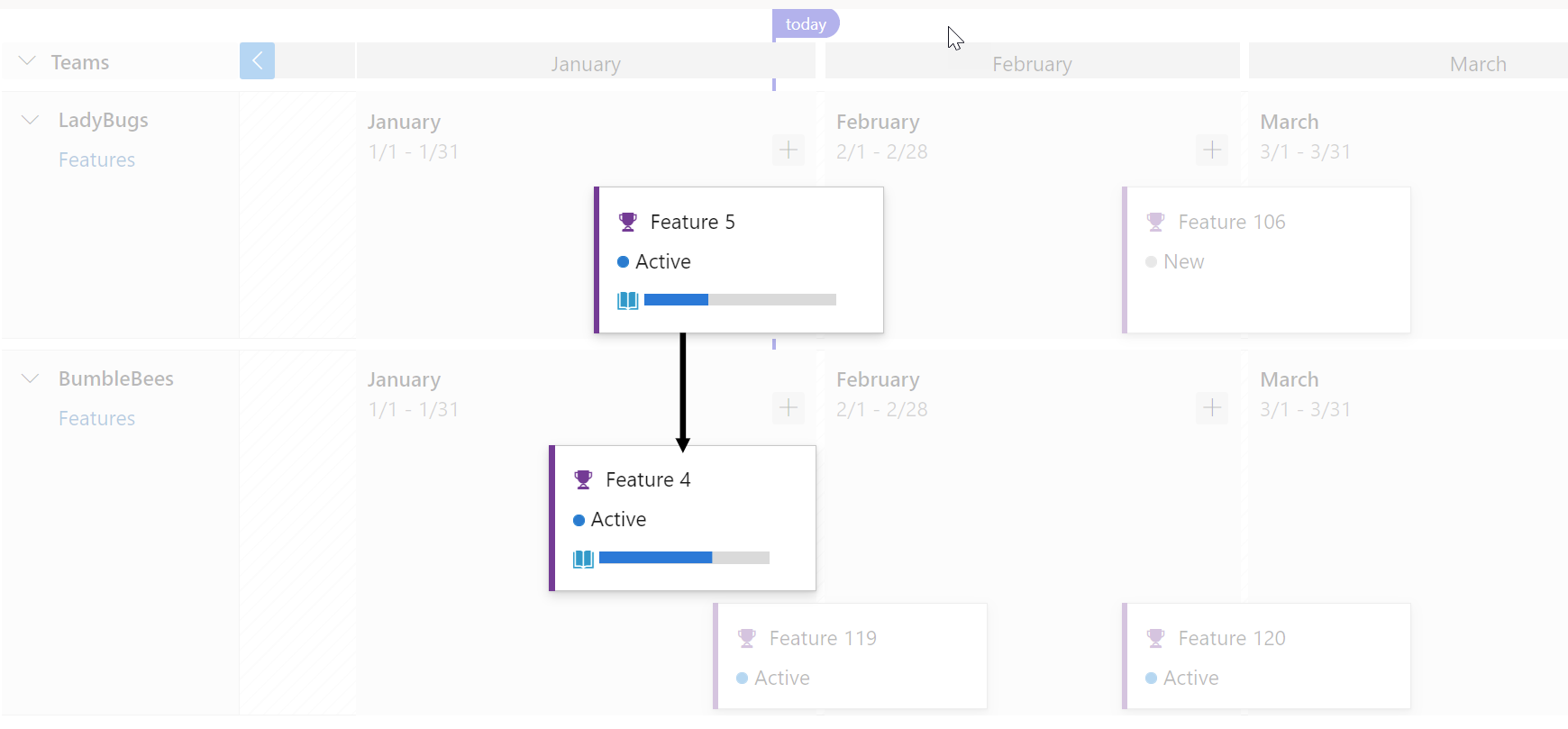

Dependency Lines

Dependencies between work items are visualized with directional arrow lines between the respective work items. Multiple dependencies will display as multiple lines. A red colored line indicates a problem.

Here are some examples.

Here’s an example of a work item with multiple dependencies; this also works in the condensed view.

When there is an issue, the line is colored red, and so is the dependency icon.

Here is an example.

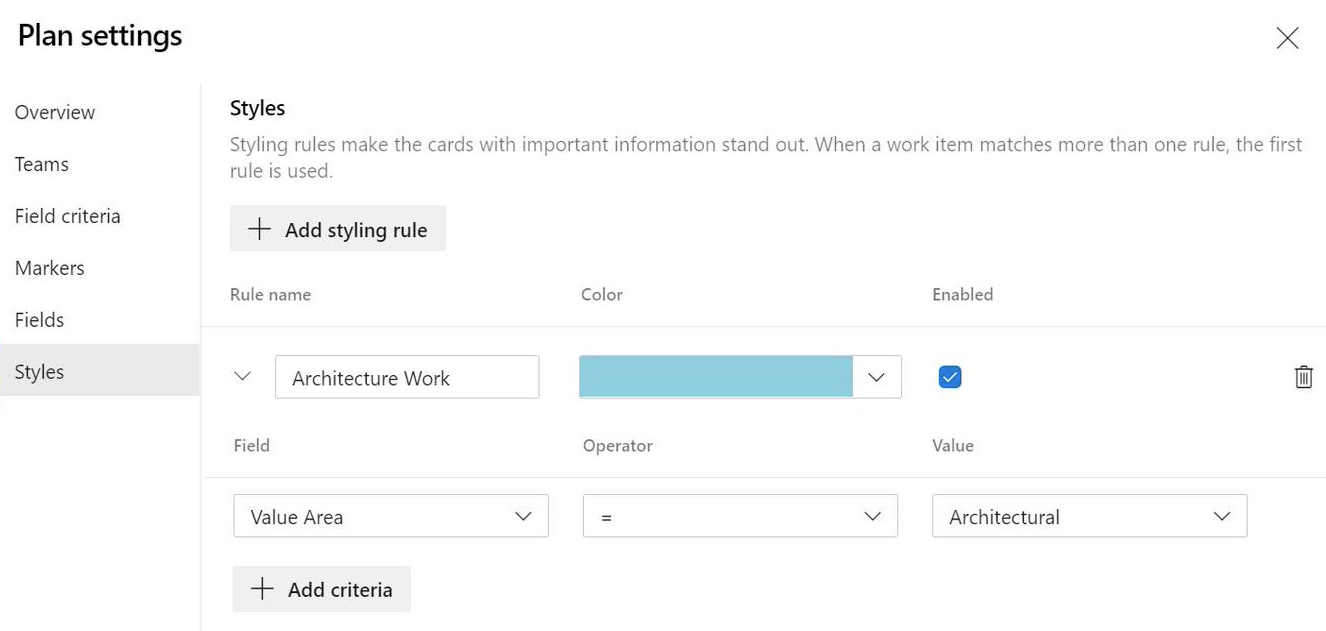
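The text above does not spell out exactly when a dependency counts as a problem. The following sketch assumes a common rule: a predecessor/successor link is flagged when the predecessor is scheduled to finish after the successor starts. The Card type and dependency_problems function are hypothetical names for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Tuple


@dataclass
class Card:
    item_id: int
    start: date
    end: date


def dependency_problems(links: List[Tuple[Card, Card]]) -> List[Tuple[int, int]]:
    """Return (predecessor_id, successor_id) pairs that would be drawn in red.

    Assumed rule: the predecessor ends after the successor starts.
    """
    return [
        (pred.item_id, succ.item_id)
        for pred, succ in links
        if pred.end > succ.start
    ]
```

With this rule, a design item ending January 28 is fine as a predecessor of work starting January 31, but is flagged against work that starts January 17.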

Card Styling

Cards can now be styled using rules, like the Kanban boards. Open the plan settings and click on Styles. In the Styles pane click on + Add styling rule to add the rule and then click Save. There can be up to 10 rules and each rule can have up to 5 clauses.

- Before

- After

To learn more about Delivery Plans, check out the documentation here.

Removed items on Work Items Hub

The Work Items Hub is the place to see a list of items you created or that are assigned to you. It provides several personalized pivot and filter functions to streamline listing work items. One of the top complaints about the Assigned to me pivot is that it displays removed work items. We agree that removed work items are no longer of value and shouldn't be in the backlog. In this sprint, we're hiding all Removed items from the Assigned to me views on the Work Items Hub.

Display correct persona on commit links

The development section in a work item shows the list of relevant commits and pull requests. You can view the author of the commit or pull request along with the associated time. With this update, we fixed an issue with the author's avatar being displayed incorrectly in the view.

Remove the ability to download a deleted attachment from work item history

We fixed a small issue where users were able to download attachments from the work item history, even after the attachment was removed from the form. Now, once the attachment is removed, it cannot be downloaded from the history, nor will the download URL be available from the REST API response.

Added "Will not Fix" value to Bug reason field

As with all other work item types, the Bug work item type has a well-defined workflow. Each workflow consists of three or more States and a Reason. Reasons specify why the item transitioned from one State to another. With this update, we added a Will not fix reason value for the Bug work item type in the Agile process. The value will be available as a reason when moving Bugs from New or Active to Resolved. You can learn more about how to define, capture, triage, and manage software bugs in Azure Boards documentation.

Pipelines

Removal of per-pipeline retention policies in classic builds

You can configure retention policies for both classic builds and YAML pipelines in the Azure DevOps project settings. This was already the only way to configure retention for YAML pipelines, while classic build pipelines could also be configured on a per-pipeline basis. Per-pipeline retention rules for classic build pipelines are no longer supported, and we removed them in this release.

What this means for you: any classic build pipeline that used to have per-pipeline retention rules will be governed by the project-level retention rules.

To ensure you don't lose any builds when upgrading, we will create a lease for all builds existing at the time of upgrade that do not have a lease.

We recommend you check the project-level retention settings after the upgrade. If your pipeline specifically requires custom rules, you can use a custom task in your pipeline. For information on adding retention leases through a task, see the set retention policies for builds, releases, and tests documentation.

New controls for environment variables in pipelines

The Azure Pipelines agent scans standard output for special logging commands and executes them. The setVariable command can be used to set a variable or modify a previously defined variable. This can potentially be exploited by an actor outside the system. For example, if your pipeline has a step that prints the list of files on an FTP server, a person with access to the FTP server can add a new file whose name contains the setVariable command, causing the pipeline to change its behavior.

We have many users that rely on setting variables using the logging command in their pipeline. With this release we are making the following changes to reduce the risk of unwanted uses of the setVariable command.

- We've added a new construct for task authors. By including a snippet such as the following in task.json, a task author can control whether any variables are set by their task.

```json
{
  "restrictions": {
    "commands": {
      "mode": "restricted"
    },
    "settableVariables": {
      "allowed": [
        "myVar",
        "otherVar"
      ]
    }
  }
}
```

In addition, we are updating a number of built-in tasks, such as SSH, so that they cannot be exploited.

Finally, you can now use YAML constructs to control whether a step can set variables.

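To prevent a step from setting any variables:

```yaml
steps:
- script: echo hello
  target:
    settableVariables: none
```

To allow a step to set only the listed variables:

```yaml
steps:
- script: echo hello
  target:
    settableVariables:
    - things
    - stuff
```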
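To make the effect of these restrictions concrete, here is a simplified Python sketch of how an allowlist such as settableVariables could filter setVariable logging commands found in a step's output. It uses the ##vso[task.setvariable variable=NAME]VALUE logging command form, but it is an illustration only and not the agent's implementation: it ignores the commands mode, case-insensitive variable names, and other logging command properties. The collect_variables function is a hypothetical name.

```python
import re
from typing import Dict, Iterable, Optional, Set

# Simplified match for: ##vso[task.setvariable variable=NAME;...]VALUE
SET_VARIABLE = re.compile(r"##vso\[task\.setvariable\s+([^\]]*)\](.*)")


def collect_variables(
    output_lines: Iterable[str], allowed: Optional[Set[str]] = None
) -> Dict[str, str]:
    """Return the variables a step would set, honoring an optional allowlist.

    allowed=None  -> unrestricted (the previous behavior)
    allowed=set() -> nothing may be set (like settableVariables: none)
    """
    variables: Dict[str, str] = {}
    for line in output_lines:
        match = SET_VARIABLE.search(line)
        if match is None:
            continue  # ordinary output, not a logging command
        # Parse "key=value;key=value" properties inside the brackets.
        properties = {
            key.strip(): value
            for key, value in (
                part.split("=", 1) for part in match.group(1).split(";") if "=" in part
            )
        }
        name = properties.get("variable", "").strip()
        if not name:
            continue
        if allowed is not None and name not in allowed:
            continue  # blocked: this step may not set the variable
        variables[name] = match.group(2)
    return variables
```

In the FTP example above, a file listing that contains a crafted file name would set an arbitrary variable when unrestricted; with an allowlist of myVar and otherVar, only those names get through, and with none, nothing does.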

Generate unrestricted token for fork builds

GitHub Enterprise users commonly use forks to contribute to an upstream repository. When Azure Pipelines builds contributions from a fork of a GitHub Enterprise repository, it restricts the permissions granted to the job access token and does not allow pipeline secrets to be accessed by such jobs. You can find more information about the security of building forks in our documentation.

This may be more restrictive than desired in such closed environments, where users might still benefit from an inner-source collaboration model. While you can configure a setting in a pipeline to make secrets available to forks, there is no setting to control the job access token scope. With this release, we are giving you control to generate a regular job access token even for builds of forks.

You can change this setting from Triggers in pipeline editor. Before changing this setting, make sure that you fully understand the security implications of enabling this configuration.

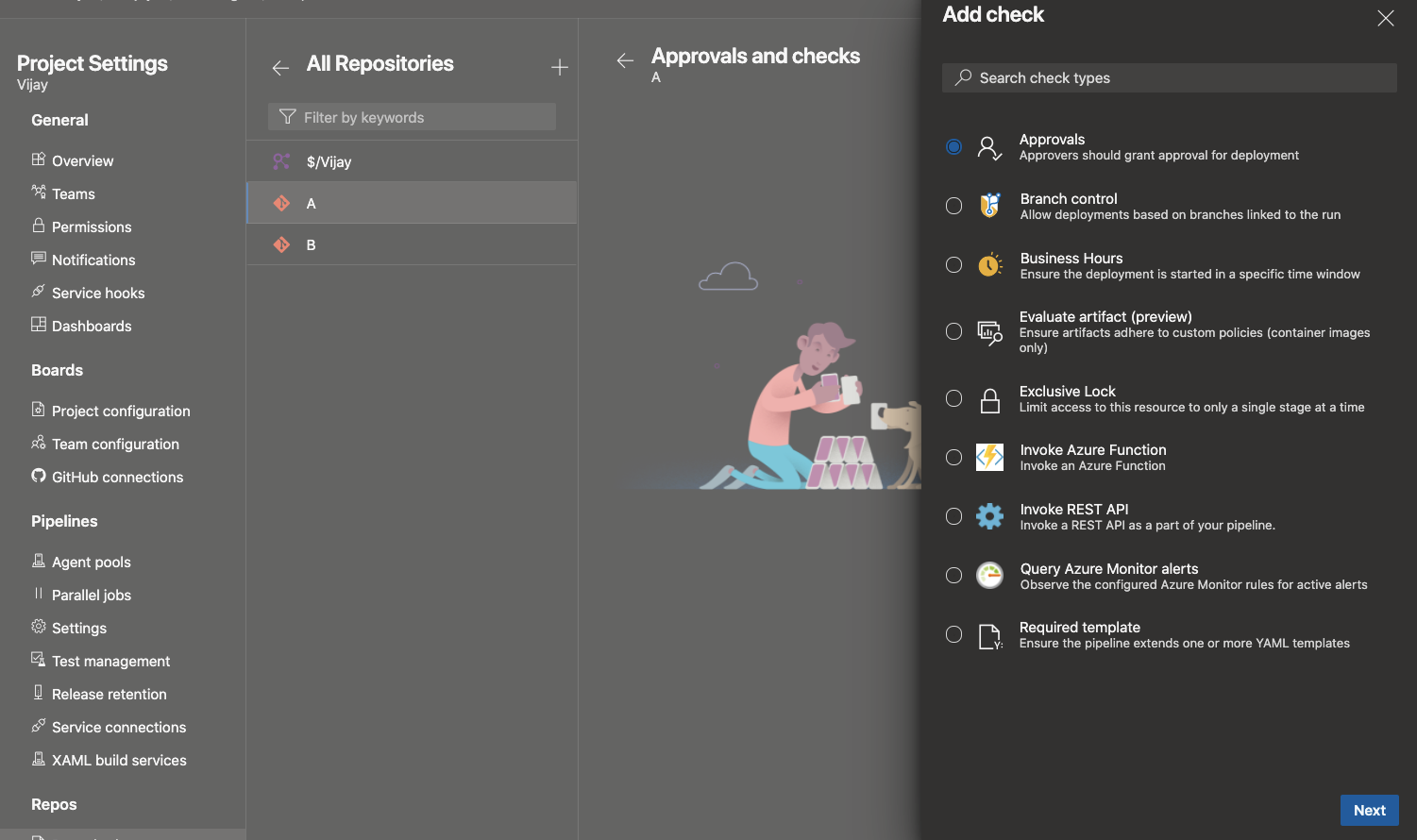

Repos as a protected resource in YAML pipelines

You may organize your Azure DevOps project to host many sub-projects - each with its own Azure DevOps Git repository and one or more pipelines. In this structure, you may want to control which pipelines can access which repositories. For example, let us say that you have two repositories A and B in the same project and two pipelines X and Y that normally build these repositories. You may want to prevent pipeline Y from accessing repository A. In general, you want the contributors of A to control which pipelines they want to provide access to.

While this was partially possible with Azure Git repositories and pipelines, there was no experience for managing it. This feature addresses that gap. Azure Git repositories can now be treated as protected resources in YAML pipelines, just like service connections and agent pools.

As a contributor of repo A, you can add checks and pipeline permissions to your repository. To do this, navigate to the project settings, select Repositories, and then select your repository. You will notice a new menu called "Checks", where you can configure any of the in-the-box or custom checks in the form of Azure Functions.

Under the "Security" tab, you can manage the list of pipelines that can access the repository.

Anytime a YAML pipeline uses a repository, the Azure Pipelines infrastructure verifies and ensures that all the checks and permissions are satisfied.

Note

These permissions and checks are only applicable to YAML pipelines. Classic pipelines do not recognize these new features.

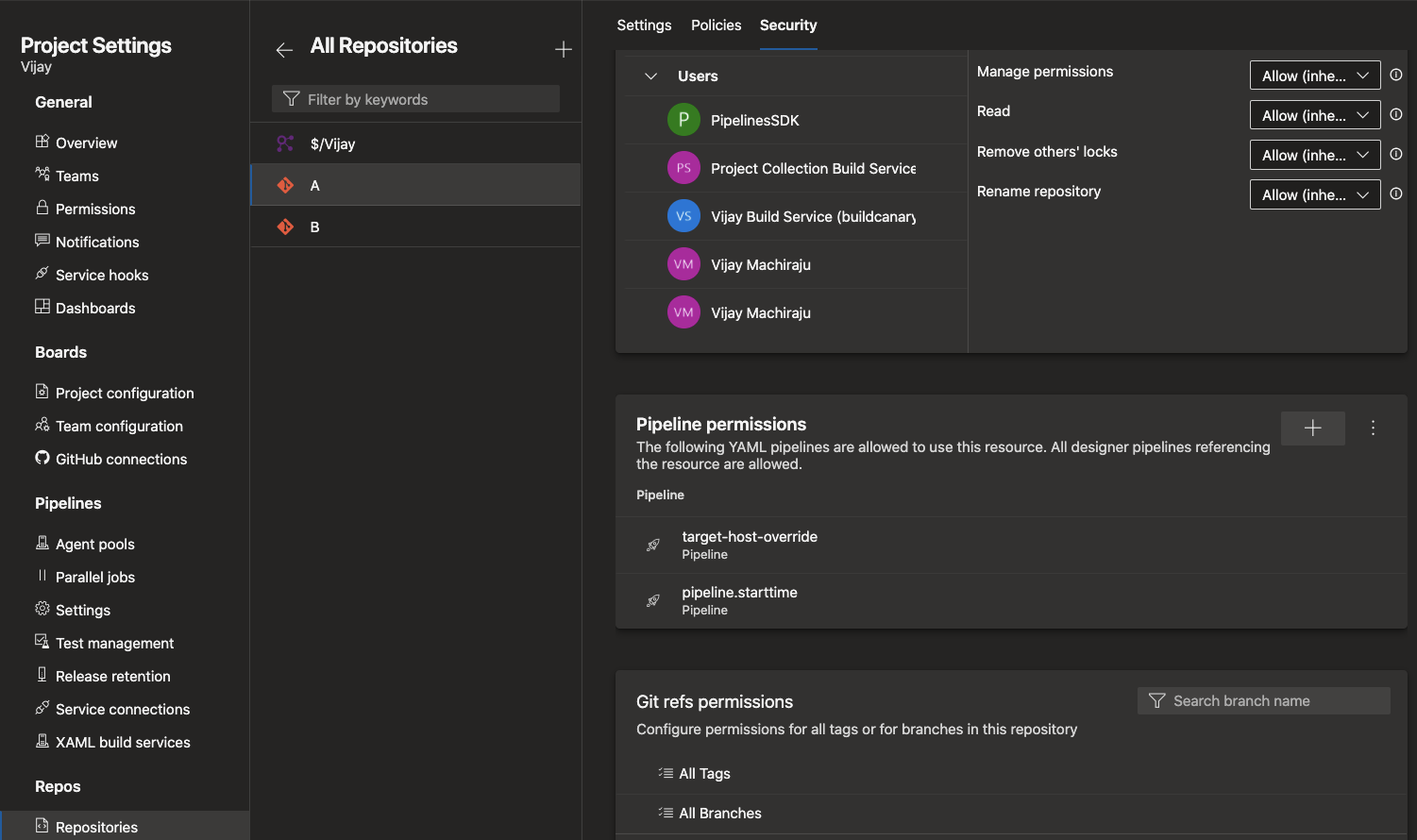

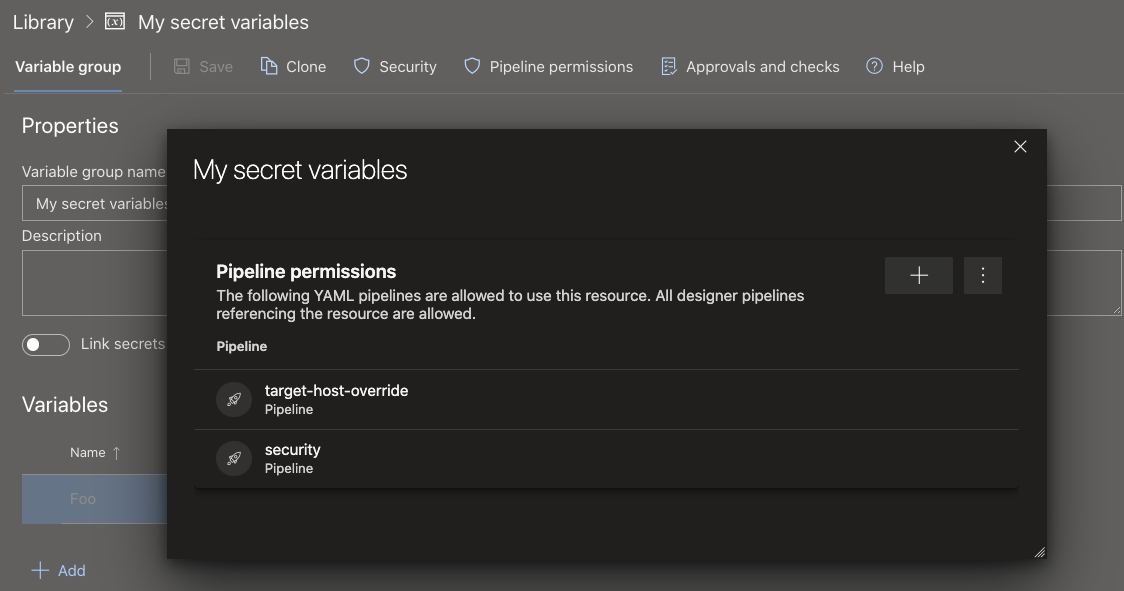

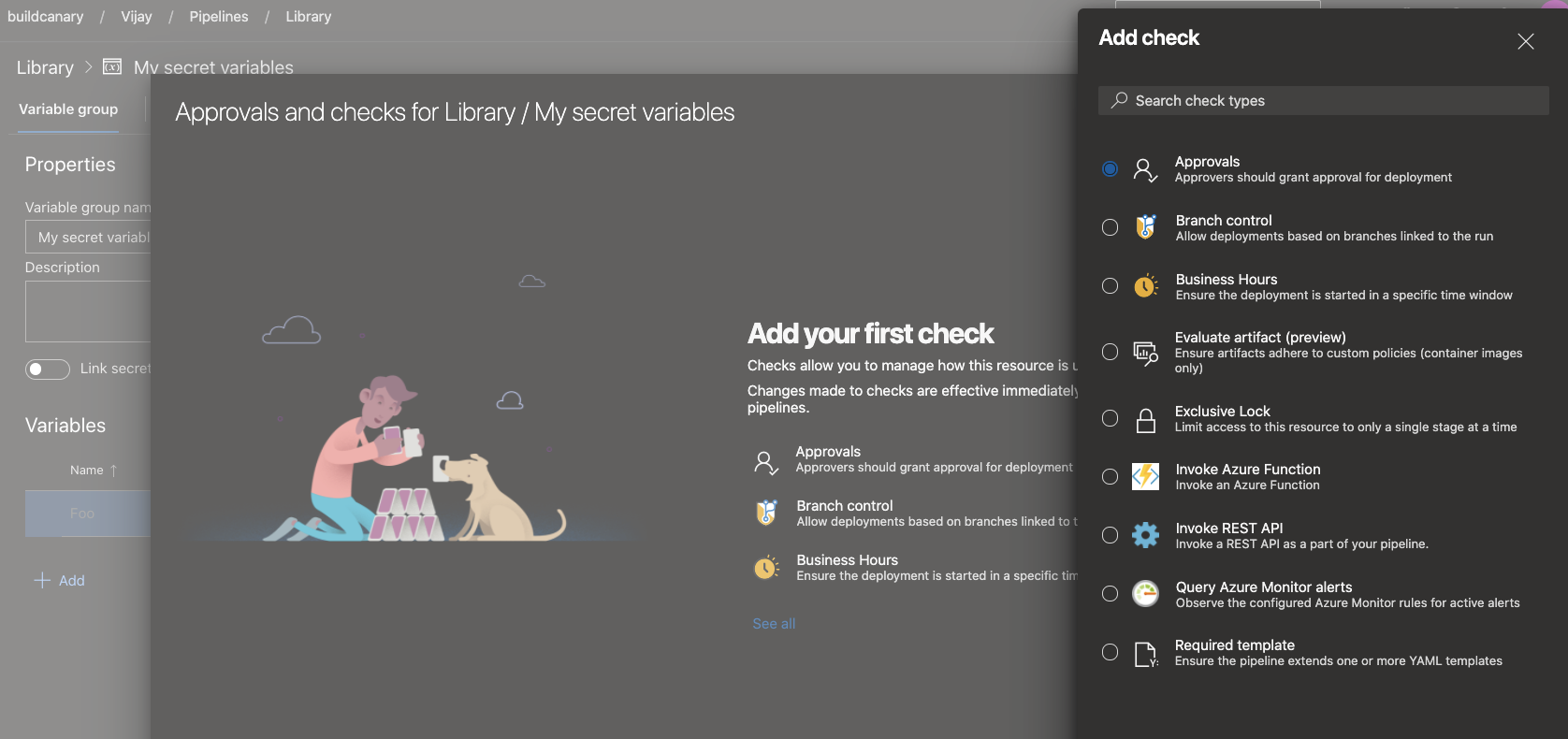

Permissions and checks on variable groups and secure files

You can use different types of shared resources in YAML pipelines. Examples include service connections, variable groups, secure files, agent pools, environments, or repositories. To protect a pipeline from accessing a resource, the owner of the resource can configure permissions and checks on that resource. Every time a pipeline tries to access the resource, all the configured permissions and checks are evaluated. These protections have been available on service connections, environments, and agent pools for a while. They were recently added to repositories. With this release, we are adding the same protections to variable groups and secure files.

To restrict access to a variable group or a secure file to a small set of pipelines, use the Pipelines permissions feature.

To configure checks or approvals that should be evaluated every time a pipeline runs, use the Approvals and checks for Library feature.

Changes in the automatic creation of environments

When you author a YAML pipeline and refer to an environment that does not exist, Azure Pipelines automatically creates the environment. This automatic creation can occur in either the user context or the system context. In the following flows, Azure Pipelines knows about the user performing the operation:

- You use the YAML pipeline creation wizard in the Azure Pipelines web experience and refer to an environment that hasn't been created yet.

- You update the YAML file using the Azure Pipelines web editor and save the pipeline after adding a reference to an environment that does not exist.

In each of the above cases, Azure Pipelines has a clear understanding of the user performing the operation. Hence, it creates the environment and adds the user to the administrator role for the environment. This user has all the permissions to manage the environment and/or to include other users in various roles for managing the environment.

In the following flow, Azure Pipelines does not have information about the user creating the environment: you update the YAML file using an external code editor, add a reference to an environment that does not exist, and then cause a continuous integration pipeline to be triggered. In this case, Azure Pipelines does not know about the user. Previously, we handled this case by adding all the project contributors to the administrator role of the environment. Any member of the project could then change these permissions and prevent others from accessing the environment.

We received your feedback about granting administrator permissions on an environment to all members of a project. As we listened to your feedback, we heard that we should not be auto-creating an environment if it is not clear as to who the user performing the operation is. With this release, we made changes to how environments will be automatically created:

- Going forward, pipeline runs will not automatically create an environment if it does not exist and the user context is not known. In such cases, the pipeline will fail with an Environment not found error. You need to pre-create the environments with the right security and checks configuration before using them in a pipeline.

- Pipelines with known user context will still auto-create environments just like they did in the past.

- Finally, it should be noted that the feature to automatically create an environment was only added to simplify the process of getting started with Azure Pipelines. It was meant for test scenarios, and not for production scenarios. You should always pre-create production environments with the right permissions and checks, and then use them in pipelines.
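The rules above can be summarized as a short decision function. This Python sketch models the described behavior and is not Azure Pipelines code; Environment, resolve_environment, and EnvironmentNotFoundError are hypothetical names, and user=None stands for a run whose user context is unknown, such as a CI run triggered by a push from an external editor.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


class EnvironmentNotFoundError(Exception):
    """Raised when a run without a known user refers to a missing environment."""


@dataclass
class Environment:
    name: str
    administrators: List[str] = field(default_factory=list)


def resolve_environment(
    environments: Dict[str, Environment], name: str, user: Optional[str]
) -> Environment:
    existing = environments.get(name)
    if existing is not None:
        return existing  # pre-created environments are used as-is
    if user is None:
        # Unknown user context: no auto-creation, the run fails instead.
        raise EnvironmentNotFoundError(f"Environment not found: {name}")
    # Known user context: auto-create and make that user the administrator.
    created = Environment(name, administrators=[user])
    environments[name] = created
    return created
```

The design choice mirrors the text: auto-creation only happens when there is a specific user to grant administrator rights to, so project contributors are never made administrators by default.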

Remove Insights dialogue from Build Pipeline

Based on your feedback, the task/pipeline Insights dialogue box that displays when navigating the Build Pipeline has been removed to improve the workflow. The pipeline analytics are still available so that you have the insights you need.

Support for sequential deployments rather than latest only when using exclusive lock checks

In YAML pipelines, checks are used to control the execution of stages on protected resources. One of the common checks that you can use is an exclusive lock check. This check lets only a single run from the pipeline proceed. When multiple runs attempt to deploy to an environment at the same time, the check cancels all the old runs and permits the latest run to be deployed.

Canceling old runs is a good approach when your releases are cumulative and contain all the code changes from previous runs. However, there are some pipelines in which code changes are not cumulative. With this new feature, you can choose to allow all runs to proceed and deploy sequentially to an environment, or preserve the previous behavior of canceling old runs and allowing just the latest. You can specify this behavior using a new property called lockBehavior in the pipeline YAML file. A value of sequential implies that all runs acquire the lock sequentially to the protected resource. A value of runLatest implies that only the latest run acquires the lock to the resource.

To use exclusive lock check with sequential deployments or runLatest, follow these steps:

- Enable the exclusive lock check on the environment (or another protected resource).

- In the YAML file for the pipeline, specify a new property called lockBehavior. This can be specified for the whole pipeline or for a given stage:

Set on a stage:

```yaml
stages:
- stage: A
  lockBehavior: sequential
  jobs:
  - job: Job
    steps:
    - script: echo Hey!
```

Set on the pipeline:

```yaml
lockBehavior: runLatest
stages:
- stage: A
  jobs:
  - job: Job
    steps:
    - script: echo Hey!
```

If you do not specify a lockBehavior, it is assumed to be runLatest.
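The difference between the two values can be illustrated with a small simulation. It is a simplification that assumes all runs are already waiting on the exclusive lock, oldest first; it is not how Azure Pipelines evaluates checks internally, and lock_outcome is a hypothetical name. As in the text, an unspecified lockBehavior is treated as runLatest.

```python
from typing import List, Optional, Tuple


def lock_outcome(
    waiting_runs: List[int], lock_behavior: Optional[str] = None
) -> Tuple[List[int], List[int]]:
    """Return (deployed_in_order, canceled) for runs waiting on an exclusive lock."""
    behavior = lock_behavior or "runLatest"  # unspecified means runLatest
    if behavior == "sequential":
        # Every run acquires the lock in turn, oldest first.
        return list(waiting_runs), []
    if behavior == "runLatest":
        if not waiting_runs:
            return [], []
        # Only the newest run proceeds; older waiting runs are canceled.
        return [waiting_runs[-1]], list(waiting_runs[:-1])
    raise ValueError(f"unsupported lockBehavior: {behavior!r}")
```

For three queued runs 101, 102, and 103, sequential deploys all three in order, while runLatest deploys only 103 and cancels the other two. That is why sequential suits pipelines whose changes are not cumulative.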

Support for Quebec version of ServiceNow

Azure Pipelines has an existing integration with ServiceNow. The integration relies on an app in ServiceNow and an extension in Azure DevOps. We have now updated the app to work with the Quebec version of ServiceNow. Both classic and YAML pipelines now work with Quebec. To ensure that this integration works, upgrade to the new version of the app (4.188.0) from the ServiceNow store. For more information, see Integrate with ServiceNow Change Management.

New YAML conditional expressions

Writing conditional expressions in YAML files just got easier with the use of ${{ else }} and ${{ elseif }} expressions. Below are examples of how to use these expressions in YAML pipelines files.

```yaml
steps:
- script: tool
  env:
    ${{ if parameters.debug }}:
      TOOL_DEBUG: true
      TOOL_DEBUG_DIR: _dbg
    ${{ else }}:
      TOOL_DEBUG: false
      TOOL_DEBUG_DIR: _dbg
```

```yaml
variables:
  ${{ if eq(parameters.os, 'win') }}:
    testsFolder: windows
  ${{ elseif eq(parameters.os, 'linux') }}:
    testsFolder: linux
  ${{ else }}:
    testsFolder: mac
```

Support for wildcards in path filters

Previously, wildcards could be used when specifying inclusion and exclusion branches for CI or PR triggers in a pipeline YAML file, but not when specifying path filters. For instance, you could not include all paths that match src/app/**/myapp*. Several customers pointed this out as an inconvenience. This update fills that gap: you can now use wildcard characters (**, *, or ?) when specifying path filters.
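The following Python sketch shows one plausible reading of the three wildcards: ** spans any number of directory levels, * matches within a single path segment, and ? matches exactly one character. It is an approximation for illustration, not the matcher Azure Pipelines uses; for instance, it requires the whole path to match and does not model how a filter without wildcards may apply to everything under a folder. The function names are hypothetical.

```python
import re


def path_filter_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a path filter using **, * and ? into a regex (assumed semantics)."""
    i, parts = 0, []
    while i < len(pattern):
        if pattern.startswith("**/", i):
            parts.append("(?:.*/)?")  # zero or more whole directories
            i += 3
        elif pattern.startswith("**", i):
            parts.append(".*")  # anything, including '/'
            i += 2
        elif pattern[i] == "*":
            parts.append("[^/]*")  # anything within one path segment
            i += 1
        elif pattern[i] == "?":
            parts.append("[^/]")  # exactly one character, not '/'
            i += 1
        else:
            parts.append(re.escape(pattern[i]))  # literal character
            i += 1
    return re.compile("".join(parts))


def matches(pattern: str, path: str) -> bool:
    return path_filter_to_regex(pattern).fullmatch(path) is not None
```

Under this reading, src/app/**/myapp* matches both src/app/myapp.cs and src/app/ui/web/myapp_main.ts, but not src/lib/myapp.cs.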

The default agent specification for pipelines will be Windows-2022

The windows-2022 image is ready to be the default version for the windows-latest label in Azure Pipelines Microsoft-hosted agents. Until now, this label pointed to windows-2019 agents. This change will be rolled out over a period of several weeks beginning on January 17. We plan to complete the migration by March.

Azure Pipelines has supported windows-2022 since September 2021. We have monitored your feedback to improve the windows-2022 image stability and now we are ready to set it as the latest.

The windows-2022 image includes Visual Studio 2022. For a full list of differences between windows-2022 and windows-2019, visit the GitHub issue. For a full list of software installed on the image, check here.

Pipeline folder rename validates permissions

Folders containing pipelines can be renamed. Renaming a folder will now succeed only if the user has edit permissions on at least one pipeline contained in the folder.

Pipelines Agent runtime upgrade planning

What is the Pipeline Agent?

The Azure DevOps Pipeline Agent is the software product that runs on a pipeline host to execute pipeline jobs. It runs on Microsoft-hosted agents, Scale Set agents, and self-hosted agents; in the latter case, you install it yourself. The Pipeline Agent consists of a Listener and a Worker (implemented in .NET). The Worker runs tasks, which are implemented in either Node or PowerShell, and therefore hosts those runtimes for them.

Upcoming upgrade to .NET 6 & Red Hat 6 deprecation

With the release of .NET 6 we are able to take advantage of its new cross-platform capabilities. Specifically, we will be able to provide native compatibility for Apple Silicon and Windows Arm64. Hence we plan to move to .NET 6 for Pipeline Agent (Listener and Worker) in the coming months.

Due to a number of constraints that this imposes, we are dropping Red Hat Enterprise Linux 6 support from our agent as of April 30, 2022.

Updates to Azure File Copy task

We are rolling out a new version of Azure File Copy task. This task is used to copy files to Microsoft Azure storage blobs or virtual machines (VMs). The new version has several updates that have been often requested by the community:

The AzCopy tool's version has been updated to 10.12.2, which has support for file content types. As a result, when you copy PDF, Excel, PPT, or other files with a supported MIME type, the file's content type is set correctly.

With the new version of AzCopy, you can also configure a setting to clean the target when the destination type is Azure Blob. Setting this option will delete all the folders/files in that container. Or if a blob prefix is provided, all the folders/files in that prefix will be deleted.

The new version of the task relies on Az modules that are installed on the agent instead of AzureRM modules. This will remove an unnecessary warning in some cases when using the task.

The changes are part of a major version update for this task. You would have to explicitly update your pipelines to use the new version. We made this choice of updating the major version to ensure that we do not break any pipelines that are still dependent on AzureRM modules.

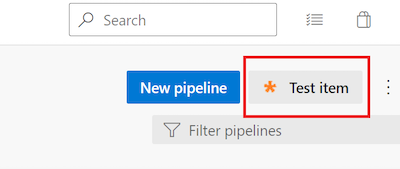

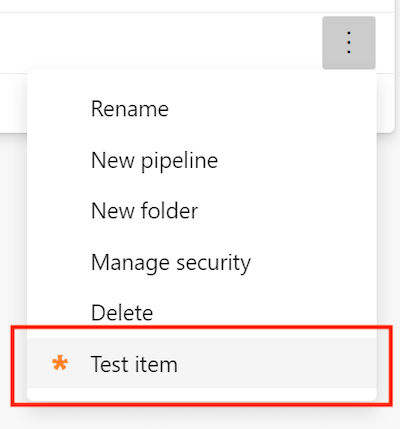

New extension points for Pipelines details view

We've added two new extensibility points that you can target in your extensions. These extensibility points let you add a custom button in the pipeline header and a custom menu on a pipeline folder:

- Custom button in the pipeline header: ms.vss-build-web.pipelines-header-menu

- Custom menu on a pipeline folder: ms.vss-build-web.pipelines-folder-menu

To use these new extensibility points, simply add a new contribution that targets them in your Azure DevOps extension's vss-extension.json manifest file.

For example:

```json
"contributions": [
  {
    "id": "pipelinesFolderContextMenuTestItem",
    "type": "ms.vss-web.action",
    "description": "Custom menu on a pipeline folder",
    "targets": [
      "ms.vss-build-web.pipelines-folder-menu"
    ],
    "properties": {
      "text": "Test item",
      "title": "ms.vss-code-web.source-item-menu",
      "icon": "images/show-properties.png",
      "group": "actions",
      "uri": "main.html",
      "registeredObjectId": "showProperties"
    }
  },
  {
    "id": "pipelinesHeaderTestButton",
    "type": "ms.vss-web.action",
    "description": "Custom button in the pipeline header",
    "targets": [
      "ms.vss-build-web.pipelines-header-menu"
    ],
    "properties": {
      "text": "Test item",
      "title": "ms.vss-code-web.source-item-menu",
      "icon": "images/show-properties.png",
      "group": "actions",
      "uri": "main.html",
      "registeredObjectId": "showProperties"
    }
  }
]
```

The result is a custom button in the pipeline header and a custom menu on a pipeline folder.
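Before you publish, it can help to confirm that your manifest actually targets the new extensibility points. The following is a minimal Python sketch that reports which contributions target each of the two IDs listed above. The helper name find_pipeline_menu_contributions is hypothetical, and the check only looks at each contribution's targets array. It does not validate the rest of the manifest.

```python
import json

# The two extensibility point IDs introduced in this update.
NEW_POINTS = (
    "ms.vss-build-web.pipelines-header-menu",
    "ms.vss-build-web.pipelines-folder-menu",
)


def find_pipeline_menu_contributions(manifest: dict) -> dict:
    """Map each new extensibility point to the IDs of contributions targeting it.

    Hypothetical helper: it only inspects contributions[].targets and does
    not validate any other part of the manifest.
    """
    found = {point: [] for point in NEW_POINTS}
    for contribution in manifest.get("contributions", []):
        for target in contribution.get("targets", []):
            if target in found:
                found[target].append(contribution.get("id"))
    return found


if __name__ == "__main__":
    # In practice, json.load() your own vss-extension.json instead of this sample.
    sample = json.loads("""
    {
      "contributions": [
        {"id": "pipelinesFolderContextMenuTestItem",
         "targets": ["ms.vss-build-web.pipelines-folder-menu"]},
        {"id": "pipelinesHeaderTestButton",
         "targets": ["ms.vss-build-web.pipelines-header-menu"]}
      ]
    }
    """)
    for point, ids in find_pipeline_menu_contributions(sample).items():
        print(f"{point}: {ids if ids else 'none'}")
```

An empty list for either point means that no contribution in the manifest will appear in that location.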

Improved migration to Azure DevOps Services

When you import from Azure DevOps Server to Azure DevOps Services, keep in mind that Azure DevOps Services no longer supports per-pipeline retention rules. With this update, these policies are removed when you migrate from your on-premises Azure DevOps Server to Azure DevOps Services. To learn more about configuring retention policies, see our documentation on setting retention policies for builds, releases, and tests.

Improvement to Pipelines Runs REST API

Previously, the Pipelines Runs REST API returned only the self repository. With this update, the Pipelines Runs REST API returns all repository resources of a build.
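As an illustration, the following minimal Python sketch builds a Runs - Get URL and collects every repository resource from the returned run JSON, rather than only the self repository. The URL shape (collection URL, project, _apis/pipelines/{pipelineId}/runs/{runId}), the api-version=7.0 default, and the assumption that repositories appear under resources.repositories keyed by alias are not stated in these notes. Check them against the REST reference for your server. The helper names and the sample JSON are hypothetical.

```python
from urllib.parse import quote


def build_run_url(collection_url: str, project: str, pipeline_id: int,
                  run_id: int, api_version: str = "7.0") -> str:
    """Build a Runs - Get URL (URL shape and api_version are assumptions)."""
    return (f"{collection_url.rstrip('/')}/{quote(project)}/_apis/pipelines/"
            f"{pipeline_id}/runs/{run_id}?api-version={api_version}")


def repository_resources(run: dict) -> dict:
    """Return {alias: {"id", "refName", "version"}} for every repository resource.

    Assumes the run JSON keeps repositories under resources.repositories,
    keyed by alias ("self" being the pipeline's own repository).
    """
    repos = run.get("resources", {}).get("repositories", {})
    return {
        alias: {
            "id": res.get("repository", {}).get("id"),
            "refName": res.get("refName"),
            "version": res.get("version"),
        }
        for alias, res in repos.items()
    }


if __name__ == "__main__":
    # Hypothetical response body, trimmed to the fields used above.
    sample_run = {"resources": {"repositories": {
        "self": {"repository": {"id": "repo-1"}, "refName": "refs/heads/main", "version": "a1b2c3"},
        "tools": {"repository": {"id": "repo-2"}, "refName": "refs/heads/release", "version": "d4e5f6"},
    }}}
    print(build_run_url("https://tfs.contoso.com/DefaultCollection", "Fabrikam", 12, 345))
    for alias, info in repository_resources(sample_run).items():
        print(alias, info)
```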

Extended YAML Pipelines templates can now be passed context information for stages, jobs, and deployments

With this update, we are adding a new templateContext property for job, deployment, and stage YAML pipeline components meant to be used in conjunction with templates.

Here is a scenario for using templateContext:

- You use templates to reduce code duplication or to improve the security of your pipelines.

- Your template takes as a parameter a list of stages, jobs, or deployments.

- The template processes the input list and performs some transformations on each of the stages, jobs, or deployments. For example, it sets the environment in which each job runs or adds additional steps to enforce compliance.

- The processing requires the pipeline author to pass additional information into the template for each stage, job, or deployment in the list.

Let's look at an example. Say you are authoring a pipeline that runs end-to-end tests for pull request validation. Your goal is to test only one component of your system, but, because you plan to run end-to-end tests, you need an environment where more of the system's components are available, and you need to specify their behavior.

You realize other teams will have similar needs, so you decide to extract the steps for setting up the environment into a template. Its code looks like the following:

testing-template.yml

```yaml
parameters:
- name: testSet
  type: jobList

jobs:
- ${{ each testJob in parameters.testSet }}:
  - ${{ if eq(testJob.templateContext.expectedHTTPResponseCode, 200) }}:
    - job:
      steps:
        - script: ./createSuccessfulEnvironment.sh ${{ testJob.templateContext.requiredComponents }}
        - ${{ testJob.steps }}
  - ${{ if eq(testJob.templateContext.expectedHTTPResponseCode, 500) }}:
    - job:
      steps:
        - script: ./createRuntimeErrorEnvironment.sh ${{ testJob.templateContext.requiredComponents }}
        - ${{ testJob.steps }}
```

For each job in the testSet parameter, the template configures the system components specified by ${{ testJob.templateContext.requiredComponents }} to return ${{ testJob.templateContext.expectedHTTPResponseCode }}, and then runs the job's own steps.

Then, you can create your own pipeline that extends testing-template.yml, as in the following example.

sizeapi.pr_validation.yml

```yaml
trigger: none

pool:
  vmImage: ubuntu-latest

extends:
  template: testing-template.yml
  parameters:
    testSet:
    - job: positive_test
      templateContext:
        expectedHTTPResponseCode: 200
        requiredComponents: dimensionsapi
      steps:
      - script: ./runPositiveTest.sh
    - job: negative_test
      templateContext:
        expectedHTTPResponseCode: 500
        requiredComponents: dimensionsapi
      steps:
      - script: ./runNegativeTest.sh
```

This pipeline runs two tests: a positive one and a negative one. Both tests require the dimensionsapi component to be available. The positive_test job expects dimensionsapi to return HTTP code 200, while negative_test expects it to return HTTP code 500.
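The following Python sketch shows what testing-template.yml effectively produces for this pipeline. For each entry in testSet, it selects a setup script based on templateContext.expectedHTTPResponseCode and places that script before the job's own steps. This is only a model of the template logic for illustration. Azure Pipelines expands templates itself, and the dictionaries here stand in for the YAML. The function name expand_test_set is hypothetical.

```python
# Setup script per expected HTTP code, mirroring the two ${{ if }} branches
# in testing-template.yml.
SETUP_SCRIPTS = {
    200: "./createSuccessfulEnvironment.sh",
    500: "./createRuntimeErrorEnvironment.sh",
}


def expand_test_set(test_set: list) -> list:
    """Model of the template's compile-time expansion (not the real engine)."""
    expanded = []
    for test_job in test_set:
        ctx = test_job["templateContext"]
        script = SETUP_SCRIPTS.get(ctx["expectedHTTPResponseCode"])
        if script is None:
            # Neither ${{ if }} branch matches, so the template emits no job.
            continue
        setup_step = {"script": f"{script} {ctx['requiredComponents']}"}
        expanded.append({
            # The template emits an unnamed "- job:"; the source job name is
            # kept here only to make the output easier to read.
            "source_job": test_job["job"],
            "steps": [setup_step] + test_job["steps"],
        })
    return expanded


if __name__ == "__main__":
    test_set = [
        {"job": "positive_test",
         "templateContext": {"expectedHTTPResponseCode": 200, "requiredComponents": "dimensionsapi"},
         "steps": [{"script": "./runPositiveTest.sh"}]},
        {"job": "negative_test",
         "templateContext": {"expectedHTTPResponseCode": 500, "requiredComponents": "dimensionsapi"},
         "steps": [{"script": "./runNegativeTest.sh"}]},
    ]
    for job in expand_test_set(test_set):
        print(job["source_job"], [step["script"] for step in job["steps"]])
```

In this model, an entry whose expected code matches neither branch (for example, 404) produces no job. This matches the template as written, which has only the 200 and 500 branches.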

Support Group Managed Service Accounts as agent service account

The Azure Pipelines agent now supports Group Managed Service Accounts on self-hosted agents on Windows.

Group Managed Service Accounts provide centralized password management for domain accounts that act as service accounts. The Azure Pipelines agent recognizes this type of account, so a password isn't required during configuration:

```powershell
.\config.cmd --url https://dev.azure.com/<Organization> `
    --auth pat --token <PAT> `
    --pool <AgentPool> `
    --agent <AgentName> --replace `
    --runAsService `
    --windowsLogonAccount <DOMAIN>\<gMSA>
```

Informational runs

An informational run tells you that Azure DevOps failed to retrieve a YAML pipeline's source code. Such a run looks like the following.

Azure DevOps retrieves a YAML pipeline's source code in response to external events, such as a pushed commit, or in response to internal triggers, such as checking whether there are code changes and whether a scheduled run should start. When this retrieval fails, the system creates an informational run. Informational runs are created only if the pipeline's code is in a GitHub or Bitbucket repository.

Retrieving a pipeline's YAML code can fail due to:

- Repository provider experiencing an outage

- Request throttling

- Authentication issues

- Unable to retrieve the content of the pipeline's .yml file

Read more about Informational runs.

Build Definition REST API retentionRules property is obsolete

In the Build Definition REST API's BuildDefinition response type, the retentionRules property is now marked as obsolete, as this property always returns an empty set.

Repos

New TFVC pages

We have been updating various pages in Azure DevOps to use a new web platform with the goal of making the experience more consistent and more accessible across the various services. TFVC pages have been updated to use the new web platform. With this version, we are making the new TFVC pages generally available.

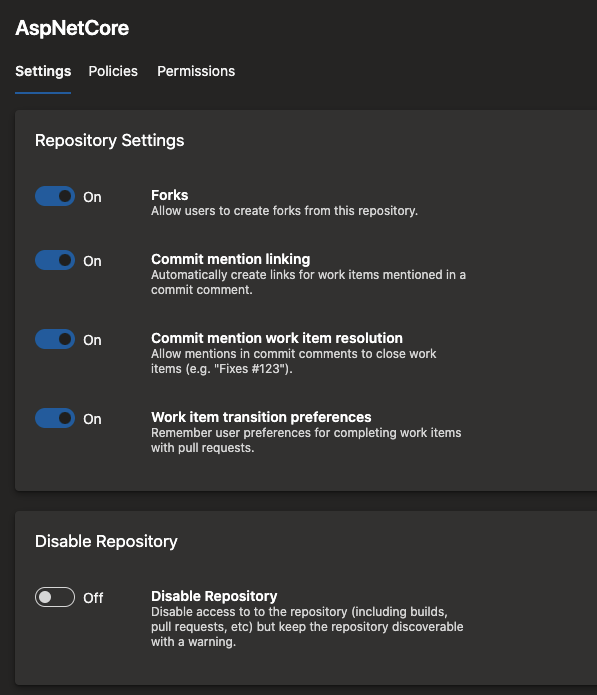

Disable a repository

Customers have often requested a way to disable a repository and prevent users from accessing its contents. For example, you may want to do this when:

- You found a secret in the repository.

- A third-party scanning tool found a repository to be out of compliance.

In such cases, you may want to temporarily disable the repository while you work to resolve the issue. With this update, you can disable a repository if you have Delete repository permissions. When a repository is disabled, users:

- Can still see the repo in the list of repos

- Cannot read the contents of the repo

- Cannot update the contents of the repo

- See a message that the repo has been disabled when they try to access it in the Azure Repos UI

After the necessary mitigation steps have been taken, users with Delete repository permission can re-enable the repository. To disable or enable a repository, go to Project Settings, select Repositories, and then the specific repo.

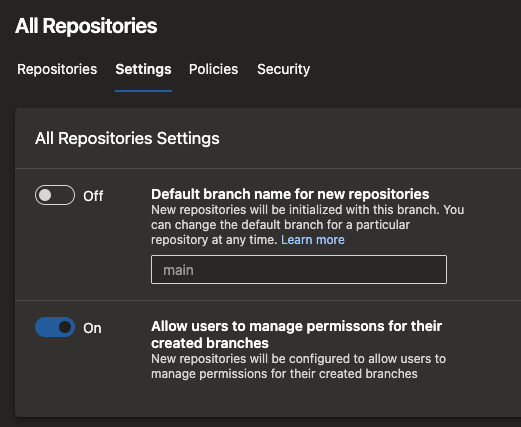

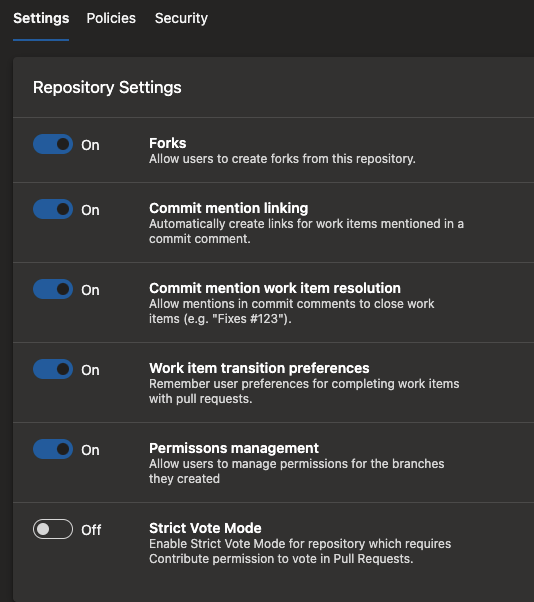
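These notes describe disabling a repository through Project Settings. If you prefer to script it, the following minimal Python sketch builds, but does not send, a PATCH request that sets isDisabled on a repository. The endpoint path, the isDisabled body field, and api-version=7.0 are assumptions based on the Git Repositories - Update REST API and are not stated in these notes. Verify them for your server version. The helper name and sample values are hypothetical. The caller still needs Delete repository permission.

```python
import base64
import json
import urllib.request
from urllib.parse import quote


def build_repo_disable_request(collection_url: str, project: str,
                               repository_id: str, pat: str,
                               disabled: bool = True,
                               api_version: str = "7.0") -> urllib.request.Request:
    """Build (but do not send) a PATCH that sets isDisabled on a repository.

    Assumption: the Git Repositories - Update endpoint accepts
    {"isDisabled": <bool>} on your server version.
    """
    url = (f"{collection_url.rstrip('/')}/{quote(project)}/_apis/git/"
           f"repositories/{repository_id}?api-version={api_version}")
    # A PAT is sent as the password of HTTP Basic auth with an empty user name.
    token = base64.b64encode(f":{pat}".encode("utf-8")).decode("ascii")
    body = json.dumps({"isDisabled": disabled}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PATCH",
        headers={"Authorization": f"Basic {token}",
                 "Content-Type": "application/json"},
    )


if __name__ == "__main__":
    req = build_repo_disable_request(
        "https://tfs.contoso.com/DefaultCollection", "Fabrikam", "<repository-id>", "<PAT>")
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req)
```

Calling the same helper with disabled=False builds the request that re-enables the repository after mitigation.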

Configure branch creators to not get "Manage permissions" on their branches

When you create a new branch, you get "Manage permissions" on that branch. This permission lets you change the permissions of other users or admit additional users to contribute to that branch. For instance, a branch creator may use this permission to allow another external user to make changes to the code. Or, they may allow a pipeline (build service identity) to change the code in that branch. In certain projects with higher compliance requirements, users should not be able to make such changes.

With this update, you can configure any and all repositories in your team project and restrict branch creators from getting the "Manage permissions" permission. To do this, navigate to the project settings, select Repositories, and then Settings for all repositories or a specific repository.

This setting is on by default to preserve the existing behavior. However, you can turn it off if you want to use this new security feature.

Prevent fork users from voting on their upstream PRs

With Azure Repos, users with "read" permission on a repository can fork the repo and make changes in their fork. To submit a pull request with their changes to the upstream, users need "contribute to pull requests" permission on the upstream. However, this permission also governs who can vote on pull requests in the upstream repository. As a result, a user who isn't a contributor to the repository can submit a pull request and, depending on how your branch policies are set up, cause it to be merged.

In projects that promote an inner-source model, fork-and-contribute is a common pattern. To secure and promote this pattern further, we are changing the permission to vote on a pull request from "contribute to pull requests" to "contribute". However, this change is not made by default in all projects. To switch this permission, you must opt in by selecting a new policy on your repository called "Strict Vote Mode". We recommend that you do so if you rely on forks in Azure Repos.

Reporting

Group By Tags available in chart widgets

The Group By Tags chart widget is now available by default for all customers. The chart widget now includes an option for tags, so you can visualize your information by selecting all tags or a specific set of tags.

Display custom work item types in burndown widget

Previously, custom work item types configured in the burndown widget were not displayed when the widget summed or counted by a custom field. With this update, we fixed this issue, and you can now see custom work item types in the burndown widget.

Feedback

We would love to hear from you! You can report a problem or provide an idea and track it through Developer Community and get advice on Stack Overflow.