

In this article, you will find information regarding the newest release for Azure DevOps Server.

To learn more about installing or upgrading an Azure DevOps Server deployment, see Azure DevOps Server Requirements.

To download Azure DevOps Server products, visit the Azure DevOps Server Downloads page.

Direct upgrade to Azure DevOps Server 2022 Update 1 is supported from Azure DevOps Server 2019 or Team Foundation Server 2015 or newer. If your TFS deployment is on TFS 2013 or earlier, you need to perform some interim steps before upgrading to Azure DevOps Server 2022. Please see the Install page for more information.

Azure DevOps Server 2022 Update 1 Patch 4 Release Date: June 11, 2024

| File | SHA-256 Hash |
|---|---|
| devops2022.1patch4.exe | 29012B79569F042E2ED4518CE7216CA521F9435CCD80295B71F734AA60FCD03F |
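One way to check a downloaded patch against the published hash is sketched below. This is a minimal illustration, not an official verification tool: the function names are hypothetical, and the file path is whatever location you saved the installer to.

```python
import hashlib

# Published SHA-256 hash for devops2022.1patch4.exe, copied from the table above.
EXPECTED_SHA256 = "29012B79569F042E2ED4518CE7216CA521F9435CCD80295B71F734AA60FCD03F"


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Return the uppercase hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest().upper()


def matches_published_hash(path, expected=EXPECTED_SHA256):
    """Compare the file's digest with the expected hash, ignoring case."""
    return sha256_of_file(path) == expected.strip().upper()
```

Calling `matches_published_hash("devops2022.1patch4.exe")` returns `True` only when the local file's digest equals the published value. The same helper can be used for the other patches by passing their hashes as `expected`.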

We have released Patch 4 for Azure DevOps Server 2022 Update 1 that includes a fix for the following.

- Fixed an issue in wiki and work items where search results were not available for Projects that had Turkish "I" in their name for Turkish locale.

Azure DevOps Server 2022 Update 1 Patch 3 Release Date: March 12, 2024

| File | SHA-256 Hash |
|---|---|
| devops2022.1patch3.exe | E7DE45650D74A1B1C47F947CDEF4BF3307C4323D749408EE7F0524C2A04D2911 |

We have released Patch 3 for Azure DevOps Server 2022 Update 1 that includes fixes for the following.

- Resolved an issue where the Proxy Server stopped working after installing Patch 2.

- Fixed a rendering issue on the extension details page affecting languages that were not English.

Azure DevOps Server 2022 Update 1 Patch 2 Release Date: February 13, 2024

| File | SHA-256 Hash |
|---|---|
| devops2022.1patch2.exe | 59B3191E86DB787F91FDD1966554DE580CA97F06BA9CCB1D747D41A2317A5441 |

We have released Patch 2 for Azure DevOps Server 2022 Update 1 that includes fixes for the following.

- Fixed a rendering issue on the details page of the Search extension.

- Fixed a bug where the disk space used by the proxy cache folder was calculated incorrectly and the folder was not properly cleaned up.

- CVE-2024-20667: Azure DevOps Server Remote Code Execution Vulnerability.

Azure DevOps Server 2022 Update 1 Patch 1 Release Date: December 12, 2023

| File | SHA-256 Hash |
|---|---|
| devops2022.1patch1.exe | 9D0FDCCD1F20461E586689B756E600CC16424018A3377042F7DC3A6EEF096FB6 |

We have released Patch 1 for Azure DevOps Server 2022 Update 1 that includes fixes for the following.

- Prevent setting "requested for" when queuing a pipeline run.

Azure DevOps Server 2022 Update 1 Release Date: November 28, 2023

Note

There are two known issues with this release:

- The Agent version does not update after upgrading to Azure DevOps Server 2022.1 and using Update Agent in Agent Pool configuration. We are currently working on a patch to resolve this issue and will share updates in the Developer Community as we make progress. In the meantime, you can find a workaround for this issue in this Developer Community ticket.

- Maven 3.9.x compatibility. Maven 3.9.x introduced breaking changes with Azure Artifacts by updating the default Maven Resolver transport to native HTTP from Wagon. This causes 401 authentication issues for customers using http, instead of https. You can find more details about this issue here. As a workaround, you can continue using Maven 3.9.x with the property -Dmaven.resolver.transport=wagon. This change forces Maven to use Wagon Resolver Transport. Check out the documentation here for more details.

Azure DevOps Server 2022.1 is a roll up of bug fixes. It includes all features in the Azure DevOps Server 2022.1 RC2 previously released.


Azure DevOps Server 2022 Update 1 RC2 Release Date: October 31, 2023

Azure DevOps Server 2022.1 RC2 is a roll up of bug fixes. It includes all features in the Azure DevOps Server 2022.1 RC1 previously released.

Note

There is a known issue with Maven 3.9.x compatibility. Maven 3.9.x introduced breaking changes with Azure Artifacts by updating the default Maven Resolver transport to native HTTP from Wagon. This causes 401 authentication issues for customers using http, instead of https. You can find more details about this issue here.

As a workaround, you can continue using Maven 3.9.x with the property -Dmaven.resolver.transport=wagon. This change forces Maven to use Wagon Resolver Transport. Check out the documentation here for more details.

The following has been fixed with this release:

- Fixed an issue in local feeds where the upstream entries were not resolving display name changes.

- An unexpected error occurred when switching tabs from Code to another tab on the Search page.

- Error while creating a new work item type with Chinese, Japanese and Korean (CJK) Unified Ideographs. A question mark was displayed on RAW log on gated check-in when the team project name or files included CJK.

- Fixed an issue during installation of Search where the Java Virtual Machine (JVM) couldn't allocate enough memory to Elastic Search process. Search configuration widget will now use Java Development Kit (JDK) that is bundled with Elastic Search and hence removes the dependency on any Java Runtime Environment (JRE) preinstalled on the targeted server.

Azure DevOps Server 2022 Update 1 RC1 Release Date: September 19, 2023

Azure DevOps Server 2022.1 RC1 includes many new features. Some of the highlights include:

- All Public REST APIs support granular PAT scopes

- Last Accessed column on Delivery Plans page

- Visualize all dependencies on Delivery Plans

- @CurrentIteration macro in Delivery Plans

- Current project set as default scope for build access token in classic pipelines

- Show Parent in Query Results Widget

- Copy Dashboard

- Support for additional diagram types in wiki pages

- Container Registry service connections can now use Azure Managed Identities

You can also jump to individual sections to see all the new features for each service:

General

All Public REST APIs support granular PAT scopes

Previously, a number of publicly documented Azure DevOps REST APIs were not associated with scopes (e.g., work item read), which led customers to use full scopes to consume these APIs through non-interactive authentication mechanisms like personal access tokens (PATs). A full-scope personal access token increases the risk if it lands in the hands of a malicious user. This is one of the main reasons that many of our customers did not take full advantage of the control plane policies to restrict the usage and behavior of PATs.

All public Azure DevOps REST APIs are now associated with and support a granular PAT scope. If you are currently using a full-scoped PAT to authenticate to one of the public Azure DevOps REST APIs, consider migrating to a PAT with the specific scope accepted by the API to avoid unnecessary access. The supported granular PAT scope(s) for a given REST API can be found in the Security section of the documentation pages. Additionally, there is a table of scopes here.
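As a rough illustration of how a PAT is presented to a REST API, the sketch below builds the HTTP Basic authorization header from a token, using an empty user name and the PAT as the password. The function name is hypothetical, and the token value is a placeholder; the scope itself is chosen when the PAT is created, not in the request.

```python
import base64


def pat_authorization_header(pat):
    """Build a Basic auth header with an empty user name and the PAT as password."""
    # The credential string is ":<PAT>", base64-encoded.
    token = base64.b64encode(f":{pat}".encode("ascii")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


# Placeholder value for illustration only; never hard-code a real token.
headers = pat_authorization_header("example-pat-value")
```

The resulting dictionary can be passed as request headers by whichever HTTP client you use. A narrowly scoped PAT limits what a leaked header like this can be used for.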

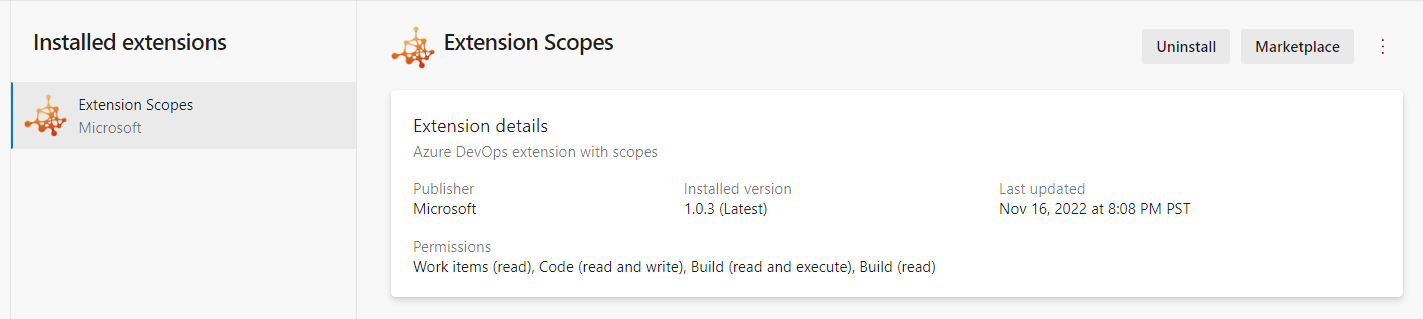

Extensions should display their Scopes

When installing extensions to your Azure DevOps collection, you can review the permissions the extension needs as part of the installation. However, once they are installed, the extension permissions are not visible in the extension settings. This has posed a challenge for administrators needing to perform a periodic review of installed extensions. Now, we have added the extension permissions to extension settings to help you review them and make an informed decision on whether to keep the extensions or not.

Boards

Last Accessed column on Delivery Plans page

The Delivery Plans directory page provides a list of the plans defined for your project. You could sort by: Name, Created By, Description, Last configured, or Favorites. With this update, we have added a Last accessed column to the directory page. This provides visibility on when a Delivery Plan was last opened and looked at. As a result, it is easy to identify the plans that are no longer used and can be deleted.

Visualize all dependencies on Delivery Plans

Delivery Plans has always provided the ability to see the dependencies across work items. You can visualize the dependency lines, one by one. With this release, we improved the experience by allowing you to see all the dependency lines across all the work items on the screen. Simply click the dependencies toggle button on the top right of your delivery plan. Click it again to turn off the lines.

New work item revision limits

Over the past few years, we have seen project collections with automated tools generate tens of thousands of work item revisions. This creates issues with performance and usability on the work item form and the reporting REST APIs. To mitigate the issue, we have implemented a work item revision limit of 10,000 to the Azure DevOps Service. The limit only affects updates using the REST API, not the work item form.

Click here to learn more about the revision limit and how to handle it in your automated tooling.

Increase Delivery Plans team limit from 15 to 20

Delivery Plans lets you view multiple backlogs and multiple teams across your project collection. Previously, you could view 15 team backlogs, including a mix of backlogs and teams from different projects. With this release we increased the maximum limit from 15 to 20.

Fixed bug in Reporting Work Item Links Get API

We fixed a bug in the Reporting Work Item Links Get API to return the correct remoteUrl value for System.LinkTypes.Remote.Related link types. Before this fix, we were returning the wrong project collection name and a missing project id.

Create temporary query REST endpoint

We have seen several instances of extension authors attempting to run unsaved queries by passing the Work Item Query Language (WIQL) statement through the querystring. This works fine unless you have a large WIQL statement that reaches the browser limits on querystring length. To solve this, we have created a new REST endpoint to allow tool authors to generate a temporary query. Using the id from the response to pass via querystring eliminates this problem.

Learn more at the temp queries REST API documentation page.
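To make the querystring problem concrete, the sketch below estimates the length of a URL that carries a WIQL statement inline and flags when a temporary query would be the safer route. It is only an illustration: the `wiql` parameter name, the base URL, and the 2,000-character threshold are assumptions, not values taken from the product, and real limits vary by browser and server.

```python
from urllib.parse import urlencode

# Assumed limit for illustration only; actual limits depend on the browser and server.
ASSUMED_MAX_URL_LENGTH = 2000


def url_with_inline_wiql(base_url, wiql):
    """Build a URL that carries the whole WIQL statement in the query string."""
    # "wiql" is a hypothetical parameter name used only for this sketch.
    return f"{base_url}?{urlencode({'wiql': wiql})}"


def needs_temporary_query(base_url, wiql, max_length=ASSUMED_MAX_URL_LENGTH):
    """Return True when the inline URL would exceed the assumed length limit."""
    return len(url_with_inline_wiql(base_url, wiql)) > max_length
```

When `needs_temporary_query` returns `True`, a tool would create a temporary query through the new endpoint and pass only the returned id in the querystring.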

Batch delete API

Currently, the only way to remove work items from the recycle bin is using this REST API to delete one at a time. This can be a slow process and is subject to rate limiting when trying to do any kind of mass clean up. In response, we have added a new REST API endpoint to delete and/or destroy work items in batch.

@CurrentIteration macro in Delivery Plans

With this update, we have added support for the @CurrentIteration macro for styles in Delivery Plans. This macro will let you get the current iteration from the team context of each row in your plan.

Card resize logic in Delivery Plans

Not everyone uses target date and/or start date when tracking Features and Epics. Some choose to use a combination of dates and iteration path. In this release, we improved the logic to appropriately set the iteration path and date field combinations depending on how they are being used.

For example, if target date is not being used and you resize the card, the new iteration path is set instead of updating the target date.

Batch update improvements

We made several changes to the 7.1 version of the work item batch update API. These include minor performance improvements and the handling of partial failures: if one patch fails but the others do not, the others will complete successfully.

Click here to learn more about the batch update REST API.

Batch delete API

This new REST API endpoint to delete and/or destroy work items in batch is now publicly available. Click here to learn more.

Prevent editing of shareable picklists fields

Custom fields are shared across processes. This can create a problem for picklist fields because we allow process admins to add or remove values from the field. When doing so, the changes affect that field on every process using it.

To solve this problem, we have added the ability for the collection admin to "lock" a field from being edited. When the picklist field is locked, the local process admin cannot change the values of that picklist. They can only add or remove the field from the process.

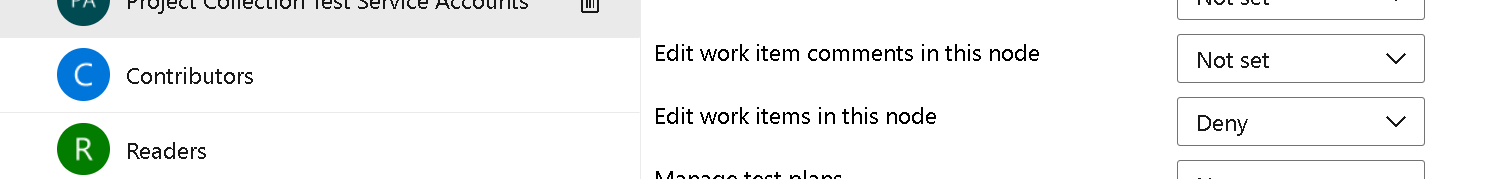

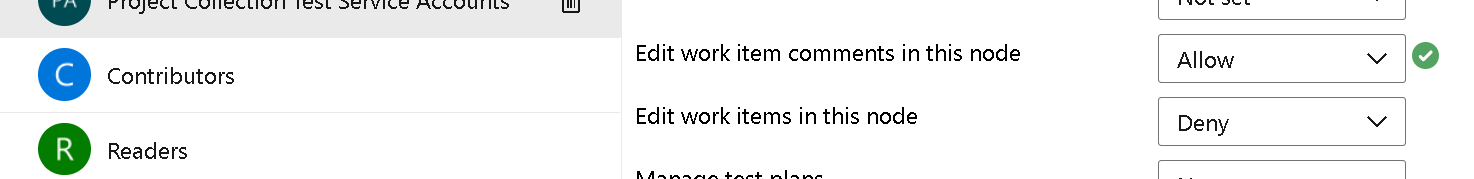

New save comments permission

The ability to save only work item comments has been a top request in the developer community. We are excited to let you know that we have implemented this feature. To begin, let's review the most common scenario:

"I want to prevent some users from editing work item fields but allow them to contribute to the discussion."

To accomplish this, you need to go to Project Settings > Project Configuration > Area Path. Then select the area path of choice and click Security.

Notice the new permission "Edit work item comments in this node". By default, the permission is set to Not set, meaning the work item will behave exactly as it did before. To allow a group or users to save comments, select that group or those users and change the permission to Allow.

When the user opens the work item form in this area path, they will be able to add comments, but unable to update any of the other fields.

We hope you love this feature as much as we do. As always, if you have any feedback or suggestions, please let us know.

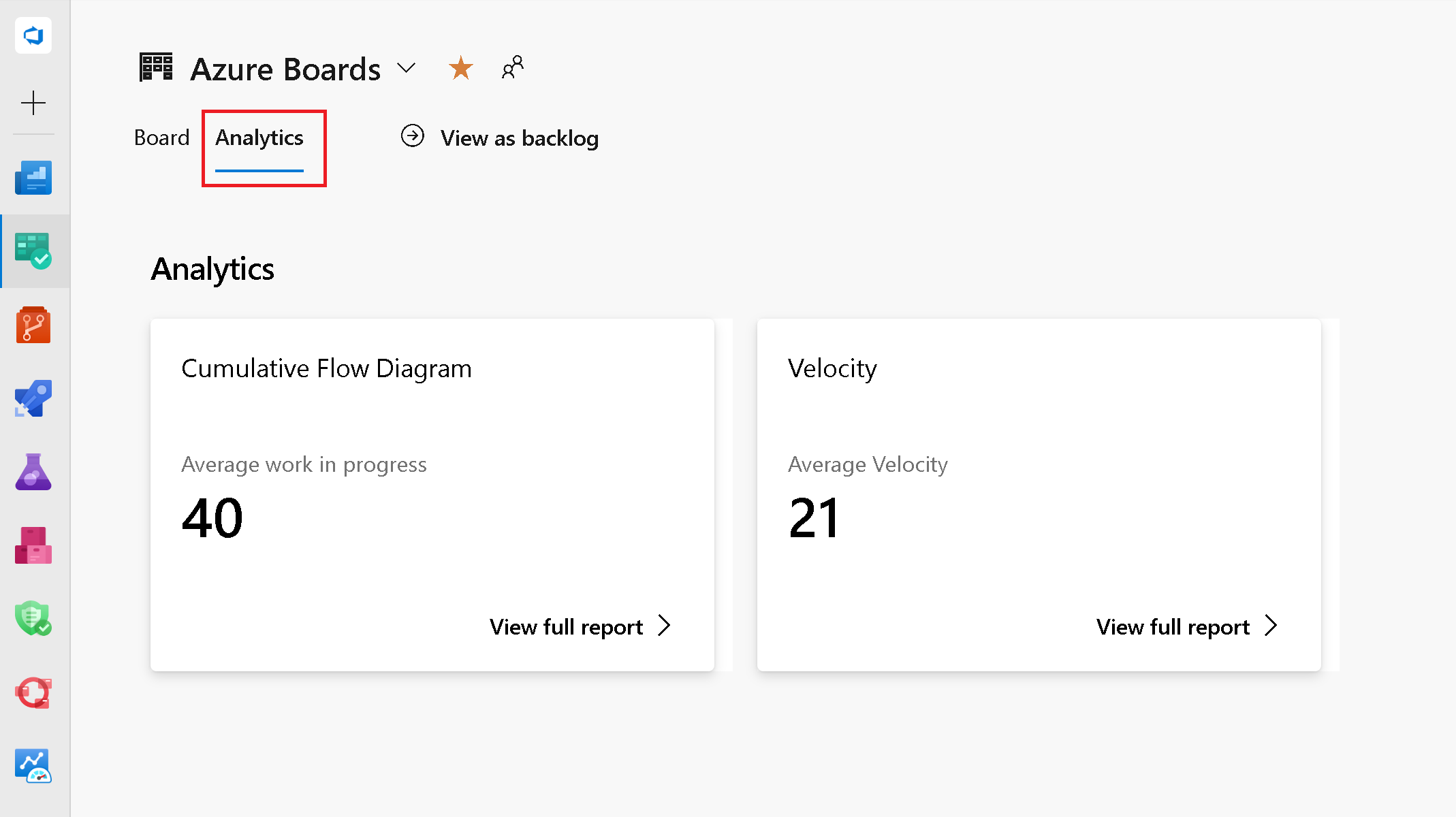

Interactive boards reports

Interactive reports, located in the Boards hub, have been accessible for several years in our hosted version of the product. They replace the older Cumulative Flow Diagram, Velocity, and Sprint Burndown charts. With this release, we are making them available in Azure DevOps Server.

To view these charts, click the Analytics tab located on the Kanban Board, Backlog, and Sprints pages.

Repos

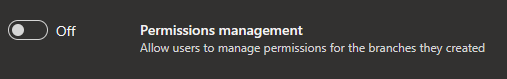

Removing "Edit policies" permission to branch creator

Previously, when you created a new branch, you were granted permission to edit policies on that branch. With this update, we are changing the default behavior to not grant this permission even if the "Permission management" setting is switched on for the repository.

You will need the "Edit policies" permission granted explicitly (either manually or through REST API) by security permission inheritance or through a group membership.

Pipelines

Current project set as default scope for build access token in classic pipelines

Azure Pipelines uses job access tokens to access other resources in Azure DevOps at run time. A job access token is a security token that is dynamically generated by Azure Pipelines for each job at run time. Previously, when creating a new classic pipeline, the default value for the access token was set to Project Collection. With this update, we are setting the job authorization scope to current project as the default when creating a new classic pipeline.

You can find more details about job access tokens in the Access repositories, artifacts, and other resources documentation.

Change in the default scope of access tokens in classic build pipelines

To improve the security of classic build pipelines, when creating a new one, the build job authorization scope will be Project, by default. Until now, it used to be Project Collection. Read more about Job access tokens. This change does not impact any of the existing pipelines. It only impacts new classic build pipelines that you create from this point on.

Azure Pipelines support for San Diego, Tokyo & Utah releases of ServiceNow

Azure Pipelines has an existing integration with ServiceNow. The integration relies on an app in ServiceNow and an extension in Azure DevOps. We have now updated the app to work with the San Diego, Tokyo & Utah versions of ServiceNow. To ensure that this integration works, upgrade to the new version of the app (4.215.2) from the ServiceNow store.

For more information, see the Integrate with ServiceNow Change Management documentation.

Use proxy URLs for GitHub Enterprise integration

Azure Pipelines integrates with on-premises GitHub Enterprise Servers to run continuous integration and PR builds. In some cases, the GitHub Enterprise Server is behind a firewall and requires the traffic to be routed through a proxy server. To support this scenario, the GitHub Enterprise Server service connections in Azure DevOps lets you configure a proxy URL. Previously, not all the traffic from Azure DevOps was being routed through this proxy URL. With this update, we are ensuring that we route the following traffic from Azure Pipelines to go through the proxy URL:

- Get branches

- Get pull request information

- Report build status

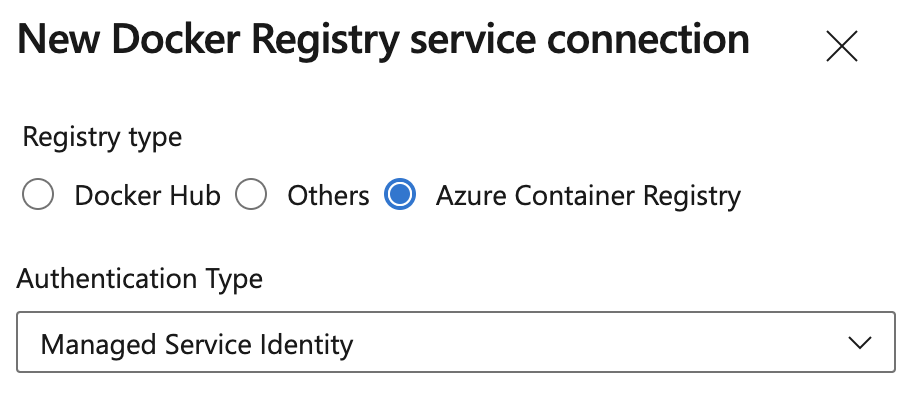

Container Registry service connections can now use Azure Managed Identities

You can use a System-assigned Managed Identity when creating Docker Registry service connections for Azure Container Registry. This allows you to access Azure Container Registry using a Managed Identity associated with a self-hosted Azure Pipelines agent, eliminating the need to manage credentials.

Note

The Managed Identity used to access Azure Container Registry will need the appropriate Azure Role Based Access Control (RBAC) assignment, e.g. AcrPull or AcrPush role.

Automatic tokens created for Kubernetes Service Connection

Since Kubernetes 1.24, tokens were no longer created automatically when creating a new Kubernetes Service Connection. We have added this functionality back. However, we recommend using the Azure Service connection when accessing AKS. To learn more, see the Service Connection guidance for AKS customers using Kubernetes tasks blog post.

Kubernetes tasks now support kubelogin

We have updated the KubernetesManifest@1, HelmDeploy@0, Kubernetes@1 and AzureFunctionOnKubernetes@1 tasks to support kubelogin. This allows you to target Azure Kubernetes Service (AKS) configured with Azure Active Directory integration.

Kubelogin isn't pre-installed on Hosted images. To make sure the above-mentioned tasks use kubelogin, install it by inserting the KubeloginInstaller@0 task before the task that depends on it:

    - task: KubeloginInstaller@0

    - task: HelmDeploy@0
      # arguments do not need to be modified to use kubelogin

Experience improvements to pipeline permissions

We've improved the experience around managing pipeline permissions to make the permissions system remember if a pipeline had previously used a protected resource, such as a service connection.

In the past, if you checked off "Grant access permission to all pipelines" when you created a protected resource, but then you restricted access to the resource, your pipeline needed a new authorization to use the resource. This behavior was inconsistent with subsequent opening and closing access to the resource, where a new authorization wasn't required. This is now fixed.

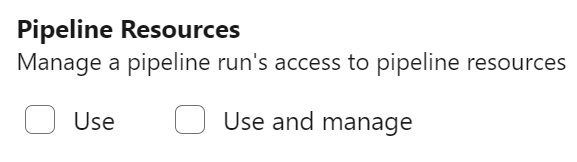

New PAT Scope for managing pipeline authorization and approvals and checks

To limit damage done by leaking a PAT token, we've added a new PAT scope, named Pipeline Resources. You can use this PAT scope when managing pipeline authorization using a protected resource, such as a service connection, or to manage approvals and checks for that resource.

The following REST API calls support the new PAT scope as follows:

- Update an Approval supports scope Pipeline Resources Use

- Manage Checks supports scope Pipeline Resources Use and Manage

- Update Pipeline Permissions For Resources supports scope Pipeline Resources Use and Manage

- Authorize Definition Resources supports scope Pipeline Resources Use and Manage

- Authorize Project Resources supports scope Pipeline Resources Use and Manage

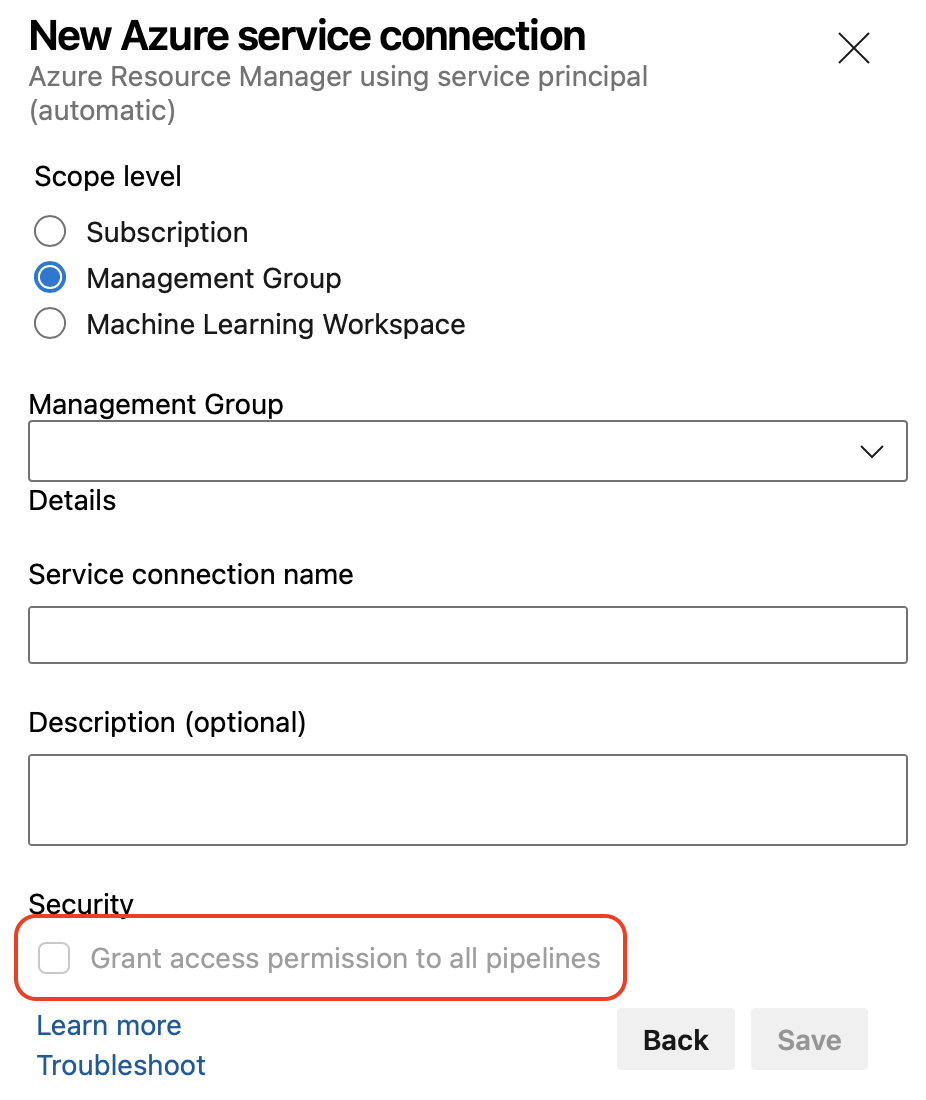

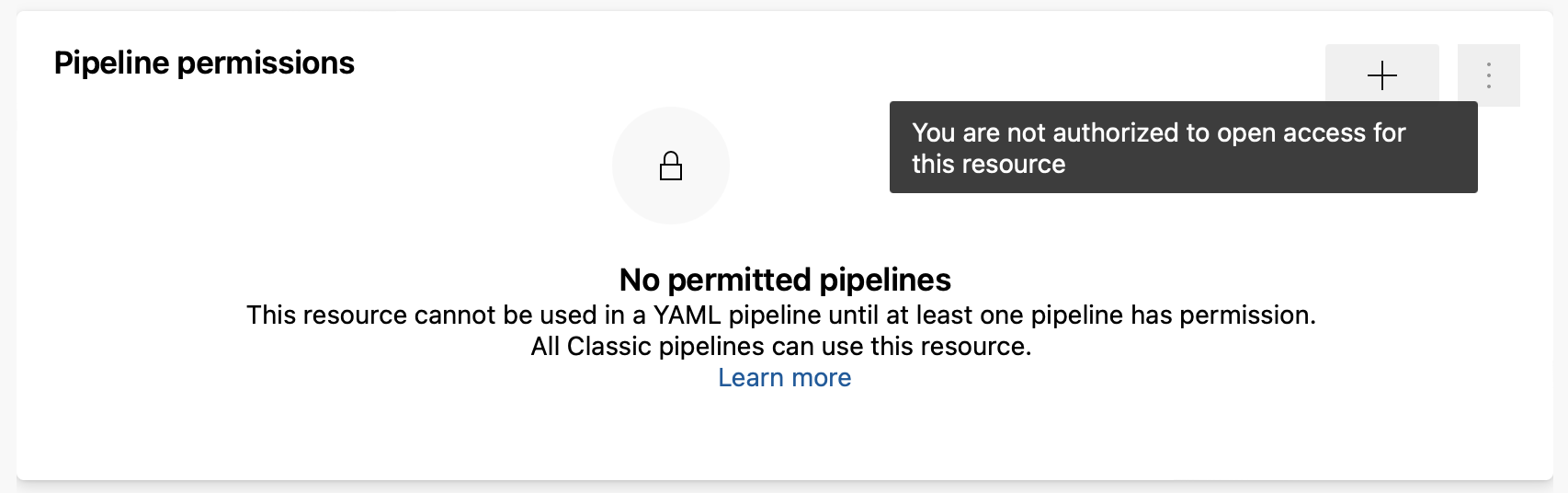

Restrict opening protected resources to resource administrators

To improve the security of resources that are critical to your ability to securely build and deploy your applications, Azure Pipelines now requires resource-type administrator role when opening up access to a resource to all pipelines.

For example, a general Service Connections Administrator role is required to allow any pipeline to use a service connection. This restriction applies when creating a protected resource or when editing its Security configuration.

In addition, if you don't have sufficient rights when creating a service connection, the Grant access permission to all pipelines option is disabled.

Also, when trying to open access to an existing resource without sufficient rights, you'll get a "You are not authorized to open access for this resource" message.


Pipelines REST API Security Improvements

The majority of the REST APIs in Azure DevOps use scoped PAT tokens. However, some of them require fully-scoped PAT tokens. In other words, you have to create a PAT token by selecting 'Full access' in order to use some of these APIs. Such tokens pose a security risk since they can be used to call any REST API. We have been making improvements across Azure DevOps to remove the need for fully-scoped tokens by incorporating each REST API into a specific scope. As part of this effort, the REST API to update pipeline permissions for a resource now requires a specific scope. The scope depends on the type of resource being authorized for use:

- Code (read, write, and manage) for resources of type repository

- Agent Pools (read, manage) or Environment (read, manage) for resources of type queue and agentpool

- Secure Files (read, create, and manage) for resources of type securefile

- Variable Groups (read, create and manage) for resources of type variablegroup

- Service Endpoints (read, query and manage) for resources of type endpoint

- Environment (read, manage) for resources of type environment

To bulk edit pipelines permissions, you will still need a fully-scoped PAT token. To learn more about updating Pipeline permissions for resources, see the Pipeline Permissions - Update Pipeline Permissions For Resources documentation.
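The resource-type-to-scope relationship described above can be captured as a simple lookup, which may be handy when deciding which PAT to create for an automation script. This is only a sketch: the dictionary and function names are hypothetical, and the scope labels are copied from the list above rather than from any API.

```python
# Scope required to update pipeline permissions, keyed by resource type.
# Labels are taken from the release notes; the names here are illustrative.
REQUIRED_SCOPE_BY_RESOURCE_TYPE = {
    "repository": "Code (read, write, and manage)",
    "queue": "Agent Pools (read, manage) or Environment (read, manage)",
    "agentpool": "Agent Pools (read, manage) or Environment (read, manage)",
    "securefile": "Secure Files (read, create, and manage)",
    "variablegroup": "Variable Groups (read, create and manage)",
    "endpoint": "Service Endpoints (read, query and manage)",
    "environment": "Environment (read, manage)",
}


def required_scope(resource_type):
    """Return the PAT scope label for a resource type, ignoring case and spaces."""
    key = resource_type.strip().lower()
    if key not in REQUIRED_SCOPE_BY_RESOURCE_TYPE:
        raise ValueError(f"Unknown resource type: {resource_type!r}")
    return REQUIRED_SCOPE_BY_RESOURCE_TYPE[key]
```

For example, `required_scope("endpoint")` returns the Service Endpoints scope label, while an unrecognized type raises `ValueError` instead of silently falling back to a full-access token.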

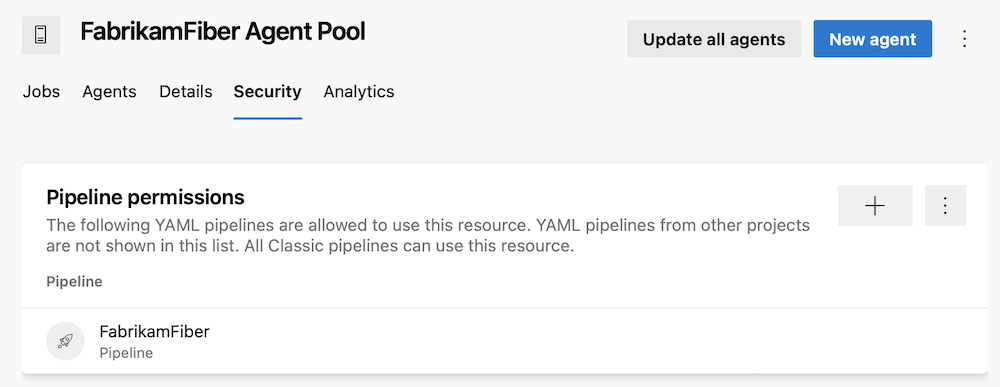

Fine-grained access management for agent pools

Agent pools allow you to specify and manage the machines on which your pipelines run.

Previously, if you used a custom agent pool, managing which pipelines can access it was coarse-grained. You could allow all pipelines to use it, or you could require each pipeline ask for permission. Unfortunately, once you granted a pipeline access permission to an agent pool, you could not revoke it using the pipelines UI.

Azure Pipelines now provides a fine-grained access management for agent pools. The experience is similar to the one for managing pipeline permissions for Service Connections.

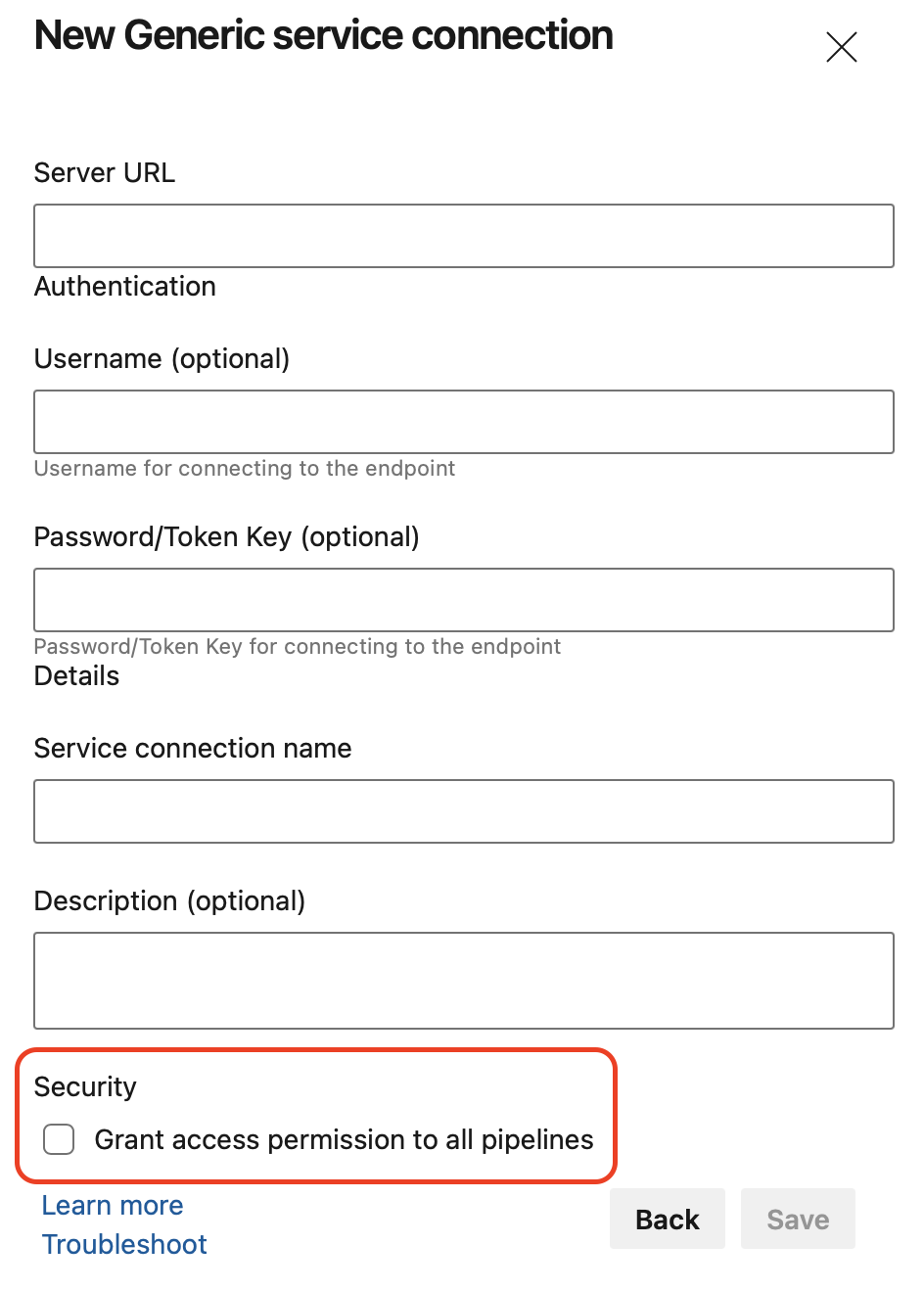

Prevent granting all pipelines access to protected resources

When you create a protected resource such as a service connection or an environment, you have the option to select the Grant access permission to all pipelines checkbox. Until now, this option was checked by default.

While this makes it easier for pipelines to use new protected resources, the downside is that it makes it easy to accidentally grant too many pipelines the right to access the resource.

To promote a secure-by-default choice, Azure DevOps now leaves the checkbox unticked.

Ability to disable masking for short secrets

Azure Pipelines masks secrets in logs. Secrets can be variables marked as secret, variables from variable groups that are linked to Azure Key Vault or elements of a Service Connection marked as secret by the Service Connection provider.

All occurrences of a secret value are masked. Masking short secrets (e.g., '1', '2', 'Dev') makes it easy to guess their values, for example in a date: 'Jan 3, 202***'

It's now clear that '3' is a secret. In such cases, you may prefer not to mask the secret at all. If you cannot avoid marking the value as secret (e.g., the value is taken from Key Vault), you can set the AZP_IGNORE_SECRETS_SHORTER_THAN knob to a value of up to 4.
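The effect of masking short secrets, and of skipping them, can be illustrated with a small sketch. This is not the agent's implementation: the function and its `ignore_shorter_than` parameter are hypothetical stand-ins for the behavior of the AZP_IGNORE_SECRETS_SHORTER_THAN knob, and the exact comparison the agent uses is an assumption here.

```python
def mask_secrets(line, secrets, ignore_shorter_than=0):
    """Replace each secret in a log line with '***'.

    Secrets shorter than ignore_shorter_than are left unmasked
    (assumed semantics, for illustration only).
    """
    # Mask longer secrets first so a short secret cannot split a longer one.
    for secret in sorted(secrets, key=len, reverse=True):
        if secret and len(secret) >= ignore_shorter_than:
            line = line.replace(secret, "***")
    return line


# Masking the one-character secret "3" reveals where it occurs in the date.
masked = mask_secrets("Jan 3, 2023", ["3"])

# With a threshold of 4, the short secret is ignored and the line is unchanged.
unmasked = mask_secrets("Jan 3, 2023", ["3"], ignore_shorter_than=4)
```

In the first call every "3" is replaced, so a reader can infer the secret from the surrounding text. In the second call the short value is skipped, while longer secrets passed in the same list would still be masked.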

Node runner download task

When adopting agent releases that exclude the Node 6 task runner, you may have an occasional need to run tasks that have not been updated to use a newer Node runner. For this scenario, we provide a method to still use tasks that depend on end-of-life Node runners; see the Node runner guidance blog post.

The below task is a method to install the Node 6 runner just-in-time, so an old task can still execute:

    steps:
    - task: NodeTaskRunnerInstaller@0
      inputs:
        runnerVersion: 6

Updated TFX Node runner validation

Task authors use the extension packaging tool (TFX) to publish extensions. TFX has been updated to perform validations on Node runner versions, see Node runner guidance blog post.

Extensions that contain tasks using the Node 6 runner will see this warning:

Task <TaskName> is dependent on a task runner that is end-of-life and will be removed in the future. Authors should review Node upgrade guidance: https://aka.ms/node-runner-guidance.

Instructions for manual pre-installation of Node 6 on Pipeline agents

If you use the pipeline- agent feed, you do not have Node 6 included in the agent. In some cases, where a Marketplace task is still dependent on Node 6 and the agent is not able to use the NodeTaskRunnerInstaller task (e.g. due to connectivity restrictions), you will need to pre-install Node 6 independently. To accomplish this, check out the instructions on how to install the Node 6 runner manually.

Pipeline task changelog

We now publish changes to Pipeline tasks to this changelog. This contains the complete list of changes made to built-in Pipeline tasks. We have retroactively published prior changes, so the changelog provides a historical record of task updates.

Release tasks use Microsoft Graph API

We have updated our release tasks to use the Microsoft Graph API. This removes the usage of the AAD Graph API from our tasks.

Windows PowerShell task performance improvement

You can use tasks to define automation in a pipeline. One of these tasks is the PowerShell@2 utility task that lets you execute PowerShell scripts in your pipeline. To use PowerShell script to target an Azure environment, you can use the AzurePowerShell@5 task. Some PowerShell commands that can print progress updates, for example Invoke-WebRequest, now execute faster. The improvement is more significant if you have many of these commands in your script, or when they are long running. With this update, the progressPreference property of the PowerShell@2 and AzurePowerShell@5 tasks is now set to SilentlyContinue by default.

Pipelines Agent v3 on .NET 6

The Pipeline agent has been upgraded from .NET Core 3.1 to .NET 6 as its runtime. This introduces native support for Apple Silicon as well as Windows Arm64.

Using .NET 6 impacts system requirements for the agent. Specifically, we drop support for the following Operating Systems: CentOS 6, Fedora 29-33, Linux Mint 17-18, Red Hat Enterprise Linux 6. See documentation of Agent software version 3.

This script can be used to identify pipelines that use agents with unsupported operating systems.

Important

Please be aware that agents running on any of the above operating systems will either no longer update or fail once we roll out the .NET 6 based agent.

Specify agent version in Agent VM extension

Azure VMs can be included in Deployment Groups using a VM Extension. The VM extension has been updated to optionally specify the desired agent version to be installed:

    "properties": {
      ...
      "settings": {
        ...
        "AgentMajorVersion": "auto|2|3",
        ...
      },
      ...
    }

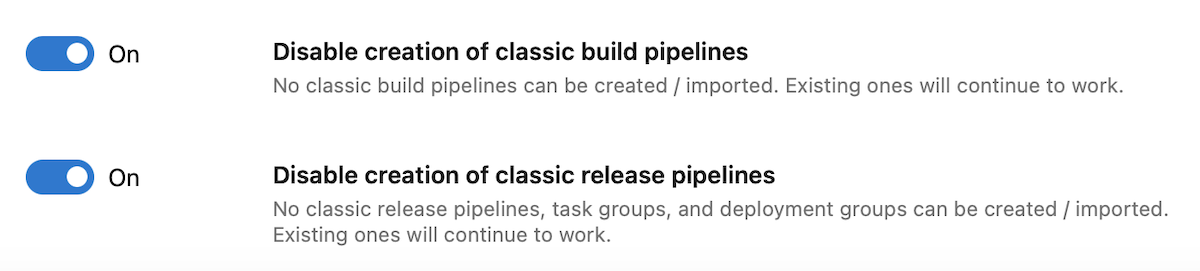

New toggles to control creation of classic pipelines

Azure DevOps now lets you ensure your project collection uses only YAML pipelines, by disabling the creation of classic build pipelines, classic release pipelines, task groups, and deployment groups. Your existing classic pipelines will continue to run, and you'll be able to edit them, but you won't be able to create new ones.


You can disable creation of classic pipelines at the project collection level or project level by turning on the corresponding toggles. The toggles can be found in Project / Project Settings -> Pipelines -> Settings. There are two toggles: one for classic build pipelines and one for classic release pipelines, deployment groups, and task groups.

The toggles are off by default, and you need admin rights to change them. If a toggle is on at the project collection level, the restriction is enforced for all projects. Otherwise, each project is free to choose whether to enforce it.

When disabling the creation of classic pipelines is enforced, REST APIs related to creating classic pipelines, task groups, and deployment groups will fail. REST APIs that create YAML pipelines will work.
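The way the collection-level and project-level toggles combine can be expressed as a one-line rule. The sketch below is purely illustrative; the function and parameter names are hypothetical and do not correspond to any product API.

```python
def classic_creation_disabled(collection_toggle_on, project_toggle_on):
    """Return True when creating classic pipelines is blocked for a project.

    A collection-level toggle applies to every project; otherwise the
    project's own toggle decides.
    """
    return collection_toggle_on or project_toggle_on


# Collection-level enforcement overrides a project that left its toggle off.
blocked_everywhere = classic_creation_disabled(True, False)

# With the collection toggle off, only projects that opted in are restricted.
blocked_by_project = classic_creation_disabled(False, True)
allowed = classic_creation_disabled(False, False)
```

The same rule applies separately to each of the two toggles (classic build pipelines, and classic release pipelines with deployment groups and task groups).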

Updates to "Run stage state changed" service hook event

Service hooks let you run tasks on other services when events happen in your project in Azure DevOps. Run stage state changed is one of these events. The Run stage state changed event must contain information about the run, including the name of the pipeline. Previously, it only included the id and name of the run. With this update, we changed the event to include the missing information.

Service hook for job state change

Service hooks allow you to react to events related to state changes in your pipeline runs. Up until now, you could configure service hooks for pipeline run and stage state changes.

Starting now, you can configure service hooks that fire when the state of a job in your pipeline run changes. The payload structure of the new event is shown in the following example.

    {
        "subscriptionId": "8d91ad83-1db5-4d43-8c5a-9bb2239644b1",
        "notificationId": 29,
        "id": "fcad4962-f3a6-4fbf-9653-2058c304503f",
        "eventType": "ms.vss-pipelines.job-state-changed-event",
        "publisherId": "pipelines",
        "message":
        {
            "text": "Run 20221121.5 stage Build job Compile succeeded.",
            "html": "Run 20221121.5 stage Build job <a href=\"https://dev.azure.com/fabrikamfiber/fabrikamfiber-viva/_build/results?buildId=2710088\">Compile</a> succeeded.",
            "markdown": "Run 20221121.5 stage Build job [Compile](https://dev.azure.com/fabrikamfiber/fabrikamfiber-viva/_build/results?buildId=2710088) succeeded."
        },
        "detailedMessage":
        {
            "text": "Run 20221121.5 stage Build job Compile succeeded.",
            "html": "Run 20221121.5 stage Build job <a href=\"https://dev.azure.com/fabrikamfiber/fabrikamfiber-viva/_build/results?buildId=2710088\">Compile</a> succeeded.",
            "markdown": "Run 20221121.5 stage Build job [Compile](https://dev.azure.com/fabrikamfiber/fabrikamfiber-viva/_build/results?buildId=2710088) succeeded."
        },
        "resource":
        {
            "job":
            {
                "_links":
                {
                    "web":
                    {
                        "href": "https://dev.azure.com/fabrikamfiber/fabrikamfiber-viva/_build/results?buildId=2710088"
                    },
                    "pipeline.web":
                    {
                        "href": "https://dev.azure.com/fabrikamfiber/fabrikamfiber-viva/_build/definition?definitionId=4647"
                    }
                },
                "id": "e87e3d16-29b0-5003-7d86-82b704b96244",
                "name": "Compile",
                "state": "completed",
                "result": "succeeded",
                "startTime": "2022-11-21T16:10:28.49Z",
                "finishTime": "2022-11-21T16:10:53.66Z"
            },
            "stage": { ... },
            "run": { ... },
            "pipeline": { ... },
            "repositories": [ ... ]
        },
        "resourceVersion": "5.1-preview.1",
        "createdDate": "2022-11-21T16:11:02.9207334Z"
    }

Run, stage, and job state change service hook events now contain a repositories property that lists the Azure Repos consumed by the pipeline run. For example:

    "repositories": [
      {
        "type": "Git",
        "change": {
          "author": {
            "name": "Fabrikam John",
            "email": "john@fabrikamfiber.com",
            "date": "2022-11-11T15:09:21Z"
          },
          "committer": {
            "name": "Fabrikam John",
            "email": "john@fabrikamfiber.com",
            "date": "2022-11-11T15:09:21Z"
          },
          "message": "Added Viva support"
        },
        "url": "https://fabrikamfiber@dev.azure.com/fabrikamfiber/fabrikamfiber-viva/_git/fabrikamfiber"
      }
    ]

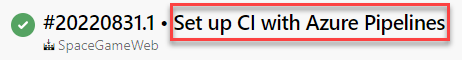

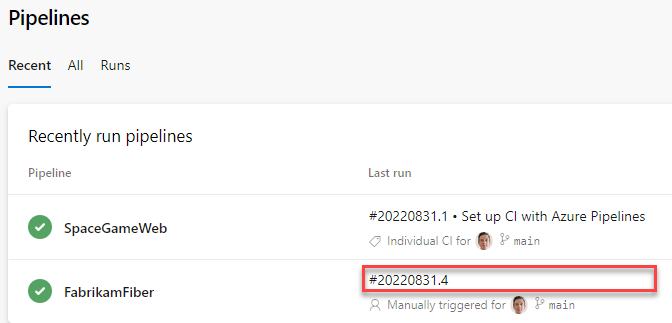

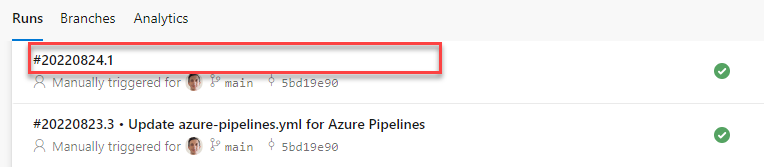

Disable showing the last commit message for a pipeline run

Previously, the Pipelines UI showed the last commit message when displaying a pipeline run.

This message can be confusing, for example, when your YAML pipeline's code lives in a repository different from the one that holds the code it's building. We heard your feedback from the Developer Community asking us for a way to enable/disable appending the latest commit message to the title of every pipeline run.

With this update, we've added a new YAML property, named appendCommitMessageToRunName, that lets you do exactly that. By default, the property is set to true. When you set it to false, the pipeline run will only display the BuildNumber.
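As a minimal sketch, the property can be set at the root of the pipeline's YAML file. The trigger branch, pool name, and script step below are illustrative assumptions and not part of this release.

```yaml
# Root-level setting: show only the build number in the run title,
# without appending the latest commit message.
appendCommitMessageToRunName: false

trigger:
- main              # illustrative branch name

pool: Default       # assumption: an agent pool named Default exists

steps:
- script: echo "Running build $(Build.BuildNumber)"
```

Removing the property, or setting it to true, keeps the previous behavior.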

Increased Azure Pipelines limits to align with the 4 MB maximum Azure Resource Manager (ARM) template size

You can use the Azure Resource Manager Template Deployment task to create Azure infrastructure. In response to your feedback, we have increased the Azure Pipelines integration limit from 2 MB to 4 MB. This aligns with the ARM template maximum size of 4 MB and resolves size constraints when integrating large templates.

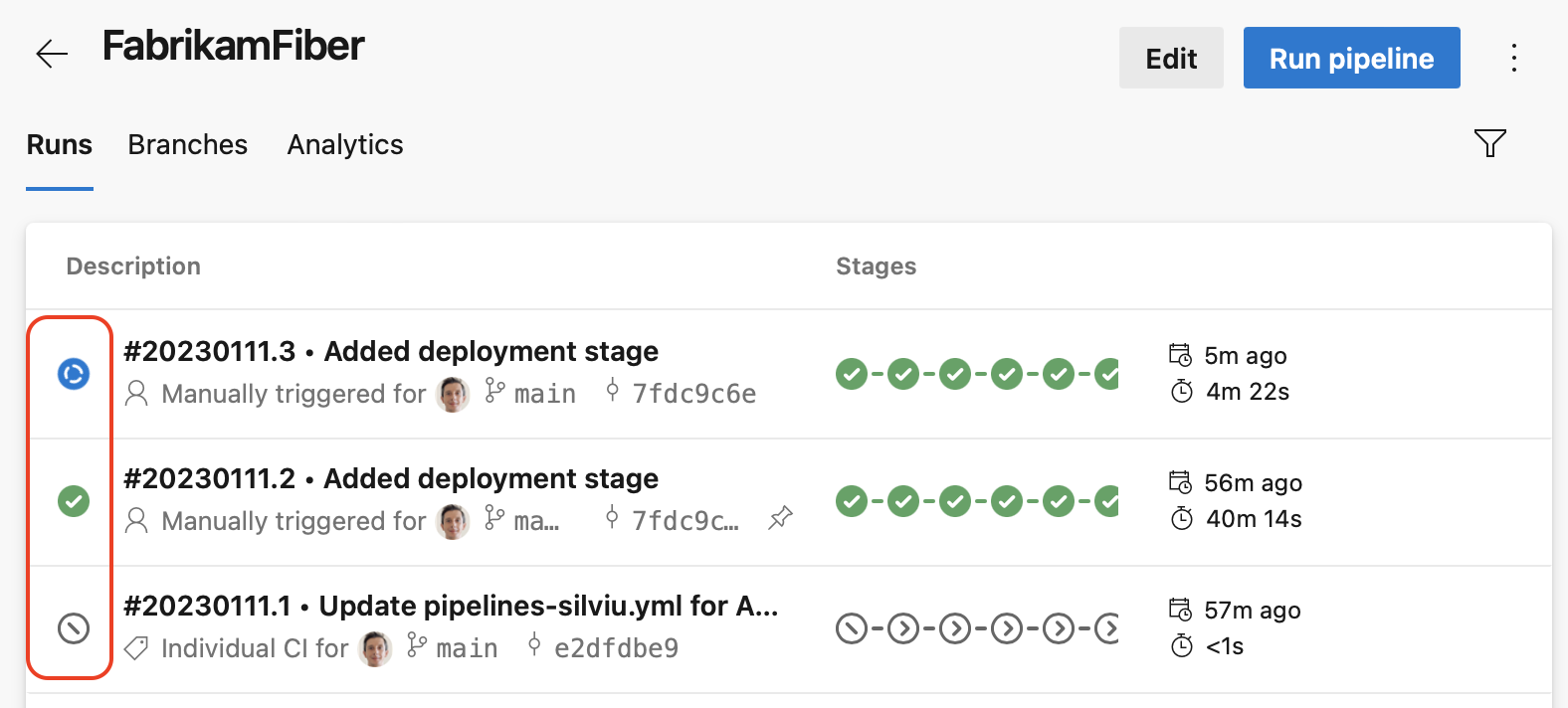

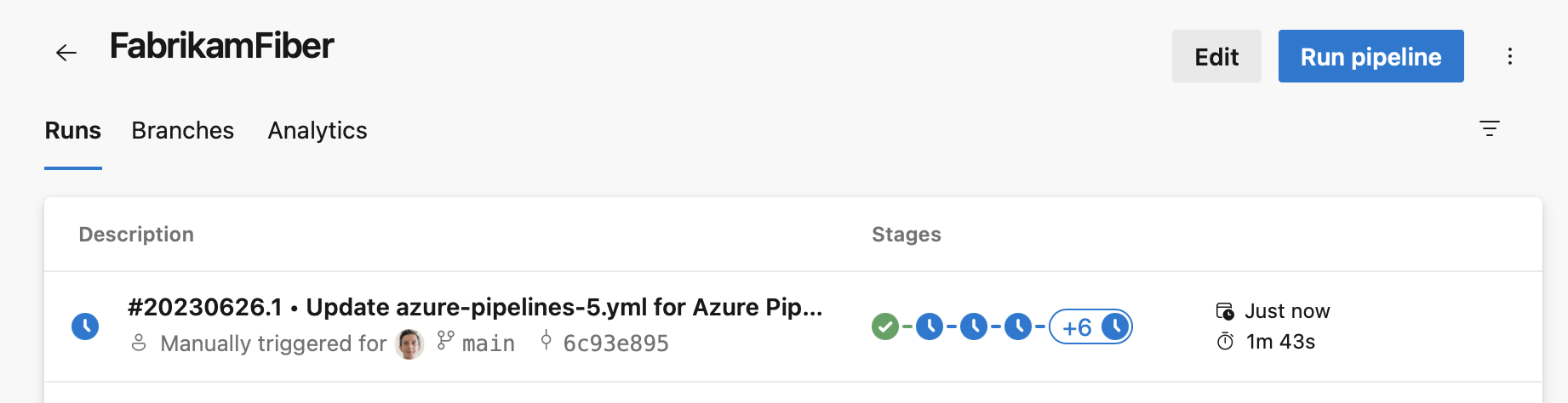

Pipeline run status overview icon

With this release, we're making it easier to know the overall status of a pipeline run.

For YAML pipelines that have many stages, it used to be hard to tell whether a pipeline run was still running or had finished and, if it had finished, what its overall state was: successful, failed, or canceled. We fixed this issue by adding a run status overview icon.

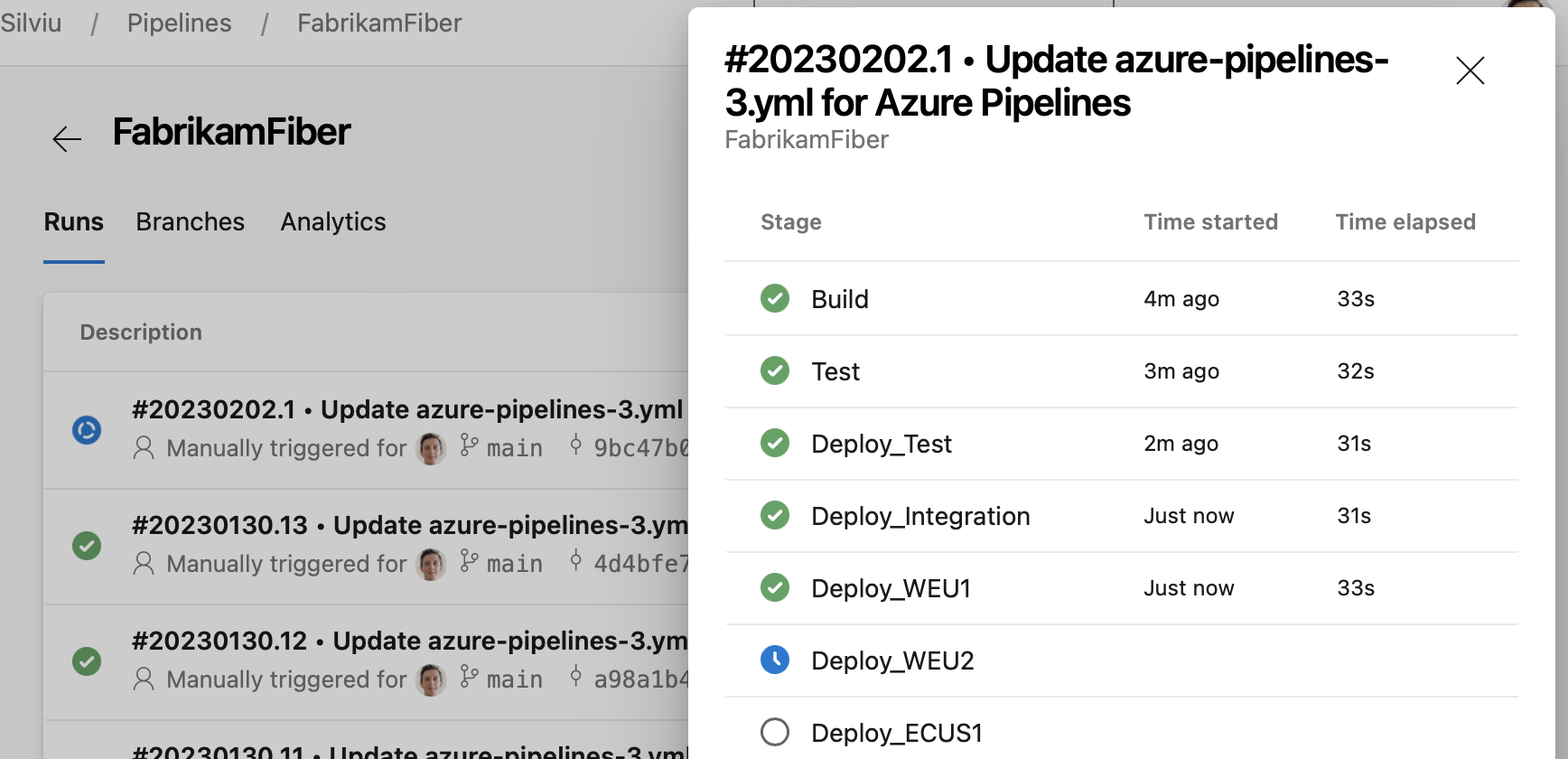

Pipeline stages side panel

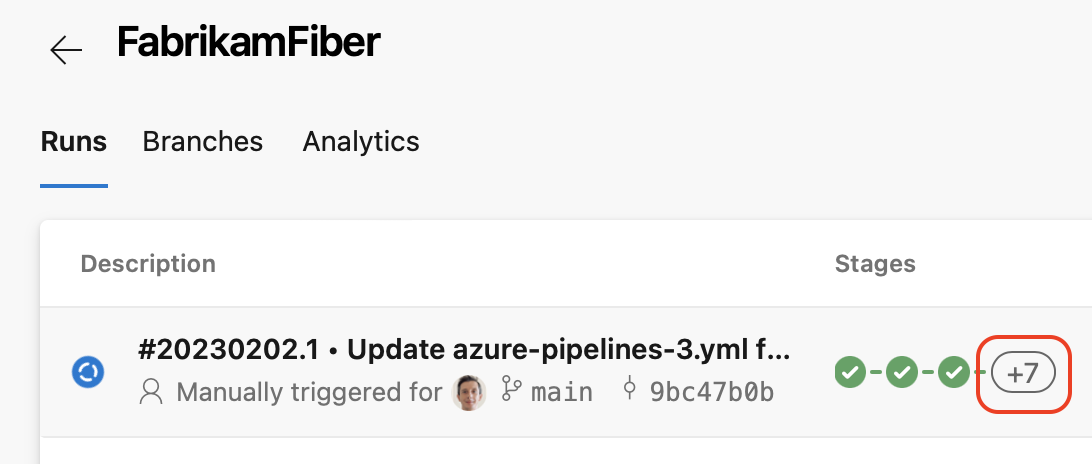

YAML pipelines can have tens of stages, and not all of them will fit on your screen. While the pipeline run overview icon tells you the overall state of your run, it is still hard to know which stage failed, or which stage is still running, for example.

In this release, we've added a pipeline stages side panel that lets you quickly see the state of all your stages. You can then click on a stage and get directly to its logs.

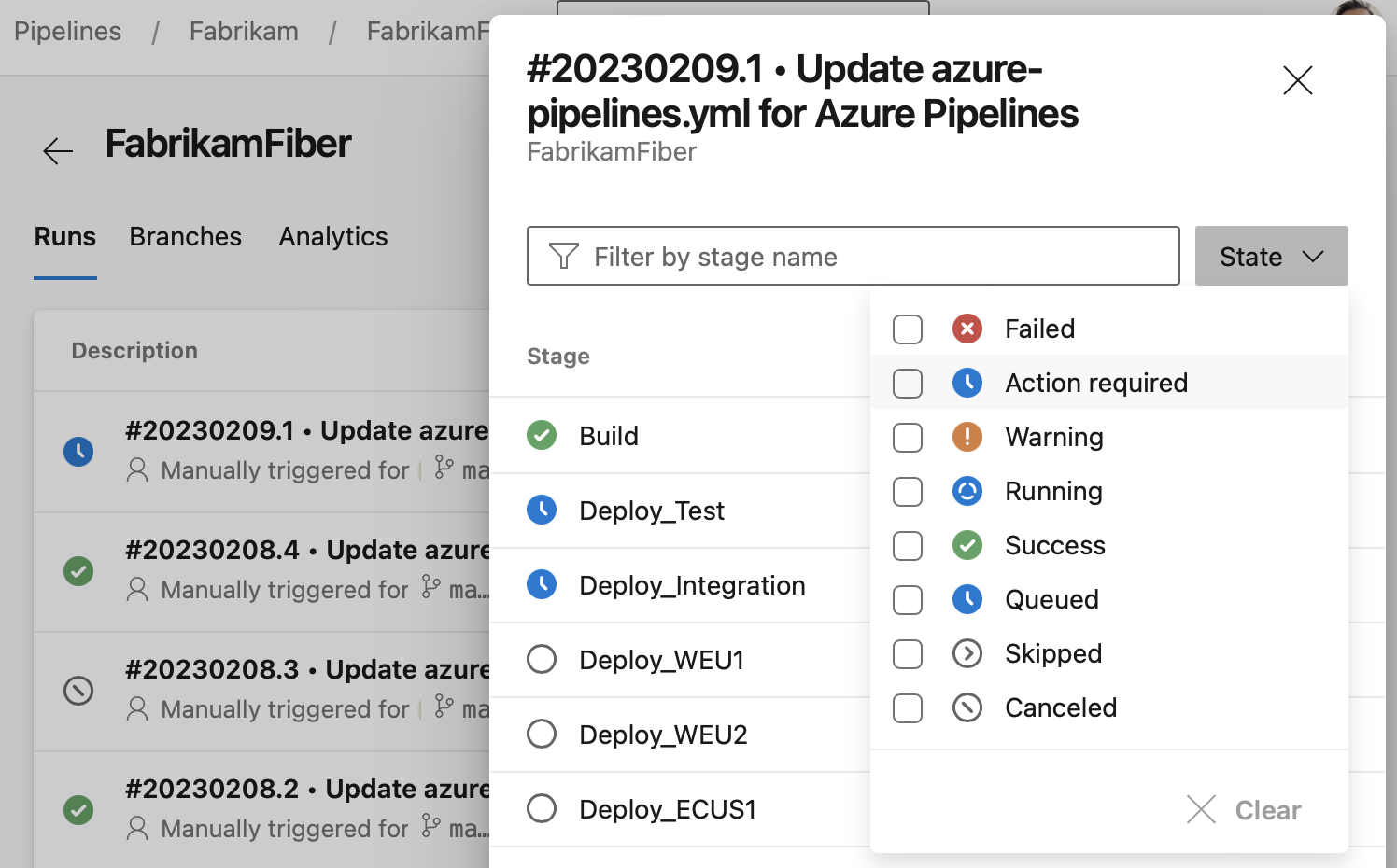

Search for stages in side panel

We've made it easier to find the stages you're looking for in the stages side panel. You can now quickly filter for stages based on their status, for example running stages or those that require manual intervention. You can also search for stages by their name.

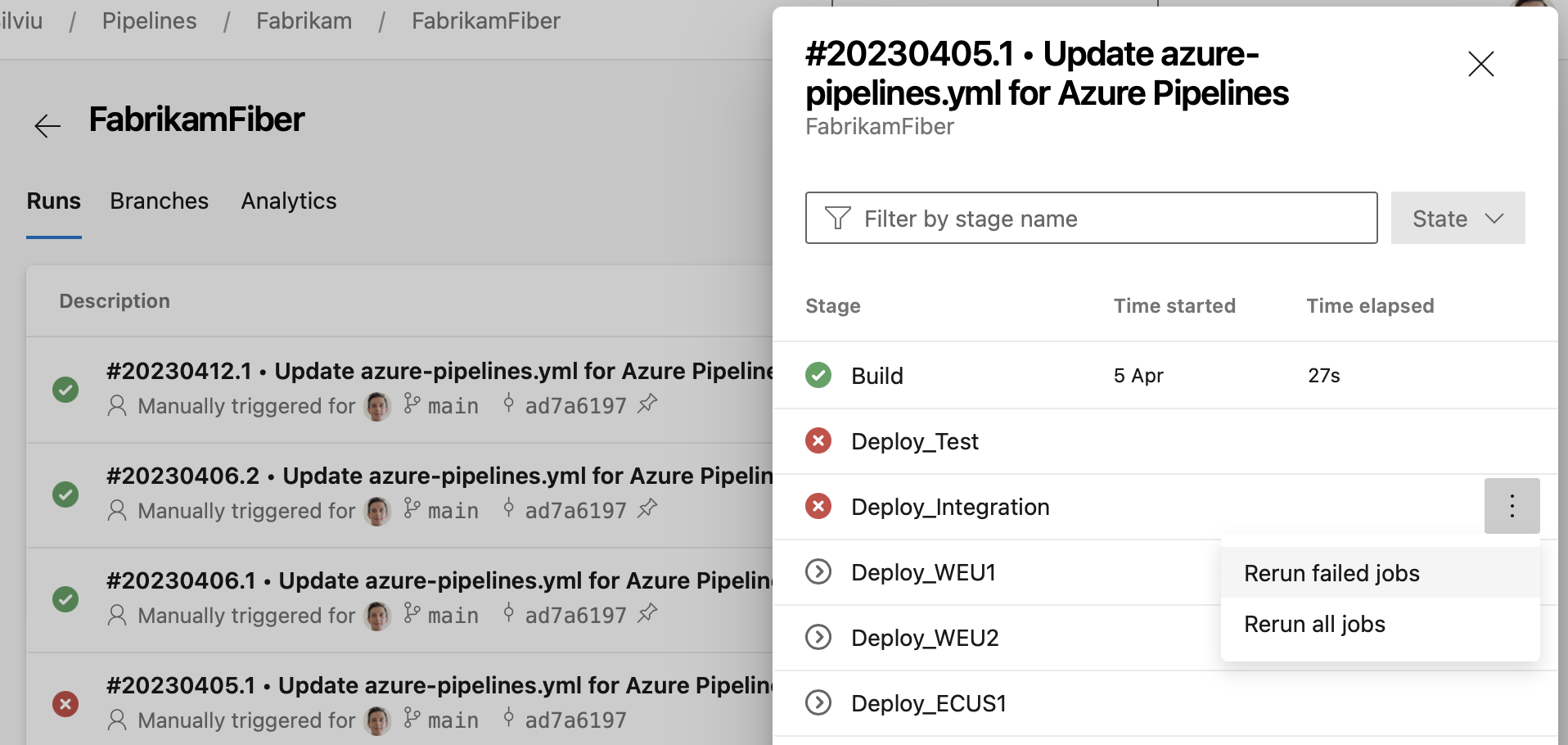

Stage quick actions

A pipeline's Runs screen gives you quick access to all of a run's stages. In this release, we've added a stages panel from which you can perform actions for each stage. For example, you can easily rerun failed jobs or rerun the entire stage. The panel is available when not all stages fit in the user interface, as you can see in the following screenshot.

When you click on the '+' sign in the stages column, the stages panel shows up, and then you can perform stage actions.


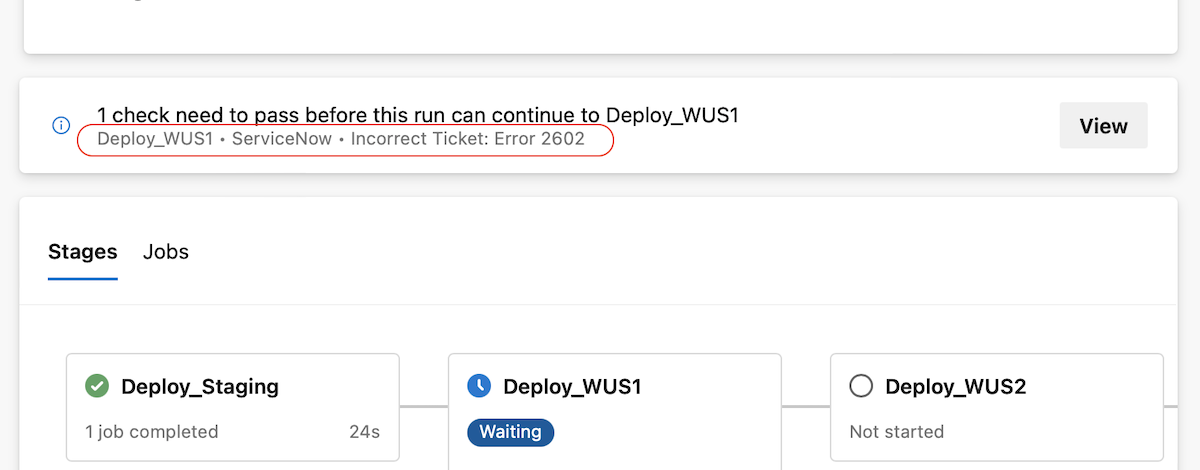

Checks user experience improvements

We are making reading checks logs easier. Checks logs provide information critical for your deployment's success. They can tell you if you forgot to close a work item ticket, or that you need to update a ticket in ServiceNow. Previously, knowing that a check provided such critical information was hard.

Now, the pipeline run details page shows the latest check log. This is only for checks that follow our recommended usage.

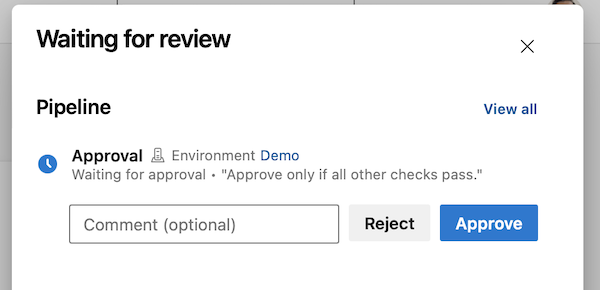

To help prevent approvals from being granted by mistake, Azure DevOps shows the Instructions to approvers in the Approvals and checks side panel on a pipeline run's details page.


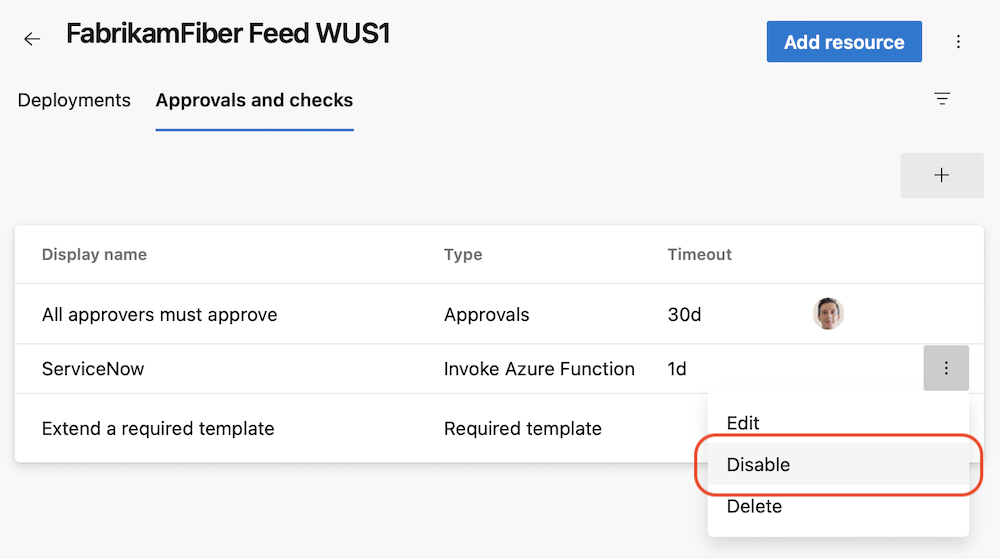

Disable a check

We made debugging checks less tedious. Sometimes, an Invoke Azure Function or Invoke REST API check doesn't work correctly, and you need to fix it. Previously, you had to delete such checks to prevent them from erroneously blocking a deployment. Once you fixed the check, you had to add it back and configure it again, making sure all the required headers were set and the query parameters were correct.

Now, you can just disable a check. The disabled check won't run in subsequent check suite evaluations.

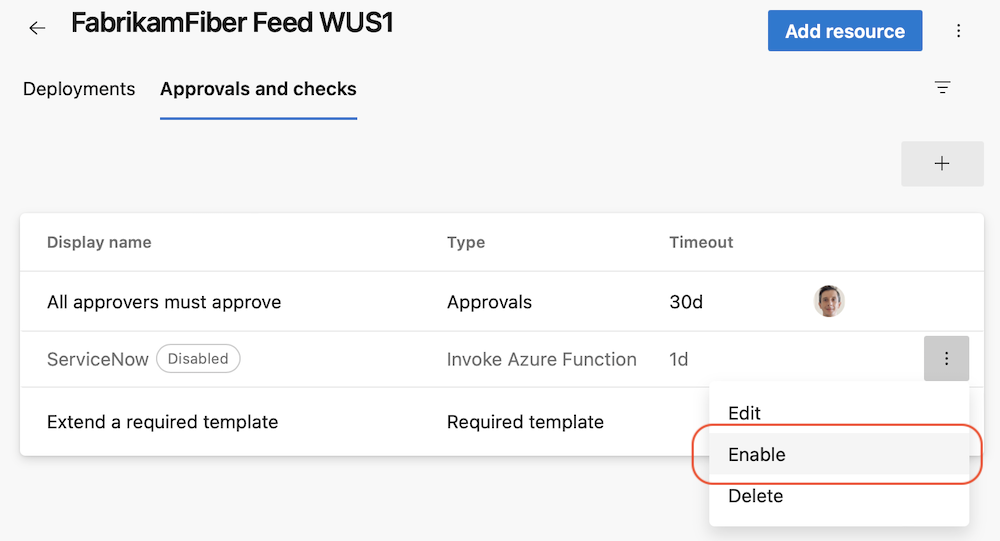

Once you fix the erroneous check, you can just enable it.


Consumed resources and template parameters in Pipelines Runs REST API

The extended Pipelines Runs REST API now returns more types of artifacts used by a pipeline run and the parameters used to trigger that run. We enhanced the API to return the container and pipeline resources and the template parameters used in a pipeline run. You can now, for example, write compliance checks that evaluate the repositories, containers, and other pipeline runs used by a pipeline.

Here is an example of the new response body.

    "resources": {
      "repositories": {
        "self": {
          "repository": {
            "id": "e5c55144-277b-49e3-9905-2dc162e3f663",
            "type": "azureReposGit"
          },
          "refName": "refs/heads/main",
          "version": "44153346ecdbbf66c68c20fadf27f53ea1394db7"
        },
        "MyFirstProject": {
          "repository": {
            "id": "e5c55144-277b-49e3-9905-2dc162e3f663",
            "type": "azureReposGit"
          },
          "refName": "refs/heads/main",
          "version": "44153346ecdbbf66c68c20fadf27f53ea1394db7"
        }
      },
      "pipelines": {
        "SourcePipelineResource": {
          "pipeline": {
            "url": "https://dev.azure.com/fabrikam/20317ad0-ae49-4588-ae92-6263028b4d83/_apis/pipelines/51?revision=3",
            "id": 51,
            "revision": 3,
            "name": "SourcePipeline",
            "folder": "\\source"
          },
          "version": "20220801.1"
        }
      },
      "containers": {
        "windowscontainer": {
          "container": {
            "environment": {
              "Test": "test"
            },
            "mapDockerSocket": false,
            "image": "mcr.microsoft.com/windows/servercore:ltsc2019",
            "options": "-e 'another_test=tst'",
            "volumes": [
              "C:\\Users\\fabrikamuser\\mount-fabrikam:c:\\mount-fabrikam"
            ],
            "ports": [
              "8080:80",
              "6379"
            ]
          }
        }
      }
    },
    "templateParameters": {
      "includeTemplateSteps": "True"
    }

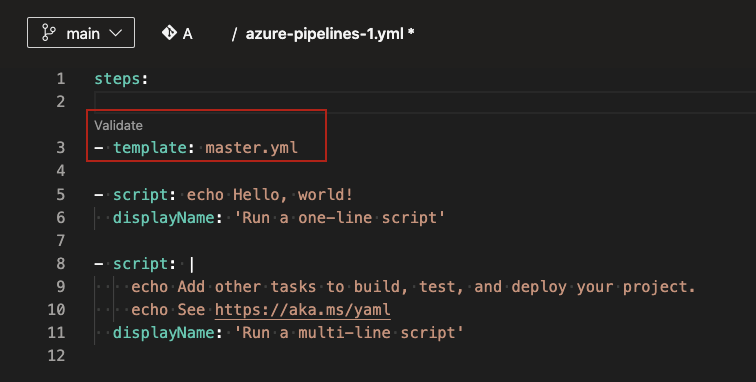

General Availability template support in YAML editor

Templates are a commonly used feature in YAML pipelines. They are an easy way to share pipeline snippets. They are also a powerful mechanism for verifying or enforcing security and governance through your pipeline.

Azure Pipelines supports a YAML editor, which can be helpful when editing your pipeline. However, the editor did not support templates until now. Authors of YAML pipelines could not get assistance through IntelliSense when using a template. Template authors could not make use of the YAML editor. In this release, we are adding support for templates in the YAML editor.

As you edit your main Azure Pipelines YAML file, you can either include or extend a template. When you type in the name of your template, you will be prompted to validate your template. Once validated, the YAML editor understands the schema of the template including the input parameters.

Post validation, you can choose to navigate into the template. You will be able to make changes to the template using all the features of the YAML editor.

There are known limitations:

- If the template has required parameters that are not provided as inputs in the main YAML file, then the validation fails and prompts you to provide those inputs. In an ideal experience, the validation should not be blocked, and you should be able to fill in the input parameters using IntelliSense.

- You cannot create a new template from the editor. You can only use or edit existing templates.

New predefined system variable

We introduced a new predefined system variable, named Build.DefinitionFolderPath, whose value is the folder path of a build pipeline definition. The variable is available in both YAML and classic build pipelines.

For example, if your pipeline is housed under the FabrikamFiber\Chat folder in Azure Pipelines, then the value of Build.DefinitionFolderPath is FabrikamFiber\Chat.
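A minimal sketch of reading the variable from a script step follows. The display name is a hypothetical label; the macro syntax `$(...)` is the standard way to reference predefined variables in a step.

```yaml
steps:
# The macro is expanded before the script runs, so the step prints the
# folder path of the pipeline definition.
- script: echo "Pipeline folder is $(Build.DefinitionFolderPath)"
  displayName: Show pipeline folder   # hypothetical display name
```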

Add support for string split function in YAML template expressions

YAML pipelines provide you convenient ways to reduce code duplication, such as looping through each value of a list or property of an object.

Sometimes, the set of items to iterate through is represented as a string. For example, the list of environments to deploy to might be defined by the string integration1, integration2.

As we listened to your feedback from the Developer Community, we heard you wanted a string split function in YAML template expressions.

Now, you can split a string and iterate over each of its substrings.

    variables:
      environments: integration1, integration2
    jobs:
    - job: Deploy
      steps:
      - ${{ each env in split(variables.environments, ', ') }}:
        - script: ./deploy.sh -e ${{ env }}
        - script: ./runTest.sh -e ${{ env }}

Template Expressions in Repository Resource Definition

We've added support for template expressions when defining the ref property of a repository resource in a YAML pipeline. This was a highly requested feature by our Developer Community.

There are use cases where you want your pipeline to check out different branches of the same repository resource.

For example, say you have a pipeline that builds its own repository, and for this, it needs to check out a library from a resource repository. Furthermore, say you want your pipeline to check out the library branch that matches the branch the pipeline itself runs on. For example, if your pipeline runs on the main branch, it should check out the main branch of the library repo. If the pipeline runs on the dev branch, it should check out the dev library branch.

Up until today, you had to explicitly specify the branch to check out, and change the pipeline code whenever the branch changes.

Now, you can use template expressions to choose the branch of a repository resource. See the following example of YAML code to use for the non-main branches of your pipeline:

    resources:
      repositories:
      - repository: library
        type: git
        name: FabrikamLibrary
        ref: ${{ variables['Build.SourceBranch'] }}
    steps:
    - checkout: library
    - script: echo ./build.sh
    - script: echo ./test.sh

When you run the pipeline, you can specify the branch to check out for the library repository.

Specify the version of a template to extend at build queue-time

Templates represent a great way to reduce code duplication and improve the security of your pipelines.

One popular use case is to house templates in their own repository. This reduces the coupling between a template and the pipelines that extend it and makes it easier to evolve the template and the pipelines independently.

Consider the following example, in which a template is used to monitor the execution of a list of steps. The template code is located in the Templates repository.

    # template.yml in repository Templates
    parameters:
    - name: steps
      type: stepList
      default: []
    jobs:
    - job:
      steps:
      - script: ./startMonitoring.sh
      - ${{ parameters.steps }}
      - script: ./stopMonitoring.sh

Say you have a YAML pipeline that extends this template, located in repository FabrikamFiber. Up until today, it was not possible to specify the ref property of the templates repository resource dynamically when using the repository as template source. This meant you had to change the code of the pipeline if you wanted your pipeline to:

- extend a template from a different branch

- extend a template from the same branch name as your pipeline, regardless of which branch you ran your pipeline on

With the introduction of template expressions in repository resource definition, you can write your pipeline as follows:

    resources:
      repositories:
      - repository: templates
        type: git
        name: Templates
        ref: ${{ variables['Build.SourceBranch'] }}
    extends:
      template: template.yml@templates
      parameters:
        steps:
        - script: echo ./build.sh
        - script: echo ./test.sh

By doing so, your pipeline will extend the template in the same branch as the branch on which the pipeline runs, so you can make sure your pipeline's and template's branches always match. That is, if you run your pipeline on a branch dev, it will extend the template specified by the template.yml file in the dev branch of the templates repository.

Or you can choose, at build queue-time, which template repository branch to use, by writing the following YAML code.

    parameters:
    - name: branch
      default: main
    resources:
      repositories:
      - repository: templates
        type: git
        name: Templates
        ref: ${{ parameters.branch }}
    extends:
      template: template.yml@templates
      parameters:
        steps:
        - script: ./build.sh
        - script: ./test.sh

Now, you can have your pipeline on branch main extend a template from branch dev in one run, and extend a template from branch main in another run, without changing the code of your pipeline.

When specifying a template expression for the ref property of a repository resource, you can use parameters and system predefined variables, but you cannot use YAML or Pipelines UI-defined variables.

Template Expressions in Container Resource Definition

We've added support for template expressions when defining the endpoint, volumes, ports, and options properties of a container resource in a YAML pipeline. This was a highly requested feature by our Developer Community.

Now, you can write YAML pipelines like the following.

    parameters:
    - name: endpointName
      default: AzDOACR
      type: string
    resources:
      containers:
      - container: linux
        endpoint: ${{ parameters.endpointName }}
        image: fabrikamfiber.azurecr.io/ubuntu:latest
    jobs:
    - job:
      container: linux
      steps:
      - task: CmdLine@2
        inputs:
          script: 'echo Hello world'

You can use parameters. and variables. in your template expressions. For variables, you can only use the ones defined in the YAML file, but not those defined in the Pipelines UI. If you redefine the variable, for example, using agent log commands, it will not have any effect.
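The same approach should carry over to the other supported properties. The sketch below parameterizes ports and options. The parameter names hostPort and containerOptions, their default values, and the container name web are hypothetical. The image and endpoint reuse the values from the example above.

```yaml
parameters:
- name: hostPort            # hypothetical parameter
  type: string
  default: '8080'
- name: containerOptions    # hypothetical parameter
  type: string
  default: --hostname build-container

resources:
  containers:
  - container: web
    endpoint: AzDOACR
    image: fabrikamfiber.azurecr.io/ubuntu:latest
    ports:
    - ${{ parameters.hostPort }}:80               # resolved at compile time
    options: ${{ parameters.containerOptions }}   # resolved at compile time

jobs:
- job:
  container: web
  steps:
  - script: echo Hello world
```

Because template expressions are resolved when the pipeline is compiled, values set at runtime cannot influence these properties.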

Scheduled builds improvements

We fixed an issue that was causing a pipeline's schedule information to become corrupted, and the pipeline to not load. This happened, for example, when the name of the branch exceeded a certain number of characters.

Improved error message when failing to load pipelines

Azure Pipelines provides several types of triggers to configure how your pipeline starts. One way to run a pipeline is by using scheduled triggers. Sometimes, the Scheduled Run information of a pipeline gets corrupted and can cause a load to fail. Previously, we were displaying a misleading error message, claiming that the pipeline was not found. With this update, we resolved this issue and are returning an informative error message. Going forward, if a pipeline fails to load for this reason, you will receive a message similar to: Build schedule data is corrupted.

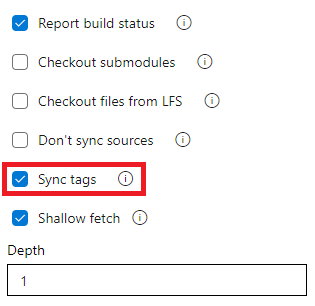

Do not sync tags when fetching a Git repository

The checkout task uses the --tags option when fetching the contents of a Git repository. This causes the server to fetch all tags as well as all objects that are pointed to by those tags. This increases the time to run the task in a pipeline, particularly if you have a large repository with a number of tags. Furthermore, the checkout task syncs tags even when you enable the shallow fetch option, thereby possibly defeating its purpose. To reduce the amount of data fetched or pulled from a Git repository, we have now added a new option to the task to control the behavior of syncing tags. This option is available both in classic and YAML pipelines.

This behavior can either be controlled from the YAML file or from the UI.

To opt out of syncing tags through the YAML file, add fetchTags: false to the checkout step. When the fetchTags option is not specified, it's the same as if fetchTags: true is used.

    steps:
    - checkout: self  # self represents the repo where the initial Pipelines YAML file was found
      clean: boolean  # whether to fetch clean each time
      fetchTags: boolean  # whether to sync the tags
      fetchDepth: number  # the depth of commits to ask Git to fetch
      lfs: boolean  # whether to download Git-LFS files
      submodules: boolean | recursive  # set to 'true' for a single level of submodules or 'recursive' to get submodules of submodules
      path: string  # path to check out source code, relative to the agent's build directory (e.g. _work\1)
      persistCredentials: boolean  # set to 'true' to leave the OAuth token in the Git config after the initial fetch
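A concrete checkout step that opts out of tag syncing might look like the following sketch. The fetchDepth value and the follow-up script are illustrative assumptions.

```yaml
steps:
- checkout: self
  fetchTags: false   # do not sync tags when fetching
  fetchDepth: 1      # illustrative: shallow fetch of the latest commit only
- script: git log -1 --oneline   # illustrative follow-up step
```

Combining fetchTags: false with a shallow fetch keeps the amount of data pulled from the repository small, which is the scenario described above.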

If you want to change the behavior of existing YAML pipelines, it may be more convenient to set this option in the UI instead of updating the YAML file. To navigate to the UI, open the YAML editor for the pipeline, select Triggers, then Process, and then the Checkout step.

If you specify this setting both in the YAML file and in the UI, then the value specified in the YAML file takes precedence.

For all new pipelines you create (YAML or Classic), tags are still synced by default. This option does not change the behavior of existing pipelines. Tags will still be synced in those pipelines unless you explicitly change the option as described above.

Artifacts

Updated default feed permissions

Project Collection Build Service accounts will now have the Collaborator role by default when a new project collection-scoped Azure Artifacts feed is created, instead of the current Contributor role.

New user interface for upstream package search

Previously, you could see upstream packages if you had a copy of the feed. The pain point was that you couldn't search for packages that are available in the upstream and that are not yet saved in the feed. Now, you can search for available upstream packages with the new feed user interface.

Azure Artifacts now provides a user interface that allows you to search for packages in your upstream sources and save package versions into your feed. This aligns with Microsoft’s goal to improve our products and services.

Reporting

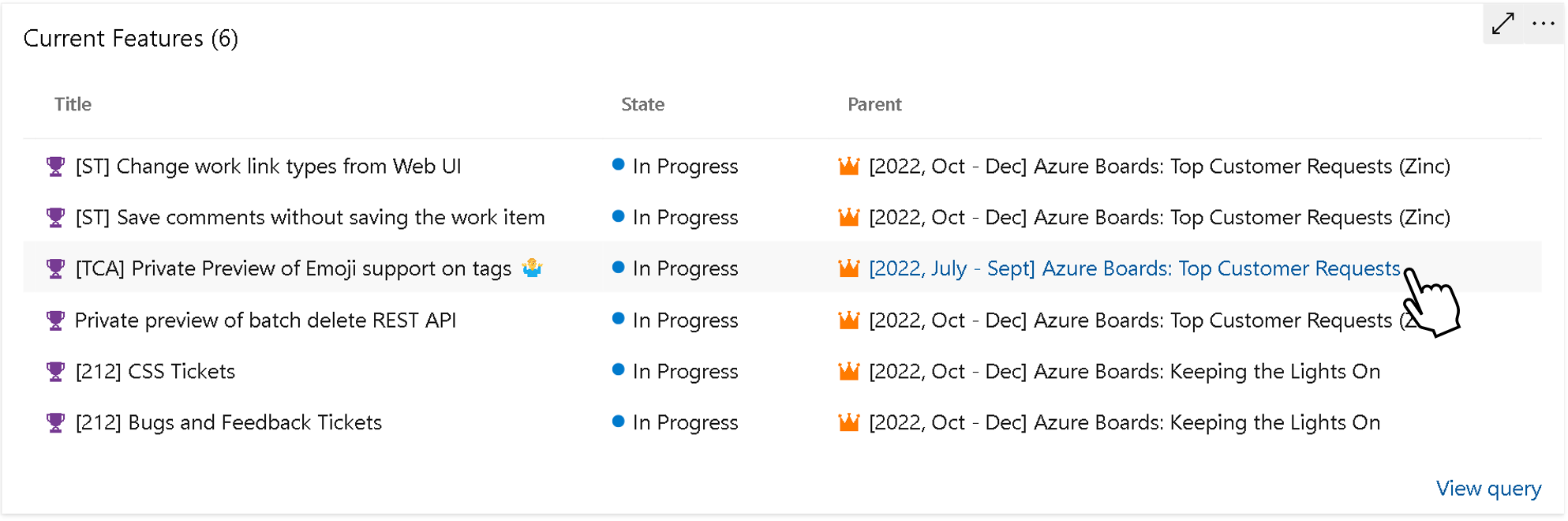

Show Parent in Query Results Widget

The Query Results Widget now supports showing the parent's name and a direct link to that parent.

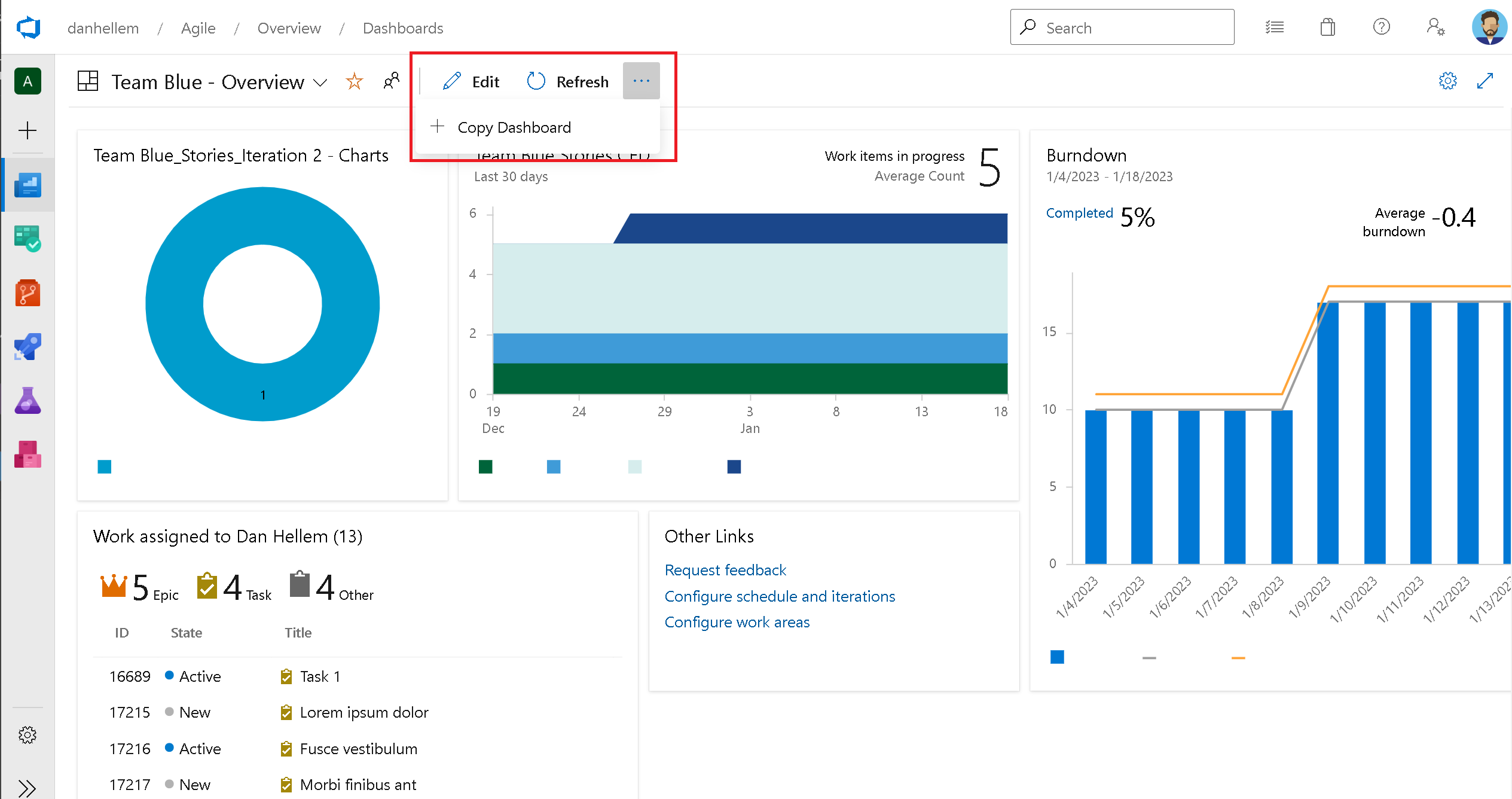

Copy Dashboard

With this release, we are including the Copy Dashboard feature.

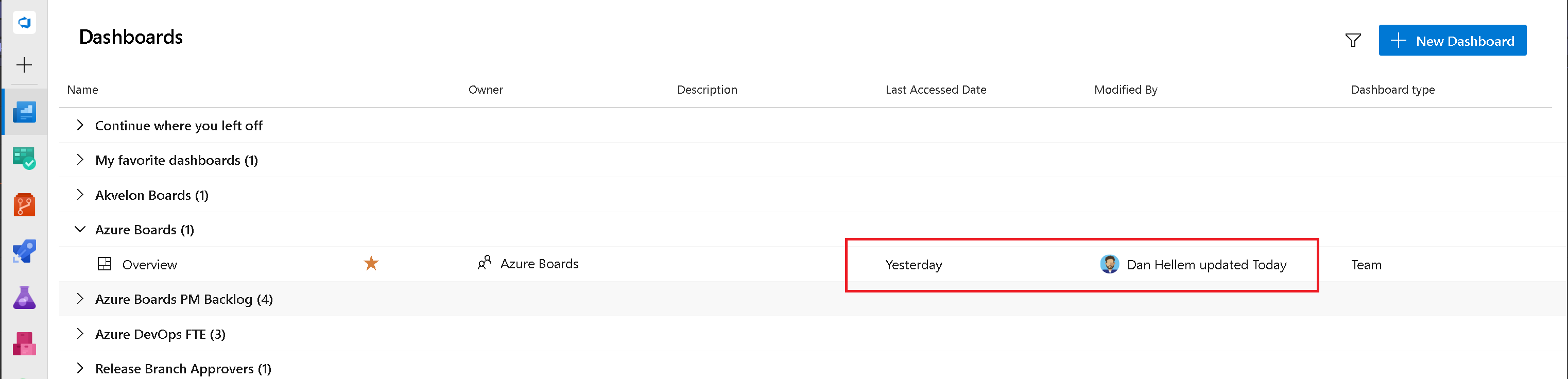

Dashboards Last Accessed Date and Modified By

One of the challenges of allowing teams to create several dashboards is managing and cleaning up the outdated and unused ones. Knowing when a dashboard was last visited or modified is an important part of understanding which ones can be removed. In this release, we have added two new columns to the Dashboards directory page. Last Accessed Date tracks when the dashboard was most recently visited. Modified By tracks when the dashboard was last edited and by whom.

The Modified By information will also be displayed on the dashboard page itself.

We hope these new fields help project administrators understand the activity level of dashboards so they can make an informed decision about whether to remove them.

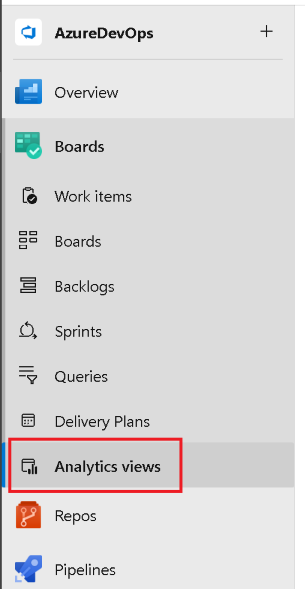

Analytics Views are now available

The Analytics Views feature has been in a preview state for an extended period of time in our hosted version of the product. With this release we are happy to announce that this feature is now available to all project collections.

In the navigation, Analytics views moved from the Overview tab to the Boards tab.

An Analytics view provides a simplified way to specify the filter criteria for a Power BI report based on Analytics data. If you are not familiar with Analytics Views, here is some documentation to get you caught up:

- About Analytics views

- Create an Analytics view in Azure DevOps

- Manage Analytics views

- Create a Power BI report with a default Analytics view

- Connect to Analytics with Power BI Data Connector

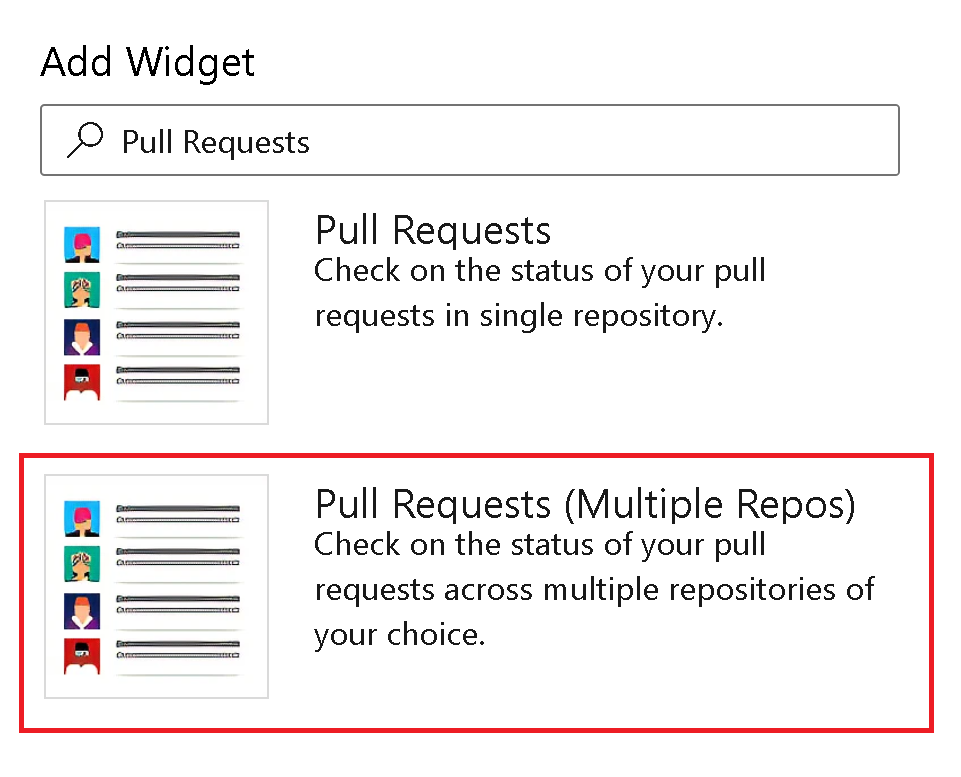

Pull Request widget for multiple repos is now available

We are thrilled to announce that the Pull Request widget for multiple repositories is available in Azure DevOps Server 2022.1. With this new widget, you can effortlessly view pull requests from up to 10 different repositories in a single, streamlined list, making it easier than ever to stay on top of your pull requests.

Introducing resolved as completed in burndown and burnup charts

We understand the importance of accurately reflecting team progress, including resolved items as completed in the charts. With a simple toggle option, you can now choose to display resolved items as completed, providing a true reflection of the team's burndown state. This enhancement allows for more accurate tracking and planning, empowering teams to make informed decisions based on actual progress. Experience improved transparency and better insights with the updated burndown and burnup charts in Reporting.

Wiki

Support for additional diagram types in wiki pages

We have upgraded the version of mermaid charts used in wiki pages to 8.13.9. With this upgrade, you can now include the following diagrams and visualizations in your Azure DevOps wiki pages:

- Flowchart

- Sequence diagrams

- Gantt charts

- Pie charts

- Requirement diagrams

- State diagrams

- User Journey

Diagrams that are in experimental mode such as Entity Relationship and Git Graph are not included. For more information about the new features, please see the mermaid release notes.

Feedback

We would love to hear from you! You can report a problem or provide an idea and track it through Developer Community and get advice on Stack Overflow.