Note

Access to this page requires authorization. You can try signing in or changing directories.

Access to this page requires authorization. You can try changing directories.

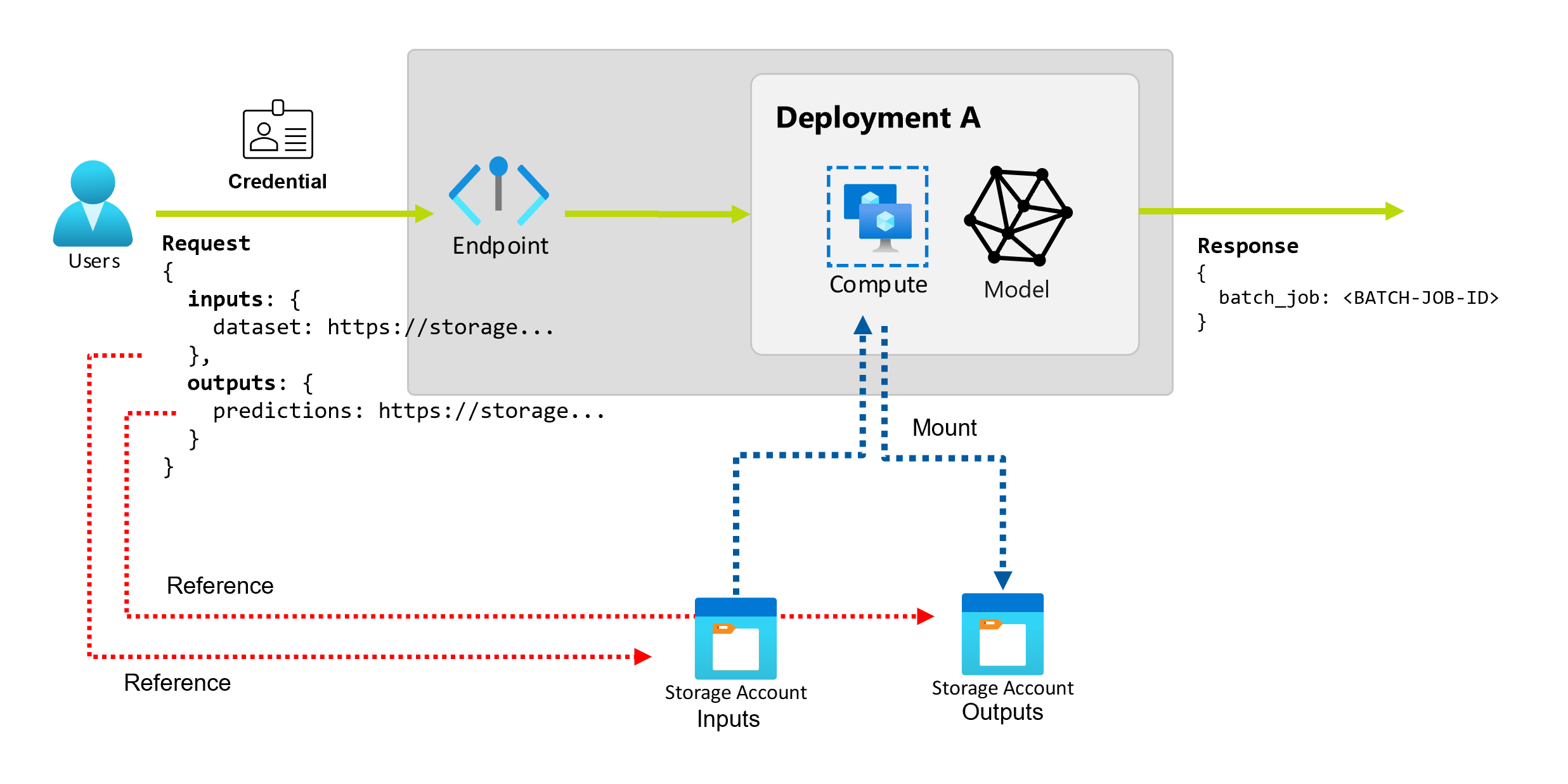

When you use batch endpoints in Azure Machine Learning, you can perform long batch operations over large amounts of input data. The data can be located in different places, such as across different regions. Certain types of batch endpoints can also receive literal parameters as inputs.

This article describes how to specify parameter inputs for batch endpoints and create deployment jobs. The process supports working with data from various sources, such as data assets, data stores, storage accounts, and local files.

Prerequisites

A batch endpoint and deployment. To create these resources, see Deploy MLflow models in batch deployments in Azure Machine Learning.

Permissions to run a batch endpoint deployment. You can use the AzureML Data Scientist, Contributor, or Owner role to run a deployment. To review the specific permissions that custom role definitions require, see Authorization on batch endpoints.

Credentials to invoke an endpoint. For more information, see Establish authentication.

Read access to the input data from the compute cluster where the endpoint is deployed.

Tip

Certain situations require using a credential-less data store or external Azure Storage account as data input. In these scenarios, ensure you configure compute clusters for data access, because the managed identity of the compute cluster is used for mounting the storage account. You still have granular access control, because the identity of the job (invoker) is used to read the underlying data.

Establish authentication

To invoke an endpoint, you need a valid Microsoft Entra token. When you invoke an endpoint, Azure Machine Learning creates a batch deployment job under the identity that's associated with the token.

- If you use the Azure Machine Learning CLI (v2) or the Azure Machine Learning SDK for Python (v2) to invoke endpoints, you don't need to get the Microsoft Entra token manually. During sign-in, the system authenticates your user identity. It also retrieves and passes the token for you.

- If you use the REST API to invoke endpoints, you need to get the token manually.

You can use your own credentials for the invocation, as described in the following procedures.

Use the Azure CLI to sign in with interactive or device code authentication:

az login

For more information about various types of credentials, see How to run jobs using different types of credentials.
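For the REST API case, where you get the token yourself, the following sketch wraps the token request in a shell function. The function name get_aml_token is hypothetical, and the resource URI https://ml.azure.com is an assumption about the token audience for Azure Machine Learning; confirm it for your cloud environment before you rely on it.

```shell
# Hypothetical helper: print a Microsoft Entra access token for Azure Machine Learning.
# Assumes you already ran `az login` and that https://ml.azure.com is the
# correct resource URI for your cloud (an assumption, not stated in this article).
get_aml_token() {
    az account get-access-token \
        --resource https://ml.azure.com \
        --query accessToken \
        --output tsv
}

# Usage (requires the Azure CLI and an active sign-in):
#   TOKEN=$(get_aml_token)
#   Pass "$TOKEN" as a bearer token in the Authorization header of your REST call.
```

Keeping the call in a function makes it easy to refresh the token right before each request, because tokens expire.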

Create basic jobs

To create a job from a batch endpoint, you invoke the endpoint. You can invoke an endpoint by using the Azure Machine Learning CLI, the Azure Machine Learning SDK for Python, or a REST API call.

The following examples show invocation basics for a batch endpoint that receives a single input data folder for processing. For examples that involve various inputs and outputs, see Understand inputs and outputs.

Use the invoke operation under batch endpoints:

az ml batch-endpoint invoke --name $ENDPOINT_NAME \
    --input https://azuremlexampledata.blob.core.windows.net/data/heart-disease-uci/data
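Because invoking an endpoint creates a job, it's often useful to capture the name of that job for later monitoring. The following sketch assumes that the invoke command returns a job object with a name field, which the global --query and --output arguments of the Azure CLI can extract. The function name start_batch_job is hypothetical.

```shell
# Hypothetical helper: invoke a batch endpoint and print the name of the created job.
# Arguments: endpoint name, then the URI of the input data folder.
start_batch_job() {
    endpoint_name="$1"
    input_uri="$2"
    az ml batch-endpoint invoke \
        --name "$endpoint_name" \
        --input "$input_uri" \
        --query name \
        --output tsv
}

# Usage (requires the Azure CLI with the ml extension and an active sign-in):
#   JOB_NAME=$(start_batch_job "$ENDPOINT_NAME" "https://azuremlexampledata.blob.core.windows.net/data/heart-disease-uci/data")
#   az ml job show --name "$JOB_NAME"
```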

Invoke a specific deployment

Batch endpoints can host multiple deployments under the same endpoint. The default deployment is used unless you specify otherwise. You can use the following procedures to change the deployment that you use.

Use the argument --deployment-name or -d to specify the name of the deployment:

az ml batch-endpoint invoke --name $ENDPOINT_NAME \
    --deployment-name $DEPLOYMENT_NAME \
    --input https://azuremlexampledata.blob.core.windows.net/data/heart-disease-uci/data
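In scripts, you might want one entry point that targets the default deployment unless a deployment name is supplied. The following is a minimal sketch of that pattern; the function name invoke_endpoint and its argument order are hypothetical.

```shell
# Hypothetical wrapper: invoke the default deployment, or a specific one
# when a third argument is given.
# Arguments: endpoint name, input URI, optional deployment name.
invoke_endpoint() {
    endpoint_name="$1"
    input_uri="$2"
    deployment_name="${3:-}"

    if [ -n "$deployment_name" ]; then
        # A deployment name was supplied, so target that deployment.
        az ml batch-endpoint invoke --name "$endpoint_name" \
            --deployment-name "$deployment_name" \
            --input "$input_uri"
    else
        # No deployment name: the endpoint's default deployment handles the job.
        az ml batch-endpoint invoke --name "$endpoint_name" \
            --input "$input_uri"
    fi
}

# Usage:
#   invoke_endpoint "$ENDPOINT_NAME" "$INPUT_URI"
#   invoke_endpoint "$ENDPOINT_NAME" "$INPUT_URI" "$DEPLOYMENT_NAME"
```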

Configure job properties

You can configure some job properties at invocation time.

Note

Currently, you can configure job properties only in batch endpoints with pipeline component deployments.

Configure the experiment name

Use the following procedures to configure your experiment name.

Use the argument --experiment-name to specify the name of the experiment:

az ml batch-endpoint invoke --name $ENDPOINT_NAME \
    --experiment-name "my-batch-job-experiment" \
    --input https://azuremlexampledata.blob.core.windows.net/data/heart-disease-uci/data

Understand inputs and outputs

Batch endpoints provide a durable API that consumers can use to create batch jobs. The same interface can be used to specify the inputs and outputs your deployment expects. Use inputs to pass any information your endpoint needs to perform the job.

Batch endpoints support two types of inputs:

- Data inputs, or pointers to a specific storage location or Azure Machine Learning asset

- Literal inputs, or literal values like numbers or strings that you want to pass to the job

The number and type of inputs and outputs depend on the type of batch deployment. Model deployments always require one data input and produce one data output. Literal inputs aren't supported in model deployments. In contrast, pipeline component deployments provide a more general construct for building endpoints. In a pipeline component deployment, you can specify any number of data inputs, literal inputs, and outputs.

The following table summarizes the inputs and outputs for batch deployments:

| Deployment type | Number of inputs | Supported input types | Number of outputs | Supported output types |

|---|---|---|---|---|

| Model deployment | 1 | Data inputs | 1 | Data outputs |

| Pipeline component deployment | 0-N | Data inputs and literal inputs | 0-N | Data outputs |

Tip

Inputs and outputs are always named. Each name serves as a key for identifying the data and passing the value during invocation. Because model deployments always require one input and output, the names are ignored during invocation in model deployments. You can assign the name that best describes your use case, such as sales_estimation.

Explore data inputs

Data inputs refer to inputs that point to a location where data is placed. Because batch endpoints usually consume large amounts of data, you can't pass the input data as part of the invocation request. Instead, you specify the location where the batch endpoint should go to look for the data. Input data is mounted and streamed on the target compute instance to improve performance.

Batch endpoints can read files that are located in the following types of storage:

- Azure Machine Learning data assets, including the folder (uri_folder) and file (uri_file) types.

- Azure Machine Learning data stores, including Azure Blob Storage, Azure Data Lake Storage Gen1, and Azure Data Lake Storage Gen2.

- Azure Storage accounts, including Blob Storage, Data Lake Storage Gen1, and Data Lake Storage Gen2.

- Local data folders and files, when you use the Azure Machine Learning CLI or the Azure Machine Learning SDK for Python to invoke endpoints. In that case, the local data is uploaded to the default data store of your Azure Machine Learning workspace.
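Each storage type uses a recognizable path format in the examples in this article. As an illustration only, the following sketch classifies an input path by its shape. The function name classify_input and the category labels are hypothetical, and the patterns cover only the formats that this article shows.

```shell
# Hypothetical helper: guess the kind of data input from the shape of its path.
# Only the formats used in this article are recognized.
classify_input() {
    case "$1" in
        azureml://datastores/*/paths/*)      echo "data_store" ;;
        /subscriptions/*/data/*/versions/*)  echo "data_asset" ;;
        https://*)                           echo "storage_account" ;;
        *)                                   echo "local_or_other" ;;
    esac
}

for candidate in \
    "azureml://datastores/workspaceblobstore/paths/heart-disease-uci-unlabeled" \
    "https://azuremlexampledata.blob.core.windows.net/data/heart-disease-uci/data" \
    "./data"
do
    printf '%s -> %s\n' "$candidate" "$(classify_input "$candidate")"
done
```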

Important

Deprecation notice: Data assets of type FileDataset (V1) are deprecated and will be retired in the future. Existing batch endpoints that rely on this functionality will continue to work. But there's no support for V1 datasets in batch endpoints that are created with:

- Versions of the Azure Machine Learning CLI v2 that are generally available (2.4.0 and newer).

- Versions of the REST API that are generally available (2022-05-01 and newer).

Explore literal inputs

Literal inputs refer to inputs that can be represented and resolved at invocation time, like strings, numbers, and boolean values. You typically use literal inputs to pass parameters to your endpoint as part of a pipeline component deployment. Batch endpoints support the following literal types:

- string

- boolean

- float

- integer

Literal inputs are only supported in pipeline component deployments. To see how to specify literal inputs, see Create jobs with literal inputs.

Explore data outputs

Data outputs refer to the location where the results of a batch job are placed. Each output has an identifiable name, and Azure Machine Learning automatically assigns a unique path to each named output. You can specify another path if you need to.

Important

Batch endpoints only support writing outputs in Blob Storage data stores. If you need to write to a storage account with hierarchical namespaces enabled, such as Data Lake Storage Gen2, you can register the storage service as a Blob Storage data store, because the services are fully compatible. In this way, you can write outputs from batch endpoints to Data Lake Storage Gen2.

Create jobs with data inputs

The following examples show how to create jobs while taking data inputs from data assets, data stores, and Azure Storage accounts.

Use input data from a data asset

Azure Machine Learning data assets (formerly known as datasets) are supported as inputs for jobs. Follow these steps to run a batch endpoint job that uses input data that's stored in a registered data asset in Azure Machine Learning.

Warning

Data assets of type table (MLTable) aren't currently supported for model deployments. MLTable is supported for pipeline component deployments.

Create the data asset. In this example, it consists of a folder that contains multiple CSV files. You use batch endpoints to process the files in parallel. You can skip this step if your data is already registered as a data asset.

Create a data asset definition in a YAML file named heart-data.yml:

$schema: https://azuremlschemas.azureedge.net/latest/data.schema.json
name: heart-data
description: An unlabeled data asset for heart classification.
type: uri_folder
path: data

Create the data asset:

az ml data create -f heart-data.yml

Set up the input:

DATA_ASSET_ID=$(az ml data show -n heart-data --label latest | jq -r .id)

The data asset ID has the format /subscriptions/<subscription-ID>/resourceGroups/<resource-group-name>/providers/Microsoft.MachineLearningServices/workspaces/<workspace-name>/data/<data-asset-name>/versions/<data-asset-version>.

Run the endpoint:

Use the --set argument to specify the input. First replace any hyphens in the data asset name with underscore characters. Keys can contain only alphanumeric characters and underscore characters.

az ml batch-endpoint invoke --name $ENDPOINT_NAME \
    --set inputs.heart_data.type="uri_folder" inputs.heart_data.path=$DATA_ASSET_ID

For an endpoint that serves a model deployment, you can use the --input argument to specify the data input, because a model deployment always requires exactly one data input.

az ml batch-endpoint invoke --name $ENDPOINT_NAME --input $DATA_ASSET_ID

The --set argument tends to produce long commands when you specify multiple inputs. In such cases, you can list your inputs in a file and then refer to the file when you invoke your endpoint. For instance, you can create a YAML file named inputs.yml that contains the following lines:

inputs:
  heart_data:
    type: uri_folder
    path: /subscriptions/<subscription-ID>/resourceGroups/<resource-group-name>/providers/Microsoft.MachineLearningServices/workspaces/<workspace-name>/data/heart-data/versions/1

Then you can run the following command, which uses the --file argument to specify the inputs:

az ml batch-endpoint invoke --name $ENDPOINT_NAME --file inputs.yml
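The rule about input keys (only alphanumeric characters and underscores, with hyphens replaced by underscores) can be sketched as a small shell function. The function name to_input_key is hypothetical; it converts a data asset name such as heart-data into a key and rejects anything that still contains other characters.

```shell
# Hypothetical helper: turn a data asset name into a valid input key.
# Hyphens become underscores; any other non-alphanumeric character is rejected.
to_input_key() {
    key=$(printf '%s' "$1" | sed 's/-/_/g')
    case "$key" in
        '' | *[!A-Za-z0-9_]*)
            echo "invalid input key: $key" >&2
            return 1
            ;;
        *)
            printf '%s\n' "$key"
            ;;
    esac
}

INPUT_KEY=$(to_input_key "heart-data")

# Print the --set arguments that would reference the key.
echo "inputs.$INPUT_KEY.type=uri_folder inputs.$INPUT_KEY.path=\$DATA_ASSET_ID"
```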

Use input data from a data store

Your batch deployment jobs can directly reference data that's in Azure Machine Learning registered data stores. In this example, you first upload some data to a data store in your Azure Machine Learning workspace. Then you run a batch deployment on that data.

This example uses the default data store, but you can use a different data store. In any Azure Machine Learning workspace, the name of the default blob data store is workspaceblobstore. If you want to use a different data store in the following steps, replace workspaceblobstore with the name of your preferred data store.

Upload sample data to the data store. The sample data is available in the azureml-examples repository. You can find the data in the sdk/python/endpoints/batch/deploy-models/heart-classifier-mlflow/data folder of that repository.

- In Azure Machine Learning studio, open the data assets page for your default blob data store, and then look up the name of its blob container.

- Use a tool like Azure Storage Explorer or AzCopy to upload the sample data to a folder named heart-disease-uci-unlabeled in that container.

Set up the input information:

Place the file path in the INPUT_PATH variable:

DATA_PATH="heart-disease-uci-unlabeled"
INPUT_PATH="azureml://datastores/workspaceblobstore/paths/$DATA_PATH"

Notice how the paths folder is part of the input path. This format indicates that the value that follows is a path.

Run the endpoint:

Use the --set argument to specify the input:

az ml batch-endpoint invoke --name $ENDPOINT_NAME \
    --set inputs.heart_data.type="uri_folder" inputs.heart_data.path=$INPUT_PATH

For an endpoint that serves a model deployment, you can use the --input argument to specify the data input, because a model deployment always requires exactly one data input.

az ml batch-endpoint invoke --name $ENDPOINT_NAME --input $INPUT_PATH --input-type uri_folder

The --set argument tends to produce long commands when you specify multiple inputs. In such cases, you can list your inputs in a file and then refer to the file when you invoke your endpoint. For instance, you can create a YAML file named inputs.yml that contains the following lines:

inputs:
  heart_data:
    type: uri_folder
    path: azureml://datastores/workspaceblobstore/paths/<data-path>

If your data is in a file, use the uri_file type for the input instead.

Then you can run the following command, which uses the --file argument to specify the inputs:

az ml batch-endpoint invoke --name $ENDPOINT_NAME --file inputs.yml
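If you work with several data stores or folders, it can help to build the data store path in one place. The following sketch assembles the azureml://datastores/<name>/paths/<path> format that this section uses. The function name datastore_uri is hypothetical.

```shell
# Hypothetical helper: build a data store input path.
# Arguments: data store name, then the path inside the data store.
datastore_uri() {
    # Strip one leading slash from the relative path so the result
    # doesn't contain a double slash after "paths".
    printf 'azureml://datastores/%s/paths/%s\n' "$1" "${2#/}"
}

INPUT_PATH=$(datastore_uri "workspaceblobstore" "heart-disease-uci-unlabeled")
echo "$INPUT_PATH"
```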

Use input data from an Azure Storage account

Azure Machine Learning batch endpoints can read data from cloud locations in Azure Storage accounts, both public and private. Use the following steps to run a batch endpoint job with data in a storage account.

For more information about extra required configurations for reading data from storage accounts, see Configure compute clusters for data access.

Set up the input:

Set the INPUT_DATA variable:

INPUT_DATA="https://azuremlexampledata.blob.core.windows.net/data/heart-disease-uci/data"

If your data is in a file, use a format similar to the following one to define the input path:

INPUT_DATA="https://azuremlexampledata.blob.core.windows.net/data/heart-disease-uci/data/heart.csv"

Run the endpoint:

Use the --set argument to specify the input:

az ml batch-endpoint invoke --name $ENDPOINT_NAME \
    --set inputs.heart_data.type="uri_folder" inputs.heart_data.path=$INPUT_DATA

For an endpoint that serves a model deployment, you can use the --input argument to specify the data input, because a model deployment always requires exactly one data input.

az ml batch-endpoint invoke --name $ENDPOINT_NAME --input $INPUT_DATA --input-type uri_folder

The --set argument tends to produce long commands when you specify multiple inputs. In such cases, you can list your inputs in a file and then refer to the file when you invoke your endpoint. For instance, you can create a YAML file named inputs.yml that contains the following lines:

inputs:
  heart_data:
    type: uri_folder
    path: https://azuremlexampledata.blob.core.windows.net/data/heart-disease-uci/data

Then you can run the following command, which uses the --file argument to specify the inputs:

az ml batch-endpoint invoke --name $ENDPOINT_NAME --file inputs.yml

If your data is in a file, use the uri_file type in the inputs.yml file for the data input.
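Choosing between uri_folder and uri_file depends on whether the path points to a folder or to a single file. The following sketch uses a simple heuristic that is not part of the service: it treats a path whose last segment contains a dot (such as heart.csv) as a file. Folder names can also contain dots, so treat the result as a suggestion only. The function name guess_input_type is hypothetical.

```shell
# Hypothetical heuristic: suggest an input type from the last path segment.
# A dot in the last segment is taken as a sign of a file name with an extension.
guess_input_type() {
    last_segment="${1##*/}"
    case "$last_segment" in
        *.*) echo "uri_file" ;;
        *)   echo "uri_folder" ;;
    esac
}

FOLDER_TYPE=$(guess_input_type "https://azuremlexampledata.blob.core.windows.net/data/heart-disease-uci/data")
FILE_TYPE=$(guess_input_type "https://azuremlexampledata.blob.core.windows.net/data/heart-disease-uci/data/heart.csv")
echo "folder example: $FOLDER_TYPE"
echo "file example: $FILE_TYPE"
```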

Create jobs with literal inputs

Pipeline component deployments can take literal inputs. For an example of a batch deployment that contains a basic pipeline, see How to deploy pipelines with batch endpoints.

The following example shows how to specify an input named score_mode, of type string, with a value of append:

Place your inputs in a YAML file, such as one named inputs.yml:

inputs:
  score_mode:
    type: string
    default: append

Run the following command, which uses the --file argument to specify the inputs:

az ml batch-endpoint invoke --name $ENDPOINT_NAME --file inputs.yml

You can also use the --set argument to specify the type and default value. But this approach tends to produce long commands when you specify multiple inputs:

az ml batch-endpoint invoke --name $ENDPOINT_NAME \
    --set inputs.score_mode.type="string" inputs.score_mode.default="append"
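When a pipeline component takes several literal inputs, generating the inputs file from a script keeps the invocation short. The following sketch writes an inputs.yml file to a temporary folder. The score_mode input comes from the example above; the batch_size input is hypothetical and is included only to show a second literal type. Your pipeline component must declare any input that you pass.

```shell
# Write an inputs file with two literal inputs to a temporary folder.
WORK_DIR=$(mktemp -d)
INPUTS_FILE="$WORK_DIR/inputs.yml"

cat > "$INPUTS_FILE" <<'EOF'
inputs:
  score_mode:
    type: string
    default: append
  batch_size:
    # Hypothetical input, shown only to illustrate an integer literal.
    type: integer
    default: 64
EOF

echo "Wrote literal inputs to $INPUTS_FILE"

# To submit the job (requires the Azure CLI with the ml extension):
#   az ml batch-endpoint invoke --name "$ENDPOINT_NAME" --file "$INPUTS_FILE"
```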

Create jobs with data outputs

The following example shows how to change the location of an output named score. For completeness, the example also configures an input named heart_data.

This example uses the default data store, workspaceblobstore. But you can use any other data store in your workspace as long as it's a Blob Storage account. If you want to use a different data store, replace workspaceblobstore in the following steps with the name of your preferred data store.

Get the ID of the data store.

DATA_STORE_ID=$(az ml datastore show -n workspaceblobstore | jq -r '.id')

The data store ID has the format /subscriptions/<subscription-ID>/resourceGroups/<resource-group-name>/providers/Microsoft.MachineLearningServices/workspaces/<workspace-name>/datastores/workspaceblobstore.

Create a data output:

Define the input and output values in a file named inputs-and-outputs.yml. Use the data store ID in the output path. For completeness, also define the data input.

inputs:
  heart_data:
    type: uri_folder
    path: https://azuremlexampledata.blob.core.windows.net/data/heart-disease-uci/data
outputs:
  score:
    type: uri_file
    path: <data-store-ID>/paths/batch-jobs/my-unique-path

Note

Notice how the paths folder is part of the output path. This format indicates that the value that follows is a path.

Run the deployment:

Use the --file argument to specify the input and output values:

az ml batch-endpoint invoke --name $ENDPOINT_NAME --file inputs-and-outputs.yml
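If you run the job repeatedly, each run should write to its own output path. The following sketch generates the inputs-and-outputs.yml file with a path suffix built from the current time and the shell's process ID. The suffix scheme and the variable names RUN_SUFFIX, OUTPUT_PATH, and IO_FILE are assumptions for illustration. If DATA_STORE_ID isn't set, the sketch falls back to the <data-store-ID> placeholder, which you need to replace before you submit a job.

```shell
# Use the data store ID from the earlier step, or a placeholder if it isn't set.
DATA_STORE_ID="${DATA_STORE_ID:-<data-store-ID>}"

# Hypothetical uniqueness scheme: timestamp plus the current shell's process ID.
RUN_SUFFIX="$(date +%Y%m%d-%H%M%S)-$$"
OUTPUT_PATH="$DATA_STORE_ID/paths/batch-jobs/$RUN_SUFFIX"

WORK_DIR=$(mktemp -d)
IO_FILE="$WORK_DIR/inputs-and-outputs.yml"

# The heredoc is unquoted so that $OUTPUT_PATH is expanded into the file.
cat > "$IO_FILE" <<EOF
inputs:
  heart_data:
    type: uri_folder
    path: https://azuremlexampledata.blob.core.windows.net/data/heart-disease-uci/data
outputs:
  score:
    type: uri_file
    path: $OUTPUT_PATH
EOF

echo "Output for this run: $OUTPUT_PATH"

# To submit the job (requires the Azure CLI with the ml extension):
#   az ml batch-endpoint invoke --name "$ENDPOINT_NAME" --file "$IO_FILE"
```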