APPLIES TO:  Python SDK azure-ai-ml v2 (current)

Tip

You can use Azure Machine Learning managed virtual networks instead of the steps in this article. With a managed virtual network, Azure Machine Learning handles the job of network isolation for your workspace and managed computes. You can also add private endpoints for resources needed by the workspace, such as Azure Storage Account. For more information, see Workspace managed network isolation.

Azure Machine Learning compute instance, serverless compute, and compute cluster can be used to securely train models in an Azure Virtual Network. When planning your environment, you can configure the compute instance/cluster or serverless compute with or without a public IP address. The general differences between the two are:

- No public IP: Reduces costs as it doesn't have the same networking resource requirements. Improves security by removing the requirement for inbound traffic from the internet. However, there are additional configuration changes required to enable outbound access to required resources (Microsoft Entra ID, Azure Resource Manager, etc.).

- Public IP: Works by default, but costs more due to additional Azure networking resources. Requires inbound communication from the Azure Machine Learning service over the public internet.

The following table contains the differences between these configurations:

| Configuration | With public IP | Without public IP |
|---|---|---|
| Inbound traffic | `AzureMachineLearning` service tag. | None |
| Outbound traffic | By default, can access the public internet with no restrictions. You can restrict what it accesses using a Network Security Group or firewall. | By default, can access the public network using the default outbound access provided by Azure. We recommend using a Virtual Network NAT gateway or Firewall instead if you need to route outbound traffic to required resources on the internet. |
| Azure networking resources | Public IP address, load balancer, network interface | None |

You can also use Azure Databricks or HDInsight to train models in a virtual network.

Important

Items marked (preview) in this article are currently in public preview. The preview version is provided without a service level agreement, and it's not recommended for production workloads. Certain features might not be supported or might have constrained capabilities. For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

This article is part of a series on securing an Azure Machine Learning workflow. See the other articles in this series:

- Virtual network overview

- Secure the workspace resources

- Secure the inference environment

- Enable studio functionality

- Use custom DNS

- Use a firewall

For a tutorial on creating a secure workspace, see Tutorial: Create a secure workspace, Bicep template, or Terraform template.

In this article you learn how to secure the following training compute resources in a virtual network:

- Azure Machine Learning compute cluster

- Azure Machine Learning compute instance

- Azure Machine Learning serverless compute

- Azure Databricks

- Virtual Machine

- HDInsight cluster

Prerequisites

Read the Network security overview article to understand common virtual network scenarios and overall virtual network architecture.

An existing virtual network and subnet to use with your compute resources. This VNet must be in the same subscription as your Azure Machine Learning workspace.

- We recommend putting the storage accounts used by your workspace and training jobs in the same Azure region that you plan to use for compute instances, serverless compute, and clusters. If they aren't in the same Azure region, you may incur data transfer costs and increased network latency.

- Make sure that WebSocket communication is allowed to `*.instances.azureml.net` and `*.instances.azureml.ms` in your VNet. WebSockets are used by Jupyter on compute instances.

An existing subnet in the virtual network. This subnet is used when creating compute instances, clusters, and nodes for serverless compute.

- Make sure that the subnet isn't delegated to other Azure services.

- Make sure that the subnet contains enough free IP addresses. Each compute instance requires one IP address. Each node within a compute cluster and each serverless compute node requires one IP address.
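As a rough planning aid, the IP address requirement above can be checked with Python's standard `ipaddress` module. This is a minimal sketch, not an official sizing tool: the function names and the example address ranges are illustrative, and it assumes Azure's standard reservation of five addresses in every subnet.

```python
import ipaddress

# Azure reserves five addresses in every subnet (network, gateway,
# two for DNS, and broadcast), so they can't be assigned to compute.
AZURE_RESERVED_PER_SUBNET = 5


def usable_addresses(cidr: str) -> int:
    """Return how many addresses in the subnet are assignable."""
    network = ipaddress.ip_network(cidr, strict=True)
    return max(network.num_addresses - AZURE_RESERVED_PER_SUBNET, 0)


def subnet_has_capacity(cidr: str, compute_instances: int,
                        max_cluster_nodes: int, max_serverless_nodes: int,
                        addresses_in_use: int = 0) -> bool:
    """Check that the subnet can hold one IP per instance and per node."""
    needed = compute_instances + max_cluster_nodes + max_serverless_nodes
    return usable_addresses(cidr) - addresses_in_use >= needed
```

For example, a `/27` subnet leaves 27 assignable addresses, so it might hold 2 compute instances, a cluster that scales to 20 nodes, and up to 5 serverless nodes, but no more.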

If you have your own DNS server, we recommend using DNS forwarding to resolve the fully qualified domain names (FQDN) of compute instances and clusters. For more information, see Use a custom DNS with Azure Machine Learning.

To deploy resources into a virtual network or subnet, your user account must have permissions to the following actions in Azure role-based access control (Azure RBAC):

- "Microsoft.Network/*/read" on the virtual network resource. This permission isn't needed for Azure Resource Manager (ARM) template deployments.

- "Microsoft.Network/virtualNetworks/join/action" on the virtual network resource.

- "Microsoft.Network/virtualNetworks/subnets/join/action" on the subnet resource.

For more information on Azure RBAC with networking, see the Networking built-in roles article.

Limitations

Deploying a compute cluster, compute instance, or serverless compute in a virtual network isn't supported with Azure Lighthouse.

Port 445 must be open for private network communications between your compute instances and the default storage account during training. For example, if your computes are in one VNet and the storage account is in another, don't block port 445 to the storage account VNet.

Compute cluster in a different VNet/region from workspace

Important

You can't create a compute instance in a different region/VNet, only a compute cluster.

To create a compute cluster in an Azure Virtual Network in a different region than your workspace virtual network, you have a couple of options to enable communication between the two VNets:

- Use VNet Peering.

- Add a private endpoint for your workspace in the virtual network that will contain the compute cluster.

Important

Regardless of the method selected, you must also create the VNet for the compute cluster; Azure Machine Learning will not create it for you.

You must also allow the default storage account, Azure Container Registry, and Azure Key Vault to access the VNet for the compute cluster. There are multiple ways to accomplish this. For example, you can create a private endpoint for each resource in the VNet for the compute cluster, or you can use VNet peering to allow the workspace VNet to access the compute cluster VNet.

Scenario: VNet peering

Configure your workspace to use an Azure Virtual Network. For more information, see Secure your workspace resources.

Create a second Azure Virtual Network that will be used for your compute clusters. It can be in a different Azure region than the one used for your workspace.

Configure VNet Peering between the two VNets.

Tip

Wait until the VNet Peering status is Connected before continuing.

Modify the `privatelink.api.azureml.ms` DNS zone to add a link to the VNet for the compute cluster. This zone is created by your Azure Machine Learning workspace when it uses a private endpoint to participate in a VNet.

Add a new virtual network link to the DNS zone. You can do this multiple ways:

- From the Azure portal, navigate to the DNS zone and select Virtual network links. Then select + Add and select the VNet that you created for your compute clusters.

- From the Azure CLI, use the `az network private-dns link vnet create` command. For more information, see az network private-dns link vnet create.

- From Azure PowerShell, use the `New-AzPrivateDnsVirtualNetworkLink` command. For more information, see New-AzPrivateDnsVirtualNetworkLink.

Repeat the previous step and sub-steps for the `privatelink.notebooks.azure.net` DNS zone.

Configure the following Azure resources to allow access from both VNets.

- The default storage account for the workspace.

- The Azure Container registry for the workspace.

- The Azure Key Vault for the workspace.

Tip

There are multiple ways that you might configure these services to allow access to the VNets. For example, you might create a private endpoint for each resource in both VNets. Or you might configure the resources to allow access from both VNets.

Create a compute cluster as you normally would when using a VNet, but select the VNet that you created for the compute cluster. If the VNet is in a different region, select that region when creating the compute cluster.

Warning

When setting the region, if it is a different region than your workspace or datastores you may see increased network latency and data transfer costs. The latency and costs can occur when creating the cluster, and when running jobs on it.
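The DNS zone links in this scenario can be scripted. The following is a minimal Python sketch that only builds the argument lists for the `az network private-dns link vnet create` command named earlier, one per zone. The function name, the default link name, and the choice to disable auto-registration are assumptions for illustration. The sketch itself doesn't call Azure.

```python
from typing import List

# The two private DNS zones named in the VNet peering scenario.
WORKSPACE_DNS_ZONES = (
    "privatelink.api.azureml.ms",
    "privatelink.notebooks.azure.net",
)


def dns_link_commands(resource_group: str, vnet_id: str,
                      link_name: str = "compute-vnet-link") -> List[List[str]]:
    """Build one `az network private-dns link vnet create` call per zone.

    resource_group is the group that holds the DNS zones; vnet_id is the
    resource ID of the VNet created for the compute cluster.
    """
    commands = []
    for zone in WORKSPACE_DNS_ZONES:
        commands.append([
            "az", "network", "private-dns", "link", "vnet", "create",
            "--resource-group", resource_group,
            "--zone-name", zone,
            "--name", link_name,  # hypothetical link name
            "--virtual-network", vnet_id,
            # Assumption: auto-registration isn't wanted for these zones.
            "--registration-enabled", "false",
        ])
    return commands
```

Each returned list could be passed to `subprocess.run(command, check=True)` on a machine where the Azure CLI is installed and signed in.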

Scenario: Private endpoint

Configure your workspace to use an Azure Virtual Network. For more information, see Secure your workspace resources.

Create a second Azure Virtual Network that will be used for your compute clusters. It can be in a different Azure region than the one used for your workspace.

Create a new private endpoint for your workspace in the VNet that will contain the compute cluster.

To add a new private endpoint using the Azure portal, select your workspace and then select Networking. Select Private endpoint connections, + Private endpoint and use the fields to create a new private endpoint.

- When selecting the Region, select the same region as your virtual network.

- When selecting Resource type, use Microsoft.MachineLearningServices/workspaces.

- Set the Resource to your workspace name.

- Set the Virtual network and Subnet to the VNet and subnet that you created for your compute clusters.

Finally, select Create to create the private endpoint.

To add a new private endpoint using the Azure CLI, use the `az network private-endpoint create` command. For an example of using this command, see Configure a private endpoint for Azure Machine Learning workspace.

Create a compute cluster as you normally would when using a VNet, but select the VNet that you created for the compute cluster. If the VNet is in a different region, select that region when creating the compute cluster.

Warning

When setting the region, if it is a different region than your workspace or datastores you may see increased network latency and data transfer costs. The latency and costs can occur when creating the cluster, and when running jobs on it.

Compute instance/cluster or serverless compute with no public IP

Important

This information is only valid when using an Azure Virtual Network. If you are using a managed virtual network, compute resources can't be deployed in your Azure Virtual Network. For information on using a managed virtual network, see managed compute with a managed network.

Important

If you have been using compute instances or compute clusters configured for no public IP without opting-in to the preview, you will need to delete and recreate them after January 20, 2023 (when the feature is generally available).

If you were previously using the preview of no public IP, you may also need to modify what traffic you allow inbound and outbound, as the requirements have changed for general availability:

- Outbound requirements: Two additional outbound rules are required. They're used only for the management of compute instances and clusters, and the destinations of these service tags are owned by Microsoft:

  - `AzureMachineLearning` service tag on UDP port 5831.

  - `BatchNodeManagement` service tag on TCP port 443.

The following configurations are in addition to those listed in the Prerequisites section, and are specific to creating compute instances/clusters configured for no public IP. They also apply to serverless compute:

You must use a workspace private endpoint for the compute resource to communicate with Azure Machine Learning services from the VNet. For more information, see Configure a private endpoint for Azure Machine Learning workspace.

In your VNet, allow outbound traffic to the following service tags or fully qualified domain names (FQDN):

| Service tag | Protocol | Port | Notes |
|---|---|---|---|
| `AzureMachineLearning` | TCP | 443/8787/18881 | Communication with the Azure Machine Learning service. |
| `AzureMachineLearning` | UDP | 5831 | Communication with the Azure Machine Learning service. |
| `BatchNodeManagement.<region>` | ANY | 443 | Replace `<region>` with the Azure region that contains your Azure Machine Learning workspace. Communication with Azure Batch. Compute instance and compute cluster are implemented using the Azure Batch service. |
| `Storage.<region>` | TCP | 443 | Replace `<region>` with the Azure region that contains your Azure Machine Learning workspace. This service tag is used to communicate with the Azure Storage account used by Azure Batch. |

Important

The outbound access to `Storage.<region>` could potentially be used to exfiltrate data from your workspace. By using a Service Endpoint Policy, you can mitigate this vulnerability. For more information, see the Azure Machine Learning data exfiltration prevention article.

| FQDN | Protocol | Port | Notes |
|---|---|---|---|
| `<region>.tundra.azureml.ms` | UDP | 5831 | Replace `<region>` with the Azure region that contains your Azure Machine Learning workspace. |
| `graph.windows.net` | TCP | 443 | Communication with the Microsoft Graph API. |
| `*.instances.azureml.ms` | TCP | 443/8787/18881 | Communication with Azure Machine Learning. |
| `*.<region>.batch.azure.com` | ANY | 443 | Replace `<region>` with the Azure region that contains your Azure Machine Learning workspace. Communication with Azure Batch. |
| `*.<region>.service.batch.azure.com` | ANY | 443 | Replace `<region>` with the Azure region that contains your Azure Machine Learning workspace. Communication with Azure Batch. |
| `*.blob.core.windows.net` | TCP | 443 | Communication with Azure Blob storage. |
| `*.queue.core.windows.net` | TCP | 443 | Communication with Azure Queue storage. |
| `*.table.core.windows.net` | TCP | 443 | Communication with Azure Table storage. |

By default, a compute instance/cluster configured for no public IP doesn't have outbound access to the internet. If you can access the internet from it, that's because Azure default outbound access is in effect and an NSG allows outbound traffic to the internet. However, we don't recommend using the default outbound access. If you need outbound access to the internet, we recommend using either a firewall and outbound rules or a NAT gateway and network security groups to allow outbound traffic instead.

For more information on the outbound traffic that is used by Azure Machine Learning, see the following articles:

For more information on service tags that can be used with Azure Firewall, see the Virtual network service tags article.
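When you review an NSG or firewall configuration against the service tag requirements listed above, a small checker can help spot gaps. The following Python sketch is only an illustration: the rule representation (a tuple of service tag, protocol, and port) and both function names are assumptions, and it doesn't read rules from Azure.

```python
from typing import Iterable, List, Set, Tuple

Rule = Tuple[str, str, int]  # (service tag, protocol, port)


def required_outbound_rules(region: str) -> Set[Rule]:
    """Service tag rules for no public IP, with <region> filled in."""
    rules = {("AzureMachineLearning", "TCP", port) for port in (443, 8787, 18881)}
    rules.add(("AzureMachineLearning", "UDP", 5831))
    rules.add((f"BatchNodeManagement.{region}", "ANY", 443))
    rules.add((f"Storage.{region}", "TCP", 443))
    return rules


def missing_outbound_rules(region: str, allowed: Iterable[Rule]) -> List[Rule]:
    """Return the required rules that no allowed rule covers.

    An allowed rule with protocol "ANY" covers any required protocol;
    a required "ANY" rule is covered only by an allowed "ANY" rule.
    """
    allowed_set = set(allowed)
    missing = []
    for tag, protocol, port in sorted(required_outbound_rules(region)):
        if (tag, protocol, port) in allowed_set or (tag, "ANY", port) in allowed_set:
            continue
        missing.append((tag, protocol, port))
    return missing
```

An empty result means every required service tag, protocol, and port combination is covered by the rules you passed in.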

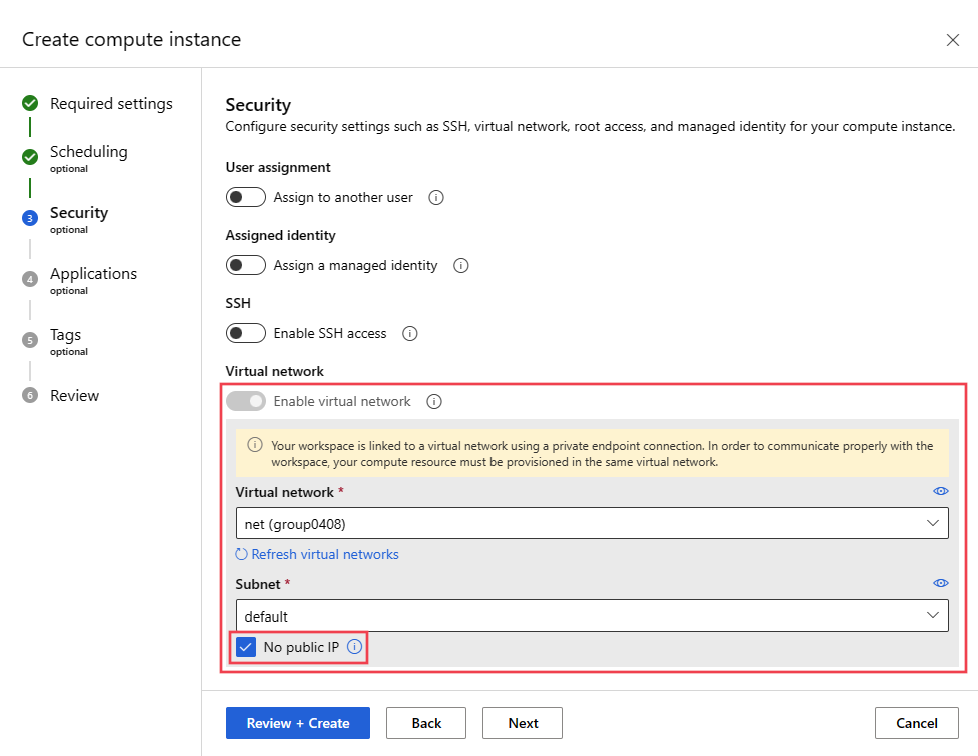

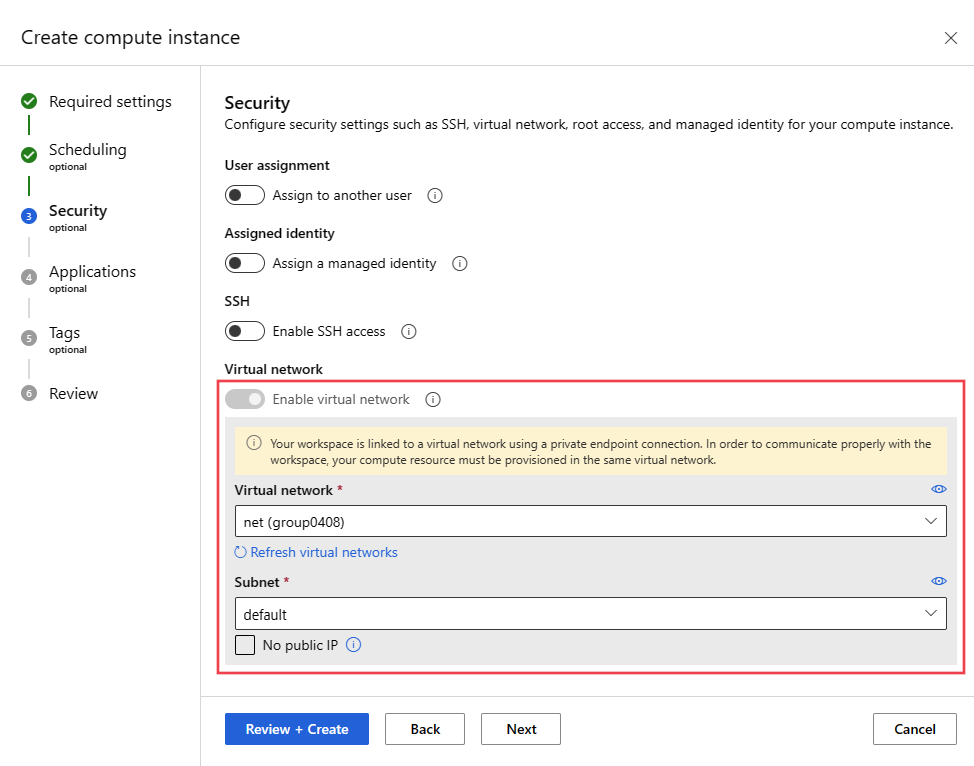

Use the following information to create a compute instance or cluster with no public IP address:

In the `az ml compute create` command, replace the following values:

- `rg`: The resource group that the compute will be created in.

- `ws`: The Azure Machine Learning workspace name.

- `yourvnet`: The Azure Virtual Network.

- `yoursubnet`: The subnet to use for the compute.

- `AmlCompute` or `ComputeInstance`: Specifying `AmlCompute` creates a compute cluster. `ComputeInstance` creates a compute instance.

# create a compute cluster with no public IP

az ml compute create --name cpu-cluster --resource-group rg --workspace-name ws --vnet-name yourvnet --subnet yoursubnet --type AmlCompute --set enable_node_public_ip=False

# create a compute instance with no public IP

az ml compute create --name myci --resource-group rg --workspace-name ws --vnet-name yourvnet --subnet yoursubnet --type ComputeInstance --set enable_node_public_ip=False

Use the following information to configure serverless compute nodes with no public IP address in the VNet for a given workspace:

Important

If you are using a no public IP serverless compute and the workspace uses an IP allow list, you must add an outbound private endpoint to the workspace. The serverless compute needs to communicate with the workspace, but when configured for no public IP it uses the Azure Default Outbound for internet access. The public IP for this outbound is dynamic, and can't be added to the IP allow list. Creating an outbound private endpoint to the workspace allows traffic from the serverless compute bound for the workspace to bypass the IP allow list.

Create a workspace:

az ml workspace create -n <workspace-name> -g <resource-group-name> --file serverlesscomputevnetsettings.yml

name: testserverlesswithnpip
location: eastus
public_network_access: Disabled
serverless_compute:
  custom_subnet: /subscriptions/<sub id>/resourceGroups/<resource group>/providers/Microsoft.Network/virtualNetworks/<vnet name>/subnets/<subnet name>
  no_public_ip: true

Update workspace:

az ml workspace update -n <workspace-name> -g <resource-group-name> --file serverlesscomputevnetsettings.yml

serverless_compute:
  custom_subnet: /subscriptions/<sub id>/resourceGroups/<resource group>/providers/Microsoft.Network/virtualNetworks/<vnet name>/subnets/<subnet name>
  no_public_ip: true
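The `custom_subnet` value is a full Azure resource ID, which is easy to mistype. The following Python sketch shows one way to assemble the ID and the `serverless_compute` block from their parts. Both helper names are hypothetical, and the output mirrors only the keys shown in the YAML files above.

```python
def subnet_resource_id(subscription_id: str, resource_group: str,
                       vnet_name: str, subnet_name: str) -> str:
    """Build the subnet resource ID used for `custom_subnet`."""
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Network/virtualNetworks/{vnet_name}"
        f"/subnets/{subnet_name}"
    )


def serverless_compute_yaml(subnet_id: str, no_public_ip: bool) -> str:
    """Render the serverless_compute block with two-space nesting."""
    return (
        "serverless_compute:\n"
        f"  custom_subnet: {subnet_id}\n"
        # YAML booleans are lowercase, unlike Python's True/False.
        f"  no_public_ip: {str(no_public_ip).lower()}\n"
    )
```

The rendered text can be written to the file passed with `--file` to `az ml workspace update`.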

Compute instance/cluster or serverless compute with public IP

Important

This information is only valid when using an Azure Virtual Network. If you are using a managed virtual network, compute resources can't be deployed in your Azure Virtual Network. For information on using a managed virtual network, see managed compute with a managed network.

The following configurations are in addition to those listed in the Prerequisites section, and are specific to creating compute instances/clusters that have a public IP. They also apply to serverless compute:

If you put multiple compute instances/clusters in one virtual network, you might need to request a quota increase for one or more of your resources. The Machine Learning compute instance or cluster automatically allocates networking resources in the resource group that contains the virtual network. For each compute instance or cluster, the service allocates the following resources:

A network security group (NSG) is automatically created. This NSG allows inbound TCP traffic on port 44224 from the `AzureMachineLearning` service tag.

Important

Compute instance and compute cluster automatically create an NSG with the required rules.

If you have another NSG at the subnet level, the rules in the subnet level NSG mustn't conflict with the rules in the automatically created NSG.

To learn how the NSGs filter your network traffic, see How network security groups filter network traffic.

One load balancer

For compute clusters, these resources are deleted every time the cluster scales down to 0 nodes and created when scaling up.

For a compute instance, these resources are kept until the instance is deleted. Stopping the instance doesn't remove the resources.

Important

These resources are limited by the subscription's resource quotas. If the resource group that contains the virtual network is locked, deletion of the compute cluster/instance fails. The load balancer can't be deleted until the compute cluster/instance is deleted. Also make sure that no Azure Policy assignment prohibits the creation of network security groups.

In your VNet, allow inbound TCP traffic on port 44224 from the `AzureMachineLearning` service tag.

Important

The compute instance/cluster is dynamically assigned an IP address when it is created. Since the address is not known before creation, and inbound access is required as part of the creation process, you cannot statically assign it on your firewall. Instead, if you are using a firewall with the VNet you must create a user-defined route to allow this inbound traffic.

In your VNet, allow outbound traffic to the following service tags:

| Service tag | Protocol | Port | Notes |
|---|---|---|---|
| `AzureMachineLearning` | TCP | 443/8787/18881 | Communication with the Azure Machine Learning service. |
| `AzureMachineLearning` | UDP | 5831 | Communication with the Azure Machine Learning service. |
| `BatchNodeManagement.<region>` | ANY | 443 | Replace `<region>` with the Azure region that contains your Azure Machine Learning workspace. Communication with Azure Batch. Compute instance and compute cluster are implemented using the Azure Batch service. |
| `Storage.<region>` | TCP | 443 | Replace `<region>` with the Azure region that contains your Azure Machine Learning workspace. This service tag is used to communicate with the Azure Storage account used by Azure Batch. |

Important

The outbound access to `Storage.<region>` could potentially be used to exfiltrate data from your workspace. By using a Service Endpoint Policy, you can mitigate this vulnerability. For more information, see the Azure Machine Learning data exfiltration prevention article.

| FQDN | Protocol | Port | Notes |
|---|---|---|---|
| `<region>.tundra.azureml.ms` | UDP | 5831 | Replace `<region>` with the Azure region that contains your Azure Machine Learning workspace. |
| `graph.windows.net` | TCP | 443 | Communication with the Microsoft Graph API. |
| `*.instances.azureml.ms` | TCP | 443/8787/18881 | Communication with Azure Machine Learning. |
| `*.<region>.batch.azure.com` | ANY | 443 | Replace `<region>` with the Azure region that contains your Azure Machine Learning workspace. Communication with Azure Batch. |
| `*.<region>.service.batch.azure.com` | ANY | 443 | Replace `<region>` with the Azure region that contains your Azure Machine Learning workspace. Communication with Azure Batch. |
| `*.blob.core.windows.net` | TCP | 443 | Communication with Azure Blob storage. |
| `*.queue.core.windows.net` | TCP | 443 | Communication with Azure Queue storage. |
| `*.table.core.windows.net` | TCP | 443 | Communication with Azure Table storage. |

Use the following information to create a compute instance or cluster with a public IP address in the VNet:

In the `az ml compute create` command, replace the following values:

- `rg`: The resource group that the compute will be created in.

- `ws`: The Azure Machine Learning workspace name.

- `yourvnet`: The Azure Virtual Network.

- `yoursubnet`: The subnet to use for the compute.

- `AmlCompute` or `ComputeInstance`: Specifying `AmlCompute` creates a compute cluster. `ComputeInstance` creates a compute instance.

# create a compute cluster with a public IP

az ml compute create --name cpu-cluster --resource-group rg --workspace-name ws --vnet-name yourvnet --subnet yoursubnet --type AmlCompute

# create a compute instance with a public IP

az ml compute create --name myci --resource-group rg --workspace-name ws --vnet-name yourvnet --subnet yoursubnet --type ComputeInstance

Use the following information to configure serverless compute nodes with a public IP address in the VNet for a given workspace:

Create a workspace:

az ml workspace create -n <workspace-name> -g <resource-group-name> --file serverlesscomputevnetsettings.yml

name: testserverlesswithvnet
location: eastus
serverless_compute:
  custom_subnet: /subscriptions/<sub id>/resourceGroups/<resource group>/providers/Microsoft.Network/virtualNetworks/<vnet name>/subnets/<subnet name>
  no_public_ip: false

Update workspace:

az ml workspace update -n <workspace-name> -g <resource-group-name> --file serverlesscomputevnetsettings.yml

serverless_compute:
  custom_subnet: /subscriptions/<sub id>/resourceGroups/<resource group>/providers/Microsoft.Network/virtualNetworks/<vnet name>/subnets/<subnet name>
  no_public_ip: false

Azure Databricks

- The virtual network must be in the same subscription and region as the Azure Machine Learning workspace.

- If the Azure Storage Account(s) for the workspace are also secured in a virtual network, they must be in the same virtual network as the Azure Databricks cluster.

- In addition to the databricks-private and databricks-public subnets used by Azure Databricks, the default subnet created for the virtual network is also required.

- Azure Databricks doesn't use a private endpoint to communicate with the virtual network.

For specific information on using Azure Databricks with a virtual network, see Deploy Azure Databricks in your Azure Virtual Network.

Virtual machine or HDInsight cluster

In this section, you learn how to use a virtual machine or Azure HDInsight cluster in a virtual network with your workspace.

Create the VM or HDInsight cluster

Important

Azure Machine Learning supports only virtual machines that are running Ubuntu.

Create a VM or HDInsight cluster by using the Azure portal or the Azure CLI, and put the cluster in an Azure virtual network. For more information, see the following articles:

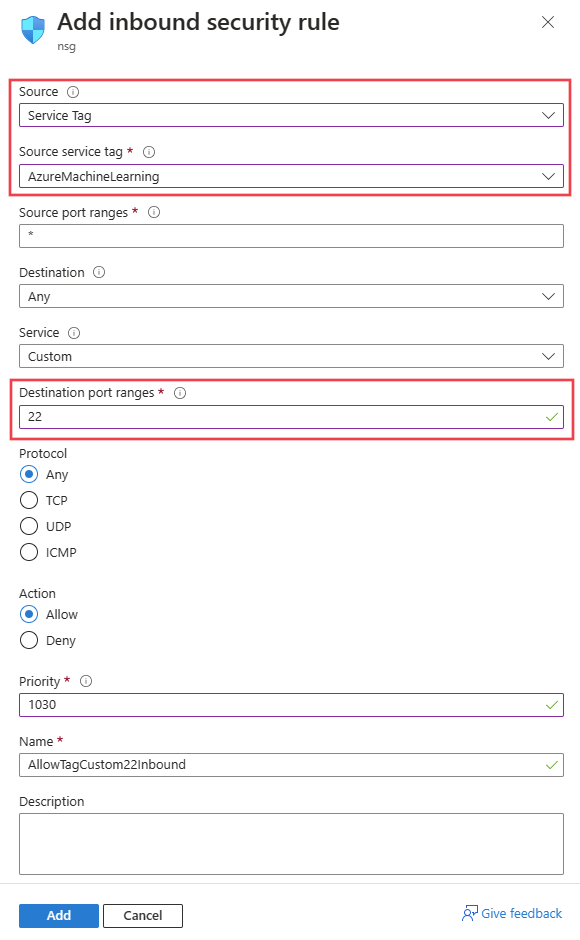

Configure network ports

To allow Azure Machine Learning to communicate with the SSH port on the VM or cluster, configure a source entry for the network security group. The SSH port is usually port 22. To allow traffic from this source, do the following actions:

In the Source drop-down list, select Service Tag.

In the Source service tag drop-down list, select AzureMachineLearning.

In the Source port ranges drop-down list, select *.

In the Destination drop-down list, select Any.

In the Destination port ranges drop-down list, select 22.

Under Protocol, select Any.

Under Action, select Allow.
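The same inbound rule can also be expressed on the command line. The following Python sketch builds an argument list for the Azure CLI `az network nsg rule create` command that mirrors the portal selections above. The function name, the rule name, and the priority value are hypothetical, so pick values that fit your NSG. The sketch only constructs the command and doesn't run it.

```python
from typing import List


def ssh_inbound_rule_command(resource_group: str, nsg_name: str,
                             priority: int = 1000,
                             rule_name: str = "AllowAzureMLSsh") -> List[str]:
    """Build an `az network nsg rule create` call for the SSH rule."""
    return [
        "az", "network", "nsg", "rule", "create",
        "--resource-group", resource_group,
        "--nsg-name", nsg_name,
        "--name", rule_name,          # hypothetical rule name
        "--priority", str(priority),  # hypothetical priority
        "--direction", "Inbound",
        "--access", "Allow",                                   # Action: Allow
        "--protocol", "*",                                     # Protocol: Any
        "--source-address-prefixes", "AzureMachineLearning",   # Source service tag
        "--source-port-ranges", "*",
        "--destination-address-prefixes", "*",                 # Destination: Any
        "--destination-port-ranges", "22",                     # SSH port
    ]
```

If the VM or cluster listens for SSH on a different port, change the destination port range to match.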

Keep the default outbound rules for the network security group. For more information, see the default security rules in Security groups.

If you don't want to use the default outbound rules and you do want to limit the outbound access of your virtual network, see the required public internet access section.

Attach the VM or HDInsight cluster

Attach the VM or HDInsight cluster to your Azure Machine Learning workspace. For more information, see Manage compute resources for model training and deployment in studio.

Required public internet access to train models

Important

While previous sections of this article describe configurations required to create compute resources, the configuration information in this section is required to use these resources to train models.

Azure Machine Learning requires both inbound and outbound access to the public internet. The following tables provide an overview of the required access and what purpose it serves. For service tags that end in .region, replace region with the Azure region that contains your workspace. For example, Storage.westus:

Tip

The required tab lists the required inbound and outbound configuration. The situational tab lists optional inbound and outbound configurations required by specific configurations you might want to enable.

| Direction | Protocol & ports | Service tag | Purpose |
|---|---|---|---|
| Outbound | TCP: 80, 443 | `AzureActiveDirectory` | Authentication using Microsoft Entra ID. |
| Outbound | TCP: 443, 18881; UDP: 5831 | `AzureMachineLearning` | Using Azure Machine Learning services. Python intellisense in notebooks uses port 18881. Creating, updating, and deleting an Azure Machine Learning compute instance uses port 5831. |
| Outbound | ANY: 443 | `BatchNodeManagement.region` | Communication with Azure Batch back-end for Azure Machine Learning compute instances/clusters. |
| Outbound | TCP: 443 | `AzureResourceManager` | Creation of Azure resources with Azure Machine Learning, Azure CLI, and Azure Machine Learning SDK. |
| Outbound | TCP: 443 | `Storage.region` | Access data stored in the Azure Storage Account for compute cluster and compute instance. For information on preventing data exfiltration over this outbound, see Data exfiltration protection. |
| Outbound | TCP: 443 | `AzureFrontDoor.FrontEnd` (not needed in Microsoft Azure operated by 21Vianet) | Global entry point for Azure Machine Learning studio. Store images and environments for AutoML. For information on preventing data exfiltration over this outbound, see Data exfiltration protection. |
| Outbound | TCP: 443 | `MicrosoftContainerRegistry.region` (this tag has a dependency on the `AzureFrontDoor.FirstParty` tag) | Access docker images provided by Microsoft. Setup of the Azure Machine Learning router for Azure Kubernetes Service. |

Tip

If you need the IP addresses instead of service tags, use one of the following options:

- Download a list from Azure IP Ranges and Service Tags.

- Use the Azure CLI az network list-service-tags command.

- Use the Azure PowerShell Get-AzNetworkServiceTag command.

The IP addresses may change periodically.
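If you download the service tag list, you can pull out the prefixes for a single tag with a few lines of Python. This sketch assumes the layout of the downloadable JSON file, a top-level `values` list whose entries carry a `name` and a `properties.addressPrefixes` list. The function name is hypothetical, and the sample data uses documentation address ranges, not real Azure addresses.

```python
import json
from typing import List


def address_prefixes(service_tags_json: str, tag_name: str) -> List[str]:
    """Return the address prefixes listed for one service tag.

    The tag name is compared case-insensitively. An unknown tag
    yields an empty list.
    """
    document = json.loads(service_tags_json)
    for entry in document.get("values", []):
        if entry.get("name", "").lower() == tag_name.lower():
            return list(entry.get("properties", {}).get("addressPrefixes", []))
    return []


# Illustrative data only: these prefixes are reserved documentation
# ranges, not addresses used by Azure.
SAMPLE = json.dumps({
    "values": [
        {"name": "AzureMachineLearning",
         "properties": {"addressPrefixes": ["192.0.2.0/28", "2001:db8::/64"]}},
        {"name": "Storage.WestUS",
         "properties": {"addressPrefixes": ["198.51.100.0/24"]}},
    ]
})
```

Because the addresses change periodically, rerun any such extraction against a fresh download before you update firewall rules.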

You may also need to allow outbound traffic to Visual Studio Code and non-Microsoft sites for the installation of packages required by your machine learning project. The following table lists commonly used repositories for machine learning:

| Host name | Purpose |
|---|---|
| `anaconda.com`, `*.anaconda.com` | Used to install default packages. |
| `*.anaconda.org` | Used to get repo data. |
| `pypi.org` | Used to list dependencies from the default index, if any, and the index isn't overwritten by user settings. If the index is overwritten, you must also allow `*.pythonhosted.org`. |
| `cloud.r-project.org` | Used when installing CRAN packages for R development. |
| `*.pytorch.org` | Used by some examples based on PyTorch. |
| `*.tensorflow.org` | Used by some examples based on TensorFlow. |
| `code.visualstudio.com` | Required to download and install Visual Studio Code desktop. This isn't required for Visual Studio Code Web. |
| `update.code.visualstudio.com`, `*.vo.msecnd.net` | Used to retrieve Visual Studio Code server bits that are installed on the compute instance through a setup script. |
| `marketplace.visualstudio.com`, `vscode.blob.core.windows.net`, `*.gallerycdn.vsassets.io` | Required to download and install Visual Studio Code extensions. These hosts enable the remote connection to Compute Instances provided by the Azure ML extension for Visual Studio Code. For more information, see Connect to an Azure Machine Learning compute instance in Visual Studio Code. |
| `raw.githubusercontent.com/microsoft/vscode-tools-for-ai/master/azureml_remote_websocket_server/*` | Used to retrieve websocket server bits, which are installed on the compute instance. The websocket server is used to transmit requests from Visual Studio Code client (desktop application) to Visual Studio Code server running on the compute instance. |
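To see whether a host that a package install tries to reach is covered by the entries above, you can test it against the wildcard patterns. This is a minimal Python sketch using the standard `fnmatch` module. The function name is hypothetical, the pattern list omits URL paths, and a real firewall might interpret wildcards differently.

```python
from fnmatch import fnmatchcase
from typing import Iterable

# Host patterns from the table above (URL paths omitted).
ALLOWED_HOSTS = (
    "anaconda.com", "*.anaconda.com", "*.anaconda.org", "pypi.org",
    "cloud.r-project.org", "*.pytorch.org", "*.tensorflow.org",
    "code.visualstudio.com", "update.code.visualstudio.com",
    "*.vo.msecnd.net", "marketplace.visualstudio.com",
    "vscode.blob.core.windows.net", "*.gallerycdn.vsassets.io",
    "raw.githubusercontent.com",
)


def is_allowed(host: str, patterns: Iterable[str] = ALLOWED_HOSTS) -> bool:
    """Case-insensitive match of a host name against wildcard patterns."""
    host = host.lower().rstrip(".")
    return any(fnmatchcase(host, pattern.lower()) for pattern in patterns)
```

With this list, `files.pythonhosted.org` isn't matched, which reflects the table's note that `*.pythonhosted.org` must be allowed separately when the index is overwritten.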

Note

When you use the Azure Machine Learning VS Code extension, the remote compute instance requires access to public repositories to install the packages required by the extension. If the compute instance requires a proxy to access these public repositories or the internet, set and export the `HTTP_PROXY` and `HTTPS_PROXY` environment variables in the `~/.bashrc` file of the compute instance. This process can be automated at provisioning time by using a custom script.
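The proxy settings can be generated rather than typed by hand. The following Python sketch produces the two `export` lines for a given proxy URL, quoting the value for the shell. The function name and the proxy address in the usage example are hypothetical.

```python
import shlex


def proxy_exports(proxy_url: str) -> str:
    """Return the lines to append to ~/.bashrc for a given proxy URL."""
    # shlex.quote leaves simple URLs unchanged and quotes anything
    # containing characters the shell would otherwise interpret.
    quoted = shlex.quote(proxy_url)
    return (
        f"export HTTP_PROXY={quoted}\n"
        f"export HTTPS_PROXY={quoted}\n"
    )
```

For example, `proxy_exports("http://proxy.contoso.internal:3128")` returns two lines that a setup script could append to the `~/.bashrc` file on the compute instance.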

When using Azure Kubernetes Service (AKS) with Azure Machine Learning, allow the following traffic to the AKS VNet:

- General inbound/outbound requirements for AKS as described in the Restrict egress traffic in Azure Kubernetes Service article.

- Outbound to mcr.microsoft.com.

- When deploying a model to an AKS cluster, use the guidance in the Deploy ML models to Azure Kubernetes Service article.

For information on using a firewall solution, see Use a firewall with Azure Machine Learning.

Next steps

This article is part of a series on securing an Azure Machine Learning workflow. See the other articles in this series: