Multimodal Large Language Models (LLMs), which can process and interpret diverse forms of data input, extend what language-only systems can do. Among these data types, images are important for many real-world applications: incorporating image data into AI systems adds an essential layer of visual understanding.

In this article, you'll learn:

- How to use image data in prompt flow.

- How to use the built-in GPT-4V tool to analyze image inputs.

- How to build a chatbot that can process image and text inputs.

- How to create a batch run using image data.

- How to consume an online endpoint with image data.

Important

Prompt flow image support is currently in public preview. This preview is provided without a service-level agreement, and is not recommended for production workloads. Certain features might not be supported or might have constrained capabilities. For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

Image type in prompt flow

Prompt flow inputs and outputs support Image as a new data type.

To use image data on the prompt flow authoring page:

Add a flow input and select Image as its data type. You can upload an image file, drag and drop one, paste an image from the clipboard, or specify an image URL or a relative image path in the flow folder.

Preview the image. If the image isn't displayed correctly, delete the image and add it again.

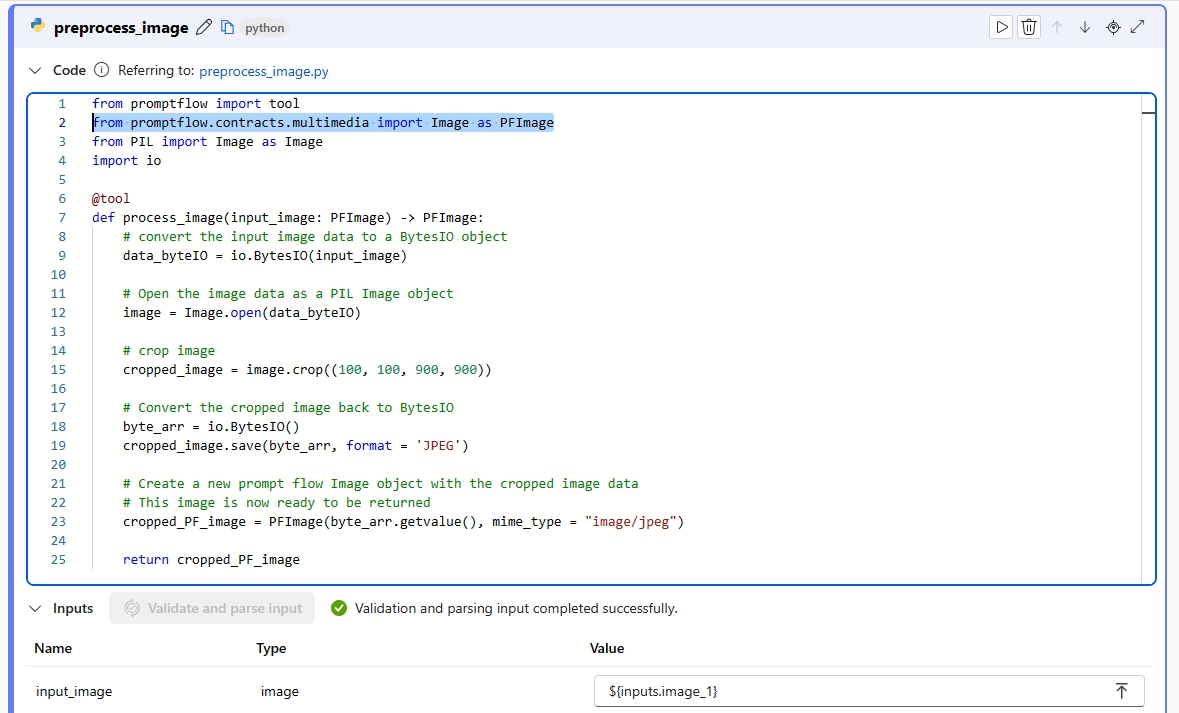

You might want to preprocess the image with the Python tool before feeding it to the LLM. For example, you can resize or crop the image to a smaller size.

Important

To process images with a Python function, use the `Image` class, which you import from the `promptflow.contracts.multimedia` package. The `Image` class represents the Image type within prompt flow. It's designed to work with image data in byte format, which is convenient when you need to handle or manipulate the image data directly.

To return the processed image data, wrap it in the `Image` class. Create an `Image` object by providing the image data in bytes and the MIME type `mime_type`. The MIME type lets the system understand the format of the image data; it can be `*` for an unknown type.

Run the Python node and check the output. In this example, the Python function returns the processed `Image` object. Select the image output to preview the image.
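As a minimal sketch of this pattern, the Python tool below receives an image, leaves the bytes unchanged (a real tool would resize or crop them at that point), and returns them wrapped in an `Image` object whose MIME type is sniffed from the file signature. The names `wrap_image` and `detect_mime_type` are hypothetical, using `image/*` as the unknown-type value is an assumption based on the `*` note above, importing `tool` from `promptflow.core` assumes a recent promptflow release, and the `try`/`except` stand-ins exist only so the sketch runs where prompt flow isn't installed.

```python
try:
    # Real prompt flow imports (assumes a release that ships promptflow.core).
    from promptflow.core import tool
    from promptflow.contracts.multimedia import Image
except ImportError:
    # Minimal stand-ins so the sketch runs outside prompt flow; not the real classes.
    def tool(func):
        return func

    class Image(bytes):
        def __new__(cls, value: bytes, mime_type: str = "image/*"):
            obj = super().__new__(cls, value)
            obj.mime_type = mime_type
            return obj


# File signatures (magic numbers) for a few common image formats.
_SIGNATURES = {
    b"\x89PNG\r\n\x1a\n": "image/png",
    b"\xff\xd8\xff": "image/jpeg",
    b"GIF87a": "image/gif",
    b"GIF89a": "image/gif",
}


def detect_mime_type(data: bytes) -> str:
    """Return the MIME type implied by the leading bytes, or image/* if unknown."""
    for signature, mime_type in _SIGNATURES.items():
        if data.startswith(signature):
            return mime_type
    return "image/*"


@tool
def wrap_image(input_image: Image) -> Image:
    # An Image input is bytes-like, so bytes() yields the raw image data.
    data = bytes(input_image)
    # Real preprocessing (resize, crop, ...) would transform `data` here.
    return Image(data, mime_type=detect_mime_type(data))
```

Sniffing the signature keeps the sketch free of imaging libraries; a tool that actually resizes or crops would typically use one such as Pillow and pass the MIME type of the format it re-encodes to.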

If the `Image` object from the Python node is set as the flow output, you can also preview the image on the flow output page.

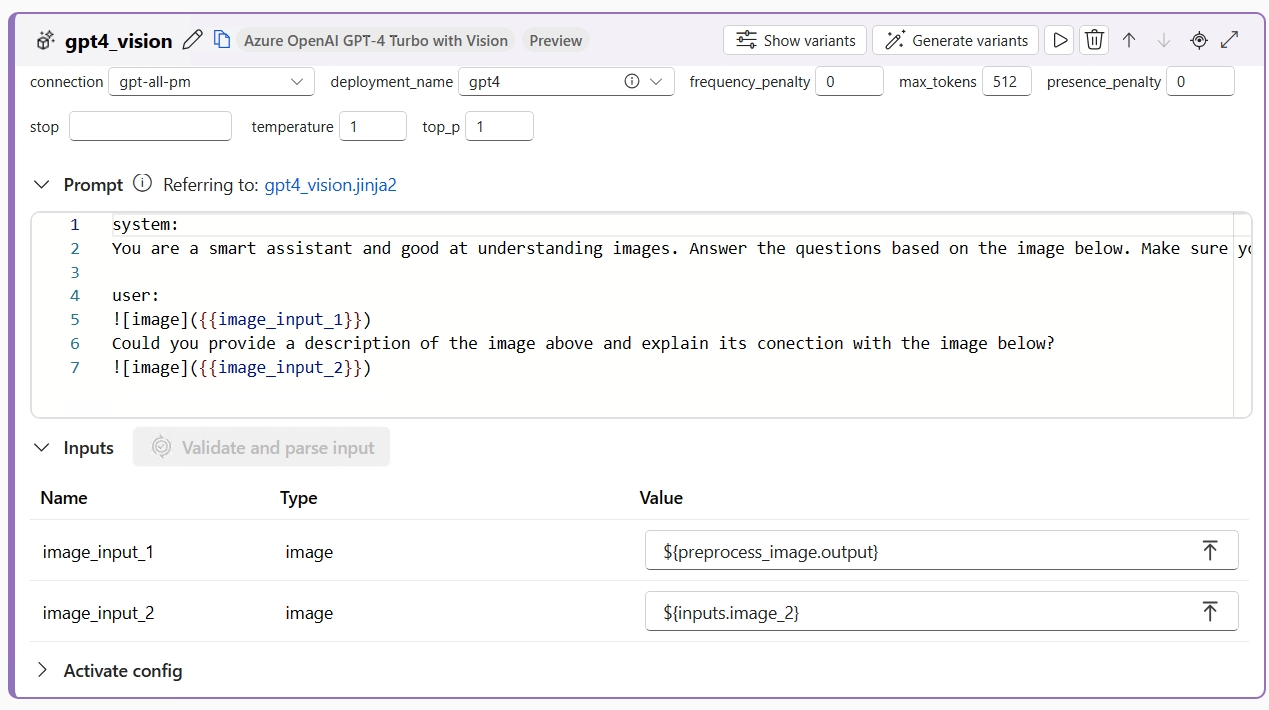

Use GPT-4V tool

The Azure OpenAI GPT-4 Turbo with Vision tool and the OpenAI GPT-4V tool are built-in prompt flow tools that use the OpenAI GPT-4V model to answer questions based on input images. You can find these tools by selecting More tool on the flow authoring page.

Add the Azure OpenAI GPT-4 Turbo with Vision tool to the flow. Make sure you have an Azure OpenAI connection with access to GPT-4 vision-preview models.

The Jinja template for composing prompts in the GPT-4V tool follows a similar structure to the chat API in the LLM tool. To represent an image input within your prompt, use the syntax `![image]({{INPUT NAME}})`. Image inputs can be passed in the `user`, `system`, and `assistant` messages.

Once you've composed the prompt, select the Validate and parse input button to parse the input placeholders. An image input represented by `![image]({{INPUT NAME}})` is parsed as an input of image type named INPUT NAME.
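To make the placeholder convention concrete, the sketch below extracts image input names from a prompt template with a regular expression, roughly what Validate and parse input reports for image placeholders. It assumes the `![image]({{INPUT NAME}})` syntax; it's an illustration, not prompt flow's actual parser, and `find_image_inputs` and the `input_image` input name are hypothetical.

```python
import re

# Matches the assumed image placeholder ![image]({{INPUT NAME}}) and captures
# the text between the double braces (surrounding spaces trimmed) as the name.
_IMAGE_PLACEHOLDER = re.compile(r"!\[image\]\(\{\{\s*([^{}]+?)\s*\}\}\)")


def find_image_inputs(template: str) -> list[str]:
    """Return the distinct image input names in order of first appearance."""
    names: list[str] = []
    for match in _IMAGE_PLACEHOLDER.finditer(template):
        name = match.group(1)
        if name not in names:
            names.append(name)
    return names


PROMPT = """# system:
You are an assistant that answers questions about images.

# user:
What is shown in this image?
![image]({{input_image}})
"""

print(find_image_inputs(PROMPT))  # ['input_image']
```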

You can assign a value to the image input in the following ways:

- Reference a flow input of Image type.

- Reference another node's output of Image type.

- Upload, drag and drop, or paste an image, or specify an image URL or a relative image path.

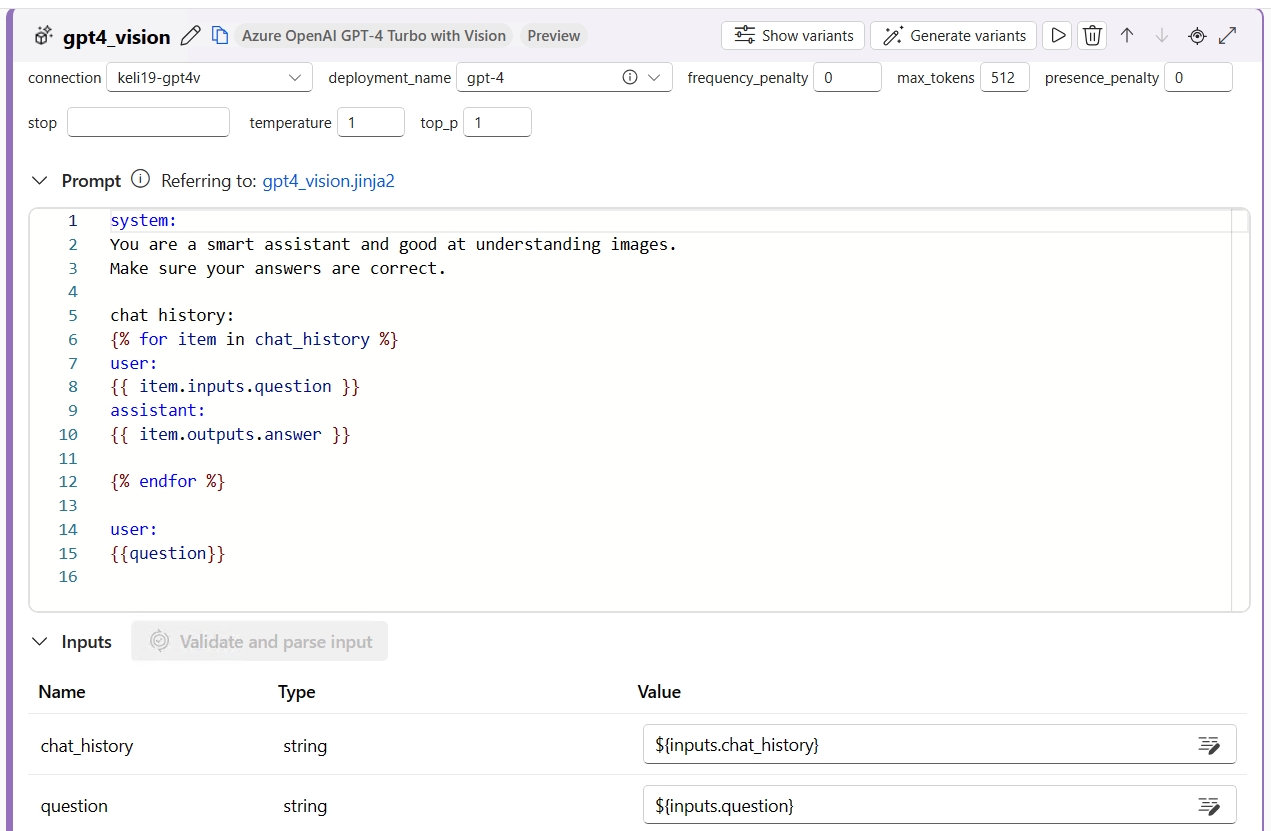

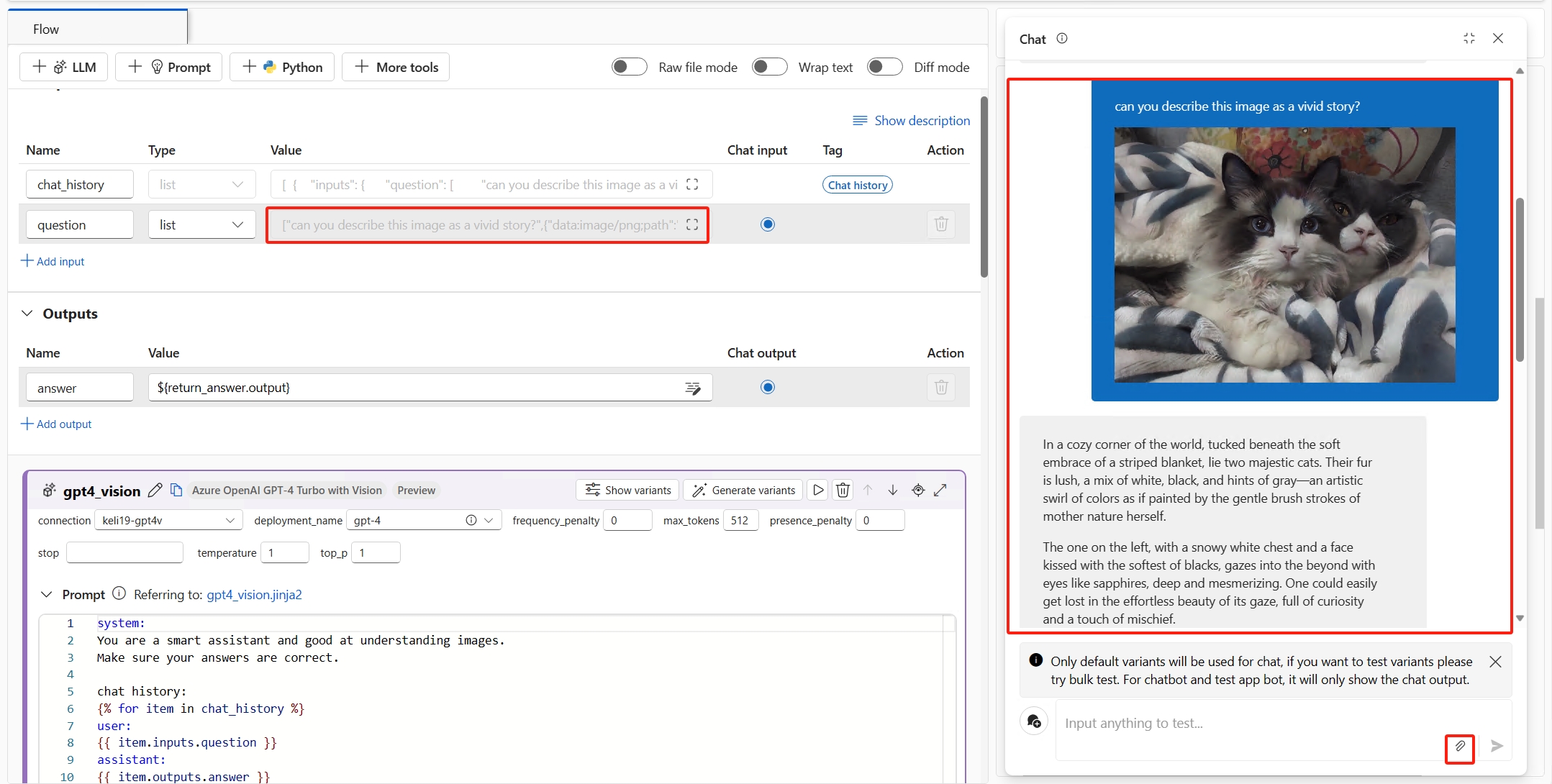

Build a chatbot to process images

In this section, you'll learn how to build a chatbot that can process image and text inputs.

Assume you want to build a chatbot that can answer any questions about the image and text together. You can achieve this by following the steps below:

Create a chat flow.

Add a chat input and select list as its data type. In the chat box, users can enter a mixed sequence of texts and images, and the prompt flow service transforms that input into a list.

-

In this example,

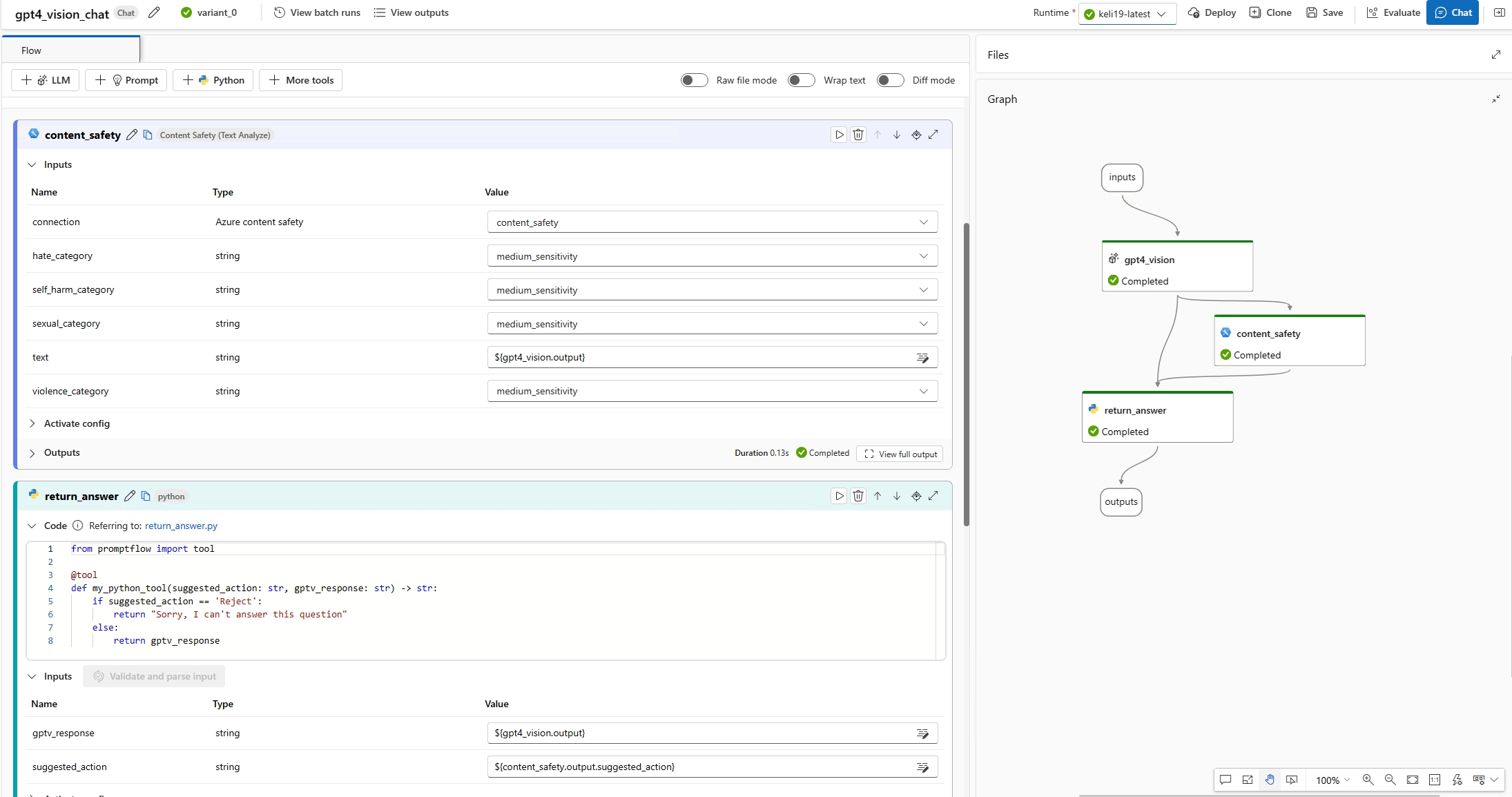

{{question}}refers to the chat input, which is a list of texts and images. (Optional) You can add any custom logic to the flow to process the GPT-4V output. For example, you can add content safety tool to detect if the answer contains any inappropriate content, and return a final answer to the user.

Now you can test the chatbot. Open the chat window and enter questions along with images. The chatbot answers based on both the image and text inputs. The chat input value is automatically backfilled from the input in the chat window, and the texts and images you enter in the chat box are translated into a list of texts and images.

Note

To enable your chatbot to respond with rich text and images, set the chat output to the list type. The list should consist of strings (for text) and prompt flow `Image` objects (for images), in whatever order you want them displayed.
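A minimal sketch of such a rich chat output, assuming a Python node placed after the GPT-4V tool: the hypothetical `compose_rich_answer` tool returns a list that interleaves strings and `Image` objects in the order the chat window should show them. The input names are illustrative, importing `tool` from `promptflow.core` assumes a recent promptflow release, and the fallback stand-ins only let the sketch run without prompt flow installed.

```python
try:
    # Real prompt flow imports (assumes a release that ships promptflow.core).
    from promptflow.core import tool
    from promptflow.contracts.multimedia import Image
except ImportError:
    # Stand-ins so the sketch runs outside prompt flow; not the real classes.
    def tool(func):
        return func

    class Image(bytes):
        def __new__(cls, value: bytes, mime_type: str = "image/*"):
            obj = super().__new__(cls, value)
            obj.mime_type = mime_type
            return obj


@tool
def compose_rich_answer(answer: str, image: Image) -> list:
    """Build a chat output list of strings (text) and Image objects (images)."""
    # List order is the display order: intro text, then the image, then the answer.
    return [
        "Here is the image you asked about:",
        image,
        answer,
    ]
```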

Create a batch run using image data

A batch run allows you to test the flow with an extensive dataset. There are three methods to represent image data: through an image file, a public image URL, or a Base64 string.

- Image file: To test with image files in a batch run, prepare a data folder. This folder should contain a batch run entry file in `jsonl` format in its root directory, along with all image files stored in the same folder or in subfolders. In the entry file, use the format `{"data:<mime type>;path": "<image relative path>"}` to reference each image file. For example, `{"data:image/png;path": "./images/1.png"}`.

- Public image URL: You can also reference an image URL in the entry file using the format `{"data:<mime type>;url": "<image URL>"}`. For example, `{"data:image/png;url": "https://www.example.com/images/1.png"}`.

- Base64 string: You can reference a Base64 string in the entry file using the format `{"data:<mime type>;base64": "<base64 string>"}`. For example, `{"data:image/png;base64": "iVBORw0KGgoAAAANSUhEUgAAAGQAAABLAQMAAAC81rD0AAAABGdBTUEAALGPC/xhBQAAACBjSFJNAAB6JgAAgIQAAPoAAACA6AAAdTAAAOpgAAA6mAAAF3CculE8AAAABlBMVEUAAP7////DYP5JAAAAAWJLR0QB/wIt3gAAAAlwSFlzAAALEgAACxIB0t1+/AAAAAd0SU1FB+QIGBcKN7/nP/UAAAASSURBVDjLY2AYBaNgFIwCdAAABBoAAaNglfsAAAAZdEVYdGNvbW1lbnQAQ3JlYXRlZCB3aXRoIEdJTVDnr0DLAAAAJXRFWHRkYXRlOmNyZWF0ZQAyMDIwLTA4LTI0VDIzOjEwOjU1KzAzOjAwkHdeuQAAACV0RVh0ZGF0ZTptb2RpZnkAMjAyMC0wOC0yNFQyMzoxMDo1NSswMzowMOEq5gUAAAAASUVORK5CYII="}`.

In summary, prompt flow uses a dedicated dictionary format to represent an image: `{"data:<mime type>;<representation>": "<value>"}`. Here, `<mime type>` refers to a standard HTML MIME image type, and `<representation>` refers to one of the supported image representations: `path`, `url`, or `base64`.
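As a sketch of preparing a batch run dataset, the script below builds image references in this dictionary format and writes them to an entry file, one JSON object per line. The entry file name `data.jsonl`, the column names `question` and `input_image`, and the helper names are hypothetical; `path` values must be relative to the data folder that holds the entry file.

```python
import base64
import json
from pathlib import Path

SUPPORTED = {"path", "url", "base64"}


def image_ref(mime_type: str, representation: str, value: str) -> dict:
    """Build a {"data:<mime type>;<representation>": "<value>"} image reference."""
    if representation not in SUPPORTED:
        raise ValueError(f"unsupported representation: {representation}")
    return {f"data:{mime_type};{representation}": value}


def base64_ref(image_bytes: bytes, mime_type: str) -> dict:
    """Inline raw image bytes as a base64 image reference."""
    return image_ref(mime_type, "base64", base64.b64encode(image_bytes).decode("ascii"))


def write_entry_file(folder: Path, rows: list[dict]) -> Path:
    """Write one JSON object per line to <folder>/data.jsonl and return its path."""
    folder.mkdir(parents=True, exist_ok=True)
    entry_file = folder / "data.jsonl"
    with entry_file.open("w", encoding="utf-8") as f:
        for row in rows:
            f.write(json.dumps(row) + "\n")
    return entry_file


if __name__ == "__main__":
    import tempfile

    rows = [
        {"question": "What is in this image?",
         "input_image": image_ref("image/png", "path", "./images/1.png")},
        {"question": "Describe the picture.",
         "input_image": image_ref("image/png", "url", "https://www.example.com/images/1.png")},
    ]
    entry = write_entry_file(Path(tempfile.mkdtemp()), rows)
    print(entry.read_text(encoding="utf-8"))
```

When you create the batch run, input mapping would then connect the hypothetical `input_image` column to the flow's Image input.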

Create a batch run

On the flow authoring page, select the Evaluate button to start a batch run. In Batch run settings, select a dataset, which can be either a folder (containing the entry file and image files) or a file (containing only the entry file). You can preview the entry file and use input mapping to align the columns in the entry file with the flow inputs.

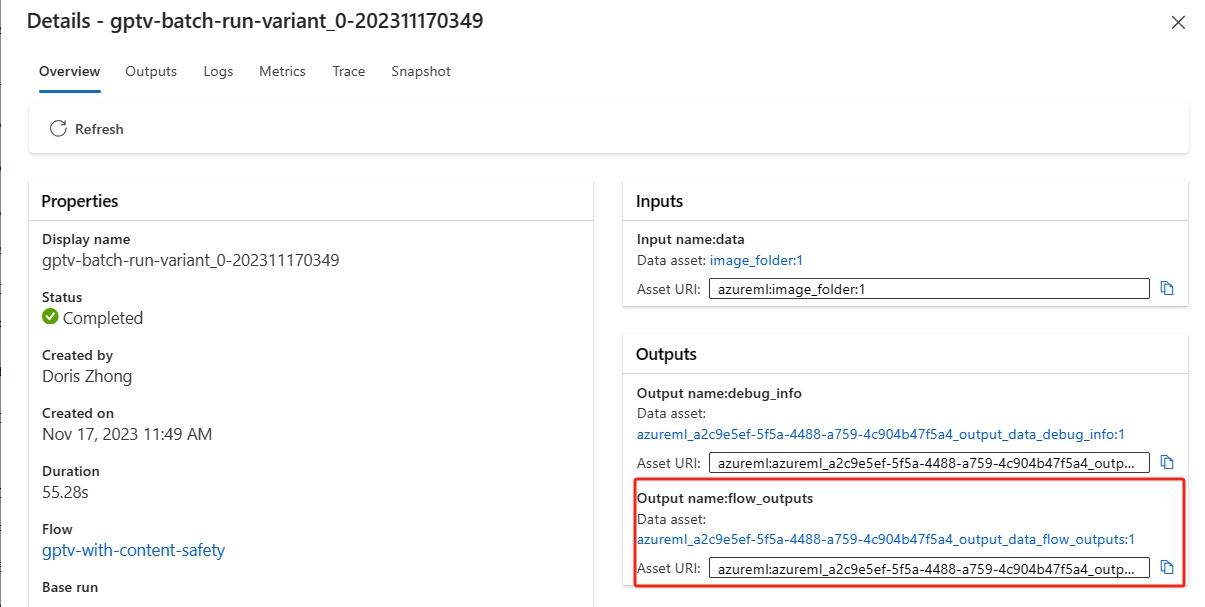

View batch run results

You can check the batch run outputs on the run detail page. Select an image object in the output table to preview the image.

If the batch run outputs contain images, you can check the `flow_outputs` dataset, which contains the output `jsonl` file and the output images.

Consume online endpoint with image data

You can deploy a flow to an online endpoint for real-time inference.

Currently, the Test tab on the deployment detail page doesn't support image inputs or outputs. Support for them is planned.

For now, you can test the endpoint by sending a request that includes image inputs.

To consume the online endpoint with image input, represent the image using the format `{"data:<mime type>;<representation>": "<value>"}`. In this case, `<representation>` can be either `url` or `base64`.
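For example, a client could send a base64-encoded image as sketched below using only the Python standard library. It assumes the flow has a text input named `question` and an image input named `input_image` (both hypothetical) and that the endpoint uses key-based authentication passed as a bearer token; substitute the scoring URI and key from your own endpoint.

```python
import base64
import json
import urllib.request


def build_request(scoring_uri: str, api_key: str, question: str,
                  image_bytes: bytes, mime_type: str = "image/png") -> urllib.request.Request:
    """Build a POST request whose JSON body carries a base64 image input."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    body = {
        "question": question,  # hypothetical text input name
        "input_image": {f"data:{mime_type};base64": encoded},  # hypothetical image input name
    }
    return urllib.request.Request(
        scoring_uri,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def call_endpoint(request: urllib.request.Request) -> dict:
    """Send the request and parse the JSON response body."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

To reference a public image instead, replace the base64 dictionary with `{"data:image/png;url": "<image URL>"}`. A call then looks like `call_endpoint(build_request(uri, key, "What is in this image?", Path("cat.png").read_bytes()))`, where `uri`, `key`, and `cat.png` are placeholders.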

If the flow generates an image output, it's returned in base64 format, for example, `{"data:<mime type>;base64": "<base64 string>"}`.
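On the client side, such outputs can be decoded back to bytes. The sketch below scans a flat response dictionary for values shaped like `{"data:<mime type>;base64": "<base64 string>"}`. The function name and the `answer`/`output_image` output names are hypothetical, and the sketch doesn't descend into list outputs such as a rich chat output.

```python
import base64
import re

# Matches keys of the form "data:<mime type>;base64" and captures the MIME type.
_BASE64_KEY = re.compile(r"^data:(?P<mime>[^;]+);base64$")


def decode_image_outputs(outputs: dict) -> dict:
    """Map each base64 image output name to a (mime type, raw bytes) tuple."""
    images = {}
    for name, value in outputs.items():
        # An image output is a single-key dict in the data:<mime>;base64 format.
        if isinstance(value, dict) and len(value) == 1:
            key, payload = next(iter(value.items()))
            match = _BASE64_KEY.match(key)
            if match:
                images[name] = (match.group("mime"), base64.b64decode(payload))
    return images
```

The decoded bytes can then be written to a file whose extension matches the returned MIME type.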