Note

Access to this page requires authorization. You can try signing in or changing directories.

Access to this page requires authorization. You can try changing directories.

Important

Support for Machine Learning Studio (classic) will end on 31 August 2024. We recommend you transition to Azure Machine Learning by that date.

Beginning 1 December 2021, you will not be able to create new Machine Learning Studio (classic) resources. Through 31 August 2024, you can continue to use the existing Machine Learning Studio (classic) resources.

- See information on moving machine learning projects from ML Studio (classic) to Azure Machine Learning.

- Learn more about Azure Machine Learning.

ML Studio (classic) documentation is being retired and may not be updated in the future.

Loads data from external sources on the web; from various forms of cloud-based storage in Azure such as tables, blobs, and SQL databases; and from on-premises SQL Server databases

Category: Data Input and Output

Note

Applies to: Machine Learning Studio (classic) only

Similar drag-and-drop modules are available in Azure Machine Learning designer.

Module overview

This article describes how to use the Import Data module in Machine Learning Studio (classic), to load data into a machine learning experiment from existing cloud data services.

The module now features a wizard to help you choose a storage option and select from among existing subscriptions and accounts to quickly configure all options. Need to edit an existing data connection? No problem; the wizard loads all previous configuration details so that you don't have to start again from scratch.

After you define the data you want and connect to the source, Import Data infers the data type of each column based on the values it contains, and loads the data into your Machine Learning Studio (classic) workspace. The output of Import Data is a dataset that can be used with any experiment.

Important

Currently, there are limitations on the types of storage accounts that are supported. For more information, see Technical Notes.

If your source data changes, you can refresh the dataset and add new data by re-running Import Data. However, if you don't want to re-read from the source each time you run the experiment, select the Use cached results option to TRUE. When this option is selected, the module checks whether the experiment has run previously using the same source and same input options. If a previous run is found, the data in the cache is used, instead of re-loading the data from the source.

Note

This module was previously named Reader. If you previously used the Reader module in an experiment, it is renamed to Import Data when you refresh the experiment.

Data sources

The Import Data module supports the following data sources. Click the links for detailed instructions and examples of using each data source.

If you are not sure how or where you should store your data, see this guide to common data scenarios in the data science process: Scenarios for advanced analytics in Machine Learning.

| Data source | Use with |

|---|---|

| Web URL via HTTP | Get data that is hosted on a web URL that uses HTTP and that has been provided in the CSV, TSV, ARFF, or SvmLight formats |

| Hive Query | Get data from distributed storage in Hadoop. You specify the data you want by using the HiveQL language |

| Azure SQL Database | Get data from Azure SQL Database or from Azure SQL Data Warehouse |

| Azure Table | Get data that is stored in the Azure table service |

| Import from Azure Blob Storage | Get data that is stored in the Azure blob service |

| Data Feed Providers | Get data exposed as a feed in OData format |

| Import from On-Premises SQL Server Database | Get data from an on-premises SQL Server database using the Microsoft Data Management Gateway |

| Azure Cosmos DB | Get data stored in JSON format in Azure Cosmos DB. |

Tip

Need to import data in the JSON format? Both R and Python support REST APIs, so use the Execute Python Script or Execute R Script modules to parse your data and save it as an Azure ML dataset.

Or, use the SQL DB API for CosmosDB, which supports multiple JSON stores, including MongoDB, to read your data using the Import from Azure Cosmos DB option. For more information, see Import from Azure Cosmos DB.

How to use Import Data

Add the Import Data module to your experiment. You can find this module in the Data Input and Output category in Studio (classic).

Click Launch Data Import Wizard to configure the data source using a wizard.

The wizard gets the account name and credentials, and help you configure other options. If you are editing an existing configuration, it loads the current values first.

If you do not want to use the wizard, click Data source, and choose the type of cloud-based storage you are reading from.

Additional settings depend on the type of storage you choose, and whether the storage is secured or not. You might need to provide the account name, file type, or credentials. Some sources do not require authentication; for others, you might need to know the account name, a key, or container name.

For details, see the list of Data sources.

Select the Use cached results option if you want to cache the dataset for re-use on successive runs.

Assuming there have been no other changes to module parameters, the experiment loads the data only the first time the module is run, and thereafter uses a cached version of the dataset.

Deselect this option if you need to reload the data each time you run the experiment.

Run the experiment.

When Import Data loads the data into Studio (classic), it infers the data type of each column based on the values it contains, either numerical or categorical.

If a header is present, the header is used to name the columns of the output dataset.

If there are no existing column headers in the data, new column names are generated using the format col1, col2,… ,coln.

Results

When import completes, click the output dataset and select Visualize to see if the data was imported successfully.

If you want to save the data for re-use, rather than importing a new set of data each time the experiment is run, right-click the output and select Save as Dataset. Choose a name for the dataset. The saved dataset preserves the data at the time of saving, and data is not updated when the experiment is re-run, even if the dataset in the experiment changes. This can be handy for taking snapshots of data.

After importing the data, it might need some additional preparations for modeling and analysis:

Generate statistical summaries of the data, using Summarize Data or Compute Elementary Statistics.

Use Edit Metadata to change column names, to handle a column as a different data type, or to indicate that some columns are labels or features.

Use Select Columns in Dataset to select a subset of columns to transform or use in modeling. The transformed or removed columns can easily be rejoined to the original dataset by using the Add Columns module or the Join Data module.

Use Partition and Sample to divide the dataset, perform sampling, or get the top n rows.

Use Apply SQL Transformation to aggregate data, filter, or transform using SQL statements.

Use these modules to clean up text columns and generate new text features:

- Preprocess Text

- Extract N-Gram Features from Text

- Named Entity Recognition

- Execute Python Script, to implement custom NLP based on nltk.

Technical notes

This section provides a list of known issues with the Import Data module, as well as some general troubleshooting information not specific to a source type.

Supported account types

Frequently Azure releases new services or new storage types; however, there is typically a delay while support for new account types is implemented in Machine Learning Studio (classic).

Currently, Machine Learning supports all general purpose storage accounts, except for those using zone-redundant storage (ZRS).

Locally redundant storage (LRS) and geo-redundant storage options are supported.

Block blobs are supported but Append blobs are not.

Common questions and issues

This section describes some known issues, common questions, and workarounds.

Headers must be single rows

If you are importing from CSV files, be aware that Machine Learning allows a single header row. You cannot insert multi-line headers.

Custom separators supported on import but not export

The Import Data module supports importing data that uses alternative column separators, such as the semicolon (;), which is often used in Europe. When you import data from CSV files in external storage, select the CSV with encodings option, and pick a supported encoding.

However, you cannot generate alternative separators when you prepare data for export using the Convert to CSV module.

Poor column separation on string data containing commas

Just about every character that can be specified as a column separator (tabs, spaces, commas, etc.) can also be found randomly in text fields. Importing text from CSV always requires caution to avoid separating text across unnecessary new columns. It is a common problem in text processing that you have probably encountered and handled in different ways.

Problems can also occur when you try to export a column of string data that contains commas. Machine Learning does not support any special handling or special translation of such data, such as enclosing strings in quotation marks. Also, you cannot use escape characters before a comma to ensure that commas are handled as a literal character. AS a consequence, new fields are created in the output file for each comma that is encountered in the string field.

To avoid problems on export, use the Preprocess Text module to remove punctuation characters from string fields.

You can also use custom R script or Python script to process complex text and ensure that data can be imported or exported correctly.

UTF-8 encoding required

Machine Learning requires UTF-8 encoding. If the data you are importing uses a different encoding, or was exported from a data source that uses a different default encoding, various problems might appear in the text.

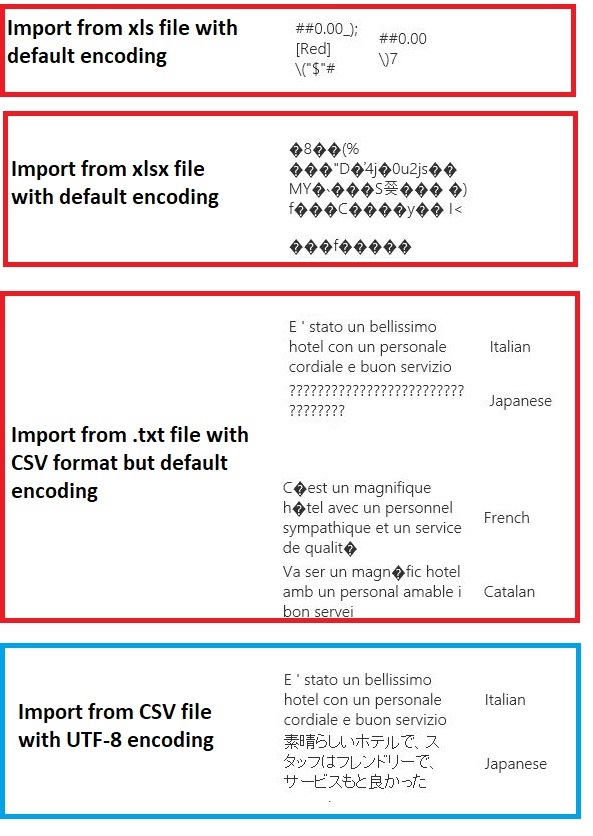

For example, the following image contains the same multilanguage dataset exported from Excel and then imported into Machine Learning under four different combinations of file type and encoding.

The third example represents data that was lost during while saving from Excel in CSV format, because the correct encoding was not specified at that time. Therefore, if you run into problems, be sure to check not just the file you are importing from, but whether the file was correctly exported from the source.

Dataset does not have column names

If the dataset you are importing does not have column names, be sure to specify one of the "no header" options. When you do so, Import Data adds default column names using the format Col1, Col2, etc. Later, use Edit Metadata to fix the column names.

If you are exporting a dataset to a CSV file, use Edit Metadata to add column names before converting or exporting it.

Workarounds for unsupported data sources

If you need to get data from a source that is not in the list, there are various workarounds you can try:

To upload data from a file on your computer, click New in Studio (classic), select Dataset, and then select From Local File. Locate the file and specify the format (TSV, CSV, etc.). For more information, see Import training data into Studio (classic).

Use R or Python. You can use the Execute R Script module with an appropriate R package to get data from other cloud databases.

The Execute Python Script module also lets you read and convert data from a variety of sources. See these examples from Microsoft data scientists in the Cortana Intelligence Gallery:

Get data from AWS clusters. You can run a query against a generic Hive cluster with WebHCat or HCatalog endpoint enabled. Or publish as a page and read from the Web URL.

Get data from MongoDB. The data migration utility for Azure Cosmos DB supports a wide variety of sources and formats. For more information and examples, see Azure Cosmos DB: Data migration tool

For more ideas and workarounds, see the Machine Learning forum or Azure AI Gallery.

Module parameters

Each data source must be configured using different options. This table lists only the options that are common to all data sources.

| Name | Range | Type | Default | Description |

|---|---|---|---|---|

| Data source | List | DataSource Or Sink | Blob service in Azure Storage | Data source can be HTTP, anonymous HTTPS, a file in the Blob service or Table service, a SQL database in Azure, an Azure SQL Data Warehouse, a Hive table, or an OData endpoint. |

| Use cached results | TRUE/FALSE | Boolean | FALSE | If TRUE, the module will check whether the experiment has run previously using the same source and same input options, and if a previous run is found, the data in the cache is used. If FALSE, or if changes are found, data will be reloaded from the source. |

Outputs

| Name | Type | Description |

|---|---|---|

| Results dataset | Data Table | Dataset with downloaded data |

Exceptions

| Exception | Description |

|---|---|

| Error 0027 | An exception occurs when two objects have to be the same size, but they are not. |

| Error 0003 | An exception occurs if one or more of inputs are null or empty. |

| Error 0029 | An exception occurs when an invalid URI is passed. |

| Error 0030 | an exception occurs in when it is not possible to download a file. |

| Error 0002 | An exception occurs if one or more parameters could not be parsed or converted from the specified type to the type required by the target method. |

| Error 0009 | An exception occurs if the Azure storage account name or the container name is specified incorrectly. |

| Error 0048 | An exception occurs when it is not possible to open a file. |

| Error 0015 | An exception occurs if the database connection has failed. |

| Error 0046 | An exception occurs when it is not possible to create a directory on specified path. |

| Error 0049 | An exception occurs when it is not possible to parse a file. |

For a list of errors specific to Studio (classic) modules, see Machine Learning Error codes.

For a list of API exceptions, see Machine Learning REST API Error Codes.

See also

Data Input and Output

Data Format Conversions

Export Data

A-Z Module List