
This tutorial series shows how features seamlessly integrate all phases of the machine learning lifecycle: prototyping, training, and operationalization.

You can use Azure Machine Learning managed feature store to discover, create, and operationalize features. The machine learning lifecycle includes a prototyping phase, where you experiment with various features. It also involves an operationalization phase, where models are deployed and inference steps look up feature data. Features serve as the connective tissue in the machine learning lifecycle. To learn more about basic concepts for managed feature store, see What is managed feature store? and Understanding top-level entities in managed feature store.

This tutorial describes how to create a feature set specification with custom transformations. It then uses that feature set to generate training data, enable materialization, and perform a backfill. Materialization computes the feature values for a feature window, and then stores those values in a materialization store. All feature queries can then use those values from the materialization store.

Without materialization, a feature set query applies the transformations to the source on the fly, to compute the features before it returns the values. This process works well for the prototyping phase. However, for training and inference operations in a production environment, we recommend that you materialize the features, for greater reliability and availability.
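To make the difference between on-the-fly computation and materialization concrete, here is a toy, in-memory sketch in plain Python. It is not the feature store SDK: `compute_features`, `materialize`, and `query` are hypothetical names, and a dictionary stands in for the materialization store. The managed feature store runs Spark jobs and writes to offline storage instead.

```python
from datetime import date

# Hypothetical raw source rows: (account_id, day, amount).
SOURCE = [
    ("A1", date(2023, 1, 1), 10.0),
    ("A1", date(2023, 1, 2), 30.0),
    ("A2", date(2023, 1, 2), 5.0),
]


def compute_features(account_id, day):
    """On-the-fly 'transformation': total transaction amount for one account on one day."""
    return sum(amount for acct, d, amount in SOURCE if acct == account_id and d == day)


def materialize(days):
    """Precompute feature values for every account over a feature window (a list of days)."""
    accounts = {acct for acct, _, _ in SOURCE}
    return {(acct, day): compute_features(acct, day) for acct in accounts for day in days}


def query(account_id, day, store=None):
    """Serve from the store when the value was materialized, else compute on the fly."""
    if store is not None and (account_id, day) in store:
        return store[(account_id, day)]
    return compute_features(account_id, day)


store = materialize([date(2023, 1, 1), date(2023, 1, 2)])
print(query("A1", date(2023, 1, 2)))         # computed on the fly
print(query("A1", date(2023, 1, 2), store))  # read from the materialization store
```

Both calls return the same value; the difference is that the second one only reads a precomputed result, which is why materialized features are more predictable for training and inference.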

This tutorial is the first part of the managed feature store tutorial series. Here, you learn how to:

- Create a new, minimal feature store resource.

- Develop and locally test a feature set with feature transformation capability.

- Register a feature store entity with the feature store.

- Register the feature set that you developed with the feature store.

- Generate a sample training DataFrame by using the features that you created.

- Enable offline materialization on the feature sets, and backfill the feature data.

This tutorial series has two tracks:

- The SDK-only track uses only Python SDKs. Choose this track for pure, Python-based development and deployment.

- The SDK and CLI track uses the Python SDK for feature set development and testing only, and it uses the CLI for CRUD (create, read, update, and delete) operations. This track is useful in continuous integration and continuous delivery (CI/CD) or GitOps scenarios, where CLI/YAML is preferred.

Prerequisites

Before you proceed with this tutorial, be sure to cover these prerequisites:

An Azure Machine Learning workspace. For more information about workspace creation, visit Quickstart: Create workspace resources.

On your user account, you need the Owner role for the resource group where the feature store is created.

If you choose to use a new resource group for this tutorial, you can easily delete all the resources by deleting the resource group.

Prepare the notebook environment

This tutorial uses an Azure Machine Learning Spark notebook for development.

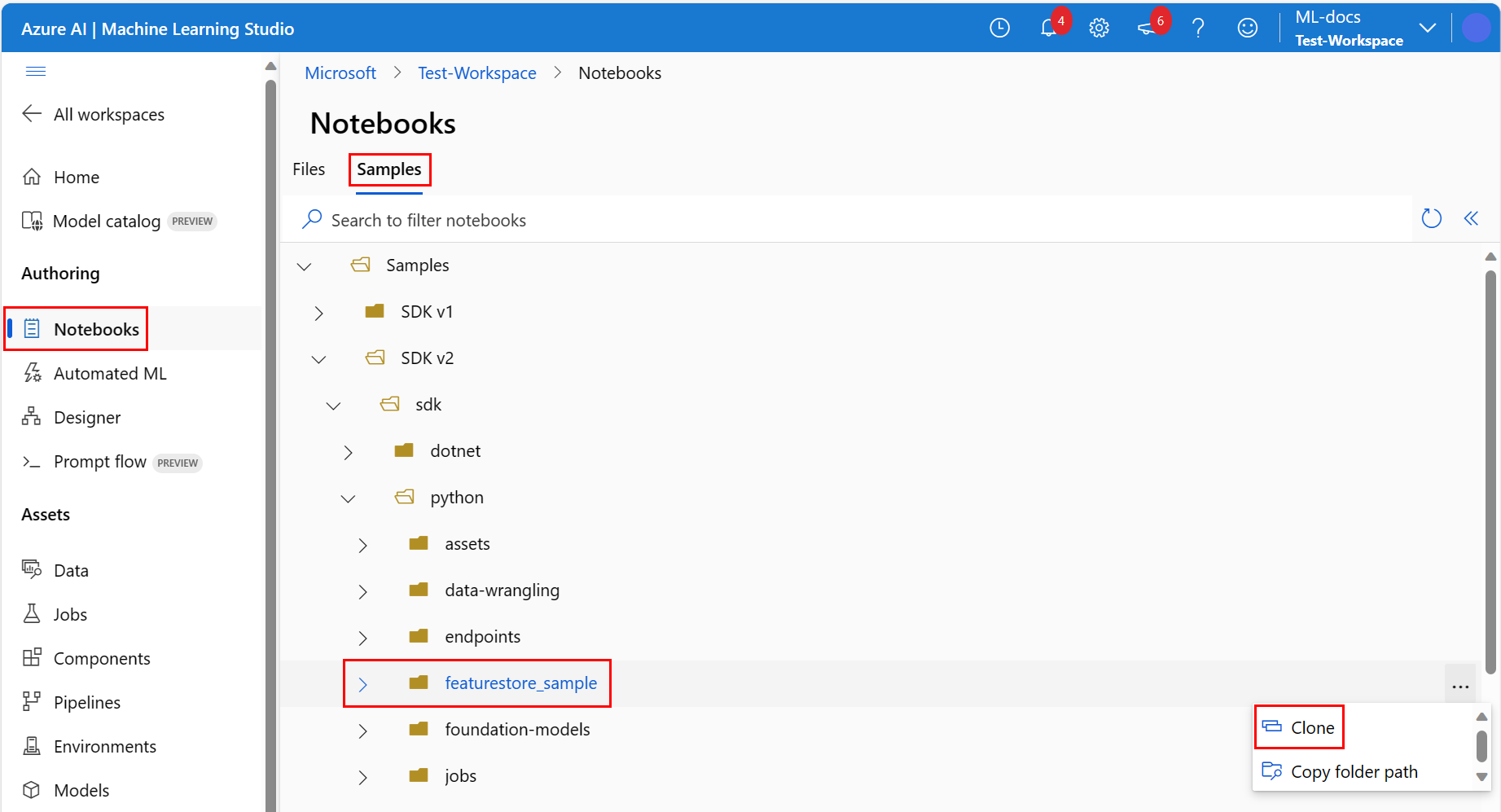

In the Azure Machine Learning studio environment, select Notebooks on the left pane, and then select the Samples tab.

Browse to the featurestore_sample directory (select Samples > SDK v2 > sdk > python > featurestore_sample), and then select Clone.

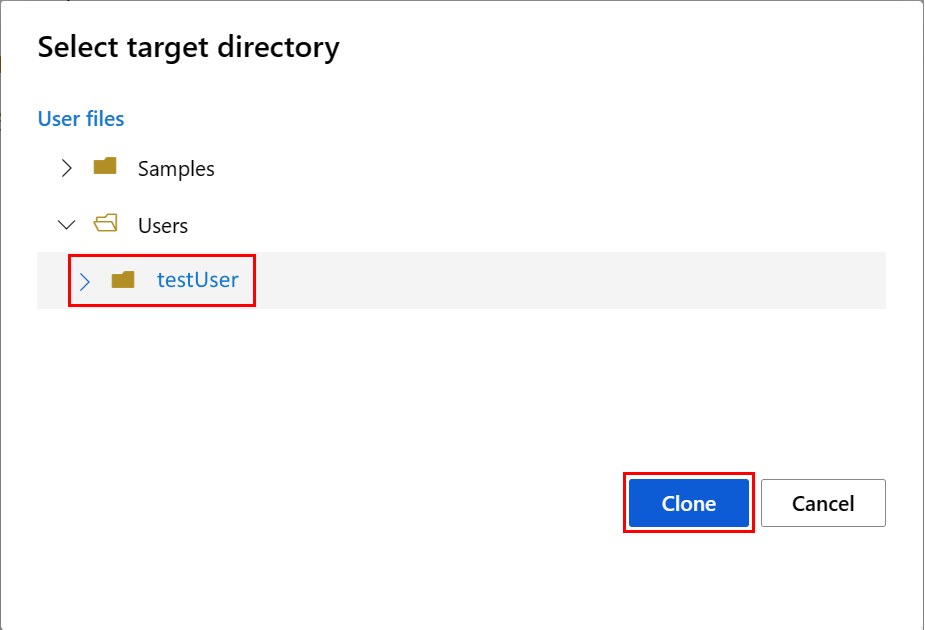

The Select target directory panel opens. Select the Users directory, then select your user name, and finally select Clone.

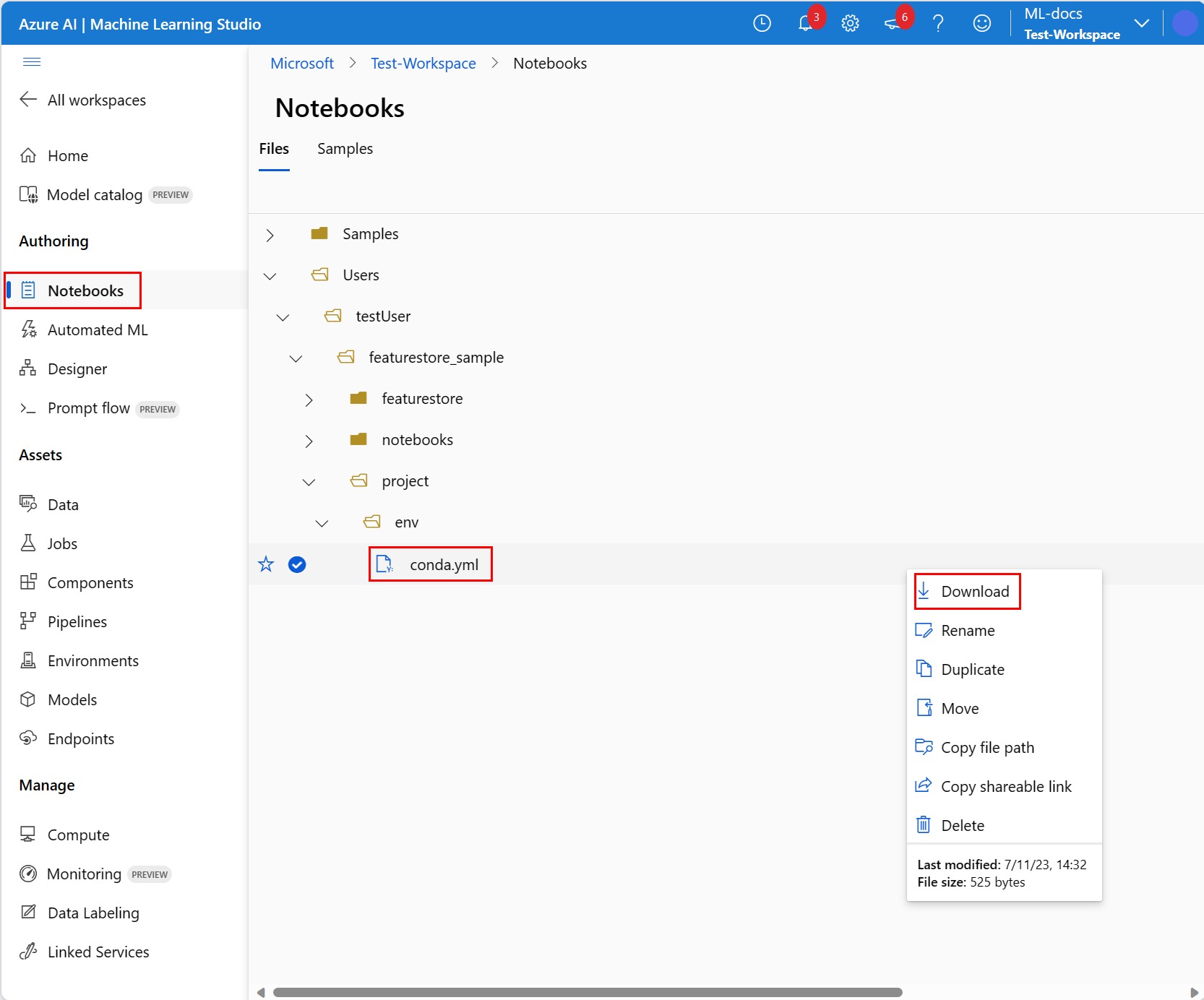

To configure the notebook environment, you must upload the conda.yml file:

- Select Notebooks on the left pane, and then select the Files tab.

- Browse to the env directory (select Users > your_user_name > featurestore_sample > project > env), and then select the conda.yml file.

- Select Download.

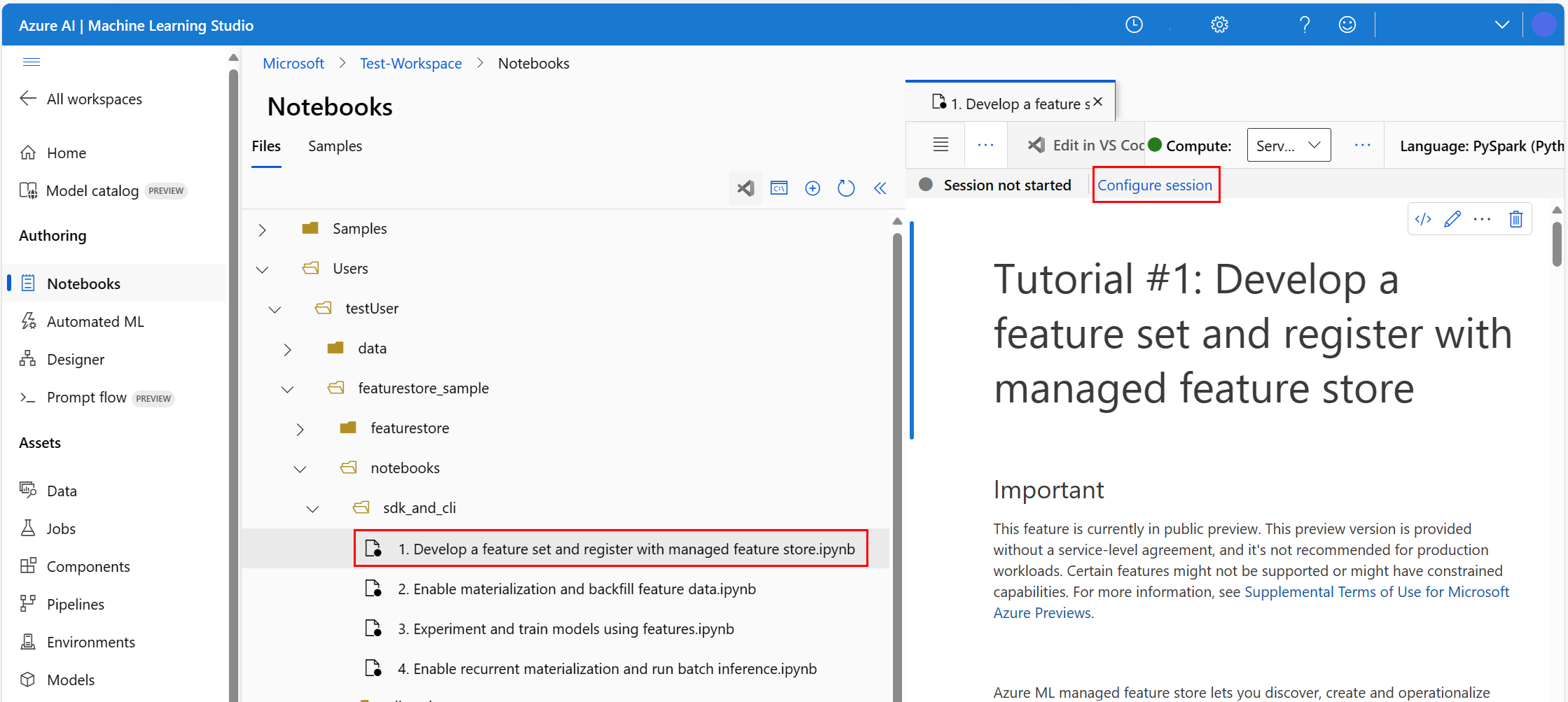

- Select Serverless Spark Compute in the top navigation Compute dropdown. This operation might take one to two minutes. Wait for the status bar at the top to display Configure session.

- Select Configure session in the top status bar.

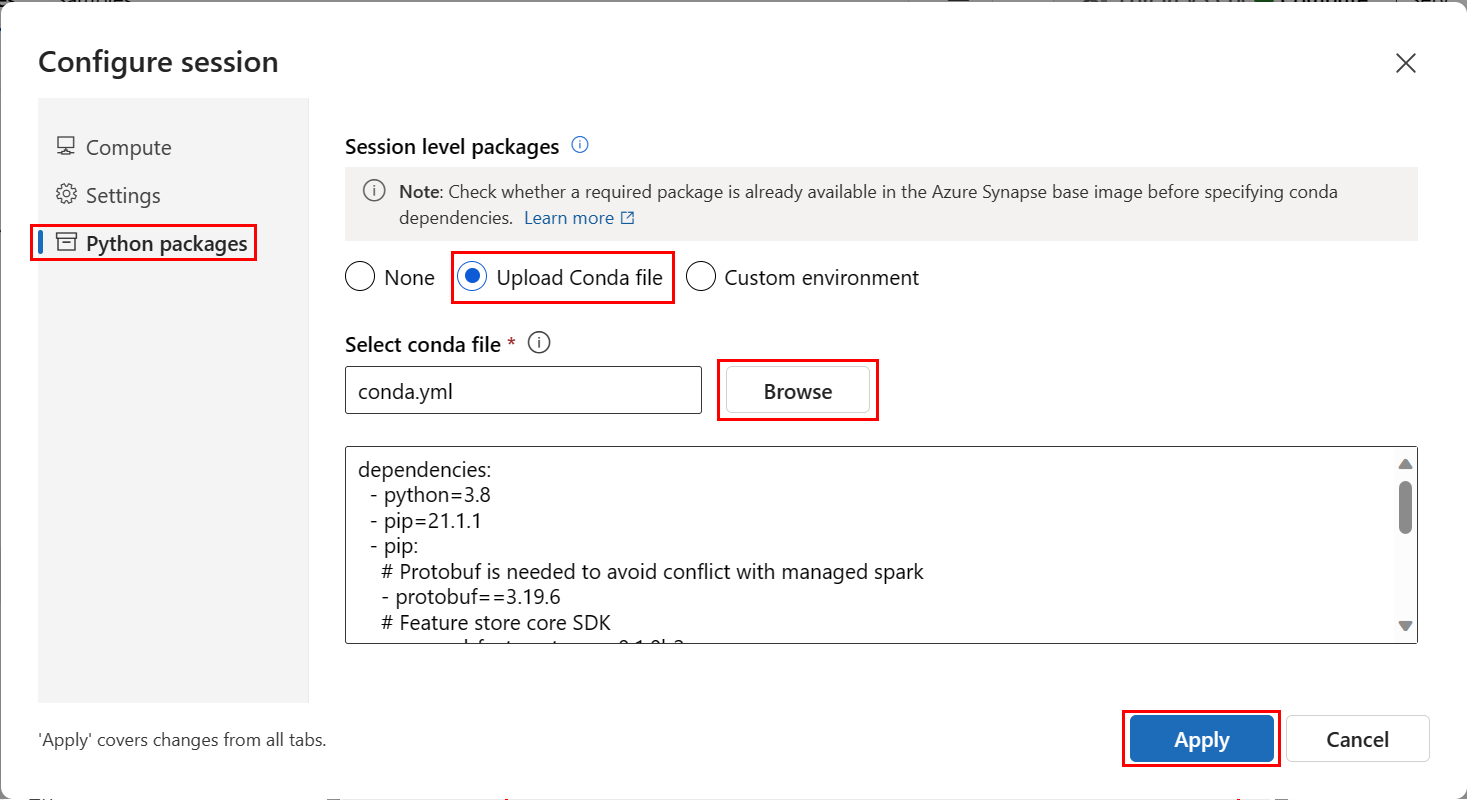

- Select Python packages.

- Select Upload conda files.

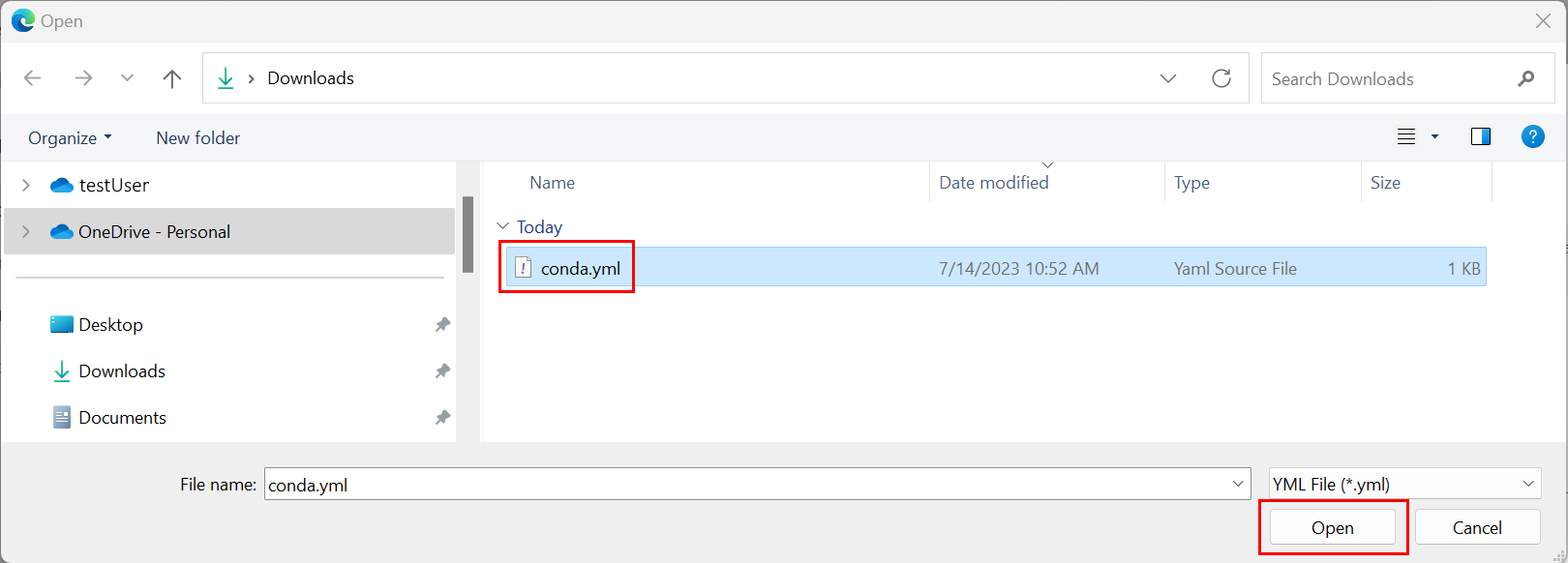

- Select the conda.yml file that you downloaded to your local device.

- (Optional) Increase the session time-out (idle time in minutes) to reduce the serverless Spark cluster startup time.

In the Azure Machine Learning environment, open the notebook, and then select Configure session.

On the Configure session panel, select Python packages.

Upload the Conda file:

- On the Python packages tab, select Upload Conda file.

- Browse to the directory that hosts the Conda file.

- Select conda.yml, and then select Open.

Select Apply.

Start the Spark session

```python
# Run this cell to start the Spark session (any code block starts the session). This can take around 10 minutes.
print("start spark session")
```

Set up the root directory for the samples

```python
import os

# Update <your_user_alias> below (or use any custom directory that you uploaded the samples to).
# You can find the name from the directory structure in the left navigation panel.
root_dir = "./Users/<your_user_alias>/featurestore_sample"

if os.path.isdir(root_dir):
    print("The folder exists.")
else:
    print("The folder does not exist. Please create or fix the path")
```

Set up the CLI

Not applicable.

Note

You use a feature store to reuse features across projects. You use a project workspace (an Azure Machine Learning workspace) to train inference models, by taking advantage of features from feature stores. Many project workspaces can share and reuse the same feature store.

This tutorial uses two SDKs:

Feature store CRUD SDK

You use the same MLClient (package name azure-ai-ml) SDK that you use with the Azure Machine Learning workspace. A feature store is implemented as a type of workspace. As a result, this SDK is used for CRUD operations for feature stores, feature sets, and feature store entities.

Feature store core SDK

This SDK (azureml-featurestore) is for feature set development and consumption. Later steps in this tutorial describe these operations:

- Develop a feature set specification.

- Retrieve feature data.

- List or get a registered feature set.

- Generate and resolve feature retrieval specifications.

- Generate training and inference data by using point-in-time joins.

This tutorial doesn't require explicit installation of those SDKs, because the earlier conda.yml instructions cover this step.

Create a minimal feature store

Set feature store parameters, including name, location, and other values.

```python
# We use the subscription, resource group, and region of this active project workspace.
# You can optionally replace them to create the resources in a different subscription/resource group, or use existing resources.
import os

featurestore_name = "<FEATURESTORE_NAME>"
featurestore_location = "eastus"
featurestore_subscription_id = os.environ["AZUREML_ARM_SUBSCRIPTION"]
featurestore_resource_group_name = os.environ["AZUREML_ARM_RESOURCEGROUP"]
```

Create the feature store.

```python
from azure.ai.ml import MLClient
from azure.ai.ml.entities import (
    FeatureStore,
    FeatureStoreEntity,
    FeatureSet,
)
from azure.ai.ml.identity import AzureMLOnBehalfOfCredential

ml_client = MLClient(
    AzureMLOnBehalfOfCredential(),
    subscription_id=featurestore_subscription_id,
    resource_group_name=featurestore_resource_group_name,
)

fs = FeatureStore(name=featurestore_name, location=featurestore_location)
# Wait for feature store creation.
fs_poller = ml_client.feature_stores.begin_create(fs)
print(fs_poller.result())
```

Initialize a feature store core SDK client for Azure Machine Learning.

As explained earlier in this tutorial, the feature store core SDK client is used to develop and consume features.

```python
# Feature store client
from azureml.featurestore import FeatureStoreClient
from azure.ai.ml.identity import AzureMLOnBehalfOfCredential

featurestore = FeatureStoreClient(
    credential=AzureMLOnBehalfOfCredential(),
    subscription_id=featurestore_subscription_id,
    resource_group_name=featurestore_resource_group_name,
    name=featurestore_name,
)
```

Grant the AzureML Data Scientist role on the feature store to your user identity, so that it can create resources in the feature store workspace. Obtain your Microsoft Entra object ID value from the Azure portal, as described in Find the user object ID. The permissions might need some time to propagate.

For more information about access control, visit the Manage access control for managed feature store resource.

```python
your_aad_objectid = "<USER_AAD_OBJECTID>"

# Azure resource ID of the feature store, used as the role assignment scope.
# A feature store is a type of Azure Machine Learning workspace.
feature_store_arm_id = (
    f"/subscriptions/{featurestore_subscription_id}"
    f"/resourceGroups/{featurestore_resource_group_name}"
    f"/providers/Microsoft.MachineLearningServices/workspaces/{featurestore_name}"
)

!az role assignment create --role "AzureML Data Scientist" --assignee-object-id $your_aad_objectid --assignee-principal-type User --scope $feature_store_arm_id
```

Prototype and develop a feature set

In these steps, you build a feature set named transactions that has rolling window aggregate-based features:

Explore the transactions source data.

This notebook uses sample data hosted in a publicly accessible blob container. It can be read into Spark only through a wasbs driver. When you create feature sets by using your own source data, host them in an Azure Data Lake Storage Gen2 account, and use an abfss driver in the data path.

```python
# Read the sample transactions source data from the public blob container.
transactions_source_data_path = "wasbs://data@azuremlexampledata.blob.core.windows.net/feature-store-prp/datasources/transactions-source/*.parquet"
transactions_src_df = spark.read.parquet(transactions_source_data_path)

display(transactions_src_df.head(5))
# Note: display(transactions_src_df.head(5)) displays the timestamp column in a different format.
# You can call transactions_src_df.show() to see correctly formatted values.
```

Locally develop the feature set.

A feature set specification is a self-contained definition of a feature set that you can locally develop and test. Here, you create these rolling window aggregate features:

- transactions three-day count

- transactions amount three-day avg

- transactions amount three-day sum

- transactions seven-day count

- transactions amount seven-day avg

- transactions amount seven-day sum

Review the feature transformation code file: featurestore/featuresets/transactions/transformation_code/transaction_transform.py. Note the rolling aggregation defined for the features. This is a Spark transformer.
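The real transformer in transaction_transform.py runs on Spark and isn't reproduced here. As a minimal plain-Python sketch of what a trailing-window aggregate computes, assuming each transaction aggregates the same account's transactions in the window (timestamp - N days, timestamp], consider the following. The function name and the exact window boundaries are assumptions; the output column names follow the feature names used later in this tutorial.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def rolling_aggregates(transactions, days):
    """Per-account trailing-window count, sum, and average of transaction amounts.

    transactions: iterable of (account_id, timestamp, amount) tuples.
    For each transaction, aggregates the same account's transactions whose
    timestamp falls in (timestamp - days, timestamp]. These window boundaries
    are an assumption for illustration.
    """
    by_account = defaultdict(list)
    for account_id, ts, amount in transactions:
        by_account[account_id].append((ts, amount))

    window = timedelta(days=days)
    results = []
    for account_id, rows in by_account.items():
        rows.sort()
        for ts, _ in rows:
            amounts = [a for t, a in rows if ts - window < t <= ts]
            total = sum(amounts)
            results.append({
                "accountID": account_id,
                "timestamp": ts,
                f"transaction_{days}d_count": len(amounts),
                f"transaction_amount_{days}d_sum": total,
                f"transaction_amount_{days}d_avg": total / len(amounts),
            })
    return results


txns = [
    ("A1", datetime(2023, 1, 1), 10.0),
    ("A1", datetime(2023, 1, 3), 20.0),
    ("A1", datetime(2023, 1, 6), 30.0),
]
for row in rolling_aggregates(txns, days=3):
    print(row)
```

Because the aggregation reaches up to seven days into the past, the source read for any feature window has to start earlier than the window itself, which is what the source_lookback setting in the specification expresses.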

To learn more about the feature set and transformations, visit the What is managed feature store? resource.

```python
from azureml.featurestore import create_feature_set_spec
from azureml.featurestore.contracts import (
    DateTimeOffset,
    TransformationCode,
    Column,
    ColumnType,
    SourceType,
    TimestampColumn,
)
from azureml.featurestore.feature_source import ParquetFeatureSource

transactions_featureset_code_path = (
    root_dir + "/featurestore/featuresets/transactions/transformation_code"
)

transactions_featureset_spec = create_feature_set_spec(
    source=ParquetFeatureSource(
        path="wasbs://data@azuremlexampledata.blob.core.windows.net/feature-store-prp/datasources/transactions-source/*.parquet",
        timestamp_column=TimestampColumn(name="timestamp"),
        source_delay=DateTimeOffset(days=0, hours=0, minutes=20),
    ),
    feature_transformation=TransformationCode(
        path=transactions_featureset_code_path,
        transformer_class="transaction_transform.TransactionFeatureTransformer",
    ),
    index_columns=[Column(name="accountID", type=ColumnType.string)],
    source_lookback=DateTimeOffset(days=7, hours=0, minutes=0),
    temporal_join_lookback=DateTimeOffset(days=1, hours=0, minutes=0),
    infer_schema=True,
)
```

Export as a feature set specification.

To register the feature set specification with the feature store, you must save that specification in a specific format.

Review the generated transactions feature set specification. Open this file from the file tree to see the specification: featurestore/featuresets/transactions/spec/FeaturesetSpec.yaml. The specification contains these elements:

- source: A reference to a storage resource. In this case, it's a parquet file in a blob storage resource.

- features: A list of features and their datatypes. If you provide transformation code, the code must return a DataFrame that maps to the features and datatypes.

- index_columns: The join keys required to access values from the feature set.
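The requirement that transformation output must map to the declared features and datatypes can be illustrated with a small, hypothetical schema check. This is not how the SDK validates a specification (with infer_schema=True it infers the features itself); the function name and the type-name strings are assumptions, and the sketch also assumes the output carries the index and timestamp columns that later joins rely on.

```python
def validate_transform_output(output_schema, index_columns, timestamp_column, features):
    """Compare a transformation's output schema against a spec's declarations.

    output_schema: dict of column name -> type name produced by the transformation.
    features: dict of declared feature name -> expected type name.
    Returns a list of human-readable problems (empty if everything lines up).
    """
    problems = []
    for column in [*index_columns, timestamp_column]:
        if column not in output_schema:
            problems.append(f"missing required column: {column}")
    for name, expected_type in features.items():
        actual_type = output_schema.get(name)
        if actual_type is None:
            problems.append(f"missing feature: {name}")
        elif actual_type != expected_type:
            problems.append(f"type mismatch for {name}: expected {expected_type}, got {actual_type}")
    return problems


schema = {
    "accountID": "string",
    "timestamp": "timestamp",
    "transaction_3d_count": "long",
    "transaction_amount_3d_avg": "double",
}
declared = {"transaction_3d_count": "long", "transaction_amount_3d_avg": "double"}
print(validate_transform_output(schema, ["accountID"], "timestamp", declared))  # []
```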

To learn more about the specification, visit the Understanding top-level entities in managed feature store and CLI (v2) feature set YAML schema resources.

Persisting the feature set specification offers another benefit: the feature set specification supports source control.

```python
import os

# Create a new folder to dump the feature set specification.
transactions_featureset_spec_folder = (
    root_dir + "/featurestore/featuresets/transactions/spec"
)

# Check if the folder exists, create one if it does not exist.
if not os.path.exists(transactions_featureset_spec_folder):
    os.makedirs(transactions_featureset_spec_folder)

transactions_featureset_spec.dump(transactions_featureset_spec_folder, overwrite=True)
```

Register a feature store entity

As a best practice, entities help enforce use of the same join key definition, across feature sets that use the same logical entities. Examples of entities include accounts and customers. Entities are typically created once and then reused across feature sets. To learn more, visit the Understanding top-level entities in managed feature store.
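The value of an entity is that every feature set tied to it uses one join key definition. A hypothetical plain-Python check of that rule might look like the following; the ENTITIES registry and check_join_keys are illustrative names, not part of the feature store SDK, and the service's own enforcement isn't shown here.

```python
# Hypothetical registry: entity name -> ordered join-key definition (column, type).
ENTITIES = {"account": (("accountID", "string"),)}


def check_join_keys(entity_name, index_columns):
    """Raise ValueError unless a feature set's index columns exactly match its entity's keys."""
    expected = ENTITIES[entity_name]
    actual = tuple(index_columns)
    if actual != expected:
        raise ValueError(
            f"index columns {actual} do not match entity '{entity_name}' keys {expected}"
        )


check_join_keys("account", [("accountID", "string")])  # passes silently
try:
    check_join_keys("account", [("account_id", "string")])
except ValueError as err:
    print(err)
```

Two feature sets that both pass this check for the account entity can be joined to the same observation data on accountID without any per-feature-set key mapping.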

Initialize the feature store CRUD client.

As explained earlier in this tutorial, MLClient is used to create, read, update, and delete a feature store asset. The notebook code cell sample shown here searches for the feature store that you created in an earlier step. Here, you can't reuse the same ml_client value that you used earlier in this tutorial, because that value is scoped at the resource group level. Proper scoping is a prerequisite for feature store creation. In this code sample, the client is scoped at the feature store level.

```python
# MLClient for feature store.
fs_client = MLClient(
    AzureMLOnBehalfOfCredential(),
    featurestore_subscription_id,
    featurestore_resource_group_name,
    featurestore_name,
)
```

Register the account entity with the feature store.

Create an account entity that has the join key accountID of type string.

```python
from azure.ai.ml.entities import DataColumn, DataColumnType

account_entity_config = FeatureStoreEntity(
    name="account",
    version="1",
    index_columns=[DataColumn(name="accountID", type=DataColumnType.STRING)],
    stage="Development",
    description="This entity represents user account index key accountID.",
    tags={"data_type": "nonPII"},
)

poller = fs_client.feature_store_entities.begin_create_or_update(account_entity_config)
print(poller.result())
```

Register the transaction feature set with the feature store

Use this code to register a feature set asset with the feature store. You can then reuse that asset and easily share it. Registration of a feature set asset offers managed capabilities, including versioning and materialization. Later steps in this tutorial series cover managed capabilities.

```python
from azure.ai.ml.entities import FeatureSetSpecification

transaction_fset_config = FeatureSet(
    name="transactions",
    version="1",
    description="7-day and 3-day rolling aggregation of transactions featureset",
    entities=["azureml:account:1"],
    stage="Development",
    specification=FeatureSetSpecification(path=transactions_featureset_spec_folder),
    tags={"data_type": "nonPII"},
)

poller = fs_client.feature_sets.begin_create_or_update(transaction_fset_config)
print(poller.result())
```

Explore the feature store UI

Feature store asset creation and updates can happen only through the SDK and CLI. You can use the UI to search or browse through the feature store:

- Open the Azure Machine Learning global landing page.

- Select Feature stores on the left pane.

- From the list of accessible feature stores, select the feature store that you created earlier in this tutorial.

Grant the Storage Blob Data Reader role access to your user account in the offline store

The Storage Blob Data Reader role must be assigned to your user account on the offline store. This ensures that the user account can read materialized feature data from the offline materialization store.

Obtain your Microsoft Entra object ID value from the Azure portal, as described in Find the user object ID.

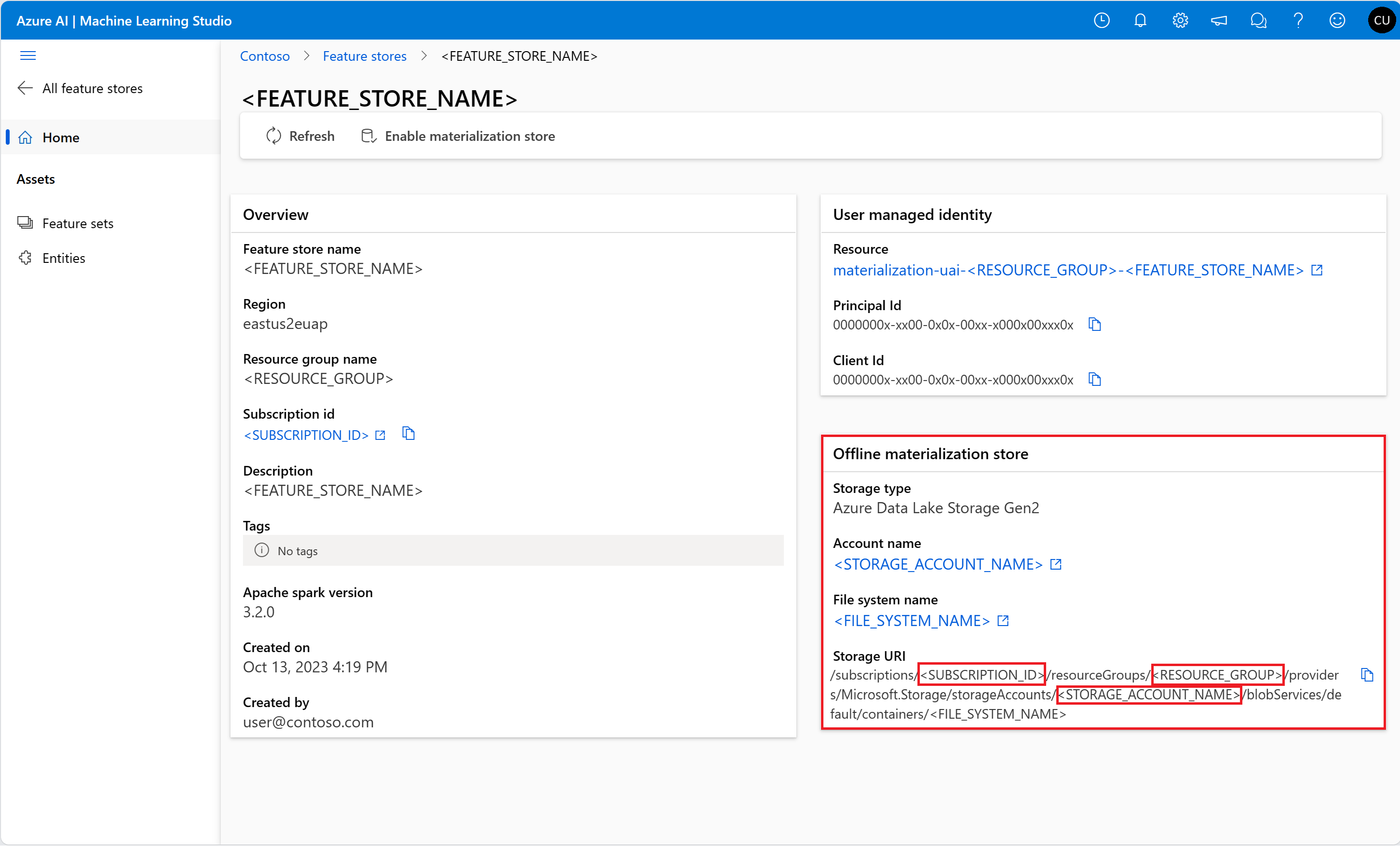

Obtain information about the offline materialization store from the Feature Store Overview page in the Feature Store UI. You can find the values for the storage account subscription ID, storage account resource group name, and storage account name for offline materialization store in the Offline materialization store card.

For more information about access control, visit the Manage access control for managed feature store resource.

Execute this code cell for role assignment. The permissions might need some time to propagate.

```python
# This utility function is created for ease of use in the docs tutorials. It uses standard Azure APIs.
# You can optionally inspect it: `featurestore/setup/setup_storage_uai.py`.
import sys

sys.path.insert(0, root_dir + "/featurestore/setup")
from setup_storage_uai import grant_user_aad_storage_data_reader_role

your_aad_objectid = "<USER_AAD_OBJECTID>"
storage_subscription_id = "<SUBSCRIPTION_ID>"
storage_resource_group_name = "<RESOURCE_GROUP>"
storage_account_name = "<STORAGE_ACCOUNT_NAME>"

grant_user_aad_storage_data_reader_role(
    AzureMLOnBehalfOfCredential(),
    your_aad_objectid,
    storage_subscription_id,
    storage_resource_group_name,
    storage_account_name,
)
```
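The three storage values you copy from the Offline materialization store card together identify one storage account. As a sketch, assuming the role is assigned at the storage account level (the helper's internals aren't shown in this tutorial, so its exact scope is an assumption), they combine into the standard Azure resource ID format for a storage account:

```python
def storage_account_scope(subscription_id, resource_group_name, storage_account_name):
    """Azure resource ID of a storage account, usable as a role assignment scope."""
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group_name}"
        f"/providers/Microsoft.Storage/storageAccounts/{storage_account_name}"
    )


scope = storage_account_scope("<SUBSCRIPTION_ID>", "<RESOURCE_GROUP>", "<STORAGE_ACCOUNT_NAME>")
print(scope)
```

With that scope, a roughly equivalent Azure CLI call would be `az role assignment create --role "Storage Blob Data Reader" --assignee-object-id <USER_AAD_OBJECTID> --assignee-principal-type User --scope <scope>`.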

Generate a training data DataFrame by using the registered feature set

Load observation data.

Observation data typically involves the core data used for training and inferencing. This data joins with the feature data to create the full training data resource.

Observation data is data captured during the event itself. Here, it has core transaction data, including transaction ID, account ID, and transaction amount values. Because you use it for training, it also has an appended target variable (is_fraud).

```python
observation_data_path = "wasbs://data@azuremlexampledata.blob.core.windows.net/feature-store-prp/observation_data/train/*.parquet"
observation_data_df = spark.read.parquet(observation_data_path)
obs_data_timestamp_column = "timestamp"

display(observation_data_df)
# Note: the timestamp column is displayed in a different format.
# Optionally, you can call observation_data_df.show() to see correctly formatted values.
```

Get the registered feature set, and list its features.

```python
# Look up the feature set by providing a name and a version.
transactions_featureset = featurestore.feature_sets.get("transactions", "1")
# List its features.
transactions_featureset.features
```

```python
# Print sample values.
display(transactions_featureset.to_spark_dataframe().head(5))
```

Select the features that become part of the training data. Then, use the feature store SDK to generate the training data itself.

```python
from azureml.featurestore import get_offline_features

# You can select features in a Pythonic way.
features = [
    transactions_featureset.get_feature("transaction_amount_7d_sum"),
    transactions_featureset.get_feature("transaction_amount_7d_avg"),
]

# You can also specify features in string form: featureset:version:feature.
more_features = [
    "transactions:1:transaction_3d_count",
    "transactions:1:transaction_amount_3d_avg",
]

more_features = featurestore.resolve_feature_uri(more_features)
features.extend(more_features)

# Generate the training DataFrame by using feature data and observation data.
training_df = get_offline_features(
    features=features,
    observation_data=observation_data_df,
    timestamp_column=obs_data_timestamp_column,
)

# Ignore the message that says the feature set is not materialized (materialization is optional).
# You enable materialization later in this tutorial.
display(training_df)
# Note: the timestamp column is displayed in a different format.
# Optionally, you can call training_df.show() to see correctly formatted values.
```

A point-in-time join appends the features to the training data.
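The following plain-Python sketch shows the idea behind a point-in-time join; it is not the SDK's implementation. For each observation, it takes the latest feature row for the same accountID whose timestamp is no later than the observation, so no future feature values leak into training data. It also assumes that temporal_join_lookback (one day in this tutorial's specification) caps how far back the join may look; the inclusive boundaries are an assumption.

```python
from datetime import datetime, timedelta


def point_in_time_join(observations, feature_rows, lookback, key="accountID"):
    """Attach to each observation the latest feature row for the same key whose
    timestamp is no later than the observation and no older than `lookback`.

    observations / feature_rows: lists of dicts with `key` and "timestamp" fields.
    Observations without a qualifying feature row get None for each feature.
    """
    feature_names = sorted({name for row in feature_rows for name in row} - {key, "timestamp"})
    joined = []
    for obs in observations:
        candidates = [
            row for row in feature_rows
            if row[key] == obs[key]
            and obs["timestamp"] - lookback <= row["timestamp"] <= obs["timestamp"]
        ]
        latest = max(candidates, key=lambda row: row["timestamp"], default=None)
        out = dict(obs)
        for name in feature_names:
            out[name] = latest.get(name) if latest is not None else None
        joined.append(out)
    return joined


feature_rows = [
    {"accountID": "A1", "timestamp": datetime(2023, 1, 1), "transaction_amount_7d_sum": 10.0},
    {"accountID": "A1", "timestamp": datetime(2023, 1, 3), "transaction_amount_7d_sum": 30.0},
]
observations = [
    {"accountID": "A1", "timestamp": datetime(2023, 1, 1, 18), "is_fraud": 0},
    {"accountID": "A1", "timestamp": datetime(2023, 1, 3, 6), "is_fraud": 1},
    {"accountID": "A1", "timestamp": datetime(2023, 1, 5), "is_fraud": 0},
]
for row in point_in_time_join(observations, feature_rows, lookback=timedelta(days=1)):
    print(row)
```

The first observation gets 10.0 rather than the later 30.0, and the last observation gets None because no feature row falls within one day before it.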

Enable offline materialization on the transactions feature set

After feature set materialization is enabled, you can perform a backfill. You can also schedule recurrent materialization jobs. For more information, see the third tutorial in the series.

Set spark.sql.shuffle.partitions in the yaml file according to the feature data size

The Spark configuration spark.sql.shuffle.partitions is an optional parameter that can affect the number of parquet files generated (per day) when the feature set is materialized into the offline store. The default value of this parameter is 200. As a best practice, avoid generating many small parquet files. If offline feature retrieval becomes slow after feature set materialization, go to the corresponding folder in the offline store to check whether the issue involves too many small parquet files (per day), and adjust the value of this parameter accordingly.
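One way to pick a value is to size partitions from the daily data volume. The helper below is a hypothetical heuristic, not guidance from the service: it assumes roughly one parquet file per shuffle partition per day, and the 128 MiB target file size is an illustrative choice.

```python
import math


def suggest_shuffle_partitions(daily_bytes, target_file_bytes=128 * 1024 * 1024):
    """Rough spark.sql.shuffle.partitions value so that one day of materialized data
    is split into files of roughly target_file_bytes each.

    Assumes about one parquet file per shuffle partition per day; the 128 MiB
    target is an illustrative choice, not a documented recommendation.
    """
    if daily_bytes <= 0:
        return 1
    return max(1, math.ceil(daily_bytes / target_file_bytes))


print(suggest_shuffle_partitions(5 * 1024 * 1024))  # small daily volume
print(suggest_shuffle_partitions(3 * 1024 ** 3))    # about 3 GiB per day
```

For small daily volumes like this tutorial's sample data, the heuristic lands on 1, which matches the value used in the YAML file.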

Note

The sample data used in this notebook is small. Therefore, this parameter is set to 1 in the featureset_asset_offline_enabled.yaml file.

```python
from azure.ai.ml.entities import (
    MaterializationSettings,
    MaterializationComputeResource,
)

# Get the registered feature set asset, then enable offline materialization on it.
transactions_fset_config = fs_client.feature_sets.get(name="transactions", version="1")

transactions_fset_config.materialization_settings = MaterializationSettings(
    offline_enabled=True,
    resource=MaterializationComputeResource(instance_type="standard_e8s_v3"),
    spark_configuration={
        "spark.driver.cores": 4,
        "spark.driver.memory": "36g",
        "spark.executor.cores": 4,
        "spark.executor.memory": "36g",
        "spark.executor.instances": 2,
        "spark.sql.shuffle.partitions": 1,
    },
    schedule=None,
)

fs_poller = fs_client.feature_sets.begin_create_or_update(transactions_fset_config)
print(fs_poller.result())
```

You can also save the feature set asset as a YAML resource.

```python
# Optional: save the feature set asset, including its materialization settings, as a YAML file.
transactions_fset_config.dump(
    root_dir
    + "/featurestore/featuresets/transactions/featureset_asset_offline_enabled.yaml"
)
```

Backfill data for the transactions feature set

As explained earlier, materialization computes the feature values for a feature window, and it stores these computed values in a materialization store. Feature materialization increases the reliability and availability of the computed values. All feature queries now use the values from the materialization store. This step performs a one-time backfill for a feature window of 18 months.

Note

You might need to determine a backfill data window value. The window must match the window of your training data. For example, to use 18 months of data for training, you must retrieve features for 18 months. This means you should backfill for an 18-month window.
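A minimal sketch of deriving the backfill window from the training observations follows. The helper names are hypothetical. Moving the start back by the join lookback is an assumption (so the point-in-time join can still find feature values for the earliest observations), and the bounds are kept at whole seconds because the feature window granularity is limited to seconds.

```python
from datetime import datetime, timedelta


def backfill_window(observation_timestamps, join_lookback=timedelta(0)):
    """Feature window (start, end) that covers every training observation.

    The start is moved back by join_lookback and truncated to whole seconds;
    the end is rounded up to the next whole second so no observation falls outside.
    """
    start = (min(observation_timestamps) - join_lookback).replace(microsecond=0)
    end = max(observation_timestamps)
    if end.microsecond:
        end = end.replace(microsecond=0) + timedelta(seconds=1)
    return start, end


def months_covered(start, end):
    """Number of calendar months touched by [start, end], counting both end months."""
    return (end.year - start.year) * 12 + (end.month - start.month) + 1


st, et = datetime(2022, 1, 1), datetime(2023, 6, 30)
print(months_covered(st, et))  # 18, the window used in this tutorial
```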

This code cell materializes data whose current status is None for the defined feature window.

```python
from datetime import datetime
from azure.ai.ml.entities import DataAvailabilityStatus

st = datetime(2022, 1, 1, 0, 0, 0, 0)
et = datetime(2023, 6, 30, 0, 0, 0, 0)

poller = fs_client.feature_sets.begin_backfill(
    name="transactions",
    version="1",
    feature_window_start_time=st,
    feature_window_end_time=et,
    data_status=[DataAvailabilityStatus.NONE],
)
print(poller.result().job_ids)
```

```python
# Get the job URL, and stream the job logs.
fs_client.jobs.stream(poller.result().job_ids[0])
```

Tip

- The timestamp column should follow the yyyy-MM-ddTHH:mm:ss.fffZ format.

- The feature_window_start_time and feature_window_end_time granularity is limited to seconds. Any milliseconds provided in the datetime object are ignored.

- A materialization job is submitted only if data in the feature window matches the data_status that you define when you submit the backfill job.
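If your source stores timestamps as strings, the layout named in the first tip can be produced from a Python datetime as sketched below. The function name is hypothetical, and treating naive datetimes as already being in UTC is an assumption.

```python
from datetime import datetime, timedelta, timezone


def to_feature_timestamp(dt):
    """Render a datetime as yyyy-MM-ddTHH:mm:ss.fffZ (UTC, millisecond precision).

    Naive datetimes are assumed to already be in UTC.
    """
    if dt.tzinfo is None:
        dt = dt.replace(tzinfo=timezone.utc)
    dt = dt.astimezone(timezone.utc)
    return dt.strftime("%Y-%m-%dT%H:%M:%S") + f".{dt.microsecond // 1000:03d}Z"


print(to_feature_timestamp(datetime(2023, 6, 30, 12, 5, 9, 123456)))  # 2023-06-30T12:05:09.123Z
print(to_feature_timestamp(datetime(2023, 6, 30, 14, 0, tzinfo=timezone(timedelta(hours=2)))))  # 2023-06-30T12:00:00.000Z
```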

Print sample data from the feature set. The output shows that the data was retrieved from the materialization store. The get_offline_features() method, which retrieves training and inference data, also uses the materialization store by default.

```python
# Look up the feature set by providing a name and a version, and display a few records.
transactions_featureset = featurestore.feature_sets.get("transactions", "1")
display(transactions_featureset.to_spark_dataframe().head(5))
```

Further explore offline feature materialization

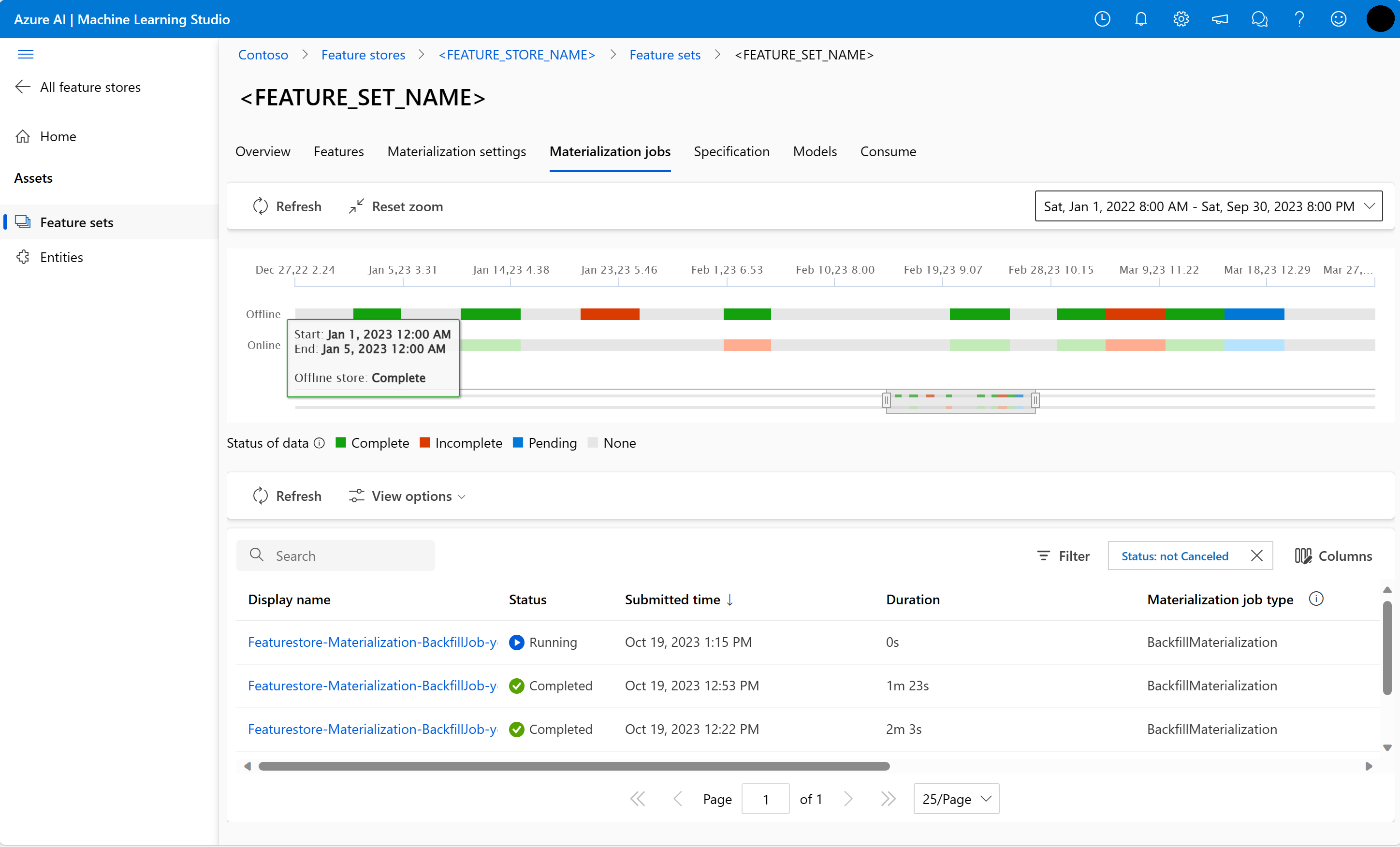

You can explore feature materialization status for a feature set in the Materialization jobs UI.

Select Feature stores on the left pane.

From the list of accessible feature stores, select the feature store for which you performed backfill.

Select the Materialization jobs tab.

Data materialization status can be:

- Complete (green)

- Incomplete (red)

- Pending (blue)

- None (gray)

A data interval represents a contiguous portion of data with the same data materialization status. For example, the earlier snapshot has 16 data intervals in the offline materialization store.

The data can have a maximum of 2,000 data intervals. If your data contains more than 2,000 data intervals, create a new feature set version.
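To see how data intervals arise, the following sketch merges consecutive days that share a status into intervals and checks the 2,000-interval limit. Tracking status per day is a simplifying assumption for illustration; the service tracks intervals at its own granularity, and the function name is hypothetical.

```python
from datetime import date, timedelta

MAX_DATA_INTERVALS = 2000  # limit stated in this tutorial


def data_intervals(daily_status):
    """Merge consecutive days that share a status into (start, end, status) intervals.

    daily_status: list of (day, status) pairs sorted by day. A gap between
    days always starts a new interval.
    """
    intervals = []
    for day, status in daily_status:
        if intervals:
            start, end, last_status = intervals[-1]
            if last_status == status and end + timedelta(days=1) == day:
                intervals[-1] = (start, day, status)
                continue
        intervals.append((day, day, status))
    return intervals


first_day = date(2023, 1, 1)
statuses = ["Complete", "Complete", "Incomplete", "None", "None", "Complete"]
daily = [(first_day + timedelta(days=i), s) for i, s in enumerate(statuses)]
intervals = data_intervals(daily)
print(len(intervals), intervals[0])
print("new version needed:", len(intervals) > MAX_DATA_INTERVALS)
```

Frequent alternation between statuses (for example, many small failed runs) is what drives the interval count up toward the limit.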

You can provide a list of multiple data statuses (for example, ["None", "Incomplete"]) in a single backfill job.

During backfill, a new materialization job is submitted for each data interval that falls within the defined feature window.

If a materialization job is already pending or running for a data interval that hasn't yet been backfilled, a new job isn't submitted for that data interval.
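The selection rules above can be sketched in plain Python: an interval gets a new job only if it overlaps the feature window, its status is one of the requested statuses, and no job is already pending or running for it. The function name is hypothetical, and clipping partially overlapping intervals to the window is an assumption.

```python
from datetime import date


def intervals_to_backfill(intervals, window_start, window_end, data_status, active):
    """Intervals for which a backfill would submit a new materialization job.

    intervals: list of (start, end, status) tuples.
    data_status: statuses requested in the backfill, e.g. {"None", "Incomplete"}.
    active: set of (start, end) intervals that already have a pending or running job.
    Overlapping intervals are clipped to the feature window (an assumption).
    """
    selected = []
    for start, end, status in intervals:
        if end < window_start or start > window_end:
            continue  # outside the feature window
        if status not in data_status or (start, end) in active:
            continue  # status not requested, or a job is already pending or running
        selected.append((max(start, window_start), min(end, window_end), status))
    return selected


intervals = [
    (date(2023, 1, 1), date(2023, 1, 2), "Complete"),
    (date(2023, 1, 3), date(2023, 1, 3), "Incomplete"),
    (date(2023, 1, 4), date(2023, 1, 5), "None"),
    (date(2023, 1, 6), date(2023, 1, 6), "None"),
]
jobs = intervals_to_backfill(
    intervals,
    window_start=date(2023, 1, 2),
    window_end=date(2023, 1, 5),
    data_status={"None", "Incomplete"},
    active={(date(2023, 1, 4), date(2023, 1, 5))},
)
print(jobs)  # only the Incomplete interval on 2023-01-03
```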

You can retry a failed materialization job.

Note

To get the job ID of a failed materialization job:

- Navigate to the feature set Materialization jobs UI.

- Select the Display name of a specific job with Status of Failed.

- Locate the job ID under the Name property found on the job Overview page. It starts with Featurestore-Materialization-.

```python
poller = fs_client.feature_sets.begin_backfill(
    name="transactions",
    version="1",
    job_id="<JOB_ID_OF_FAILED_MATERIALIZATION_JOB>",
)
print(poller.result().job_ids)
```

Updating offline materialization store

- If an offline materialization store must be updated at the feature store level, then all feature sets in the feature store should have offline materialization disabled.

- If offline materialization is disabled on a feature set, materialization status of the data already materialized in the offline materialization store resets. The reset renders data that is already materialized unusable. You must resubmit materialization jobs after enabling offline materialization.
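Before you update the offline materialization store, you need every feature set in the feature store to have offline materialization disabled. A hypothetical pre-check in plain Python could look like this; you might populate the mapping from each feature set's materialization_settings.offline_enabled value (the MaterializationSettings field set earlier in this tutorial), while how you enumerate the feature sets is left open.

```python
def offline_store_update_blockers(offline_enabled_by_feature_set):
    """Feature sets that still have offline materialization enabled.

    offline_enabled_by_feature_set: mapping of "name:version" -> bool.
    The offline store can be updated only when this list is empty.
    """
    return sorted(
        name for name, enabled in offline_enabled_by_feature_set.items() if enabled
    )


blockers = offline_store_update_blockers({"transactions:1": True, "accounts:1": False})
print(blockers)  # ['transactions:1']
```

Keep in mind that disabling offline materialization on a feature set resets the status of its already-materialized data, so plan to resubmit backfill jobs after the store update.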

This tutorial built the training data with features from the feature store, enabled materialization to the offline feature store, and performed a backfill. Next, you'll run model training using these features.

Clean up

The fifth tutorial in the series describes how to delete the resources.

Next steps

- See the next tutorial in the series: Experiment and train models by using features.

- Learn about feature store concepts and top-level entities in managed feature store.

- Learn about identity and access control for managed feature store.

- View the troubleshooting guide for managed feature store.

- View the YAML reference.