Learn how to use Filesystem Spec (FSSPEC) to read/write data to Azure Data Lake Storage (ADLS) using a linked service in a serverless Apache Spark pool in Azure Synapse Analytics.

In this tutorial, you learn how to:

- Read/write ADLS data in a dedicated Spark session.

If you don't have an Azure subscription, create a free account before you begin.

Prerequisites

- Azure Synapse Analytics workspace with an Azure Data Lake Storage Gen2 storage account configured as the default storage. You need the Storage Blob Data Contributor role on the Data Lake Storage Gen2 file system that you work with.

- Spark pool in your Azure Synapse Analytics workspace. For details, see Create a Spark pool in Azure Synapse.

Sign in to the Azure portal

Sign in to the Azure portal.

Create linked services

In Azure Synapse Analytics, a linked service is where you define your connection information to other services. In this section, you add an Azure Synapse Analytics and Azure Data Lake Storage Gen2 linked service.

Open Azure Synapse Studio and select the Manage tab.

Under External connections, select Linked services.

To add a linked service, select New.

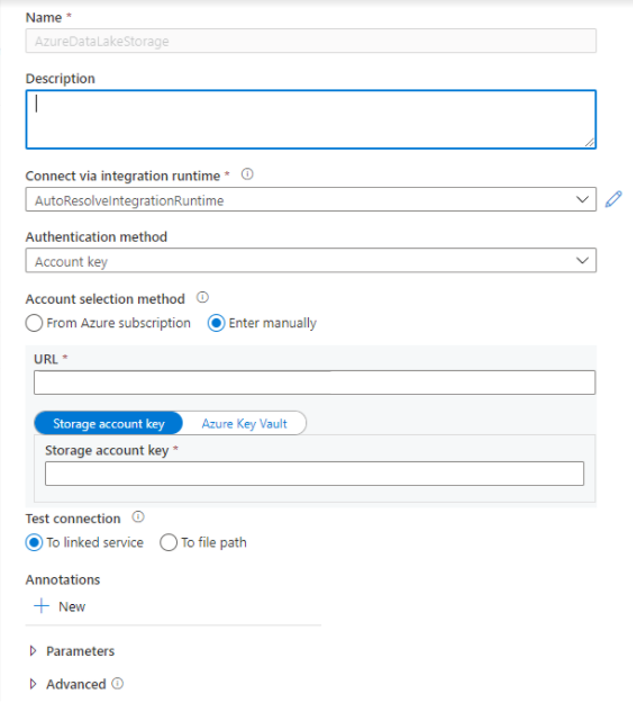

Select the Azure Data Lake Storage Gen2 tile from the list and select Continue.

Enter your authentication credentials. Account key is currently the supported authentication type. Select Test connection to verify that your credentials are correct, and then select Create.

Important

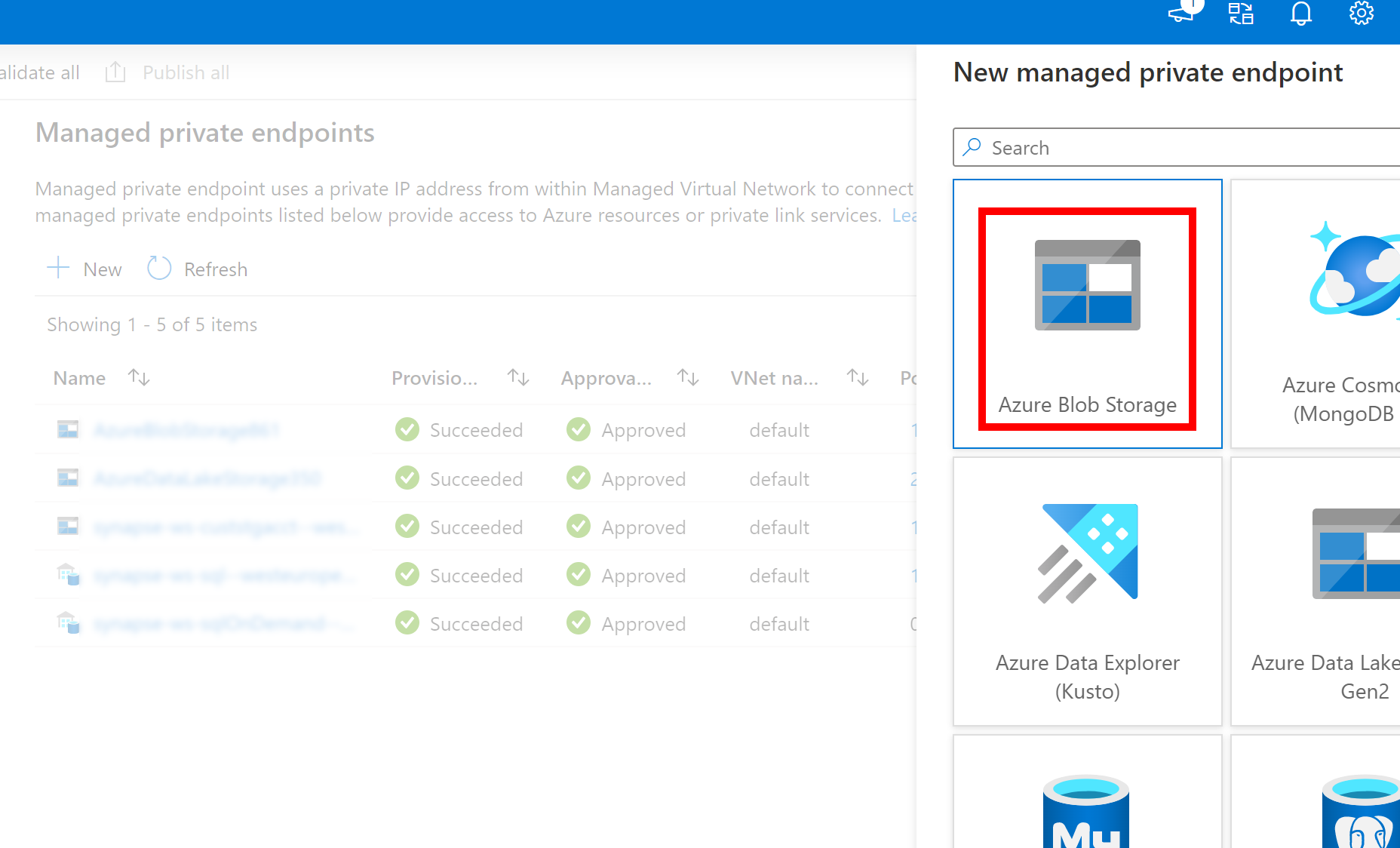

- If the preceding Linked Service to Azure Data Lake Storage Gen2 uses a managed private endpoint (with a dfs URI) , then you need to create another secondary managed private endpoint using the Azure Blob Storage option (with a blob URI) to ensure that the internal fsspec/adlfs code can connect using the BlobServiceClient interface.

- If the secondary managed private endpoint isn't configured correctly, then an error message appears:

ServiceRequestError: Cannot connect to host [storageaccountname].blob.core.windows.net:443 ssl:True [Name or service not known].
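When that error appears, it can help to confirm from a notebook cell whether both the dfs and blob hostnames resolve. The following is a minimal sketch, not part of the original tutorial: the helper names `storage_endpoints` and `can_resolve` are hypothetical, and the `core.windows.net` suffix is an assumption that holds for the Azure public cloud only.

```python
import socket


def storage_endpoints(account_name: str) -> dict:
    """Return the dfs and blob hostnames for a storage account.

    Assumption: Azure public cloud DNS suffix (core.windows.net).
    """
    return {
        "dfs": f"{account_name}.dfs.core.windows.net",
        "blob": f"{account_name}.blob.core.windows.net",
    }


def can_resolve(hostname: str) -> bool:
    """Return True if the hostname resolves through DNS on port 443."""
    try:
        socket.getaddrinfo(hostname, 443)
        return True
    except OSError:
        # socket.gaierror is a subclass of OSError; resolution failed.
        return False


# Example usage (replace the placeholder with your storage account name):
# for kind, host in storage_endpoints("<storageaccountname>").items():
#     print(kind, host, can_resolve(host))
```

If the dfs hostname resolves but the blob hostname doesn't, the secondary managed private endpoint is a likely place to look first.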

Read/Write data using storage account name and key

FSSPEC can read/write ADLS data by specifying the storage account name and key directly.

In Synapse Studio, open Data > Linked > Azure Data Lake Storage Gen2. Upload data to the default storage account.

Run the following code.

Note

Update the file URL, ADLS Gen2 storage account name, and account key in this script before running it.

# To read data
import fsspec
import pandas

adls_account_name = ''  # Provide the exact ADLS account name
adls_account_key = ''  # Provide the exact ADLS account key

fsspec_handle = fsspec.open('abfs[s]://<container>/<path-to-file>', account_name=adls_account_name, account_key=adls_account_key)

with fsspec_handle.open() as f:
    df = pandas.read_csv(f)

# To write data
import fsspec
import pandas

adls_account_name = ''  # Provide the exact ADLS account name
adls_account_key = ''  # Provide the exact ADLS account key

data = pandas.DataFrame({'Name': ['Tom', 'nick', 'krish', 'jack'], 'Age': [20, 21, 19, 18]})

fsspec_handle = fsspec.open('abfs[s]://<container>/<path-to-file>', account_name=adls_account_name, account_key=adls_account_key, mode="wt")

with fsspec_handle.open() as f:
    data.to_csv(f)
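The `abfs[s]` prefix in the script is a placeholder for either the `abfs` or the `abfss` scheme, and the angle-bracket segments stand for your container and file path. As an illustration only, the following sketch builds the URL and the account key options in one place. The helper names `build_abfs_url` and `account_key_options` are hypothetical and aren't part of fsspec.

```python
def build_abfs_url(container: str, path: str, secure: bool = True) -> str:
    """Build an abfs:// or abfss:// URL in the <container>/<path> form used above."""
    scheme = "abfss" if secure else "abfs"
    # Strip a leading slash so the URL has exactly one separator after the container.
    return f"{scheme}://{container}/{path.lstrip('/')}"


def account_key_options(account_name: str, account_key: str) -> dict:
    """Collect the keyword arguments that the script passes to fsspec.open."""
    return {"account_name": account_name, "account_key": account_key}


# Example usage inside a Synapse notebook (values are placeholders):
# import fsspec
# url = build_abfs_url("<container>", "<path-to-file>")
# handle = fsspec.open(url, **account_key_options("<account-name>", "<account-key>"))
```

Keeping the URL and credentials in small helpers makes it harder to run the script with a leftover `abfs[s]` placeholder.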

Read/Write data using linked service

FSSPEC can read/write ADLS data by specifying the linked service name.

In Synapse Studio, open Data > Linked > Azure Data Lake Storage Gen2. Upload data to the default storage account.

Run the following code.

Note

Update the file URL, Linked Service Name, and ADLS Gen2 storage name in this script before running it.

# To read data
import fsspec
import pandas

linked_service_name = ''  # Provide the exact linked service name

fsspec_handle = fsspec.open('abfs[s]://<container>/<path-to-file>', storage_options={'linked_service': linked_service_name})

with fsspec_handle.open() as f:
    df = pandas.read_csv(f)

# To write data
import fsspec
import pandas

linked_service_name = ''  # Provide the exact linked service name

data = pandas.DataFrame({'Name': ['Tom', 'nick', 'krish', 'jack'], 'Age': [20, 21, 19, 18]})

fsspec_handle = fsspec.open('abfs[s]://<container>/<path-to-file>', storage_options={'linked_service': linked_service_name}, mode="wt")

with fsspec_handle.open() as f:
    data.to_csv(f)

Upload file from local file system to default ADLS storage account of Synapse workspace

FSSPEC can upload a file from the local file system to a Synapse workspace default ADLS storage account.

Run the following code.

Note

Update the file URL in this script before running it. Ensure you import pandas when using the following code snippet. This is critical for proper handling of access tokens.

# Import libraries
import fsspec
import os
import pandas

# Set variables
local_file_name = "<local_file_name>"
ADLS_Store_Path = "abfs[s]://<filesystemname>@<account name>.dfs.core.windows.net/" + local_file_name

# Generate local file for testing
with open(local_file_name, mode='w') as f:
    for i in range(1000):
        f.write("Testing local file functionality\n")

print("Created: " + local_file_name)

# Upload local file to ADLS
fs = fsspec.filesystem('abfs[s]')
fs.upload(local_file_name, ADLS_Store_Path)
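After the upload, you might want to confirm that the file arrived intact. The following is a minimal sketch, assuming `fs` is the filesystem object created in the script above. It relies only on the `exists` and `info` methods that fsspec filesystems provide, and the helper name `upload_matches_local` is hypothetical.

```python
import os


def upload_matches_local(fs, local_path: str, remote_path: str) -> bool:
    """Return True if remote_path exists on fs and has the same size as local_path.

    A size comparison is a quick sanity check only; it doesn't compare file contents.
    """
    if not fs.exists(remote_path):
        return False
    # fsspec's info() returns a dict that includes the object's size in bytes.
    return fs.info(remote_path)["size"] == os.path.getsize(local_path)


# Example usage with the variables from the upload script:
# print(upload_matches_local(fs, local_file_name, ADLS_Store_Path))
```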