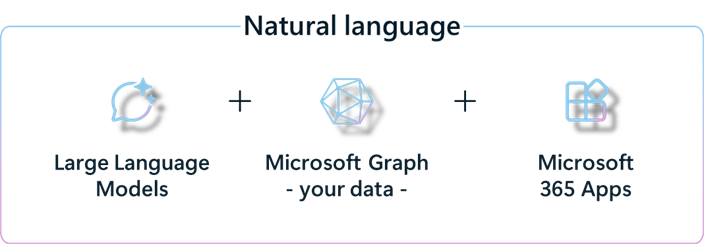

Copilot for Microsoft 365 provides users with a generative AI-powered assistant that interacts in natural language to streamline their workflow. It can summarize emails and documents, recap meetings, create new content, and answer questions, among other capabilities. It is integrated directly into the everyday Microsoft 365 applications (Office). In effect, Copilot provides a natural language interface to a sophisticated AI assistant within Office.

An Australian Government Protected Infosec Registered Assessors Program (IRAP) assessment of Microsoft 365 was released on the Microsoft Service Trust Portal in March 2024. It includes Copilot for Microsoft 365 in the scope of assessed services. This is the highest level of security assessment applicable to a public cloud service in Australia. However, as with any new service deployed into a sensitive environment, introducing Copilot requires some specific configuration. This configuration planning guide addresses the particular considerations for successfully deploying Copilot for Microsoft 365 within a sensitive operational framework. It offers a comprehensive overview of the service architecture and the essential integration considerations for a sensitive environment. This guidance assumes access to Copilot for Microsoft 365 subscription licensing and Microsoft 365 Enterprise E5, in alignment with the Australian Signals Directorate (ASD) Blueprint for Secure Cloud configuration guidance for Microsoft 365.

| Copilot is… | Copilot is not… |
|---|---|
| your AI assistant | your AI replacement |
| human controlled | an independent coworker |
| boosting your productivity | eliminating your role |
| drafting content | selecting 'Send' or 'Save' |
| helping you to find answers | automated decision making |
| ready-to-go SaaS | an app dev component |

Service architecture

When considering the integration of Copilot for Microsoft 365, it's useful to evaluate the service within the context of the Microsoft AI Shared Responsibility Model. This approach mirrors the considerations made for other cloud services across the Infrastructure, Platform, and Software as a Service models. AI services draw a similar distinction between service models. A consumer's considerations and focus should therefore depend on which type of AI service they are adopting. Customers are advised to familiarize themselves with the Microsoft AI Shared Responsibility Model.

The specific architecture of Copilot for Microsoft 365 can be more easily understood in this context, as Copilot for Microsoft 365 is a Software-as-a-Service (SaaS) product seamlessly embedded within Microsoft 365.

Customer responsibilities

The five areas of the AI Shared Responsibility Model that are relevant to consider in implementing SaaS applications such as Copilot for Microsoft 365 are detailed in this article. Customers are advised to follow the configuration guidance in this document, and to reflect on how their internal policies and practices may affect these areas of responsibility.

Within the AI Shared Responsibility Model, Copilot for Microsoft 365 covers all three responsibility scenarios:

Customer responsibility: These items are solely addressed by the customer. Microsoft doesn't have a responsibility to address these components.

Microsoft responsibility: These items are solely addressed by Microsoft. The customer doesn't have a responsibility to address these components.

Shared responsibility: For items of shared responsibility in this model, customers configure the service to meet their specific needs, while Microsoft ensures the platform's integrity and enforces the controls the customer has configured. Customers are also responsible for the nontechnical aspects of these items. These include adopting and adapting policies and procedures, establishing recurring patterns and practices, and deciding which configurations to apply.

Microsoft's compliance with its responsibilities is demonstrated by the Microsoft 365 IRAP assessment report. The report confirms that Copilot for Microsoft 365 operates within the established data security and compliance parameters of the Microsoft 365 environment.
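The model can be summarized as a small lookup table pairing each of the five areas covered in this article with its responsibility scenario. This is a hypothetical sketch for planning checklists, not a Microsoft artifact. The area names and their scenarios come from the sections that follow. The names `Responsibility`, `AREAS`, and `customer_role` are illustrative only. No area here maps to Microsoft-only responsibility, because this article discusses only the areas relevant to customers.

```python
from enum import Enum


class Responsibility(Enum):
    """The three responsibility scenarios described in this article (illustrative)."""
    CUSTOMER = "customer"    # addressed solely by the customer
    MICROSOFT = "microsoft"  # addressed solely by Microsoft
    SHARED = "shared"        # customer configures and sets policy; Microsoft enforces


# Hypothetical mapping of the five areas discussed in this article to their scenario.
AREAS = {
    "User training and accountability": Responsibility.CUSTOMER,
    "Usage policy, admin controls": Responsibility.CUSTOMER,
    "Identity, device, and access management": Responsibility.SHARED,
    "Data governance": Responsibility.SHARED,
    "AI plugins and data connections": Responsibility.SHARED,
}


def customer_role(area: str) -> str:
    """Summarize the customer's part in a given area (illustrative wording)."""
    scenario = AREAS[area]
    if scenario is Responsibility.CUSTOMER:
        return "Customer owns this area entirely."
    if scenario is Responsibility.SHARED:
        return "Customer configures controls and sets policy; Microsoft enforces them."
    return "Microsoft owns this area; no customer action required."


if __name__ == "__main__":
    for name, scenario in AREAS.items():
        print(f"{name}: {scenario.value}")
```

Keeping the mapping in one place makes it easy to check that each area has an owner in an internal deployment plan.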

User training and accountability

Within the AI Shared Responsibility Model, customers have full responsibility for user training and accountability.

As with any new technology, effective training helps users understand and confidently apply generative AI in their work, reducing the chance of errors or inappropriate user behavior and ensuring the AI-assisted outputs are fit for purpose.

Important

Microsoft recommends that all customers prioritize adoption and change management when implementing generative AI products. This includes developing a comprehensive strategy that ensures users are appropriately trained and coached on the correct ways to use AI systems.

Generative AI services, such as Copilot for Microsoft 365, offer substantial benefits in enhancing productivity, creativity, and work quality, but they aren't infallible and do make mistakes. Therefore, a human-centric approach that acknowledges the end user's autonomy is crucial. Customers must ensure that users are properly trained and aware of their responsibilities when using generative AI services. This human-centered philosophy is integral to the Copilot model, ensuring that users remain in control of and accountable for their use of AI.

It's equally imperative that IT professionals (the custodians of the service), senior stakeholders, and business leaders within the organization are educated about the use of Copilot for Microsoft 365. Leaders play a crucial role in communicating the vision for generative AI services throughout the organization. They also ensure that end users receive the guidance and coaching needed to maximize the service's benefits. This includes articulating the vision for Copilot for Microsoft 365, detailing its benefits, and providing appropriate usage scenarios. Together, these give users a holistic understanding of the service and support its effective use.

Important

The commitment of an organization's leadership to drive the vision, and to align policies and practices with these responsibilities, is crucial for a successful implementation of Copilot for Microsoft 365.

By following these practices, many customers are effectively integrating generative AI, aligning it with their business objectives, and enhancing productivity.

For more information about initiating the appropriate change management, adoption, and user training for IT professionals, see:

- Get Started with Copilot for Microsoft 365

- Prepare your Organization for Copilot for Microsoft 365

- Product documentation

- Extensibility

For more information about initiating the appropriate change management, adoption, and user training for end-users, see:

- Product overview

- Product videos

- Copilot Interaction Lab (requires license and sign-in)

- Adoption and change management

Usage policy, admin controls

Within the AI Shared Responsibility Model, customers have full responsibility for usage policy and the application of admin controls.

Each organization must establish its own usage policy in the context of its legal, contractual, and regulatory frameworks, its own work patterns, and its stakeholders' expectations. Considerations include:

- Internal use expectations versus guests

- Use in the creation of external communications

- Acceptance of AI involvement in personalized interactions

- Stakeholder demographics

The predominant focus of this configuration planning guide is on admin controls, which are detailed in the Recommended configuration article.

Identity, device, and access management

Within the AI Shared Responsibility Model, identity, device, and access management are a shared responsibility.

Copilot for Microsoft 365 is designed to integrate seamlessly with the existing identity, device, and access management configurations within a customer’s Microsoft 365 environment. This guide focuses on additional controls specific to Copilot for Microsoft 365 or highlights existing controls that are relevant for organizations implementing Copilot for Microsoft 365.

For further configuration guidance on deploying a sensitive Microsoft 365 environment, consult the ASD Blueprint for Secure Cloud, which offers comprehensive recommendations and protocols for a secure configuration.

Data governance

Within the AI Shared Responsibility Model, data governance is a shared responsibility. Effective data governance is essential for managing knowledge and information, enhancing discoverability, and boosting productivity within any organization that has undergone digital transformation. This document outlines the data governance mechanisms and practices that are integral to a successful implementation of Copilot for Microsoft 365.

For more information on data governance within the broader Microsoft 365 ecosystem, see:

AI plugins and data connections

Within the AI Shared Responsibility Model, plugins and data connections are a shared responsibility. Connectors and plugins enable customers to broaden the capabilities of Copilot for Microsoft 365, allowing it to interface with systems and data beyond their Microsoft 365 environment. Customers therefore need to manage both the risks of using external data and systems and the integration itself. Connectors and plugins may be Microsoft developed, customer developed, or non-Microsoft in origin. Customers are responsible for any non-Microsoft or custom developed connectors and plugins.

IT professionals are encouraged to become acquainted with Copilot for Microsoft 365 extensibility options and architectures.

Plugins, such as Bing, differ from connectors in that they operate in real time to augment Copilot with new abilities and insights. A more detailed description of plugins and connectors is provided in the Service components article.
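A conceptual sketch can picture the difference between the two. The classes below are hypothetical and are not Microsoft APIs. For illustration only, they assume that a connector brings external content in ahead of time so it can be searched later, while a plugin calls an external system live for each prompt. The `customer_owns_extension` helper encodes the rule stated above: customers are responsible for any non-Microsoft or custom developed connector or plugin.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable


class Origin(Enum):
    """Possible origins of a connector or plugin, as listed in this article."""
    MICROSOFT = "Microsoft developed"
    CUSTOM = "customer developed"
    THIRD_PARTY = "non-Microsoft"


def customer_owns_extension(origin: Origin) -> bool:
    # Customers are responsible for any non-Microsoft or custom developed extension.
    return origin in (Origin.CUSTOM, Origin.THIRD_PARTY)


@dataclass
class Connector:
    """Hypothetical model: external content is synced ahead of time, then searched."""
    name: str
    origin: Origin
    index: list[str] = field(default_factory=list)

    def sync(self, external_items: list[str]) -> None:
        # Runs independently of any user prompt (for example, on a schedule).
        self.index.extend(external_items)

    def search(self, query: str) -> list[str]:
        # At prompt time, only previously synced content is visible.
        return [item for item in self.index if query.lower() in item.lower()]


@dataclass
class Plugin:
    """Hypothetical model: an external system is called live for each prompt."""
    name: str
    origin: Origin
    call: Callable[[str], str]

    def invoke(self, query: str) -> str:
        # The result reflects the external system's state at the time of the call.
        return self.call(query)
```

For example, a custom ticketing connector synced with `["Ticket 42: VPN outage"]` returns that item for the query `"vpn"`. `customer_owns_extension(Origin.CUSTOM)` is `True`, which flags it for the customer's own risk review.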