Events

Take the Microsoft Learn AI Skills Challenge

Sep 24, 11 PM - Nov 1, 11 PM

Elevate your skills in Microsoft Fabric and earn a digital badge by November 1.

Register nowThis browser is no longer supported.

Upgrade to Microsoft Edge to take advantage of the latest features, security updates, and technical support.

The initial step in the Hive Metastore (HMS) migration involves determining the databases, tables, and partitions you want to transfer. It's not necessary to migrate everything; you can select specific databases. When identifying databases for migration, make sure to verify if there are managed or external Spark tables.

For HMS considerations, refer to differences between Azure Synapse Spark and Fabric.

Note

Alternatively, if your ADLS Gen2 contains Delta tables, you can create a OneLake shortcut to a Delta table in ADLS Gen2.

Follow these key steps for migration:

Note

Scripts only copy Spark catalog objects to Fabric lakehouse. Assumption is that the data is already copied (for example, from warehouse location to ADLS Gen2) or available for managed and external tables (for example, via shortcuts—preferred) into the Fabric lakehouse.

The focus of Step 1 is on exporting the metadata from source HMS to the Files section of your Fabric lakehouse. This process is as follows:

1.1) Import HMS metadata export notebook into your Azure Synapse workspace. This notebook queries and exports HMS metadata of databases, tables, and partitions to an intermediate directory in OneLake (functions not included yet). Spark internal catalog API is used in this script to read catalog objects.

1.2) Configure the parameters in the first command to export metadata information to an intermediate storage (OneLake). The following snippet is used to configure the source and destination parameters. Ensure to replace them with your own values.

// Azure Synapse workspace config

var SynapseWorkspaceName = "<synapse_workspace_name>"

var DatabaseNames = "<db1_name>;<db2_name>"

var SkipExportTablesWithUnrecognizedType:Boolean = false

// Fabric config

var WorkspaceId = "<workspace_id>"

var LakehouseId = "<lakehouse_id>"

var ExportFolderName = f"export/${SynapseWorkspaceName}/sparkCatalogMetadata"

var OutputFolder = f"abfss://${WorkspaceId}@onelake.dfs.fabric.microsoft.com/${LakehouseId}/Files/${ExportFolderName}/"

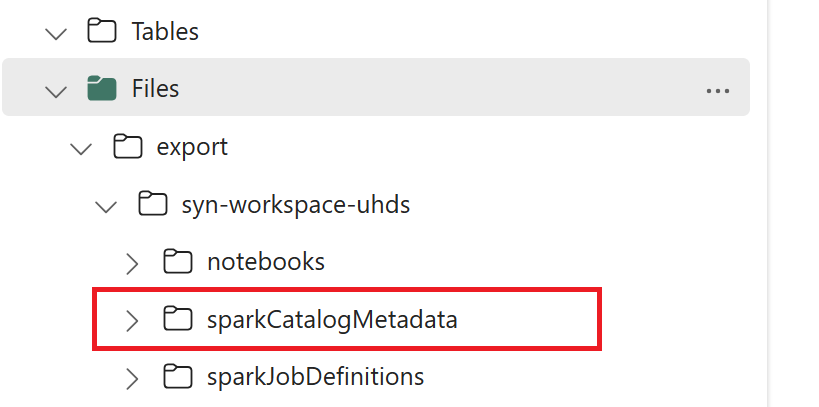

1.3) Run all notebook commands to export catalog objects to OneLake. Once cells are completed, this folder structure under the intermediate output directory is created.

Step 2 is when the actual metadata is imported from intermediate storage into the Fabric lakehouse. The output of this step is to have all HMS metadata (databases, tables, and partitions) migrated. This process is as follows:

2.1) Create a shortcut within the “Files” section of the lakehouse. This shortcut needs to point to the source Spark warehouse directory and is used later to do the replacement for Spark managed tables. See shortcut examples pointing to Spark warehouse directory:

abfss://<container>@<storage_name>.dfs.core.windows.net/synapse/workspaces/<workspace_name>/warehousedbfs:/mnt/<warehouse_dir>abfss://<container>@<storage_name>.dfs.core.windows.net/apps/spark/warehouse2.2) Import HMS metadata import notebook into your Fabric workspace. Import this notebook to import database, table, and partition objects from intermediate storage. Spark internal catalog API is used in this script to create catalog objects in Fabric.

2.3) Configure the parameters in the first command. In Apache Spark, when you create a managed table, the data for that table is stored in a location managed by Spark itself, typically within the Spark's warehouse directory. The exact location is determined by Spark. This contrasts with external tables, where you specify the location and manage the underlying data. When you migrate the metadata of a managed table (without moving the actual data), the metadata still contains the original location information pointing to the old Spark warehouse directory. Hence, for managed tables, WarehouseMappings is used to do the replacement using the shortcut created in Step 2.1. All source managed tables are converted as external tables using this script. LakehouseId refers to the lakehouse created in Step 2.1 containing shortcuts.

// Azure Synapse workspace config

var ContainerName = "<container_name>"

var StorageName = "<storage_name>"

var SynapseWorkspaceName = "<synapse_workspace_name>"

// Fabric config

var WorkspaceId = "<workspace_id>"

var LakehouseId = "<lakehouse_id>"

var ExportFolderName = f"export/${SynapseWorkspaceName}/sparkCatalogMetadata"

var ShortcutName = "<warehouse_dir_shortcut_name>"

var WarehouseMappings:Map[String, String] = Map(

f"abfss://${ContainerName}@${StorageName}.dfs.core.windows.net/synapse/workspaces/${SynapseWorkspaceName}/warehouse"-> f"abfss://${WorkspaceId}@onelake.dfs.fabric.microsoft.com/${LakehouseId}/Files/${ShortcutName}"

)

var OutputFolder = f"abfss://${WorkspaceId}@onelake.dfs.fabric.microsoft.com/${LakehouseId}/Files/${ExportFolderName}/"

var DatabasePrefix = ""

var TablePrefix = ""

var IgnoreIfExists = true

2.4) Run all notebook commands to import catalog objects from intermediate path.

Note

When importing multiple databases, you can (i) create one lakehouse per database (the approach used here), or (ii) move all tables from different databases to a single lakehouse. For the latter, all migrated tables could be <lakehouse>.<db_name>_<table_name>, and you will need to adjust the import notebook accordingly.

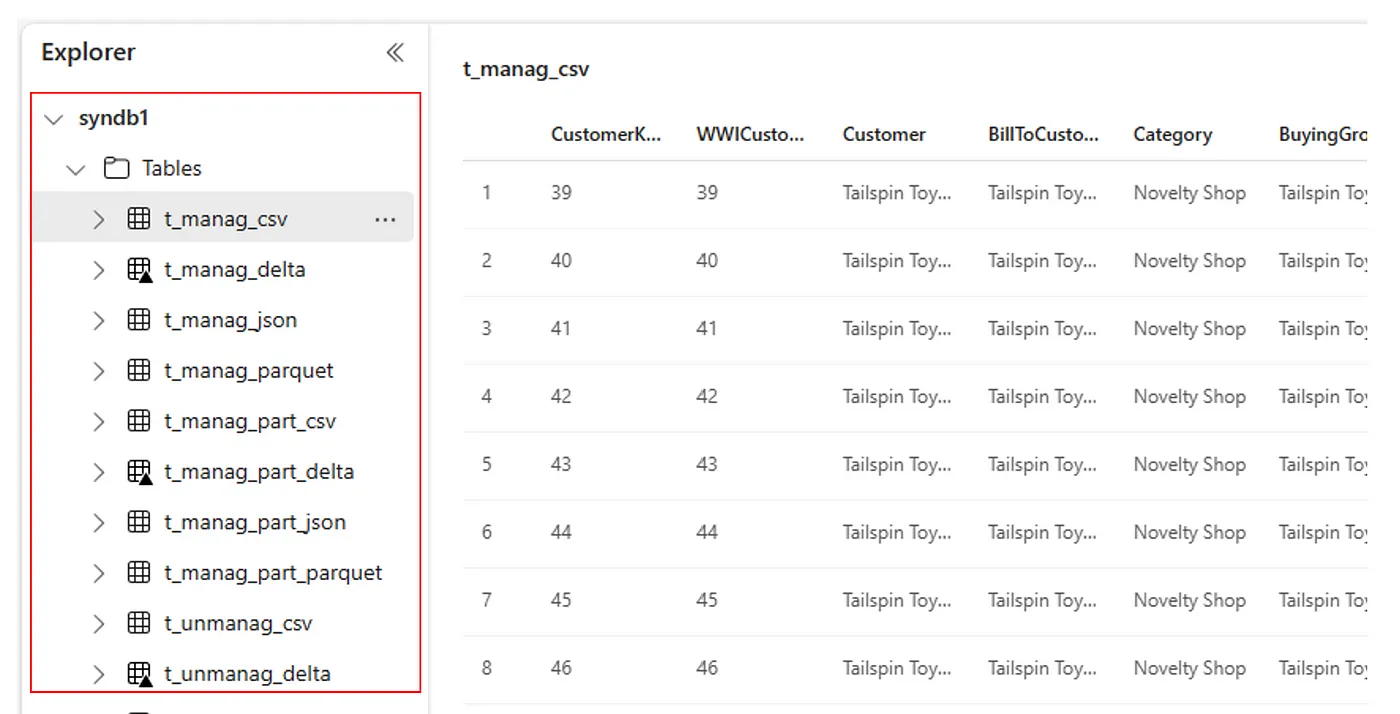

Step 3 is where you validate that metadata has been migrated successfully. See different examples.

You can see the databases imported by running:

%%sql

SHOW DATABASES

You can check all tables in a lakehouse (database) by running:

%%sql

SHOW TABLES IN <lakehouse_name>

You can see the details of a particular table by running:

%%sql

DESCRIBE EXTENDED <lakehouse_name>.<table_name>

Alternatively, all imported tables are visible within the Lakehouse explorer UI Tables section for each lakehouse.

Events

Take the Microsoft Learn AI Skills Challenge

Sep 24, 11 PM - Nov 1, 11 PM

Elevate your skills in Microsoft Fabric and earn a digital badge by November 1.

Register nowTraining

Learning path

Implement a Lakehouse with Microsoft Fabric - Training

Implement a Lakehouse with Microsoft Fabric

Certification

Microsoft Certified: Fabric Analytics Engineer Associate - Certifications

As a Fabric analytics engineer associate, you should have subject matter expertise in designing, creating, and deploying enterprise-scale data analytics solutions.