
In this tutorial, you build a pipeline that moves a sample dataset into a Data Warehouse. It is a quick demonstration of how to use the pipeline Copy activity to load data into a Data Warehouse.

Prerequisites

To get started, you must complete the following prerequisites:

- A Microsoft Fabric tenant account with an active subscription. Create an account for free.

- Make sure you have a Microsoft Fabric-enabled workspace: Create a workspace.

- Make sure you have already created a Data Warehouse. To create one, see Create a Data Warehouse.

Create a pipeline

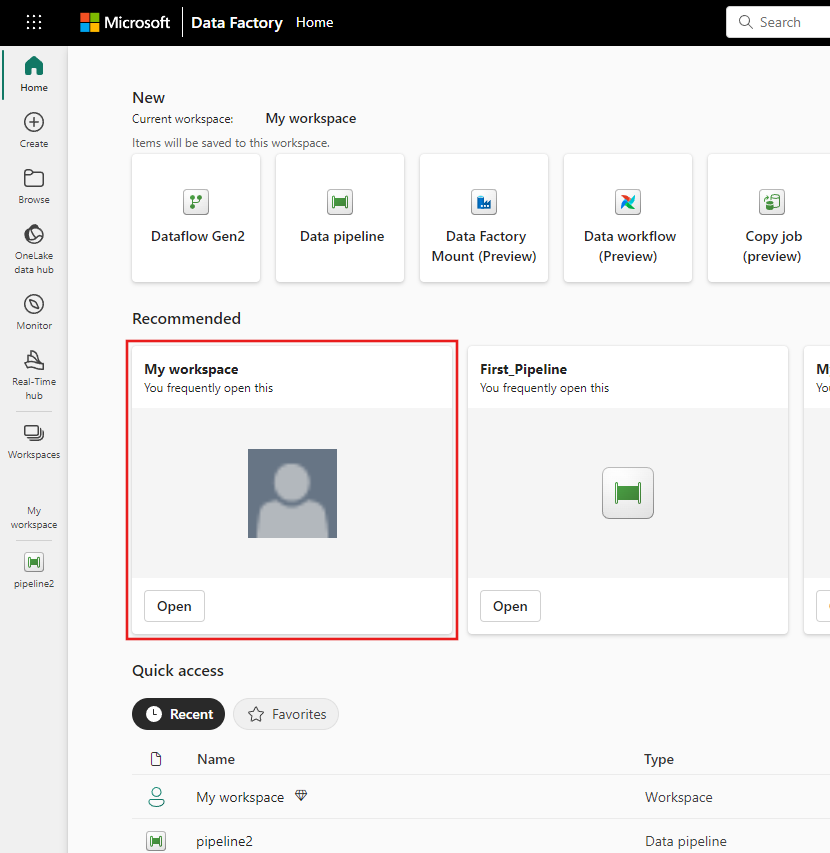

Navigate to Power BI.

Select the Power BI icon in the bottom left of the screen, then select Fabric to open the Data Factory homepage.

Navigate to your Microsoft Fabric workspace. If you created a new workspace in the Prerequisites section, use that one.

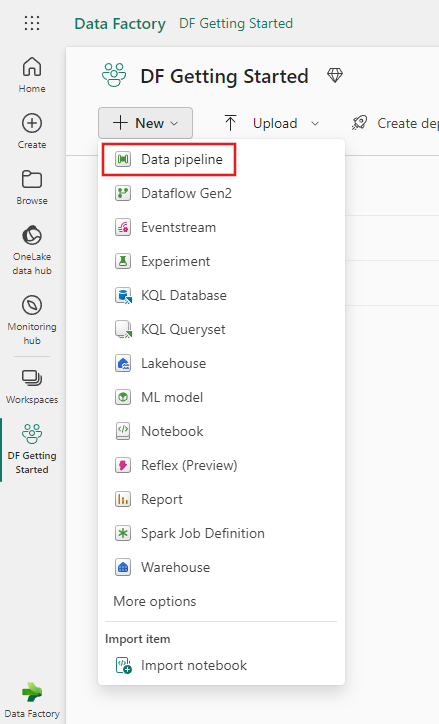

Select + New item.

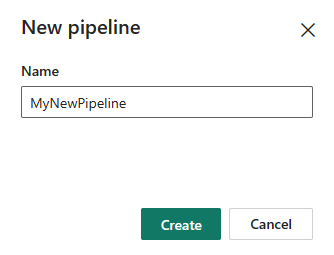

Search for and select Pipeline, then enter a pipeline name to create a new pipeline.
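If you prefer to script this step instead of using the UI, the Microsoft Fabric REST API provides a Create Item endpoint. The following is a minimal sketch that only builds the request and does not send it. It assumes that the item type for pipelines is `DataPipeline` and that you already have a Microsoft Entra access token for the Fabric API. The function name and placeholder values are hypothetical, so check the current API reference before you rely on it.

```python
import json
import urllib.request

# Base URL of the Microsoft Fabric REST API (v1).
FABRIC_API = "https://api.fabric.microsoft.com/v1"


def build_create_pipeline_request(
    workspace_id: str, pipeline_name: str, token: str
) -> urllib.request.Request:
    """Build, but do not send, a Create Item request for an empty pipeline.

    Assumptions: pipelines use the item type "DataPipeline", and `token` is a
    Microsoft Entra access token for the Fabric API obtained separately.
    """
    body = {"displayName": pipeline_name, "type": "DataPipeline"}
    return urllib.request.Request(
        url=f"{FABRIC_API}/workspaces/{workspace_id}/items",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical placeholder values; substitute your own workspace ID and token.
    request = build_create_pipeline_request("<workspace-id>", "pipeline1", "<token>")
    print(request.get_method(), request.full_url)
    # Sending it with urllib.request.urlopen(request) needs network access and a valid token.
```

Keeping request construction separate from sending makes the sketch easy to inspect and test without calling the service.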

Copy data using pipeline

In this section, you build your pipeline by following the steps below to copy a sample dataset provided with pipelines into a Data Warehouse.

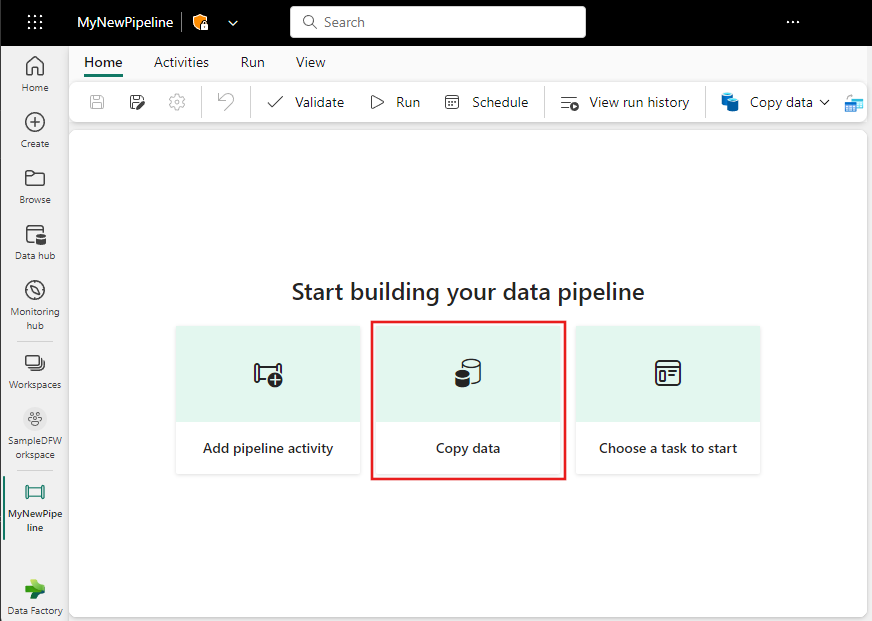

Step 1: Start with the Copy assistant

Select Copy data assistant on the canvas to open the copy assistant. Alternatively, select Use copy assistant from the Copy data drop-down list on the Activities tab of the ribbon.

Step 2: Configure your source

Choose NYC Taxi - Green from the Sample data options as your data source.

In the Connect to data source section of the Copy data assistant, a preview of the NYC Taxi - Green sample data is displayed. Select Next to move on to the data destination.

Step 3: Configure your destination

Select the OneLake tab and choose an existing Warehouse.

Map your source data to the destination Warehouse table by specifying the Table, then select Next again.
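Behind the assistant, the column mapping is stored as part of the Copy activity definition. The following sketch builds a mapping in the `TabularTranslator` shape that Azure Data Factory copy activities use. It assumes that Fabric pipelines accept the same shape, so treat it as illustrative. The function name and column names are hypothetical, and it is not a claim about the NYC Taxi - Green schema.

```python
from typing import Dict, Iterable, Tuple


def build_column_mappings(pairs: Iterable[Tuple[str, str]]) -> Dict[str, object]:
    """Turn (source column, destination column) pairs into a translator dict.

    The "TabularTranslator" shape follows Azure Data Factory's copy activity
    JSON; we assume, without confirmation here, that Fabric uses the same shape.
    """
    mappings = []
    seen_sinks = set()
    for source, sink in pairs:
        if sink in seen_sinks:
            # Two source columns cannot both feed the same destination column.
            raise ValueError(f"destination column mapped twice: {sink}")
        seen_sinks.add(sink)
        mappings.append({"source": {"name": source}, "sink": {"name": sink}})
    return {"type": "TabularTranslator", "mappings": mappings}


# Hypothetical column names, for illustration only.
translator = build_column_mappings([
    ("vendorID", "VendorId"),
    ("tripDistance", "TripDistance"),
])
print(translator["mappings"][0])
```

Rejecting duplicate destination columns catches a mapping mistake before the definition reaches the pipeline.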

Configure any other settings on the Settings page. For this tutorial, select Next directly, because you don't need staging or the copy command.

Step 4: Review and run your copy activity

Review the copy activity settings from the previous steps, then select Save + Run to start the activity. If needed, you can return to any previous step in the tool to edit your settings first.

The Copy activity is added to your new pipeline canvas. When you select the Copy data activity, all of its settings, including advanced settings, are available in the tabs below the pipeline canvas.
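Save + Run starts the pipeline from the UI. To trigger a saved pipeline from a script, the Fabric REST API offers a Run On Demand Item Job endpoint. This minimal sketch only builds the request. It assumes that the job type for pipelines is `Pipeline` and that an empty body is acceptable for a pipeline without parameters. The function name is hypothetical. As we understand it, a successful call returns `202 Accepted` with a `Location` header for the new job instance, but confirm this against the current API reference.

```python
import urllib.request

# Base URL of the Microsoft Fabric REST API (v1).
FABRIC_API = "https://api.fabric.microsoft.com/v1"


def build_run_pipeline_request(
    workspace_id: str, pipeline_id: str, token: str
) -> urllib.request.Request:
    """Build, but do not send, an on-demand run request for a pipeline.

    Assumptions: the job type is "Pipeline", and no request body is needed
    when the pipeline has no parameters.
    """
    url = (
        f"{FABRIC_API}/workspaces/{workspace_id}/items/{pipeline_id}"
        "/jobs/instances?jobType=Pipeline"
    )
    return urllib.request.Request(
        url=url,
        data=b"",  # empty body: run with the pipeline's saved settings
        headers={"Authorization": f"Bearer {token}"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical placeholder IDs and token.
    request = build_run_pipeline_request("<workspace-id>", "<pipeline-id>", "<token>")
    print(request.get_method(), request.full_url)
```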

Run and schedule your pipeline

You can monitor the run and check the results on the Output tab below the pipeline canvas. Select the run details button (the glasses icon) to view the run details.

The run details show how much data was read and written, along with other details about the run.

You can also schedule the pipeline to run at a specific frequency, for example every 15 minutes. You can optionally specify a Start time and an End time for the schedule. If you don't specify a start time, the schedule starts when you apply it. If you don't specify an end time, the pipeline keeps running every 15 minutes indefinitely.
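The start-time and end-time rules for a recurring schedule can be modeled with a small generator. This is an idealized sketch, not how the Fabric scheduler is implemented. It assumes that runs land exactly on interval boundaries and that a run falling exactly on the end time still happens. The real service may differ on both points. The function name is hypothetical.

```python
from datetime import datetime, timedelta
from itertools import islice
from typing import Iterator, Optional


def scheduled_runs(
    applied_at: datetime,
    interval: timedelta = timedelta(minutes=15),
    start: Optional[datetime] = None,
    end: Optional[datetime] = None,
) -> Iterator[datetime]:
    """Yield the times at which a recurring schedule would trigger a run.

    With no start time, the schedule begins when it is applied.
    With no end time, the generator never stops (the schedule recurs forever).
    Assumption: a run exactly at the end time is still included.
    """
    current = start if start is not None else applied_at
    while end is None or current <= end:
        yield current
        current += interval


applied = datetime(2024, 1, 1, 9, 0)
# No end time: take only the first three runs of an endless schedule.
print(list(islice(scheduled_runs(applied), 3)))
# With an end time of 09:40, the schedule stops after the 09:30 run.
print(list(scheduled_runs(applied, end=datetime(2024, 1, 1, 9, 40))))
```

A generator fits the "no end time" case naturally, because callers decide how many future runs to inspect.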

Related content

This tutorial showed you how to load sample data into a Data Warehouse using Data Factory in Microsoft Fabric. You learned how to:

- Create a pipeline.

- Copy data using your pipeline.

- Run and schedule your pipeline.

Next, learn more about monitoring your pipeline runs.