Usage considerations for DICOM data ingestion in healthcare data solutions (preview)

[This article is prerelease documentation and is subject to change.]

This article outlines key considerations to review before using the DICOM data ingestion capability. Understanding these factors ensures smooth integration and operation within your healthcare data solutions (preview) environment. This resource also helps you effectively navigate some potential challenges and limitations with the capability.

Ingestion file size

For the preview release of the DICOM data ingestion capability, there's a logical size limit of 3 GB for ZIP files and 600 MB for native DCM files. If your files exceed these limits, you might experience longer execution times or failed ingestion. We recommend splitting larger ZIP files into smaller segments (each under 3 GB) to ensure successful execution. For native DCM files larger than 600 MB, scale up the Spark nodes (executors) as needed.
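The following is a minimal sketch of one way to split a large ZIP file into smaller segments before you place it in the Ingest folder. It isn't part of the capability. The function names `plan_segments` and `split_zip` and the `_partNNN` naming scheme are hypothetical. The sketch estimates segment sizes from the compressed size of each entry, so the resulting files can be slightly larger than the estimate; leave some margin below the limit.

```python
import zipfile
from pathlib import Path

ZIP_LIMIT_BYTES = 3 * 1024**3    # 3 GB preview limit for ZIP files
DCM_LIMIT_BYTES = 600 * 1024**2  # 600 MB preview limit for native DCM files


def plan_segments(sizes, max_bytes):
    """Greedily group (name, size) pairs so each group's total stays within max_bytes.

    An entry that is larger than max_bytes on its own ends up alone in a segment.
    """
    segments, current, total = [], [], 0
    for name, size in sizes:
        if current and total + size > max_bytes:
            segments.append(current)
            current, total = [], 0
        current.append(name)
        total += size
    if current:
        segments.append(current)
    return segments


def split_zip(src, dest_dir, max_bytes=ZIP_LIMIT_BYTES):
    """Rewrite one ZIP file as several smaller ZIP files and return their paths."""
    src, dest_dir = Path(src), Path(dest_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)
    out_paths = []
    with zipfile.ZipFile(src) as zin:
        # Use the compressed size of each member as an estimate of its footprint.
        sizes = [(i.filename, i.compress_size) for i in zin.infolist() if not i.is_dir()]
        for n, names in enumerate(plan_segments(sizes, max_bytes), start=1):
            out = dest_dir / f"{src.stem}_part{n:03d}.zip"  # hypothetical naming scheme
            with zipfile.ZipFile(out, "w", zipfile.ZIP_DEFLATED) as zout:
                for name in names:
                    # Reads one member fully into memory; fine for a sketch.
                    zout.writestr(name, zin.read(name))
            out_paths.append(out)
    return out_paths
```

For example, `split_zip("study.zip", "segments", max_bytes=2 * 1024**3)` would write `study_part001.zip`, `study_part002.zip`, and so on into a `segments` folder.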

DICOM tag extraction

We prioritize promoting the 30 tags present in the bronze lakehouse dicomimagingmetastore delta table for the following two reasons:

- These 30 tags are crucial for general querying and exploration of DICOM data, such as modality and laterality.

- These tags are necessary for generating and populating the silver (FHIR) and gold (OMOP) delta tables in subsequent execution steps.

You can extend the table and promote other DICOM tags of interest. However, the DICOM data ingestion notebooks don't automatically recognize or process any other DICOM tag columns that you add to the dicomimagingmetastore delta table in the bronze lakehouse. You need to process the extra columns independently.

Append pattern in the bronze lakehouse

All newly ingested DCM (or ZIP) files are appended to the dicomimagingmetastore delta table in the bronze lakehouse. For every successfully ingested DCM file, we create a new record entry in the dicomimagingmetastore delta table. There's no business logic for merge or update operations at the bronze lakehouse level.

The dicomimagingmetastore delta table reflects every ingested DCM file at the DICOM instance level and should be considered as such. If the same DCM file is ingested again into the Ingest folder, we add another entry to the dicomimagingmetastore delta table for the same file. However, the file names are different because of the Unix timestamp prefix. Depending on the date of ingestion, the file might be placed within a different yyyy/mm/dd folder.

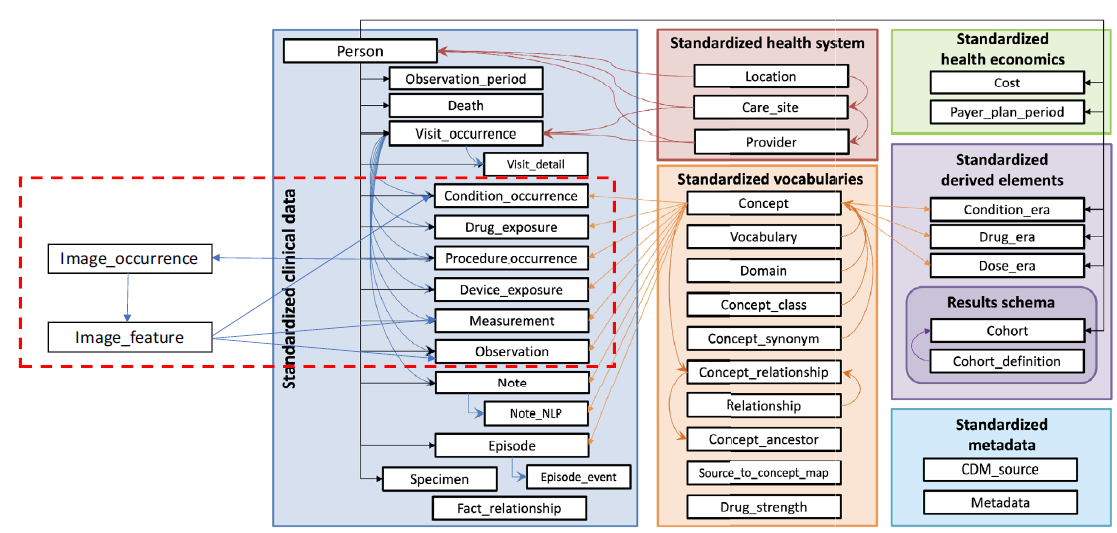

OMOP version and imaging extensions

The current implementation of the gold lakehouse is based on Observational Medical Outcomes Partnership (OMOP) Common Data Model version 5.4. OMOP doesn't yet have a normative extension to support imaging data. Hence, the capability implements the extension proposed in Development of Medical Imaging Data Standardization for Imaging-Based Observational Research: OMOP Common Data Model Extension. This extension is the most recent proposal in the imaging research field and was published on February 5, 2024. The preview release of the DICOM data ingestion capability is limited to the Image_Occurrence table in the gold lakehouse.

Structured streaming in Spark

Structured streaming is a scalable and fault-tolerant stream processing engine built on the Spark SQL engine. You can express your streaming computation the same way you would express a batch computation on static data. The system ensures end-to-end fault-tolerance guarantees through checkpoints and Write-Ahead Logs. To learn more about structured streaming, go to Structured Streaming Programming Guide (v3.5.1).

We use foreachBatch to process the incremental data. This method lets you apply arbitrary operations and custom write logic to the output of a streaming query, and it runs that logic on the output data of every micro-batch. In the DICOM data ingestion capability, structured streaming is used in the following execution steps:

| Execution step | Checkpoint folder location | Tracked objects |
|---|---|---|
| Extract DICOM metadata into the bronze lakehouse | healthcare#.HealthDataManager\DMHCheckpoint\medical_imaging\dicom_metadata_extraction | DCM files in the bronze lakehouse under Files\Process\Imaging\DICOM\yyyy\mm\dd. |
| Convert DICOM metadata to the FHIR format | healthcare#.HealthDataManager\DMHCheckpoint\medical_imaging\dicom_to_fhir | Delta table dicomimagingmetastore in the bronze lakehouse. |
| Ingest data into the bronze lakehouse ImagingStudy delta table | healthcare#.HealthDataManager\DMHCheckpoint\<bronzelakehouse>\ImagingStudy | FHIR NDJSON files in the bronze lakehouse under Files\Process\Clinical\FHIR NDJSON\yyyy\mm\dd\ImagingStudy. |
| Ingest data into the silver lakehouse ImagingStudy delta table | healthcare#.HealthDataManager\DMHCheckpoint\<silverlakehouse>\ImagingStudy | Delta table ImagingStudy in the bronze lakehouse. |

Tip

You can use OneLake file explorer to view the content of the files and folders listed in the table. For more information, see Use OneLake file explorer.
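To illustrate why each execution step keeps a checkpoint folder, here's a plain-Python simulation of checkpointed micro-batch processing. It isn't Spark code and doesn't reflect the notebooks' implementation; the `CheckpointedSource` class and `record_batch` callback are hypothetical. In PySpark, the corresponding mechanism is the `foreachBatch` method of a streaming writer combined with the `checkpointLocation` option.

```python
from typing import Callable, Iterable, List, Set, Tuple


class CheckpointedSource:
    """Toy stand-in for a streaming source plus its checkpoint (not a Spark API)."""

    def __init__(self) -> None:
        self.processed: Set[str] = set()  # plays the role of the checkpoint folder
        self.batch_id = 0

    def run_once(self, available: Iterable[str],
                 process_batch: Callable[[List[str], int], None]) -> int:
        """Process only items not yet recorded in the checkpoint; return how many ran."""
        new_items = sorted(set(available) - self.processed)
        if not new_items:
            return 0  # nothing new, so no micro-batch is produced
        process_batch(new_items, self.batch_id)  # analogous to the foreachBatch callback
        self.processed.update(new_items)         # commit only after the batch succeeds
        self.batch_id += 1
        return len(new_items)


seen: List[Tuple[int, List[str]]] = []
source = CheckpointedSource()


def record_batch(items: List[str], batch_id: int) -> None:
    """Hypothetical per-batch logic; here it only records what it was given."""
    seen.append((batch_id, items))


source.run_once(["a.dcm", "b.dcm"], record_batch)
source.run_once(["a.dcm", "b.dcm", "c.dcm"], record_batch)  # only c.dcm is new
source.run_once(["a.dcm", "b.dcm", "c.dcm"], record_batch)  # nothing new
```

Because the tracked objects are recorded after each successful batch, rerunning a step picks up only the files or table rows that arrived since the previous run.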

Group pattern in the bronze lakehouse

Group patterns are applied when you ingest new records from the dicomimagingmetastore delta table in the bronze lakehouse to the ImagingStudy delta table in the bronze lakehouse. The DICOM data ingestion capability groups all the instance-level records in the dicomimagingmetastore delta table by the study level. It creates one record per DICOM study as an ImagingStudy, and then inserts the record into the ImagingStudy delta table in the bronze lakehouse.
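As a rough illustration of the grouping step, the following sketch collapses instance-level rows into one record per study. The `seriesinstanceuid` column and the shape of the output record are assumptions made for the example, and `group_instances_by_study` is a hypothetical helper, not a function from the notebooks.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def group_instances_by_study(instances: Iterable[Dict[str, str]]) -> List[Dict[str, object]]:
    """Collapse instance-level rows into one summary record per DICOM study."""
    studies = defaultdict(list)
    for row in instances:
        studies[row["studyinstanceuid"]].append(row)

    result = []
    for study_uid, rows in studies.items():
        result.append({
            "studyinstanceuid": study_uid,
            "patientid": rows[0]["patientid"],
            # Distinct series in the study (column name assumed for illustration).
            "series": sorted({r["seriesinstanceuid"] for r in rows}),
            "numberOfInstances": len(rows),
        })
    return result


rows = [
    {"studyinstanceuid": "1.2.3", "seriesinstanceuid": "1.2.3.1", "patientid": "P1"},
    {"studyinstanceuid": "1.2.3", "seriesinstanceuid": "1.2.3.2", "patientid": "P1"},
    {"studyinstanceuid": "4.5.6", "seriesinstanceuid": "4.5.6.1", "patientid": "P2"},
]
studies = group_instances_by_study(rows)
```

Here, three instance rows produce two study-level records, one for each distinct `studyinstanceuid`.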

Upsert pattern in the silver lakehouse

The upsert operation compares the FHIR delta tables between the bronze and silver lakehouses based on the {FHIRResource}.id:

- If a match is identified, the silver record is updated with the new bronze record.

- If there's no match identified, the bronze record is inserted as a new record in the silver lakehouse.
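The two rules above can be sketched with a dictionary keyed on the resource ID. This is only an illustration of the matching logic, not the notebooks' implementation; the `upsert` helper and the in-memory `silver` dictionary are hypothetical stand-ins for the delta table operations.

```python
from typing import Dict, List


def upsert(silver: Dict[str, dict], bronze_records: List[dict]) -> Dict[str, int]:
    """Apply bronze records to the silver store, matching on the record's id."""
    counts = {"updated": 0, "inserted": 0}
    for rec in bronze_records:
        key = rec["id"]
        if key in silver:
            counts["updated"] += 1   # match: the silver record is replaced
        else:
            counts["inserted"] += 1  # no match: a new silver record is added
        silver[key] = rec
    return counts


silver = {"a": {"id": "a", "status": "registered"}}
counts = upsert(silver, [
    {"id": "a", "status": "available"},  # existing id, so this is an update
    {"id": "b", "status": "available"},  # unseen id, so this is an insert
])
```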

ImagingStudy limitations

The upsert operation works as expected when you ingest DCM files from the same DICOM study in the same batch execution. However, if you later ingest more DCM files (from a different batch) that belong to the same DICOM study previously ingested into the silver lakehouse, the ingestion results in an Insert operation. The process doesn't perform an Update operation.

This Insert operation occurs because the notebook creates a new {FHIRResource}.id for ImagingStudy in each batch execution. The new ID doesn't match the IDs from the previous batch. As a result, you see two ImagingStudy records in the silver table with different ImagingStudy.id values. These IDs are related to their respective batch executions but belong to the same DICOM study.

As a workaround, complete the batch executions and merge the two ImagingStudy records in the silver lakehouse based on a combination of unique IDs. However, don't use ImagingStudy.id for the merge. Instead, you can use other IDs such as [studyinstanceuid (0020,000D)] and [patientid (0010,0020)] to merge the records.
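One possible shape for such a merge is sketched below, under the assumption that each record exposes `studyinstanceuid`, `patientid`, and a list of series. Which `id` survives the merge is a choice you need to make; this sketch arbitrarily keeps the first record it encounters. The `merge_studies` helper is hypothetical.

```python
from typing import Dict, List, Tuple


def merge_studies(records: List[dict]) -> List[dict]:
    """Merge ImagingStudy records that share (studyinstanceuid, patientid)."""
    merged: Dict[Tuple[str, str], dict] = {}
    for rec in records:
        key = (rec["studyinstanceuid"], rec["patientid"])  # never ImagingStudy.id
        if key not in merged:
            # First record for this study: copy it so the input isn't mutated.
            merged[key] = {**rec, "series": list(rec["series"])}
        else:
            target = merged[key]
            for series_uid in rec["series"]:
                if series_uid not in target["series"]:
                    target["series"].append(series_uid)
    return list(merged.values())


batch_records = [
    {"id": "x1", "studyinstanceuid": "1.2.3", "patientid": "P1", "series": ["s1"]},
    {"id": "x2", "studyinstanceuid": "1.2.3", "patientid": "P1", "series": ["s2", "s1"]},
    {"id": "x3", "studyinstanceuid": "4.5.6", "patientid": "P2", "series": ["s9"]},
]
merged_records = merge_studies(batch_records)
```

In this example, the records with IDs `x1` and `x2` come from different batches but describe the same study, so they collapse into one record that lists both series.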

OMOP tracking approach

The healthcare#_msft_silver_omop notebook uses the OMOP API to monitor changes in the silver lakehouse delta table. It identifies newly modified or added records that require upserting into the gold lakehouse delta tables. This process is known as Watermarking.

The OMOP API compares the date and time values between {Silver.FHIRDeltatable.modified_date} and {Gold.OMOPDeltatable.SourceModifiedOn} to determine the incremental records that were modified or added since the last notebook execution. However, this OMOP tracking approach doesn't apply to all delta tables in the gold lakehouse. The following tables aren't ingested from the delta table in the silver lakehouse:

- concept

- concept_ancestor

- concept_class

- concept_relationship

- concept_synonym

- fhir_system_to_omop_vocab_mapping

- vocabulary

These gold delta tables are populated using the vocabulary data included in the clinical sample data deployment. The vocabulary dataset in the sample data folder is managed using structured streaming in Spark.

You can locate the vocabulary sample data at the following path:

healthcare#.HealthDataManager\DMHSampleData\healthcare-sampledata\msft_dmh_omop_vocab_data
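The watermark comparison described earlier in this section can be sketched as follows. This is a simplified illustration, not the OMOP API itself: it assumes a single watermark equal to the latest SourceModifiedOn value in the gold table, and the `incremental_records` helper is hypothetical.

```python
from datetime import datetime
from typing import Dict, List


def incremental_records(silver_rows: List[Dict], gold_rows: List[Dict]) -> List[Dict]:
    """Return silver rows modified after the latest SourceModifiedOn seen in gold."""
    watermark = max((g["SourceModifiedOn"] for g in gold_rows), default=None)
    if watermark is None:
        return list(silver_rows)  # first run: everything is new
    return [s for s in silver_rows if s["modified_date"] > watermark]


gold_rows = [
    {"id": 1, "SourceModifiedOn": datetime(2024, 5, 1, 12, 0)},
    {"id": 2, "SourceModifiedOn": datetime(2024, 5, 2, 9, 30)},
]
silver_rows = [
    {"id": "a", "modified_date": datetime(2024, 5, 1, 12, 0)},   # already in gold
    {"id": "b", "modified_date": datetime(2024, 5, 2, 9, 30)},   # already in gold
    {"id": "c", "modified_date": datetime(2024, 5, 3, 8, 15)},   # modified since
]
pending = incremental_records(silver_rows, gold_rows)
```

Only the record with ID `c` is selected, because it's the only one modified after the most recent value in the gold table.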

Upsert pattern in the gold lakehouse

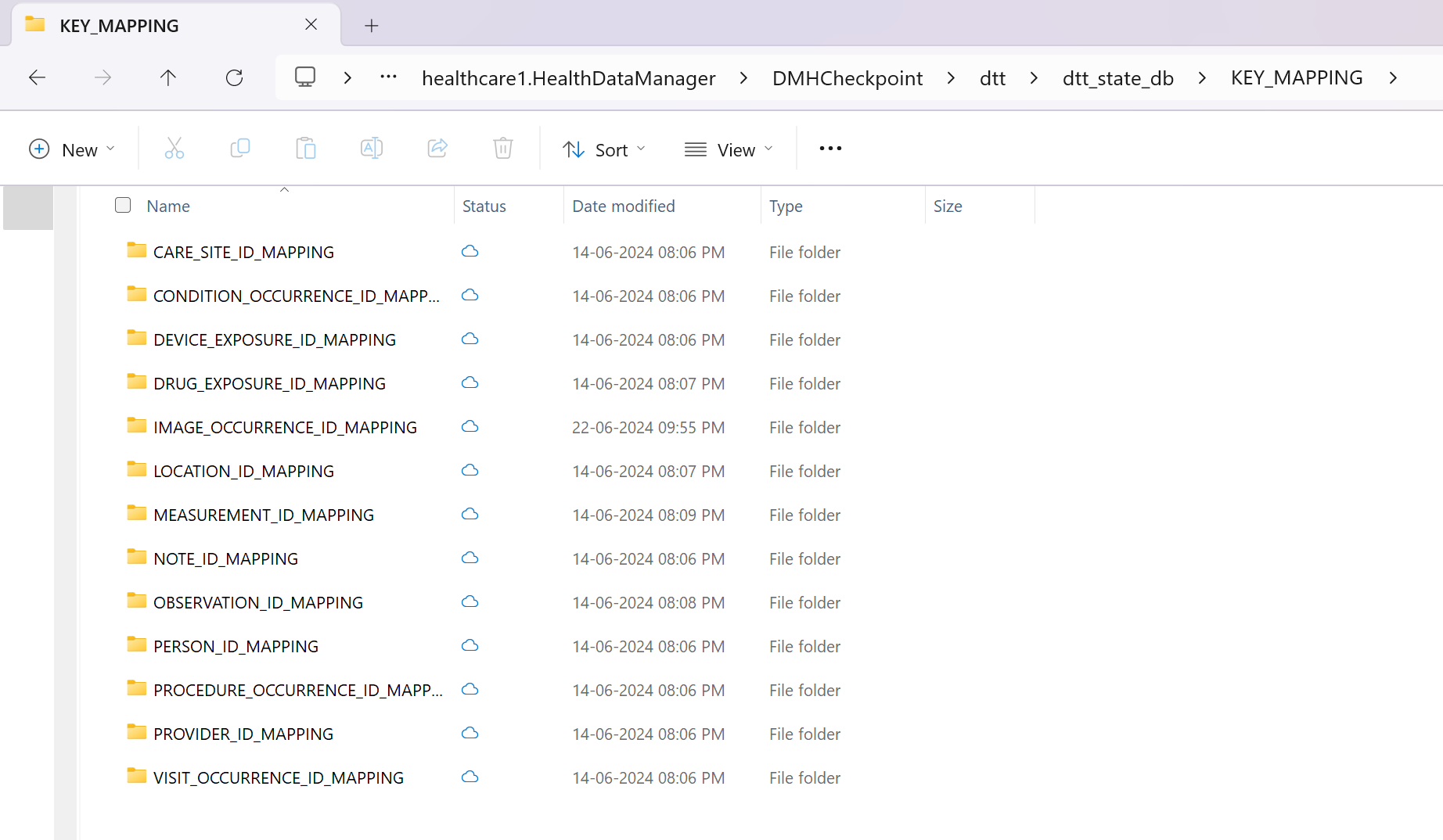

The upsert pattern in the gold lakehouse differs from the silver lakehouse. The OMOP API used by the healthcare#_msft_silver_omop notebook creates new IDs for each entry in the delta tables of the gold lakehouse. The API creates these IDs when it ingests or converts new records from the silver to gold lakehouse. The OMOP API also maintains internal mappings between the newly created IDs and their corresponding internal IDs in the silver lakehouse delta table.

The API operates as follows:

- If a record is converted from a silver to a gold delta table for the first time, the API generates a new ID in the OMOP gold lakehouse and establishes a mapping between this new ID and the original ID in the silver lakehouse. The record is then inserted into the gold delta table with the newly generated ID.

- If the same record in the silver lakehouse is modified and ingested again into the gold lakehouse, the OMOP API recognizes the existing record in the gold lakehouse by using the mapping information. The record is then updated in the gold lakehouse under the same ID that the OMOP API previously generated.

Mappings between the newly generated IDs (ADRM_ID) in the gold lakehouse and the original IDs (INTERNAL_ID) for each OMOP delta table are stored in OneLake parquet files. You can locate the parquet files at the following file path:

[OneLakePath]\[workspace]\healthcare#.HealthDataManager\DMHCheckpoint\dtt\dtt_state_db\KEY_MAPPING\[OMOPTableName]_ID_MAPPING

You can also query the parquet files in a Spark notebook to view the mapping.
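The mapping behavior described in this section can be illustrated with a small in-memory sketch. It isn't the OMOP API: the `KeyMapping` class, the `upsert_to_gold` helper, and the use of sequential integers for the generated IDs are assumptions made for the example.

```python
from itertools import count
from typing import Dict, Tuple


class KeyMapping:
    """Toy INTERNAL_ID -> ADRM_ID mapping (the real one is stored in parquet files)."""

    def __init__(self) -> None:
        self._next_id = count(1)  # sequential integers are an assumption
        self.internal_to_adrm: Dict[str, int] = {}

    def resolve(self, internal_id: str) -> Tuple[int, bool]:
        """Return (adrm_id, is_new) for a silver-side identifier."""
        if internal_id in self.internal_to_adrm:
            return self.internal_to_adrm[internal_id], False
        adrm_id = next(self._next_id)
        self.internal_to_adrm[internal_id] = adrm_id
        return adrm_id, True


def upsert_to_gold(gold: Dict[int, dict], mapping: KeyMapping, silver_record: dict) -> str:
    """Insert on first sight of a silver record; update under the same ID afterward."""
    adrm_id, is_new = mapping.resolve(silver_record["id"])
    gold[adrm_id] = {**silver_record, "id": adrm_id}
    return "insert" if is_new else "update"


gold: Dict[int, dict] = {}
mapping = KeyMapping()
first = upsert_to_gold(gold, mapping, {"id": "silver-abc", "modality": "CT"})
second = upsert_to_gold(gold, mapping, {"id": "silver-abc", "modality": "MR"})
```

The second call reuses the ID generated by the first call, so the gold store still holds a single record, now with the modified values.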

Integration with DICOM service

The current integration between the DICOM data ingestion capability and the Azure Health Data Services DICOM service supports only Create and Update events. This means you can create new imaging studies, series, and instances, or even update existing ones. However, the integration doesn't yet support Delete events. If you delete a study, series, or instance in the DICOM service, the DICOM data ingestion capability doesn't reflect this change. The imaging data remains unchanged and isn't deleted.

Use OneLake file explorer

The OneLake file explorer application seamlessly integrates OneLake with Windows file explorer. You can use the OneLake file explorer to view any folder or file deployed within your Fabric workspace. You can also see the sample data, OneLake files and folders, and the checkpoint files.

Use Azure Storage Explorer

You can also use Azure Storage Explorer to: