Improving developer self-service should be one of the first problems you tackle in your platform engineering journey.

One of the easiest ways to start enabling automated self-service experiences is to reuse your existing engineering systems. Not only are these systems familiar to you and your internal customers, but they can enable a wide breadth of automation scenarios even if the initial user experience isn't pretty.

This article provides tips for applying your engineering systems to tackle a wider array of self-service scenarios, and details on how to encapsulate best practices into templates that help you start right and stay right.

Evaluate your base DevOps and DevSecOps practices

Engineering systems are a critical aspect of your internal developer platform. Internal developer platforms build on the main tenets of DevOps and DevSecOps to reduce cognitive load for everyone involved.

DevOps combines development and operations to unite people, process, and technology in application planning, development, delivery, and operations. It's intended to improve collaboration across historically siloed roles like development, IT operations, quality engineering, and security. You establish a continuous loop between development, deployment, monitoring, observation, and feedback. DevSecOps layers into this loop with continuous security practices throughout the application development process.

The following sections focus on improvements more directly attributed to the platform engineering movement: paved paths, automated infrastructure provisioning (in addition to application deployment), coding environment setup, along with self-service provisioning and configuration of tools, team assets, and services that aren't directly part of the application development loop.

Establish your desired paved paths

If you have multiple sets of tools that make up your engineering systems already, one early decision to make is whether you want to consolidate them as a part of your initial platform engineering efforts or if you'll support a constellation of different tools from the outset. Defining a set of paved paths within this constellation of tools is most effective and provides an increased level of flexibility.

As you start shifting towards a product mindset, think of the engineering systems within these paved paths as consisting of tools that are managed centrally as a service to development teams. Individual teams or divisions within your organization can then deviate but are expected to manage, maintain, and pay for their tools separately while still adhering to any compliance requirements. This provides a way to feed new tools into the ecosystem without disruption since you can evaluate anything that deviates for possible inclusion in a paved path over time. As one platform engineering lead put it:

You can still do your own thing but do it in a direction we're going… you can change whatever you want, but this becomes your responsibility. You own the changes – you own the sharp knives. - Mark, platform engineering lead, large European multinational retail company

Given that a key goal for platform engineering is to shift to a product mindset where you provide value to your internal customers, this constellation approach typically works better than a top-down mandate. As you establish and refine your paved paths, leaving some flexibility lets teams provide input and address any truly unique requirements for a given application without affecting others in the organization. The result is that some paths are fully paved (golden paths), while others are only partially paved. In cases where there are no unique requirements, the extra work that development teams take on naturally makes them want to move to a supported path over time.

If you prefer a consolidation strategy, migrating existing applications might be more work than you expect, so to start you'll likely want to focus on the start right aspect of this space: new projects. This provides your first paved path, while everything existing is inherently unpaved. Development teams on the unpaved path can then consider moving once your new paved path shows its value to the organization. At that point, you can run a get right campaign to bring everyone to your desired state through two-way communication, since development teams view this as a benefit rather than a tax. During the campaign, platform engineering teams can focus on helping teams migrate, while dev teams provide feedback on how to make the paved paths better.

Regardless, avoid mandating the use of your paved paths. The most effective way to roll out paved paths is to emphasize what teams get out of them rather than through forced adoption. Since your internal developer platform focuses on making these exact same teams happy, budget and time-to-value pressure on individual leaders takes care of the rest. Get right campaigns then provide an avenue for two-way conversations on the best way for those on an unpaved path to switch over.

Use developer automation tools to improve self-service for your paved paths

Part of creating your first paved path should be to establish your core developer automation products. These are important as you start to think about enabling developer self-service capabilities.

Enable automatic application infrastructure provisioning during continuous delivery

If you haven't implemented them already, many of the problems you identified during planning likely point to areas that continuous integration (CI) and continuous delivery (CD) can help resolve. Products like GitHub Actions, Azure DevOps, and Jenkins, along with pull-based GitOps solutions like Flux or Argo CD, exist in this space. You can get started on these topics in the Microsoft DevOps resource center.

Even if you've already implemented a way to continuously deploy your application in existing infrastructure, you should consider using infrastructure as code (IaC) to create or update needed application infrastructure as a part of your CD pipeline.

For example, consider the following illustrations, which show two approaches that use GitHub Actions to update infrastructure and deploy into Azure Kubernetes Service: one using push-based deployments and one using pull-based (GitOps) deployments.

Which one you choose depends on your existing IaC skill set and the details of your target application platform. The GitOps approach is more recent and is popular among organizations that use Kubernetes as a base for their applications, while the push-based model currently gives you the most flexibility given the number of tools available for it. We expect most organizations to use a mix of the two. Regardless, becoming well versed in IaC practices helps you learn patterns that apply to further automation scenarios.
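To make the pull-based model more concrete, here's a minimal sketch of the reconciliation idea behind GitOps agents such as Flux or Argo CD: compare the desired state read from Git with the actual state observed in the environment, then compute what has to change. The resource names and the dict-based state model are hypothetical simplifications; real agents work against the Kubernetes API rather than plain dictionaries.

```python
from dataclasses import dataclass, field


@dataclass
class Plan:
    """Changes an agent would need to apply to converge on the desired state."""
    create: list[str] = field(default_factory=list)
    update: list[str] = field(default_factory=list)
    delete: list[str] = field(default_factory=list)


def reconcile(desired: dict[str, dict], actual: dict[str, dict], prune: bool = True) -> Plan:
    """Compare desired state (from Git) with actual state (from the cluster).

    Both arguments map a hypothetical resource name to its spec.
    """
    plan = Plan()
    for name, spec in sorted(desired.items()):
        if name not in actual:
            plan.create.append(name)
        elif actual[name] != spec:
            plan.update.append(name)
    if prune:
        # Resources that exist but are no longer declared in Git are scheduled for removal.
        plan.delete = sorted(set(actual) - set(desired))
    return plan


desired = {"web": {"image": "web:1.2", "replicas": 3}, "worker": {"image": "worker:2.0"}}
actual = {"web": {"image": "web:1.1", "replicas": 3}, "legacy": {"image": "old:0.9"}}
print(reconcile(desired, actual))
```

In a push-based model, the CI/CD pipeline applies changes at deploy time instead of an agent repeatedly running a loop like this. The `prune` flag loosely mirrors the optional pruning behavior that GitOps agents typically let you turn on or off.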

Centralize IaC in a catalog or registry to scale and improve security

To manage and scale IaC across applications, publish your IaC artifacts centrally for reuse. For example, you can use Terraform modules in a registry, Bicep modules, Radius recipes, or Helm charts stored in a cloud-native OCI artifact registry like Azure Container Registry (ACR) or Docker Hub, or in the catalog in Azure Deployment Environments (ADE). For GitOps and Kubernetes, the Cluster API (and implementations like CAPZ) lets you manage Kubernetes workload clusters, while custom resource definitions like those from Azure Service Operator add support for other kinds of Azure resources. Other tools such as Crossplane support resources across multiple clouds. These tools let you use centralized or common Helm charts in something like ACR for a wider array of scenarios.

Centralizing IaC improves security by giving you better control over who can make updates since they're no longer stored with application code. There's less of a risk of an accidental break caused by an inadvertent change during a code update when experts, operations, or platform engineers make needed changes. Developers also benefit from these building blocks since they don't have to author complete IaC templates themselves and automatically benefit from encoded best practices.

Which IaC format you choose depends on your existing skill set, the level of control you need, and the app model you use. For example, Azure Container Apps (ACA) and the recent experimental Radius OSS incubation project are more opinionated than using Kubernetes directly, but also streamline developer experience. To learn about the pros and cons of different models, see Describe cloud service types. Regardless, referencing centralized and managed IaC rather than having complete definitions in your source tree has significant benefits.
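One lightweight way to encourage teams to reference centralized IaC is a CI check that flags Terraform module sources that don't come from an approved location. This is a sketch only: it uses a regular expression over `.tf` text rather than a real HCL parser, and the approved prefixes (a `contoso` private registry namespace and a `contoso/iac-modules` Git repository) are hypothetical placeholders for your own.

```python
import re

# Hypothetical approved locations for shared modules; replace with your own registry or repos.
APPROVED_PREFIXES = (
    "app.terraform.io/contoso/",
    "git::https://github.com/contoso/iac-modules.git",
)

# Rough match for the source argument inside a module block. A regex is not an HCL
# parser: it assumes `source` appears before any nested braces in the block.
MODULE_SOURCE_RE = re.compile(r'module\s+"[^"]+"\s*\{[^}]*?\bsource\s*=\s*"([^"]+)"')


def unapproved_module_sources(tf_text: str) -> list[str]:
    """Return module sources that are neither local paths nor from an approved location."""
    problems = []
    for source in MODULE_SOURCE_RE.findall(tf_text):
        if source.startswith(("./", "../")):
            continue  # Local module in the same repo; decide separately whether to allow these.
        if not source.startswith(APPROVED_PREFIXES):
            problems.append(source)
    return problems


sample = '''
terraform {
  required_providers {
    azurerm = { source = "hashicorp/azurerm" }
  }
}
module "network" {
  source  = "app.terraform.io/contoso/network/azurerm"
  version = "1.4.0"
}
module "db" {
  source = "github.com/someone/random-db"
}
'''
print(unapproved_module_sources(sample))  # ['github.com/someone/random-db']
```

Restricting the match to `module` blocks keeps provider `source` attributes (like `hashicorp/azurerm`) from being flagged by mistake.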

Persisting any needed provisioning identities or secrets in a way that developers can't directly access them layers in the basic building blocks for governance. For example, consider this illustration on the role separation you can achieve using Azure Deployment Environments (ADE).

Here, platform engineers and other specialists develop IaC and other templates and place them in a catalog. Operations can then add managed identities and subscriptions by environment type and assign developers and other users who are allowed to use them for provisioning.

Developers or your CI/CD pipeline can then use the Azure CLI or Azure Developer CLI to provision preconfigured and controlled infrastructure without even having access to the underlying subscription or identities required to do so. Whether you use something like ADE or not, your continuous delivery system of choice can help you update infrastructure safely and securely by separating secrets and sourcing IaC content from locations developers can't access or modify on their own.

Enable self-service in scenarios beyond application continuous delivery

While CI and CD concepts are tied to application development, many of the things your internal customers want to provision don't directly tie to a particular application. This can be shared infrastructure, creating a repository, provisioning tools, and more.

To understand where this might help, think about where you currently have manual or service-desk based processes. For each, think about these questions:

- How often does this process happen?

- Is the process slow or error prone, or does it require significant work to achieve?

- Are these processes manual due to a required approval step or simply lack of automation?

- Are approvers familiar with source control systems and pull request processes?

- What are the auditing requirements for the processes? Do these differ from your source control system’s auditing requirements?

- Are there processes that you can start with that are lower risk before moving on to more complex ones?

Identify frequent, high-effort, or error-prone processes as potential targets to automate first.
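If it helps to make this triage concrete, a simple score can rank candidate processes. The fields, the formula (monthly hands-on minutes inflated by rework, halved for high-risk processes), and the sample processes are illustrative assumptions rather than a standard method; adjust them to whatever matters in your organization.

```python
from dataclasses import dataclass


@dataclass
class Process:
    name: str
    runs_per_month: int
    minutes_per_run: float   # Hands-on effort each time the process runs.
    error_rate: float        # Fraction of runs that need rework (0.0 to 1.0).
    high_risk: bool = False  # Lower-risk processes are better first candidates.


def priority(p: Process) -> float:
    """Monthly effort, inflated by rework, and halved for high-risk processes."""
    score = p.runs_per_month * p.minutes_per_run * (1 + p.error_rate)
    return score / 2 if p.high_risk else score


candidates = [
    Process("Create repository", runs_per_month=40, minutes_per_run=30, error_rate=0.1),
    Process("Grant prod database access", runs_per_month=10, minutes_per_run=60,
            error_rate=0.2, high_risk=True),
    Process("Provision sandbox environment", runs_per_month=25, minutes_per_run=90, error_rate=0.3),
]
for p in sorted(candidates, key=priority, reverse=True):
    print(f"{p.name}: {priority(p):.0f}")
```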

Use the everything as code pattern

One of the nice things about Git, in addition to its ubiquity, is that it's intended to be a secure, auditable source of information. Beyond the commit history and access controls, concepts like pull requests and branch protection provide a way to establish specific reviewers, a conversation history, and/or automated checks that must pass before merging into the main branch. When combined with flexible task engines like those found in CI/CD systems, you have a secure automation framework.

The idea behind everything as code is that you can turn nearly anything into a file in a secure Git repository. Different tools or agents connected to the repository can then read the content. Treating everything as code aids repeatability through templating and simplifies developer self-service. Let's go through several examples of how this can work.

Apply IaC patterns to any infrastructure

While IaC gained popularity for helping automate application delivery, the pattern extends to any infrastructure, tools, or services you might want to provision and configure, not just those tied to a specific application. Examples include shared Kubernetes clusters with Flux installed, a service like Datadog that multiple teams and applications use, or even your favorite collaboration tools.

The way this works is that you have a separate, secured centralized repository that houses a series of files that represent what should be provisioned and configured (in this case anything from Bicep or Terraform, to Helm charts and other Kubernetes native formats). An operations team or other set of administrators own the repository, and developers (or systems) can submit pull requests (PRs). Once these PRs are merged into the main branch by these administrators, the same CI/CD tools used during application development can kick in to process the changes. Consider the following illustration that shows GitHub Actions, IaC, and deployment identities housed in Azure Deployment Environments:

If you're already using a GitOps approach for application deployment, you can reuse these tools as well. Combining tools like Flux and Azure Service Operator allows you to expand outside of Kubernetes:

In either case, you have a fully managed, reproducible, and auditable source of information, even if what's produced isn't for an application. As with application development, any secrets or managed identities you need are stored in the pipeline/workflow engine or in the native capabilities of a provisioning service.

Since the people making the PRs don't have direct access to these secrets, it provides a way for developers to safely initiate actions that they don't have direct permission to do themselves. This allows you to adhere to the principle of least privilege while still giving developers a self-service option.
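As a rough illustration of the CI side of this flow, here's the kind of script a workflow might run after a PR merges: it reads request files from the repository and validates them before handing off to provisioning. The JSON format, the `kind` values, and the `provision` stub are all hypothetical; in practice the files might be Bicep, Terraform, or Kubernetes manifests, and the handoff would call your IaC tooling with credentials held by the pipeline, never by the PR author.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical request types the platform team has agreed to automate.
ALLOWED_KINDS = {"k8s-namespace", "shared-cluster", "monitoring-account"}
REQUIRED_FIELDS = {"kind", "name", "owner"}


def validate(data: dict, source: str) -> dict:
    """Reject malformed requests before anything is provisioned."""
    missing = REQUIRED_FIELDS - set(data)
    if missing:
        raise ValueError(f"{source}: missing fields {sorted(missing)}")
    if data["kind"] not in ALLOWED_KINDS:
        raise ValueError(f"{source}: unsupported kind {data['kind']!r}")
    return data


def load_requests(root: Path) -> list[dict]:
    """Read and validate every *.json request file under root (the merged main branch)."""
    return [validate(json.loads(p.read_text()), str(p)) for p in sorted(root.glob("**/*.json"))]


def provision(request: dict) -> str:
    """Stub: a real pipeline would call its IaC tooling here, using the pipeline's own identity."""
    return f"would provision {request['kind']} '{request['name']}' for {request['owner']}"


# Simulate a checkout of the central repository containing one merged request.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "team-a.json").write_text(
        json.dumps({"kind": "k8s-namespace", "name": "team-a", "owner": "team-a-leads"}))
    results = [provision(r) for r in load_requests(root)]
print(results)
```

Running the same validation as a required check on the PR itself catches mistakes before an administrator reviews and merges the request.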

Track provisioned infrastructure

As you begin to scale this approach, think about how you want to track the infrastructure that was provisioned. Your Git repository is a source of truth for the configuration, but doesn't tell you the specific URIs and state information about what you created. However, following an everything as code approach gives you a source of information to tap into to synthesize an inventory of provisioned infrastructure. Your provisioner might also be a good source of this information that you can tap into. For example, Azure Deployment Environments includes environment tracking capabilities that developers have visibility into.

To learn more about tracking across various data sources, see Design a developer self-service foundation.
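Here's a minimal sketch of synthesizing such an inventory by joining what's declared in Git with what your provisioner reports, keyed by a shared name. The record shapes, field names, and `example.invalid` URIs are hypothetical; a provisioner such as Azure Deployment Environments or your IaC tool's outputs would supply the real data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InventoryItem:
    name: str
    owner: str
    declared_in: str      # Path of the file in Git that declares the resource.
    uri: Optional[str]    # Reported by the provisioner once the resource exists.
    state: str            # "provisioned", "pending", or "orphaned".


def build_inventory(declared: dict[str, dict], provisioned: dict[str, dict]) -> list[InventoryItem]:
    items = []
    for name, decl in sorted(declared.items()):
        out = provisioned.get(name)
        items.append(InventoryItem(name, decl["owner"], decl["path"],
                                   out["uri"] if out else None,
                                   "provisioned" if out else "pending"))
    for name in sorted(set(provisioned) - set(declared)):
        # Exists in the environment but isn't (or is no longer) declared in Git.
        items.append(InventoryItem(name, "unknown", "", provisioned[name]["uri"], "orphaned"))
    return items


declared = {
    "team-a-ns": {"owner": "team-a", "path": "requests/team-a.json"},
    "shared-cache": {"owner": "platform", "path": "requests/cache.json"},
}
provisioned = {
    "team-a-ns": {"uri": "https://example.invalid/ns/team-a"},
    "old-db": {"uri": "https://example.invalid/db/old"},
}
for item in build_inventory(declared, provisioned):
    print(item.name, item.state, item.uri)
```

Surfacing "orphaned" entries is often as valuable as the inventory itself, since they point to resources created outside your paved path.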

Apply the security as code and policy as code patterns

While provisioning infrastructure is useful, making sure that these environments are secure and generally follow your organization's policies is equally important. This led to the rise of the policy as code concept. Here, configuration files in a source-control repository can be used to do things like drive security scanning or apply infrastructure policies.

Many different products and open-source projects have adopted this approach, including Azure Policy, Open Policy Agent, GitHub Advanced Security, and GitHub CODEOWNERS, among others. When selecting your application infrastructure, services, or tools, be sure to evaluate how well they support these patterns. For more information on refining your application and governance, see Refine your application platform.
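To show the shape of the idea, here's a tiny policy evaluator: rules live in source control next to the code, and CI fails when a resource definition violates one. This is a hypothetical Python stand-in for engines like Azure Policy or Open Policy Agent, which use their own rule formats (JSON policy definitions and Rego, respectively); the rule names and resource fields are made up for illustration.

```python
from typing import Callable, Optional

# Each hypothetical rule returns an error message, or None when the resource complies.
Rule = Callable[[dict], Optional[str]]


def require_tags(*tags: str) -> Rule:
    def check(resource: dict) -> Optional[str]:
        missing = [t for t in tags if t not in resource.get("tags", {})]
        return f"missing tags {missing}" if missing else None
    return check


def deny_public_network_access(resource: dict) -> Optional[str]:
    # This sketch only flags an explicit "Enabled"; a stricter rule could reject a missing value too.
    if resource.get("publicNetworkAccess") == "Enabled":
        return "public network access must be disabled"
    return None


POLICIES: list[Rule] = [require_tags("owner", "costCenter"), deny_public_network_access]


def evaluate(resources: list[dict]) -> list[str]:
    violations = []
    for res in resources:
        for rule in POLICIES:
            message = rule(res)
            if message:
                violations.append(f"{res['name']}: {message}")
    return violations


resources = [
    {"name": "stg-app", "tags": {"owner": "team-a", "costCenter": "42"}, "publicNetworkAccess": "Disabled"},
    {"name": "kv-app", "tags": {"owner": "team-a"}, "publicNetworkAccess": "Enabled"},
]
for violation in evaluate(resources):
    print(violation)
```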

Use everything as code for your own scenarios

Everything as code extends these patterns to a wide variety of automation and configuration tasks beyond IaC. It can support not only creating or configuring any type of infrastructure, but also updating data or triggering workflows in any downstream system.

The PR becomes a good baseline self-service user experience for many different processes, particularly when you're getting started. These processes naturally gain the security, auditability, and rollback benefits that Git itself provides, and the systems involved can change over time without impacting the user experience.

Teams as code

One example of applying everything as code to your own scenarios is the teams as code pattern. Organizations apply this pattern to standardize team membership and, in some cases, developer tooling/service entitlements across a wide variety of systems. This pattern eliminates manual onboarding and offboarding service desk processes, which exist because the systems that developers and operators use each have their own grouping, user, and access concepts. Manual service desk processes are a potential security risk because it’s possible to overprovision access. When using the teams as code pattern, the combination of Git and PRs can enable self-service from an auditable data source.

For an example of a mature, extensive variation of this pattern, check out GitHub's blog post on how they manage Entitlements. GitHub also open-sourced their sophisticated Entitlements implementation for you to try out or adopt. Although the blog post describes all-up employee entitlements, you can apply the teams as code concept to more narrowly scoped development team scenarios. These development teams might not be represented in an employee org chart at all and involve proprietary tools or services that can complicate onboarding or offboarding team members.

Here's a summary of a simplified variation of this idea that uses a CI/CD system and identity provider groups to coordinate updates:

In this example:

- Each system involved was set up to use your identity provider (for example, Microsoft Entra ID) for single sign-on (SSO).

- You use identity provider groups (for example, Entra groups) across systems to manage membership by role to reduce complexity and maintain centralized auditing.

At a high level, here's how this pattern works:

- A central, locked-down Git repository has a set of (typically YAML) files in it that represent each abstract team, related user membership, and user roles. Owners or approvers for team changes can also be stored in this same spot (for example, via CODEOWNERS). Users in these files are referenced by their identity in the identity provider, so this repository acts as the source of truth for teams but not for users.

- All updates to these files are done through pull requests. This ties the conversation and related participants on each request to a Git commit for auditability.

- Leads and individual users can make PRs to add or remove people, and dev leads and other roles can create new teams using PRs with a new team file from a template.

- Whenever a PR is merged into main, a CI/CD system tied to the repository then updates the identity provider system and all downstream systems as appropriate.
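As an example of what a team file and its PR validation might look like, here's a sketch. The schema (`name`, `approvers`, `roles`), the allowed role names, and the `contoso.com` sign-in domain are hypothetical, and the file is shown as JSON so the example needs only the standard library, whereas many implementations use YAML. A check like this can run on every PR before a human approver reviews it.

```python
import json
import re

# Hypothetical team file; in the repository this might live at something like teams/payments.json.
TEAM_FILE = """
{
  "name": "payments",
  "approvers": ["lead@contoso.com"],
  "roles": {
    "maintainer": ["lead@contoso.com", "ana@contoso.com"],
    "contributor": ["ben@contoso.com"]
  }
}
"""

ALLOWED_ROLES = {"maintainer", "contributor", "reader"}
# Users are referenced by their identity provider sign-in name (a hypothetical domain here).
USER_RE = re.compile(r"^[^@\s]+@contoso\.com$")


def validate_team(team: dict) -> list[str]:
    """Return a list of problems; an empty list means the PR can proceed to review."""
    errors = []
    if not re.fullmatch(r"[a-z0-9-]+", team.get("name", "")):
        errors.append("name must be lowercase letters, digits, or hyphens")
    if not team.get("approvers"):
        errors.append("at least one approver is required")
    for role, members in team.get("roles", {}).items():
        if role not in ALLOWED_ROLES:
            errors.append(f"unknown role {role!r}")
        for user in members:
            if not USER_RE.match(user):
                errors.append(f"{role}: {user!r} is not a directory user")
    return errors


print(validate_team(json.loads(TEAM_FILE)))
```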

Specifically, the CI/CD system:

- Uses the appropriate identity provider system API to create or update an identity provider group per role with exactly the individuals in the file (no more, no less).

- Uses APIs for each downstream system to tie that system's grouping concept to the identity provider group for each role (for example, in GitHub and Azure DevOps). This could result in a one-to-many relationship between your team and the downstream system to represent a role.

- (Optionally) Uses APIs for each downstream system to implement permissions logic tied to the system’s grouping mechanism.

- Uses an API to update a locked-down data store with the results (including associating the downstream system team IDs) that can then be consumed by any of your internally built systems. You can also store associations for different system representations of user IDs for the same identity provider user/account here, if needed.
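The first step in that list, making each role group contain exactly the individuals in the file, is a set reconciliation. Here's a sketch that computes additions and removals per role group; `IdentityProvider` is a hypothetical in-memory stand-in for whatever directory API you call (for example, Microsoft Graph for Entra groups), and the `team-{team}-{role}` group naming is an assumed convention. The downstream-system steps are omitted.

```python
from dataclasses import dataclass, field


@dataclass
class IdentityProvider:
    """Hypothetical in-memory stand-in for a directory API, so the sketch runs without credentials."""
    groups: dict[str, set[str]] = field(default_factory=dict)

    def members(self, group: str) -> set[str]:
        return set(self.groups.get(group, set()))

    def add(self, group: str, user: str) -> None:
        self.groups.setdefault(group, set()).add(user)

    def remove(self, group: str, user: str) -> None:
        self.groups[group].discard(user)


def sync_team(idp: IdentityProvider, team: str,
              roles: dict[str, list[str]]) -> dict[str, tuple[list[str], list[str]]]:
    """Make each role group match the team file exactly; return (added, removed) per group."""
    changes = {}
    for role, users in roles.items():
        group = f"team-{team}-{role}"  # Hypothetical naming convention: one group per role.
        desired, current = set(users), idp.members(group)
        added, removed = sorted(desired - current), sorted(current - desired)
        for user in added:
            idp.add(group, user)
        for user in removed:
            idp.remove(group, user)
        changes[group] = (added, removed)
    return changes


idp = IdentityProvider({"team-payments-maintainer": {"lead@contoso.com", "old@contoso.com"}})
changes = sync_team(idp, "payments", {
    "maintainer": ["lead@contoso.com", "ana@contoso.com"],
    "contributor": ["ben@contoso.com"],
})
print(changes)
```

Because the sync is computed from the file every time, rerunning it with an unchanged file produces no changes, which makes it safe to run on every merge. This sketch doesn't handle a role that's deleted from the file entirely; a fuller version would also clean up that role's group.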

If your organization already uses something like Entra entitlement management, you might be able to omit managing group membership from this pattern.

Your needs and policies might change the specifics, but the general pattern can be adapted to any number of variations. Any secrets required to integrate with any downstream systems are maintained either in the CI/CD system (for example, in GitHub Actions or Azure Pipelines) or in something like Azure Key Vault.

Use manual or externally triggered, parameterized workflows

Some of the self-service related problems you identify might not be conducive to using files in Git. Or, you might have a user interface you want to use to drive the self-service experience.

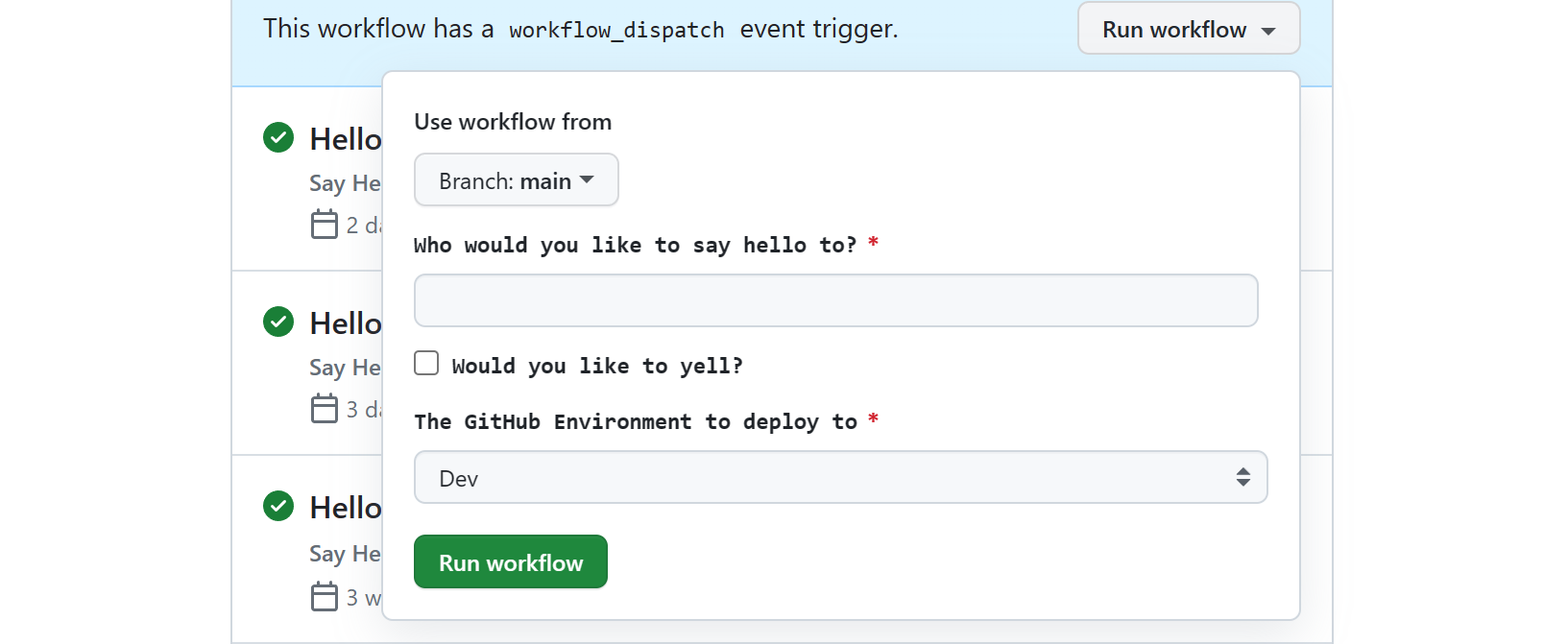

Fortunately, most CI systems, including GitHub Actions and Azure Pipelines, have the ability to set up a workflow with inputs that you can then manually trigger through their UIs or CLIs. Given that developers and related operations roles are likely already familiar with these user experiences, manual triggers can augment the everything as code pattern to enable automation for activities (or jobs) that either don't have a natural file representation or should be fully automated without requiring a PR process.

Your CI system might allow you to opt into triggering these workflows or pipelines from your own user experiences through an API. For GitHub Actions, the key to making this work is the Actions REST API to fire a workflow dispatch event to trigger a workflow run. Azure DevOps triggers are similar and you can also use the Azure DevOps Pipeline API for runs. You'll likely see the same capabilities in other products. Whether triggered manually or through an API, each workflow can support a set of inputs by adding a workflow_dispatch configuration to the workflow YAML file. For example, this is how portal toolkits like Backstage.io interact with GitHub Actions.
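For GitHub Actions, triggering such a workflow from your own user experience comes down to one REST call: `POST /repos/{owner}/{repo}/actions/workflows/{workflow_id}/dispatches`, with a `ref` and the `inputs` that the workflow's `workflow_dispatch` trigger declares (the workflow file name can be used as the workflow ID). This sketch only builds the request with the standard library so you can inspect it before sending. The owner, repository, workflow file, and input names are hypothetical, and the token should come from a secret store rather than code.

```python
import json
import urllib.request


def build_dispatch_request(owner: str, repo: str, workflow_file: str, ref: str,
                           inputs: dict[str, str], token: str) -> urllib.request.Request:
    """Build (but don't send) a GitHub Actions workflow_dispatch request."""
    url = f"https://api.github.com/repos/{owner}/{repo}/actions/workflows/{workflow_file}/dispatches"
    body = json.dumps({"ref": ref, "inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "X-GitHub-Api-Version": "2022-11-28",
            "Content-Type": "application/json",
        },
    )


# Hypothetical example: ask a central automation repo to create a repository from a template.
req = build_dispatch_request(
    "contoso", "platform-automation", "create-repo.yml", "main",
    {"repo_name": "payments-api", "template": "dotnet-webapi"}, token="<token>",
)
print(req.get_method(), req.full_url)
# To send it, call urllib.request.urlopen(req) and check for a 2xx status.
```

The target workflow must declare matching inputs under `on: workflow_dispatch: inputs:`, which is also what makes the same workflow manually runnable from the GitHub UI or the `gh` CLI.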

Your CI/CD system's workflow or job system undoubtedly tracks activities, reports back status, and has detailed logs that both developers and operations teams can use to see what went wrong. In this way, it has some of the same security, auditability, and visibility advantages as the everything as code pattern. However, one thing to keep in mind is that any actions performed by these workflows or pipelines look like a system identity (for example, service principal or managed identity in Microsoft Entra ID) to downstream systems.

You'll have visibility into who initiates requests in your CI/CD system, but you should assess whether this is enough information and make sure your CI/CD retention settings comply with your auditing requirements for cases when this information is critical.

In other cases, the tools you integrate with might have their own tracking mechanisms you can rely on. For example, these CI/CD tools almost always have several notification mechanisms available, like posting to a Microsoft Teams or Slack channel, which keeps anyone who submits a request informed with status updates while the channel provides an informal record of what happened. These same workflow engines are often already designed to integrate with operations tools to further extend the usefulness of these patterns.

In summary, you can implement some automation using files stored in a source control repository thanks to the flexibility of CI/CD tools and their out-of-box user experiences. To see how internal developer platforms can use this approach as a starting point without compromising on more sophisticated capabilities over time, see Design a developer self-service foundation.

Automate setup of developer coding environments

Another common problem in engineering systems is developer coding environment bootstrapping and normalization. Here are some of the common problems you might hear about in this area:

- In some cases, it can take weeks for a developer to get to their first pull request. This is a problematic area when you transfer developers between feature crews and projects fairly frequently (for example, in matrixed organizations), need to ramp up contractors, or are on a team that is in a hiring phase.

- Inconsistency between developers and with your CI systems can lead to frequent "it works on my machine" problems even for seasoned team members.

- Experimentation and upgrading frameworks, run times, and other software can also break existing developer environments and lead to lost time trying to figure out exactly what went wrong.

- For dev leads, code reviews can slow development, since they might require a configuration change to test and then undoing that change once the review is done.

- Team members and operators also have to spend time ramping up related roles beyond development (operators, QA, business, sponsors) to help test, see progress, train business roles, and evangelize the work the team is doing.

Part of your paved paths

To help resolve these problems, think about setup of specific tools and utilities as a part of your well-defined paved paths. Scripting developer machine setup can help, and you can reuse these same scripts in your CI environment. However, consider supporting containerized or virtualized development environments because of the benefits they can provide. These coding environments can be set up in advance to your organization’s or project's specifications.
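As a small example of a setup script you can share between developer machines and CI, here's a sketch that checks for required tools and minimum versions. The tool list, version commands, and minimum versions are hypothetical examples; in a containerized environment, the same check can run when the container image is built.

```python
import re
import shutil
import subprocess

# Hypothetical paved-path requirements: tool -> (version command, minimum version).
REQUIREMENTS = {
    "git": (["git", "--version"], (2, 30)),
    "python3": (["python3", "--version"], (3, 9)),
}


def parse_version(text: str) -> tuple[int, ...]:
    """Extract the first dotted version number, e.g. 'git version 2.43.0' -> (2, 43, 0)."""
    match = re.search(r"(\d+(?:\.\d+)+)", text)
    return tuple(int(part) for part in match.group(1).split(".")) if match else ()


def check_tools() -> list[str]:
    """Return a list of problems; an empty list means the environment meets the requirements."""
    problems = []
    for tool, (cmd, minimum) in REQUIREMENTS.items():
        if shutil.which(cmd[0]) is None:
            problems.append(f"{tool}: not found on PATH")
            continue
        result = subprocess.run(cmd, capture_output=True, text=True)
        output = result.stdout + result.stderr  # Some tools print versions to stderr.
        if parse_version(output) < minimum:
            wanted = ".".join(map(str, minimum))
            problems.append(f"{tool}: need >= {wanted}, found {output.strip()!r}")
    return problems


if __name__ == "__main__":
    print("\n".join(check_tools()) or "All required tools found.")
```

In CI, you'd typically exit with a nonzero status when the list isn't empty so the job fails visibly.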

Workstation replacement and targeting Windows

If you're either targeting Windows or want to do full workstation virtualization (client tools and host OS settings in addition to project-specific settings), VMs usually provide the best functionality. These environments can be useful for anything from Windows client development to Windows service development, or managing and maintaining .NET Framework web applications.

| Approach | Examples |
|---|---|
| Use cloud-hosted VMs | Microsoft Dev Box is a full Windows workstation virtualization option with built-in integration to desktop management software. |
| Use local virtual machines | HashiCorp Vagrant is a good option, and you can use HashiCorp Packer to build VM images for both it and Dev Box. |

Workspace virtualization and targeting Linux

If you're targeting Linux, consider a workspace virtualization option. These options focus less on replacing your developer desktop and more on project or application specific workspaces.

| Approach | Examples |
|---|---|
| Use cloud-hosted containers | GitHub Codespaces is a cloud-based environment for Dev Containers that supports integrating with VS Code, JetBrains’ IntelliJ, and terminal-based tools. If this or a similar service doesn't meet your needs, you can use VS Code's SSH or remote tunnels support with Dev Containers on remote Linux VMs. The tunnel-based option works not only with the desktop client but also with the web-based vscode.dev. |
| Use local containers | If you prefer a local Dev Containers option instead of or in addition to a cloud-hosted one, Dev Containers have solid support in VS Code, along with support in IntelliJ and other tools and services. |
| Use cloud-hosted VMs | If you find containers too limiting, SSH support in tools like VS Code or JetBrains tools like IntelliJ lets you connect directly to Linux VMs that you manage yourself. VS Code's tunnel-based option works here as well. |
| Use the Windows Subsystem for Linux | If your developers are exclusively on Windows, Windows Subsystem for Linux (WSL) is a great way for developers to target Linux locally. You can export a WSL distribution for your team and share it with everything set up. For a cloud option, cloud workstation services like Microsoft Dev Box can also take advantage of WSL to target Linux development. |

Create start right application templates that include stay right configuration

The great thing about the everything as code pattern is that it can keep developers on the paved paths that you established from the beginning. If this is a challenge for your organization, application templates can quickly become a critical way to reuse building blocks to drive consistency, promote standardization, and codify your organization’s best practices.

To start, you can use something as simple as a GitHub template repository, but if your organization follows a monorepo pattern, this might be less effective. You might also want to create templates that help set up something that isn't directly related to an application source tree. In these cases, you can use a templating engine instead, like cookiecutter, Yeoman, or the Azure Developer CLI (azd), which, in addition to templating and simplified CI/CD setup, also provides a convenient set of developer commands. The Azure Developer CLI also integrates with Azure Deployment Environments to provide improved security, integrated IaC, environment tracking, separation of concerns, and simplified CD setup.

Once you have a set of templates, dev leads can use these command-line tools or other integrated user experiences to scaffold content for their applications. However, because developers might not have permission to create repositories or other content from your templates, this is also another opportunity to use manually triggered, parameterized workflows or pipelines. You can set up inputs so that your CI/CD system creates anything from a repository to infrastructure on their behalf.
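To show what a parameterized scaffolding step does under the hood, here's a sketch that uses Python's `string.Template` to render a tiny template from the inputs a dev lead, or a manually triggered workflow, supplies. It's a stand-in for engines like cookiecutter, Yeoman, or azd templates, which have their own formats; the file names, the `contoso` organization, and the `app_name`/`team` variables are hypothetical.

```python
import string
import tempfile
from pathlib import Path

# Hypothetical template: relative path -> file content with $placeholders.
TEMPLATE = {
    "README.md": "# $app_name\n\nOwned by $team.\n",
    ".github/CODEOWNERS": "* @contoso/$team\n",
}


def render(template: dict[str, str], inputs: dict[str, str], dest: Path) -> list[Path]:
    """Write each templated file under dest, failing fast if an input is missing."""
    written = []
    for rel_path, content in template.items():
        target = dest / string.Template(rel_path).substitute(inputs)
        target.parent.mkdir(parents=True, exist_ok=True)
        # substitute() raises KeyError for any placeholder without a matching input.
        target.write_text(string.Template(content).substitute(inputs))
        written.append(target)
    return written


with tempfile.TemporaryDirectory() as tmp:
    files = render(TEMPLATE, {"app_name": "payments-api", "team": "payments"}, Path(tmp))
    codeowners = (Path(tmp) / ".github" / "CODEOWNERS").read_text()
print(codeowners)
```

Failing on missing inputs, rather than silently leaving placeholders behind, is the behavior you usually want when a workflow runs the scaffolding unattended.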

Staying right and getting right

However, to help scale, these application templates should reference centralized building blocks where possible (for example, IaC templates or even CI/CD workflows and pipelines). In fact, treating these centralized building blocks as their own form of start right templates could be an effective strategy to resolve some of the problems you've identified.

Each of these individual templates can be applied not only to new applications but also to existing ones that you intend to update as part of a get right campaign to roll out updated or improved guidelines. Even better, this centralization helps both new and existing applications stay right, allowing you to evolve or expand your best practices over time.
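For example, if your application templates call centralized reusable workflows, a get right campaign can start by finding which repositories still reference an older version. This sketch scans workflow text for `uses: owner/repo/.github/workflows/file.yml@ref` references, the syntax GitHub Actions uses to call reusable workflows; the `contoso/platform-workflows` repository and the `v3` target version are hypothetical.

```python
import re

CENTRAL_REPO = "contoso/platform-workflows"  # Hypothetical central workflows repository.
CURRENT_REF = "v3"                            # Hypothetical version everyone should be on.

USES_RE = re.compile(r"uses:\s*([\w.-]+/[\w.-]+)/\.github/workflows/([\w.-]+)@([\w./-]+)")


def outdated_references(workflow_text: str) -> list[tuple[str, str]]:
    """Return (workflow file, ref) pairs that call the central repo at a non-current ref."""
    return [
        (file, ref)
        for repo, file, ref in USES_RE.findall(workflow_text)
        if repo == CENTRAL_REPO and ref != CURRENT_REF
    ]


app_workflow = """
jobs:
  build:
    uses: contoso/platform-workflows/.github/workflows/build.yml@v2
  deploy:
    uses: contoso/platform-workflows/.github/workflows/deploy.yml@v3
  scan:
    uses: other-org/tools/.github/workflows/scan.yml@main
"""
print(outdated_references(app_workflow))
```

Run across all repositories, a report like this gives the campaign a concrete list of teams to help, rather than a broad mandate.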

Template contents

We recommend considering the following areas when creating templates.

| Area | Details |
|---|---|
| Sufficient sample source code to drive app patterns, SDKs, and tool use | Include code and configuration to steer developers towards recommended languages, app models and services, APIs, SDKs, and architectural patterns. Be sure to include code for distributed tracing, logging, and observability using your tools of choice. |
| Build and deployment scripts | Provide developers with a common way to trigger a build and a local / sandbox deployment. Include in-IDE/editor debug configurations for your tools of choice. This is an important way to avoid maintenance headaches and keep local builds from drifting out of sync with CI/CD. If your templating engine is opinionated like the Azure Developer CLI, there might already be commands you can just use. |
| Configuration for CI/CD | Provide workflows / pipelines for building and deploying applications based on your recommendations. Take advantage of centralized, reusable, or templated workflows / pipelines to help keep them up to date. In fact, these reusable workflows / pipelines can be start right templates of their own. Be sure to consider an option to manually trigger these workflows. |
| Infrastructure as code assets | Provide recommended IaC configurations, including references to centrally managed modules or catalog items, to ensure that any infrastructure setup follows best practices from the get-go. These references can also help teams stay right as time goes on. Combined with workflows / pipelines, you can also include IaC or everything as code (EaC) to provision just about anything. |
| Security and policy as code assets | The DevSecOps movement moved security configuration into code, which is great for templates. Some policy as code artifacts can also be applied at the application level. Include everything from files like CODEOWNERS to scanning configuration like dependabot.yaml in GitHub Advanced Security. Provide scheduled workflows / pipeline runs for scans using something like Defender for Cloud, along with environment test runs. This is important for supply chain security, and be sure to factor in container images in addition to application packages and code. These steps help development teams stay right. |
| Observability, monitoring, and logging | Part of enabling self-service is providing easy visibility into applications once deployed. Beyond runtime infrastructure, be sure to include setup for observability and monitoring. In most cases, there's an IaC aspect to setup (for example, agent deployment, instrumentation), while in others it might be another type of config-as-code artifact (for example, monitoring dashboards for Azure Application Insights). Finally, be sure to include sample code for distributed tracing, logging, and observability using your tools of choice. |
| Coding environment setup | Include configuration files for coding linters, formatters, editors, and IDEs. Include setup scripts along with workspace or workstation virtualization files like devcontainer.json, devbox.yaml, developer-focused Dockerfiles, Docker Compose files, or Vagrantfiles. |
| Test configuration | Provide configuration files for both unit and more in-depth testing using your preferred services, like Microsoft Playwright Testing for UI or Azure App Testing. |
| Collaboration tool setup | If your issue management and source control management system supports task / issue / PR templates as code, include these as well. In cases where more setup is required, you can optionally provide a workflow / pipeline that updates your systems using an available CLI or API. This can also allow you to set up other collaboration tools like Microsoft Teams or Slack. |
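One way to keep these areas from quietly dropping out of your templates is a CI check that verifies a scaffolded repository contains at least one representative file for each area. The area names and paths below are hypothetical examples loosely drawn from the table above; adjust them to your own conventions.

```python
import tempfile
from pathlib import Path

# Hypothetical mapping of template areas to files; any one listed path satisfies the area.
EXPECTED = {
    "CI/CD configuration": [".github/workflows/ci.yml", "azure-pipelines.yml"],
    "Security and policy as code": [".github/CODEOWNERS", "CODEOWNERS"],
    "Dependency scanning": [".github/dependabot.yml"],
    "Coding environment": [".devcontainer/devcontainer.json", ".devcontainer.json"],
    "Infrastructure as code": ["infra/main.bicep", "infra/main.tf"],
}


def missing_areas(repo: Path) -> list[str]:
    """Return the template areas that have none of their expected files present."""
    return [area for area, paths in EXPECTED.items()
            if not any((repo / p).is_file() for p in paths)]


# Simulate a freshly scaffolded repository that's missing a couple of areas.
with tempfile.TemporaryDirectory() as tmp:
    repo = Path(tmp)
    for rel in [".github/workflows/ci.yml", ".github/CODEOWNERS", "infra/main.bicep"]:
        (repo / rel).parent.mkdir(parents=True, exist_ok=True)
        (repo / rel).touch()
    gaps = missing_areas(repo)
print(gaps)  # ['Dependency scanning', 'Coding environment']
```

Checking for presence only is deliberately shallow; the contents of each file are better validated by the tools that consume them.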