
The ways you can consume data from Microsoft dataflows depend on several factors, such as the storage type and the type of dataflow. In this article, you learn how to choose the right dataflow for your needs.

Type of dataflow

You can create several types of dataflows: Power BI dataflows, standard dataflows, and analytical dataflows. To learn more about the differences and how to select the right type based on your needs, go to Understanding the differences between dataflow types.

Storage type

A dataflow can write to several types of output destinations. In short, use the Dataflows connector unless your destination is a Dataverse table. In that case, use the Dataverse/CDS connector.
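The connector guidance in this article can be summarized as a small lookup. The following Python sketch is illustrative only. The function name `choose_connector` and the destination labels `"dataverse"` and `"adls"` are hypothetical, and the returned strings are simply the connector names used in this article. They aren't identifiers from any Microsoft API.

```python
def choose_connector(destination: str) -> str:
    """Return the connector this article recommends for a dataflow's output.

    `destination` is a hypothetical label: "dataverse" for a Dataverse table,
    "adls" for Azure Data Lake Storage (Microsoft-managed or your own).
    """
    if destination == "dataverse":
        # Standard dataflows write to Dataverse tables; read them with this connector.
        return "Dataverse/CDS connector"
    if destination == "adls":
        # Data lake output, including a connected data lake, is read through Dataflows.
        return "Dataflows connector"
    raise ValueError(f"unknown destination: {destination!r}")


print(choose_connector("dataverse"))  # Dataverse/CDS connector
print(choose_connector("adls"))       # Dataflows connector
```

If the Dataflows connector doesn't meet your needs for data lake output, the article's fallback (the Azure Data Lake connector) is a judgment call, so it's deliberately left out of this lookup.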

Azure Data Lake Storage

Azure Data Lake Storage is available in Power BI dataflows and Power Apps analytical dataflows. By default, data is stored in a Microsoft-managed data lake. However, you can also connect your own data lake to the dataflow environment. The following articles describe how to connect the data lake to your environment:

- Connect Data Lake Gen 2 storage to a Power BI Workspace

- Connect Data Lake Gen 2 storage to a Power Apps Environment

After you connect your data lake, you should still use the Dataflows connector. If this connector doesn't meet your needs, consider using the Azure Data Lake connector instead.

Dataverse

A standard dataflow writes its output data to a Dataverse table. Dataverse lets you securely store and manage data that's used by business applications. After you load data into the Dataverse table, you can consume the data by using the Dataverse connector.

Dataflows can get data from other dataflows

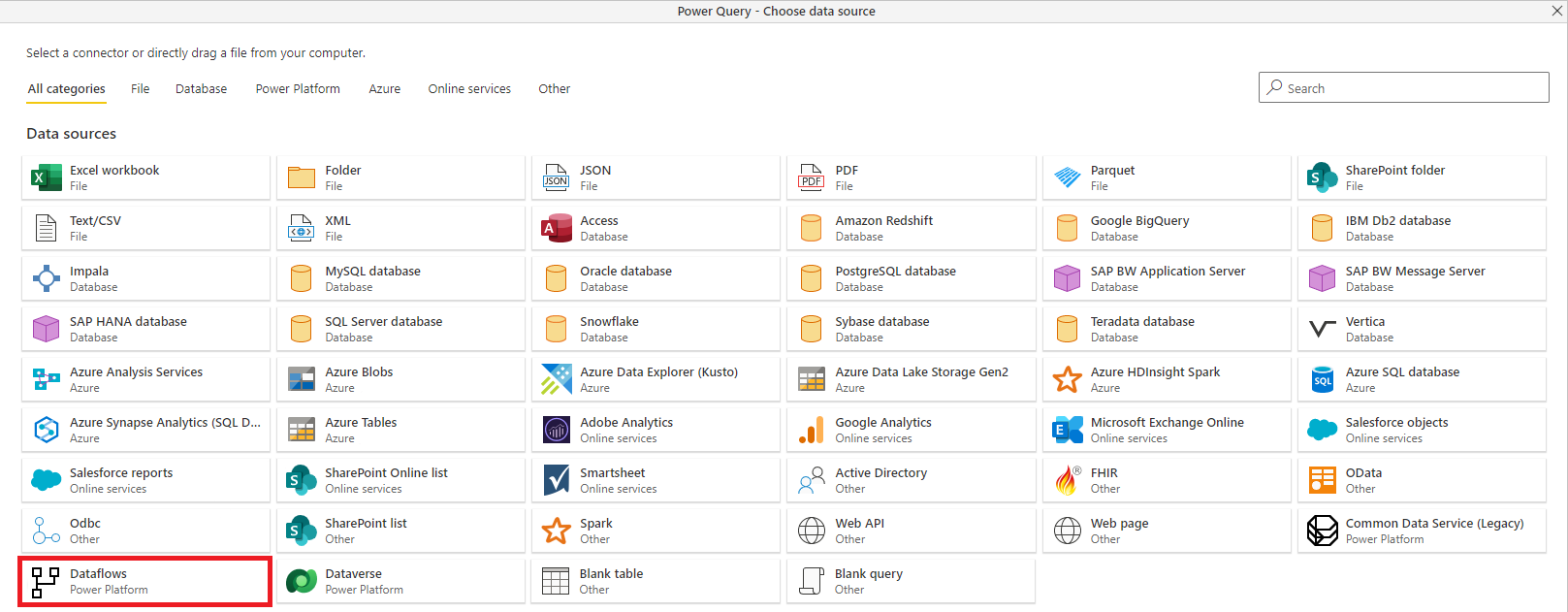

If you'd like to reuse data created by one dataflow in another dataflow, you can do so by using the Dataflows connector in the Power Query editor when you create the new dataflow.

When you get data from the output of another dataflow, a linked table is created. Linked tables provide a way to make data created in an upstream dataflow available in a downstream dataflow without copying the data to the downstream dataflow. Because linked tables are just pointers to tables created in other dataflows, they're kept up to date by the refresh logic of the upstream dataflow. If both dataflows reside in the same workspace or environment, they're refreshed together so that the data in both dataflows stays up to date. More information: Link tables between dataflows
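The pointer behavior of linked tables can be pictured with a small Python model. This is a conceptual sketch, not how dataflows are implemented. The classes `Table` and `LinkedTable` and the function `refresh` are hypothetical. The point is that the linked table stores a reference to the upstream table instead of a copy of its rows, so an upstream refresh is immediately visible downstream.

```python
from dataclasses import dataclass, field


@dataclass
class Table:
    """Hypothetical stand-in for an upstream dataflow table that owns its rows."""
    name: str
    rows: list = field(default_factory=list)


@dataclass
class LinkedTable:
    """Hypothetical linked table: holds a reference to the upstream table, not a copy."""
    source: Table

    @property
    def rows(self) -> list:
        # Every read goes to the upstream table, so no data is duplicated downstream.
        return self.source.rows


def refresh(table: Table, new_rows: list) -> None:
    """Simulate the upstream dataflow's refresh replacing its output rows."""
    table.rows = list(new_rows)


upstream = Table("Customers", [{"id": 1}])
downstream = LinkedTable(upstream)

refresh(upstream, [{"id": 1}, {"id": 2}])
print(len(downstream.rows))  # 2: the linked table sees the refreshed data
```

In this model, the downstream side never needs its own refresh to see new data. That mirrors the article's statement that linked tables are kept up to date by the upstream dataflow's refresh logic.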

Separating data transformation from data consumption

When you use the output of a dataflow in other dataflows or datasets, you can create an abstraction between the data transformation layer and the rest of the data model. This abstraction is important because it creates a multi-role architecture in which Power Query authors can focus on building the data transformations and data modelers can focus on data modeling.

Frequently asked questions

My dataflow table doesn't show up in the dataflow connector in Power BI

You're probably using a Dataverse table as the destination for your standard dataflow. Use the Dataverse/CDS connector instead or consider switching to an analytical dataflow.

There's a difference in the data when I remove duplicates in dataflows. How can I resolve this?

The data can differ between design time and refresh time because we don't guarantee which instance of a duplicate is kept during refresh. For information that will help you avoid inconsistencies in your data, go to Working with duplicate values.
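To see why the kept row can change, consider the following Python sketch. It isn't Power Query and doesn't reproduce how dataflows refresh. It only illustrates the general behavior of a keep-first duplicate removal, where the surviving row depends on the order in which rows arrive. The function names, column names, and sample data are hypothetical. Sorting on an explicit column before removing duplicates, as `keep_latest` does, makes the result independent of input order, assuming the sort column has no ties within a key.

```python
def remove_duplicates(rows, key):
    """Keep the first row seen for each value of `key` (keep-first dedup)."""
    seen = set()
    result = []
    for row in rows:
        if row[key] not in seen:
            seen.add(row[key])
            result.append(row)
    return result


def keep_latest(rows, key, order_by):
    """Keep the row with the largest `order_by` value for each key.

    Assumes `order_by` values are unique within each key; ties would fall
    back to input order because Python's sort is stable.
    """
    ordered = sorted(rows, key=lambda r: r[order_by], reverse=True)
    return remove_duplicates(ordered, key)


rows = [
    {"email": "a@contoso.com", "modified": "2024-01-05"},
    {"email": "a@contoso.com", "modified": "2024-03-10"},
]

# The same data arriving in a different order keeps a different row.
print(remove_duplicates(rows, "email")[0]["modified"])        # 2024-01-05
print(remove_duplicates(rows[::-1], "email")[0]["modified"])  # 2024-03-10

# With an explicit ordering, both inputs keep the same row.
print(keep_latest(rows, "email", "modified")[0]["modified"])        # 2024-03-10
print(keep_latest(rows[::-1], "email", "modified")[0]["modified"])  # 2024-03-10
```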

Next steps

The following articles provide more details about related topics.