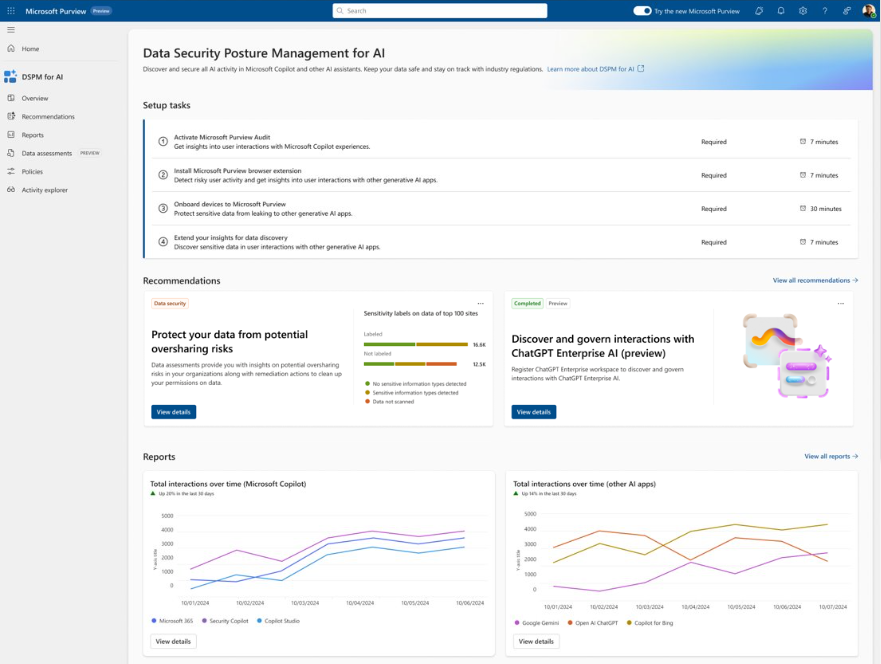

Microsoft Purview Data Security Posture Management (DSPM) for AI from the Microsoft Purview portal provides a central management location to help you quickly secure data for AI apps and proactively monitor AI use. These apps include Copilots, agents, and other AI apps that use third-party large language models (LLMs).

Data Security Posture Management for AI offers a set of capabilities so you can safely adopt AI without having to choose between productivity and protection:

- Insights and analytics into AI activity in your organization

- Ready-to-use policies to protect data and prevent data loss in AI prompts

- Data risk assessments to identify, remediate, and monitor potential oversharing of data

- Compliance controls to apply optimal data handling and storing policies

For a list of supported third-party AI sites, such as those used for Gemini and ChatGPT, see Supported AI sites by Microsoft Purview for data security and compliance protections.

How to use Data Security Posture Management for AI

To help you more quickly gain insights into AI usage and protect your data, Data Security Posture Management for AI provides some recommended preconfigured policies that you can activate with a single click. Allow at least 24 hours for these new policies to collect data and display the results in Data Security Posture Management for AI, or to reflect any changes that you make to the default settings.

Data risk assessments need no activation: Data Security Posture Management for AI automatically runs a weekly data risk assessment for the top 100 SharePoint sites based on usage in your organization. You can supplement this with your own custom data risk assessments, currently in preview. These assessments are designed specifically to help you identify, remediate, and monitor potential oversharing of data, so you can be more confident about your organization using Microsoft 365 Copilot and agents, and Microsoft 365 Copilot Chat.

To get started with Data Security Posture Management for AI, use the Microsoft Purview portal. You need an account that has appropriate permissions for compliance management, such as an account that's a member of the Microsoft Entra Compliance Administrator group role.

Tip

The following steps provide a recommended walkthrough to use all the available capabilities from DSPM for AI. If you're interested in using DSPM for AI just for a specific AI app, use the links in the Next steps section.

Sign in to the Microsoft Purview portal > Solutions > DSPM for AI.

From Overview, review the Get started section to learn more about Data Security Posture Management for AI, and the immediate actions you can take. Select each one to display the flyout pane to learn more, take actions, and verify your current status.

| Action | More information |
| --- | --- |
| Turn on Microsoft Purview Audit | Auditing is on by default for new tenants, so you might already meet this prerequisite. If you do, and users are already assigned licenses for Microsoft 365 Copilot, you start to see insights about Copilot and agents activities from the Reports section further down the page. |
| Install Microsoft Purview browser extension | A prerequisite for third-party AI sites. |
| Onboard devices to Microsoft Purview | Also a prerequisite for third-party AI sites. |
| Extend your insights for data discovery | One-click policies for collecting information about users visiting third-party generative AI sites and sending sensitive information to them. The option is the same as the Extend your insights button in the AI data analytics section further down the page. |

For more information about the prerequisites, see Prerequisites for Data Security Posture Management for AI.

For more information about the preconfigured policies that you can activate, see One-click policies from Data Security Posture Management for AI.

Then, review the Recommendations section and decide whether to implement any options that are relevant to your tenant. View each recommendation to understand how it's relevant to your data and to learn more.

These options include running a data risk assessment across SharePoint sites, creating sensitivity labels and policies to protect your data, and creating some default policies to immediately help you detect and protect sensitive data sent to generative AI sites. Examples of recommendations:

- Protect your data from potential oversharing risks by viewing the results of your default data risk assessment to identify and fix issues to help you more confidently deploy Microsoft 365 Copilot.

- Protect your data with sensitivity labels by creating a set of default sensitivity labels if you don't yet have sensitivity labels, and policies to publish them and automatically label documents and emails.

- Protect sensitive data referenced in Microsoft 365 Copilot and agents by creating a DLP policy that selects sensitivity labels to prevent Microsoft 365 Copilot and agents summarizing the labeled data. For more information, see Learn about the Microsoft 365 Copilot policy location.

- Detect risky interactions in AI apps to calculate user risk by detecting risky prompts and responses in Microsoft 365 Copilot, agents, and other generative AI apps. For more information, see Risky AI usage (preview).

- Discover and govern interactions with ChatGPT Enterprise AI by registering ChatGPT Enterprise workspaces. You can then identify potential data exposure risks by detecting sensitive information that's shared with ChatGPT Enterprise.

- Get guided assistance to AI regulations, which uses control-mapping regulatory templates from Compliance Manager.

- Secure interactions for Microsoft Copilot experiences, which captures prompts and responses for Copilot in Fabric, and Security Copilot. Without a policy similar to the one that's created with this recommendation, auditing events are captured for Copilot in Fabric and Security Copilot, but not the prompts and responses.

- Detect sensitive info shared with AI via network, which uses network data security to detect sensitive info types shared with AI apps in browsers, applications, APIs, add-ins, and more, using a Secure Access Service Edge or Security Service Edge integration.

- Secure interactions from enterprise apps, which captures prompts and responses for regulatory compliance from Entra-registered AI apps, ChatGPT Enterprise Connector, and applications built on Azure AI services.

- Secure data in Azure AI apps and agents, which has setup instructions to capture prompts and responses for AI apps that use one or more Azure AI subscriptions.

You can use the View all recommendations link, or Recommendations from the navigation pane to see all the available recommendations for your tenant, and their status. When a recommendation is complete or dismissed, you no longer see it on the Overview page.

Use the Reports section or the Reports page from the navigation pane to view the results of the default policies created. You need to wait at least a day for the reports to be populated. Select the categories of Copilot experiences and agents, Enterprise AI apps, and Other AI apps to help you identify the specific generative AI app.

Use the Policies page to monitor the status of the default one-click policies created and AI-related policies from other Microsoft Purview solutions. To edit the policies, use the corresponding management solution in the portal. For example, for DSPM for AI - Unethical behavior in Copilot, you can review and remediate the matches from the Communication Compliance solution.

Note

If you have the older retention policies for the location Teams chats and Copilot interactions, they aren't included on this page. Learn how to create separate retention policies for Microsoft Copilot Experiences that will be included on this Policies page.

Select Apps and agents to view a dashboard of AI apps and their agents used across your organization so you can identify and manage any potential data security risks. For each agent, view details about sensitive data that they accessed and how they are protected by policies from Microsoft Purview.

Select Activity explorer to see details of the data collected from your policies.

This more detailed information includes activity type and user, date and time, AI app category and app, app accessed in, any sensitive information types, files referenced, and sensitive files referenced.

Examples of activities include AI interaction, Sensitive info types, and AI website visit. Prompts and responses are included in the AI interaction events when you have the right permissions. For more information about the events, see Activity explorer events.

Similar to the report categories, workloads include Copilot experiences and agents, Enterprise AI apps, and Other AI Apps. Examples of an app ID and app host for Copilot experiences and agents include Microsoft 365 Copilots and Copilot Studio. Enterprise AI apps includes ChatGPT Enterprise. Other AI Apps include the apps from the supported third-party AI sites, such as those used for Gemini and ChatGPT.
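As an illustration only, the details listed for Activity explorer can be modeled as a simple record and filtered by category. The sketch below is hypothetical: the `AIActivity` class, its field names, and the `sensitive_interactions` helper are assumptions made for this example, not a Microsoft Purview API or export schema.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class AIActivity:
    """Hypothetical record mirroring the details shown in Activity explorer."""
    activity_type: str          # for example "AI interaction" or "AI website visit"
    user: str
    timestamp: datetime
    app_category: str           # for example "Other AI apps"
    app: str
    sensitive_info_types: list[str] = field(default_factory=list)
    files_referenced: list[str] = field(default_factory=list)


def sensitive_interactions(events, category):
    """Return events in the given category that contain any sensitive info types."""
    return [
        e for e in events
        if e.app_category == category and e.sensitive_info_types
    ]
```

A filter like this is one way to narrow a large set of events to the ones that matter for a specific category, such as Other AI apps.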

Select Data risk assessments to identify and fix potential data oversharing risks in your organization.

A default data risk assessment automatically runs weekly for the top 100 SharePoint sites based on usage in your organization, and you might have already run a custom assessment as one of the recommendations. However, come back regularly to this option to check the latest weekly results of the default assessment and run custom assessments when you want to check for different users or specific sites. After a custom assessment has run, wait at least 48 hours to see the results, which don't update again. To see any changes in the results, you need to run a new assessment.

Because of the power and speed with which AI can proactively surface content that might be obsolete, over-permissioned, or lacking governance controls, generative AI amplifies the problem of oversharing data. Use data risk assessments to both identify and remediate issues.

The Default assessment displays at the top of the page with a quick summary, such as the total number of items found, the number of items with sensitive data detected, and the number of links sharing data with anyone. The first time the default assessment is created, there's a 4-day delay before results are displayed.

After you select View details for more in-depth information, from the list, select each site to access the flyout pane that has tabs for Overview, Identify, Protect, and Monitor. Use the information on each tab to learn more, and take recommended actions. For example:

Use the Identify tab to identify how much data has been scanned or not scanned for sensitive information types, with an option to initiate an on-demand classification scan as needed.

Use the Protect tab to select options to remediate oversharing, which include:

- Restrict access by label: Use Microsoft Purview Data Loss Prevention to create a DLP policy that prevents Microsoft 365 Copilot and agents from summarizing data when it has sensitivity labels that you select. For more information about how this works and supported scenarios, see Learn about the Microsoft 365 Copilot policy location.

- Restrict all items: Use SharePoint Restricted Content Discoverability to list the SharePoint sites to be exempt from Microsoft 365 Copilot. For more information, see Restricted Content Discoverability for SharePoint Sites.

- Create an auto-labeling policy: When sensitive information is found for unlabeled files, use Microsoft Purview Information Protection to create an auto-labeling policy to automatically apply a sensitivity label for sensitive data. For more information about how to create this policy, see How to configure auto-labeling policies for SharePoint, OneDrive, and Exchange.

- Create retention policies: When content hasn't been accessed for at least 3 years, use Microsoft Purview Data Lifecycle Management to automatically delete it. For more information about how to create the retention policy, see Create and configure retention policies.

Use the Monitor tab to view the number of items in the site shared with anyone, shared with everyone in the organization, shared with specific people, and shared externally. Select Start a SharePoint site access review for information about how to use the SharePoint data access governance reports.

Example screenshot, after a data risk assessment has been run, showing the Protect tab with resolutions for problems found:

To create your own custom data risk assessment, select Create assessment to identify potential oversharing issues for all or selected users, the data sources to scan (currently supported for SharePoint only), and run the assessment.

If you select all sites, you don't need to be a member of the sites, but you must be a member to select specific sites.

This data risk assessment is created in the Custom assessments category. Wait for the status of your assessment to display Scan completed, and select it to view details. To rerun a custom data risk assessment, and to see results after the 30-day expiration, use the duplicate option to create a new assessment, starting with the same selections.
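To plan when to check back on a custom assessment, the 30-day expiration above and the 48-hour wait for results mentioned earlier can be turned into dates. This is a minimal sketch: the function name is hypothetical, and it assumes both periods are measured from the time the assessment is run, which this article doesn't state explicitly.

```python
from datetime import datetime, timedelta

# Values taken from this article; the starting point for each is an assumption.
RESULTS_WAIT = timedelta(hours=48)
EXPIRATION = timedelta(days=30)


def custom_assessment_timeline(run_at: datetime) -> dict:
    """Estimate when results should be visible and when the assessment expires."""
    return {
        "results_earliest": run_at + RESULTS_WAIT,
        "expires": run_at + EXPIRATION,
    }
```

For example, calling `custom_assessment_timeline(datetime.now())` gives a rough date for when to duplicate the assessment if you still need its results.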

Tip

Both the default and custom data risk assessments provide an Export option that lets you save and customize the data into a choice of file formats (Excel, .csv, JSON, TSV).
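After exporting to .csv, you can process the file with a script. The following sketch uses Python's standard `csv` module to list sites that have items shared with anyone. The column names `Site` and `SharedWithAnyone` are placeholders assumed for this example; check the header row of your own export and pass the actual names.

```python
import csv
import io


def sites_shared_with_anyone(csv_text, site_col="Site", links_col="SharedWithAnyone"):
    """Return site names whose 'shared with anyone' count is greater than zero.

    The default column names are hypothetical; adjust them to match the export.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        row[site_col]
        for row in reader
        if int(row[links_col] or 0) > 0  # treat an empty cell as zero
    ]
```

To use it with a saved file, read the file contents first, for example with `open(path, newline="", encoding="utf-8").read()`.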

Data risk assessments currently support a maximum of 200,000 items per location. The count of files reported might not be accurate when there are more than 100,000 files per location.
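The two thresholds above can be expressed as a quick check when you review the size of a location. This is an illustrative sketch only: the function name and return strings are hypothetical, and it assumes the item count and file count refer to the same number for a location.

```python
MAX_ITEMS_PER_LOCATION = 200_000    # supported maximum stated in this article
COUNT_ACCURACY_THRESHOLD = 100_000  # above this, reported counts might be inaccurate


def check_location_size(item_count: int) -> str:
    """Classify a location's size against the documented assessment limits."""
    if item_count > MAX_ITEMS_PER_LOCATION:
        return "exceeds supported maximum"
    if item_count > COUNT_ACCURACY_THRESHOLD:
        return "supported, but counts might be inaccurate"
    return "within limits"
```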

Next steps

Use the policies, tools, and insights from DSPM for AI in conjunction with additional protections and compliance capabilities from Microsoft Purview. If you're interested in a specific AI app, use the following links: