ALM workshop topics

In this unit, we'll look at the things to consider as you fill out and evaluate the application lifecycle management (ALM) workshop template. These topics are designed to help the solution architect evaluate the information collected during the workshop and produce findings and recommendations. It's important to remember that ALM isn't one-size-fits-all. The amount and sophistication of ALM must be decided on a project-by-project basis. A good starting point for understanding ALM with Dynamics 365 and Microsoft Power Platform is the Application lifecycle management with Microsoft Power Platform documentation.

General strategy

It's important to understand the development methodology the project team is using to produce the solution. It's also important to understand the number of teams involved, especially the teams making customizations. The ALM strategy must be shaped to support these teams and allow them to work effectively.

Key things to look for

A lack of any development methodology. This isn't an area of the project where the team should "just kind of go for it."

More traditional waterfall approaches aren't recommended for Microsoft Power Platform and Dynamics 365 projects. Most teams find some form of agile or iterative approach more effective for delivering a successful project.

Not having a clear understanding of who will make changes and how they'll coordinate is a risk.

Is there a tool in place to track work items including work item assignment?

Evolution of ALM

Not all customers fully automate their ALM process from the beginning. Some choose to take a crawl, walk, run approach.

Unmanaged in development

Crawl:

- Export/Import

- Export/Save to file

- System/Import

Walk:

- Multiple solutions without source control

- Introduce master data

- Introduce solution-based source control

Run:

- Introduce automation with package deployments

- Version control enabled through solution packager

Managed everywhere upstream

- Automated builds and instance management

- Automated source control with Azure DevOps

Environments

Environments should be created to support the ALM strategy being implemented. At a minimum, the project should generally have development, test, and production environments. These should be sandbox or production environment types, not trial or community environments. Also, consider having a hotfix environment for changes that must be applied to production after go-live.

If environments are created in different geographic regions, the teams should be aware that the environments will be updated at different times. For example, if development is in the Canada region and production is in the North America region, Canada might have features that North America doesn't have yet. When multiple geographic regions are used, more coordination around Microsoft updates and feature availability is required.

Each Microsoft Dataverse solution should have at least one development environment for the team members making customizations. With a source-centric model, multiple development environments are possible, but they require coordination to resolve merge conflicts as they occur. A single development environment is simpler to manage and needs only basic coordination, while multiple environments allow for greater developer isolation and team velocity. Proper access control should be put in place so that only the right team members have access to each environment. This also helps ensure that people aren't making changes outside their assigned development environment.

Key things to look for with environments

Have a reasonable number of environments been identified? At a minimum, there should be development, test, and production environments. Too many environments without a clear purpose can lead to complexity that is hard to manage.

Are they planning on storing unpacked solutions in source control?

Are trial environments being used? If so, they can expire, and customizations can be lost unexpectedly.

Is storage capacity available to support the environment plan?

Source control

It's also important to understand how customizations and other code assets will be promoted from development, through the different test environments, to production. A key part of this is deciding what will be considered the source of truth for customizations and code assets. In the past, projects were environment-centric: the development environment, for example, was the source of truth for customizations. In this model, customizations moved directly from development to test and on to production, transported as a solution exported from development.

If you're enhancing a deployment that was completed in the past, the original project may have used this model. New projects should use source control as the source of truth for all customizations and project code assets. For example, Azure DevOps Git repositories could be used to store everything. In this model, the project team checks their customizations into source control. The managed solution to be deployed is generated from source control and used to promote the customizations to test and finally to production. This approach makes the development environments disposable. Another benefit of the source-control-centric approach is that it keeps a detailed record of what has changed and supports a branching strategy. Branching is essential for servicing the production version while the next version is being built. Also, linking pull requests or commits to work items creates traceability, which improves the overall development process.

Key things to look for with source control

If they previously used an environment-centric approach rather than a source-control-centric one, do they have a plan to move to source control?

Have a reasonable number of environments been identified? At a minimum, they should have development, test, and production environments. Too many environments can lead to complexity that is hard to manage.

Are managed solutions being used for all environments other than development?

Solutions

When possible, all project assets that are solution-aware components should be managed in a Dataverse solution. Most projects focused on a single line of business or business process should use a single custom solution. The project team should associate a custom solution publisher that represents the company or product that they're building and not use the default publisher. Do not use multiple publishers unless there is a business case to do so.

If multiple solutions are used, each should serve a specific purpose. For example, a reusable component that the team plans to use multiple times might be put in a separate solution. Solutions can also be segmented by line of business or business process. These segmented solutions can share a common base, but each should be isolated to only its own customizations and should not depend on the others. However, this can quickly lead to more complexity and should be weighed against the benefits of having separate solutions.

Each solution should have its own development environment, keeping the solutions isolated from each other. When a solution depends on another solution, the managed version of the solution it depends on should be installed in the development environment. While the solution framework supports patch solutions, they aren't recommended when using a source-centric model.
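The dependency rule above can be sketched in Python. This is a minimal illustration, not a Dataverse API. It assumes the team keeps a hypothetical map of each solution's dependencies (the names ContosoCore, ContosoSales, and ContosoService are made up). It uses the standard library's graphlib to work out an install order and to list the managed solutions a given development environment needs installed first.

```python
from graphlib import CycleError, TopologicalSorter


def import_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return an install order in which every solution follows the solutions it depends on.

    `dependencies` maps each (hypothetical) solution name to the names it depends on.
    Raises ValueError when solutions depend on each other in a cycle.
    """
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as exc:
        # exc.args[1] holds the nodes that form the cycle
        raise ValueError(f"circular solution dependency: {exc.args[1]}") from exc


def managed_prerequisites(solution: str, dependencies: dict[str, set[str]]) -> list[str]:
    """Managed solutions to install, in order, in `solution`'s development environment."""
    needed: set[str] = set()
    stack = list(dependencies.get(solution, set()))
    while stack:  # walk direct and indirect dependencies
        dep = stack.pop()
        if dep not in needed:
            needed.add(dep)
            stack.extend(dependencies.get(dep, set()))
    return [name for name in import_order(dependencies) if name in needed]


# Hypothetical layout: two line-of-business solutions sharing a common base.
deps = {
    "ContosoCore": set(),
    "ContosoSales": {"ContosoCore"},
    "ContosoService": {"ContosoCore"},
}
print(import_order(deps))
print(managed_prerequisites("ContosoSales", deps))  # ['ContosoCore']
```

A cycle between two solutions is reported as an error. A cycle usually means the segmentation has broken down and the two solutions aren't really isolated.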

Key things to look for with solutions

Has a custom publisher with an appropriate custom prefix been created, so that the team isn't using the default publisher?

Does the proposed solution architecture use a reasonable number of solutions, each with a clear purpose?

Does the environment plan support the number of solutions used in the project and ensure proper solution isolation?

Build plan

In this section, we look at how the team plans to manage the building of solutions using multiple environments. Solutions should be composed in the development environment where their components are modified. The ALM plan should ensure that changes are only made in the development environment. The development environment should be the only place where unmanaged solutions are used; all other environments should have managed solutions installed. Each development environment should only have a single unmanaged solution.

While solutions can be exported and imported manually, ideally the build process includes some automation to make it repeatable.
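One way to check the build plan rules is to audit an inventory of environments. The sketch below assumes a hypothetical inventory, gathered however the team prefers, that lists each environment's purpose and the custom solutions installed in it. Microsoft-provided system solutions are left out. The sketch flags development environments with more than one unmanaged solution, and it flags any unmanaged solution outside development.

```python
from dataclasses import dataclass, field


@dataclass
class Environment:
    name: str
    # "development" for any environment where changes are made; anything else is upstream
    purpose: str
    # (solution name, is_managed) for each custom solution; system solutions are excluded
    solutions: list[tuple[str, bool]] = field(default_factory=list)


def build_plan_findings(environments: list[Environment]) -> list[str]:
    """Flag environments that break the single-unmanaged-solution and managed-upstream rules."""
    findings = []
    for env in environments:
        unmanaged = [name for name, is_managed in env.solutions if not is_managed]
        if env.purpose == "development":
            if len(unmanaged) > 1:
                findings.append(f"{env.name}: {len(unmanaged)} unmanaged solutions, expected 1")
        elif unmanaged:
            findings.append(f"{env.name}: unmanaged outside development: {', '.join(unmanaged)}")
    return findings


# Hypothetical inventory
inventory = [
    Environment("contoso-dev", "development", [("ContosoCore", True), ("ContosoSales", False)]),
    Environment("contoso-test", "test", [("ContosoCore", True), ("ContosoSales", False)]),
    Environment("contoso-prod", "production", [("ContosoCore", True), ("ContosoSales", True)]),
]
for finding in build_plan_findings(inventory):
    print(finding)
```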

Key things to look for with build plans

Do development environments only contain a single unmanaged solution?

Are managed solutions used in all environments other than development?

Have access restrictions or other mechanisms been put in place to ensure modifications only take place in development?

Not all components are solution-aware, so there must be a plan to handle the components that aren't (for example, Power BI visualizations).

Solution packager

Solution packager should be used to take the unmanaged solution from development and prepare it for storing in a source control repository. Solution packager takes the single solution file and breaks it into individual files representing each solution component. This process is referred to as unpacking the solution. The output from solution packager is then checked in to the source control repository. This checked-in version now represents the source of truth for the project.

Solution packager can also pack the folder from source control, re-creating the single solution file. This is how the files that are checked into source control are then used to create the solutions that will be imported into the other environments.
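The unpack and pack steps can be scripted. The sketch below assumes the Microsoft Power Platform CLI (pac) is installed. Its pac solution unpack and pac solution pack commands wrap solution packager. The file and folder names are hypothetical. The package type defaults are assumptions you should check against the CLI documentation, because packing a managed solution from source generally requires that the folder was unpacked from both the unmanaged and managed exports.

```python
import subprocess
from pathlib import Path


def unpack_command(zip_file: Path, folder: Path, package_type: str = "Both") -> list[str]:
    """Break an exported solution .zip into one file per component for source control."""
    return ["pac", "solution", "unpack",
            "--zipfile", str(zip_file),
            "--folder", str(folder),
            "--packagetype", package_type]


def pack_command(folder: Path, zip_file: Path, package_type: str = "Managed") -> list[str]:
    """Re-create a single solution .zip from the checked-in source folder."""
    return ["pac", "solution", "pack",
            "--zipfile", str(zip_file),
            "--folder", str(folder),
            "--packagetype", package_type]


def run(command: list[str]) -> None:
    # check=True raises CalledProcessError if the CLI exits with a non-zero code
    subprocess.run(command, check=True)


# Hypothetical paths: unpack after exporting from development, pack for test/production.
source = Path("src") / "ContosoSales"
print(unpack_command(Path("exports") / "ContosoSales.zip", source))
print(pack_command(source, Path("out") / "ContosoSales_managed.zip"))
```

The command builders are kept separate from `run` so that a pipeline can log or review the exact commands before executing them.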

Key things to look for with solution packager

Are solution packager and a source control repository part of the plan?

Is the solution deployed to test and production built from the source control repository?

Solution checker

As Power Automate and Power Apps are used to customize a Dynamics 365 deployment, each offers its own inline app checker that helps with real-time issue resolution. Solution checker, however, can look at the whole solution, perform static analysis, and produce a detailed list of any issues found. (More details can be found in Use solution checker to validate your model-driven apps in Power Apps.)

Solution checker should be run regularly on any unmanaged solution you're building in your development environments. It can analyze Power Apps and Power Automate flows, as well as code assets that developers create, such as plug-ins. The project team can run solution checker manually from the maker portal by selecting the solution and then running the checker.

Solution checker can also be automated to run as part of a build process, using either PowerShell or Azure DevOps pipeline tasks. When it's automated, solution checker becomes an integral part of the build process and can even be set up to stop the build if too many errors are found. Simply running solution checker isn't enough for the team to be successful. The team must also have a plan in place to regularly evaluate and resolve the problems it identifies.
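The "stop the build if too many errors" rule can be expressed as a small gate. This is a sketch under stated assumptions. It assumes the pipeline has already collected the severity of each issue solution checker reported. How the results are retrieved, and the thresholds shown, are hypothetical choices for the team to make.

```python
from collections import Counter


def checker_gate(issue_severities: list[str], max_allowed: dict[str, int]) -> tuple[bool, list[str]]:
    """Decide whether a build may continue, given the severity of each reported issue.

    `max_allowed` maps a severity to the most issues of that severity the team tolerates;
    severities that aren't listed aren't gated.
    """
    counts = Counter(s.lower() for s in issue_severities)
    reasons = []
    for severity, limit in max_allowed.items():
        found = counts[severity.lower()]
        if found > limit:
            reasons.append(f"{severity}: {found} found, {limit} allowed")
    return (not reasons, reasons)


# Hypothetical results and thresholds
passed, reasons = checker_gate(["High", "Medium", "Critical"], {"Critical": 0, "High": 5})
print(passed, reasons)
```

In a pipeline step, the script could end with `sys.exit(0 if passed else 1)`, so that a failing gate stops the build while the printed reasons explain why.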

Key things to look for with solution checkers

Is there a plan in place for running solution checker as part of the build process?

Are the results of solution checker regularly reviewed and incorporated into the process?

Has solution checker been integrated into the build automation, so that it runs without manual effort?

Automating deployment

Another important part of the build plan is deciding what automation can be used to make the process repeatable. Many tools, both from Microsoft and from the community, can be used to automate the build process. Azure DevOps with the Microsoft Power Platform tasks is one option for automating solution management and deployment tasks.

For example, a team could have an Azure DevOps pipeline that exports the solution from development every day at 7:00 PM and checks it into a Git repository. The same pipeline can also run solution checker. When the team comes into work in the morning, they know immediately whether the prior night's build identified any issues.

The pipeline could also import the solution into a clean build environment to detect any dependencies that were unintentionally introduced by that day's development. This ensures that what is checked into source control is a clean version, ready for deployment to other environments. Pipelines can also automate testing, so that it's just another step in the pipeline.
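The ordering of that nightly pipeline, and its "stop at the first failure" behavior, can be modeled in a few lines. The stage functions below are hypothetical stand-ins for real pipeline tasks. The point of the sketch is that a failed clean-environment import prevents later stages, such as automated tests, from running against a build that wasn't verified.

```python
from typing import Callable

Stage = tuple[str, Callable[[], bool]]


def run_nightly(stages: list[Stage]) -> list[tuple[str, bool]]:
    """Run stages in order and stop at the first one that reports failure."""
    results = []
    for name, step in stages:
        ok = step()
        results.append((name, ok))
        if not ok:
            break  # later stages would work on a broken or unverified build
    return results


# Hypothetical stand-ins for the real pipeline tasks
def succeed() -> bool:
    return True


def clean_import_fails() -> bool:
    return False  # for example, a missing dependency surfaced in the clean environment


nightly = [
    ("export solution from development", succeed),
    ("unpack and commit to Git", succeed),
    ("run solution checker", succeed),
    ("import into clean build environment", clean_import_fails),
    ("run automated tests", succeed),
]
for name, ok in run_nightly(nightly):
    print(f"{'PASS' if ok else 'FAIL'}  {name}")
```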

Azure Pipelines is also used to produce the managed solution artifact that the release pipelines deploy to upstream environments such as test and production. The same solution artifact that was used in test is used all the way to production. This ensures that no unexpected changes are introduced as the solution is promoted through the series of environments to production. Azure Pipelines can also build developer code assets, so that they aren't built on a local developer workstation.

GitHub Actions is another option for automating deployments.

Key things to look for with automating deployment

Is Azure DevOps or another automation tool used to automate the build process, or is the team still relying on manual effort?

Has the solution checker been integrated as part of the automated pipeline?

Are all developer code assets being built through a build process, and not on an individual developer's local machine?

Version control

Another element of the build plan to review is the strategy for managing multiple active versions. By default, changes that progress from development to test to production represent a single work stream. If a problem is identified in production, you fix it in development and then promote it through the series of environments back to production. A single work stream like this works well only if no new development is under way.

Suppose the development team has already moved on to version two in their development environment and then fixes a bug identified in production. All of the version-two work in progress would move to production along with the fix, because it's all mixed together. Ideally, the fix is made in a separate work stream, in a development environment that represents only what is already in production. That way, what gets promoted includes only the fix and nothing from version two. This requires the project team to plan ahead and have a strategy for managing multiple active versions. The strategy could be as simple as an active development stream plus a maintenance stream that supports production. More complex projects might even have multiple active development streams at the same time.

Active development and maintenance streams are typically run side by side using a combination of Dataverse environments and source control branches. A branch provides a copy of the project assets and an isolated way to make changes in an environment associated with that branch. Changes from one branch can be merged into another. Keep the branching strategy as simple as possible to avoid resolving many conflicts when branches are merged.

Key things to look for with version control

Has the team considered how they will maintain development work separate from bug fixes for production?

Is the identified branching strategy too complex?

Is the branching for source control coordinated with the environment plan?

Is there a change management process in place to make sure bug fixes make it back into the main development environment?

Test strategy

While test strategy has its own workshop, the testing process itself must be integrated into the overall ALM strategy. For example, if the quality assurance team plans to test the previous day's work every day, you must make sure the team has an environment updated with yesterday's changes before testing can begin.

Test automation should also be considered and could be integrated into the build process. This could include provisioning a test environment, loading test data, and running the tests. The test results could then be used to gate the build pipelines and stop them from continuing when there are errors.

Model-driven Power Apps can be tested using EasyRepro, and canvas apps can be tested using Power Apps Test Studio. Test Studio works by recording actions in the application and replaying them to automate testing. EasyRepro uses scripts to define the test steps. Once the tests are recorded or scripted, they can be included as a task in an Azure DevOps pipeline.

As testing happens, it will identify problems that need to be evaluated and fixed. The overall ALM strategy must cover how problems are documented, triaged, and prioritized. If a development team dropped everything to fix every bug as it came in, the overall process would be disrupted. Typically, a project should establish a change control process so that each issue is triaged and prioritized, and a decision is made about when its fix will be introduced.

Key things to look for in a testing strategy

Does the ALM strategy align with the overall testing strategy?

Is there a change control process in place to track and manage any problems that are identified?

Has at least some testing been integrated into the build automation process?

Release and deploy

Once the solution is built, it must be promoted through the test environments to production. Generally, this involves more than simply importing a solution into those environments. For starters, you need to know the starting state of each environment. For example, should the test environment start with a standard set of test data each time, or pick up where the last round of testing left off? Each environment is also likely to have configuration data (for example, environment variables) that must be set, at least for the first deployment. In most cases, you also need to make sure test environments aren't talking to production services. Sending a test email to all your real customers is typically not appreciated.
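A pre-deployment check can catch missing configuration and test environments wired to production services. This sketch assumes the per-environment values are available to the pipeline as a simple mapping. The variable names (contoso_ErpEndpoint, contoso_EmailMode), their values, and the list of production endpoints are all hypothetical.

```python
def environment_problems(
    environment: str,
    values: dict[str, str],
    required: set[str],
    production_endpoints: set[str],
) -> list[str]:
    """Check the values supplied for an environment's variables before deployment."""
    problems = [f"missing value for {name}" for name in sorted(required - set(values))]
    if environment != "production":
        # Non-production environments must not reach real services or real recipients
        for name, value in sorted(values.items()):
            if value in production_endpoints:
                problems.append(f"{name} points at a production service")
        if values.get("contoso_EmailMode") == "send":
            problems.append("contoso_EmailMode would send email to real recipients")
    return problems


# Hypothetical configuration
PROD = {"https://erp.contoso.example/api"}
REQUIRED = {"contoso_ErpEndpoint", "contoso_EmailMode"}
test_values = {"contoso_ErpEndpoint": "https://erp.contoso.example/api", "contoso_EmailMode": "send"}
for problem in environment_problems("test", test_values, REQUIRED, PROD):
    print(problem)
```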

Key things to look for with release and deployment

Is there a plan for what each test environment looks like from a data perspective, as well as its connections to any services?

Have the necessary solution environment variables been created to ensure the solution can be tailored for each environment?

Reference data

Most solutions have some form of reference data that must be managed across environments. The configuration migration tool helps export data from one environment and import it into others. It works by defining a schema of the data to export, including fields and relationships. When the export runs, a data .zip file is created that contains all the exported data. The configuration migration tool can also import that data into an environment, and the data file can also be used with the package deployer tool. The tool avoids creating duplicates by using uniqueness conditions defined for each entity, based on a combination of fields. If a matching record is found, it's updated; otherwise, a new record is created.
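The match-then-update-or-create behavior described above can be modeled as follows. This sketch only illustrates the logic. It isn't how the configuration migration tool is implemented. The records are plain dictionaries, and the "price tier" fields are hypothetical.

```python
def upsert(existing: list[dict], incoming: list[dict], key_fields: tuple[str, ...]) -> tuple[int, int]:
    """Merge incoming records into existing ones, matching on a combination of fields.

    Returns (created, updated). Modifies `existing` in place.
    """
    index = {tuple(rec[f] for f in key_fields): rec for rec in existing}
    created = updated = 0
    for rec in incoming:
        key = tuple(rec[f] for f in key_fields)
        match = index.get(key)
        if match is not None:
            match.update(rec)  # matching record found: update it
            updated += 1
        else:
            new_rec = dict(rec)  # no match: create a new record
            existing.append(new_rec)
            index[key] = new_rec
            created += 1
    return created, updated


# Hypothetical reference data: price tiers unique on (name, code)
target = [{"name": "Gold", "code": "G", "discount": 10}]
source = [
    {"name": "Gold", "code": "G", "discount": 15},
    {"name": "Silver", "code": "S", "discount": 5},
]
print(upsert(target, source, ("name", "code")))  # (1, 1)
```

Running the same import twice leaves the target unchanged the second time, apart from updates, which is what makes repeated deployments of reference data safe.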

Key things to look for with reference data

Is there a clear understanding of what constitutes reference data for the solution?

Is it clear what the master copy of reference data is, and where it will be stored and tracked?

Is there a plan for how to manage reference data between environments?

Package deployer

Another tool that helps manage release and deployment is package deployer. Package deployer lets you create a package that includes multiple solutions, data from the configuration migration tool, and custom code logic that runs before and after the package is imported. In many ways, you can think of package deployer as an install wizard for Microsoft Power Platform. Package deployer can be run interactively to import packages and data into an environment manually. It can also be run from PowerShell, which allows automation and integration into Azure Pipelines.

Key things to look for with package deployer

- Has the team considered whether package deployer would be helpful in their deployment strategy?

Release pipelines

Earlier in this unit, we discussed automating the build process, which prepares the solution for deployment and produces it as a build artifact. Azure Pipelines also supports release pipelines for managing releases to one or more environments. Release pipelines take the build artifacts (for example, a managed solution file) and deploy them to test or production environments. Release pipelines can incorporate approval processes between environments. These approvals can be used to coordinate the go decision from multiple stakeholders as the release progresses through the environment tiers.
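Two ideas from this section can be combined in a small model: promoting one unchanged artifact, and gating each tier on approvals. The sketch assumes the build recorded a SHA-256 digest of the managed solution file. The stage and approver names are hypothetical. The actual import step is left as a comment.

```python
import hashlib


def digest(artifact: bytes) -> str:
    return hashlib.sha256(artifact).hexdigest()


def promote(
    artifact: bytes,
    build_digest: str,
    stages: list[tuple[str, set[str]]],
    approvals: dict[str, set[str]],
) -> list[str]:
    """Deploy one build artifact through ordered stages, stopping at the first unapproved stage."""
    if digest(artifact) != build_digest:
        raise ValueError("artifact doesn't match the one the build produced")
    deployed = []
    for environment, required_approvers in stages:
        missing = required_approvers - approvals.get(environment, set())
        if missing:
            break  # wait for the go decision before continuing
        deployed.append(environment)  # the real import step would run here
    return deployed


# Hypothetical artifact, stages, and approvers
artifact = b"managed solution bytes"
recorded = digest(artifact)
stages = [("test", {"qa-lead"}), ("production", {"qa-lead", "business-owner"})]
print(promote(artifact, recorded, stages, {"test": {"qa-lead"}, "production": {"qa-lead"}}))
```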

Key things to look for with release pipelines

Is Azure Pipelines or a similar tool being used to deploy the built solutions between environments?

As the deployment progresses between environments, how will approvals be handled?

Run model

This is where we look at the post-go-live strategy. It assumes you've completed your initial deployment and are now servicing what you deployed and working on enhancements. As users work with what you've built, they'll inevitably identify things they want to change or enhance to be more productive. The project team should have a tool in place for capturing these change requests, and a way to evaluate, prioritize, and categorize them into updates.

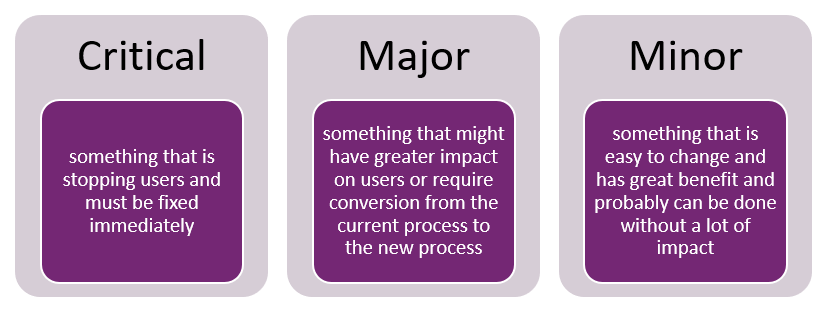

For example, these changes could easily be grouped into three categories: critical, minor, and major.

As you plan how to manage ongoing changes, you must have processes in place to handle at least these three types of changes. Critical changes, for example, must be deployable almost immediately because they block users from completing their work. These typically can't wait for a weekly deployment, so you need a way to service production without waiting for the next scheduled build and deployment. A process for critical problems is important because you want to avoid making changes directly in the production environment to work around them.

Key things to look for with post-go-live strategy

Does source control branching and environment strategy support deploying critical fixes immediately?

Is there a plan for bringing any pre-go-live backlog of issues forward into a maintenance and enhancement cycle?

Is there change control in place to track and triage problems identified in the operating application?

Is there a process in place to ensure that quickly deployed critical fixes get rolled into the normal release process?

Microsoft update cycle

In addition to the updates that your team creates to enhance the solution or fix problems, Microsoft also updates Microsoft Power Platform and Dynamics 365 applications. Microsoft generally rolls out weekly updates that typically don't disrupt the user experience. These updates are rolled out using safe deployment practices that minimize impact to your environments. You can control when these updates are applied to your production environment via the Admin tool.

Most Dataverse and Dynamics 365 application changes roll out by region. To ensure there are no adverse effects on your customizations, one strategy is to test them in an environment that resides in a region that gets the updates before yours, and run automated testing there to identify any problems. Even if that isn't viable, you could still run weekly automated tests for critical functionality to make sure your customizations are not adversely affected.

Currently, twice a year (April and October), Microsoft releases updates that are more significant, and can include changes that can be disruptive to the existing application user experience. These updates are planned in advance, and are first announced by a published release plan detailing the upcoming changes. This happens several months before the actual update is automatically applied.

Before the automatic update, administrators can manually enable the changes in environments ahead of when they would be applied automatically to all environments. This allows you to copy a production environment to a non-production sandbox environment and test the changes before they're deployed to production. When testing is successful, you can opt in to the changes on your own schedule rather than waiting for the automatic update.

Key things to look for with update cycles

Has the team evaluated whether they need weekly testing, and have they put plans in place?

Does the customer understand the update process, and how they can opt in to test the twice-yearly updates ahead of time?

Capacity management

Capacity management is important both before deployment and for managing usage once the project is live. Capacity management is more than just storage, although storage is a key element of it. Another important capacity to monitor is API requests. Make sure you're staying within your license allocations and, where necessary, add API request add-ons. Other products and add-ons, including AI Builder and Power Virtual Agents, also have capacity you should consider in your overall capacity management effort.

From a storage capacity point of view, Dataverse capacity is broken down into database, log, and file capacity. Each is tracked separately, and additional storage can be added for each one based on your actual usage. To create new environments in your tenant, you must have at least 1 GB of database capacity remaining. This is important to consider as you create an ALM strategy that includes multiple environments. If your ALM strategy involves temporary environments, they only consume capacity when they're actually in use. However, to create them through an automated process, capacity must be available when the automation runs; otherwise, the automation will fail.
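Automation that creates temporary environments can fail fast with a clear message instead of failing partway through. This minimal sketch uses the 1 GB threshold from the text. It assumes the entitlement and usage figures are obtained separately, for example from the admin center. How you read them is outside this sketch, and the function name is hypothetical.

```python
MIN_REMAINING_DATABASE_GB = 1.0  # from the text: creating an environment needs at least 1 GB free


def check_capacity_before_provisioning(database_entitlement_gb: float, database_used_gb: float) -> float:
    """Return the remaining database capacity, or raise if a new environment can't be created."""
    remaining = database_entitlement_gb - database_used_gb
    if remaining < MIN_REMAINING_DATABASE_GB:
        raise RuntimeError(
            f"only {remaining:.2f} GB of database capacity remains; "
            f"{MIN_REMAINING_DATABASE_GB:.0f} GB is needed to create an environment"
        )
    return remaining


# Hypothetical figures
print(check_capacity_before_provisioning(10.0, 8.5))  # 1.5
```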

For database capacity, you must estimate initial capacity based on the data you'll migrate into Dataverse at go-live. This includes data that may exist in non-production environments as well. A key first step is understanding the number of records you have per entity. As soon as you can migrate part of your data, you can start getting insight by extrapolating your full-size data load from the sample you loaded into Dataverse. When you can do a full load of the data you plan to migrate, you should be able to get sizing information for that environment. Microsoft Power Platform admin center provides tools for viewing the storage usage of the top entities. In addition to initial capacity, it's important to think about ongoing growth as your solution runs in a live environment. For the main entities, knowing their growth rate can help you determine capacity needs on an ongoing basis.
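The extrapolation and growth reasoning above can be written as a back-of-the-envelope calculation. This sketch assumes that the average size per record measured in the sample holds for the full load and for future records. Real record sizes vary, so treat the result as a starting estimate to refine once a full load is available. The entity figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EntitySizing:
    sample_records: int        # records loaded in a partial migration
    sample_gb: float           # database storage those records used
    full_records: int          # records expected in the full migration
    monthly_new_records: int   # expected growth after go-live

    def projected_gb(self, months: int) -> float:
        # Linear assumption: the sample's average size per record holds for all records
        gb_per_record = self.sample_gb / self.sample_records
        return gb_per_record * (self.full_records + self.monthly_new_records * months)


def database_estimate_gb(entities: dict[str, EntitySizing], months: int) -> float:
    return sum(e.projected_gb(months) for e in entities.values())


# Hypothetical numbers for illustration only
entities = {
    "account": EntitySizing(10_000, 0.5, 200_000, 5_000),
    "contact": EntitySizing(20_000, 0.4, 600_000, 10_000),
}
print(f"at go-live: {database_estimate_gb(entities, 0):.1f} GB")       # 22.0 GB
print(f"after 12 months: {database_estimate_gb(entities, 12):.1f} GB")  # 27.4 GB
```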

File capacity usage depends on how much you use attachments and image data types on records. File capacity is also used if you have one or more Insights applications installed. Estimating file storage is similar to estimating database capacity: it's essential to understand the number of records and the average file size for those records.

Log capacity usage depends on whether you use auditing and the plug-in trace log feature. Even though it consumes some capacity, auditing is a valuable feature to have enabled. Auditing is controlled at the environment, entity, and field level, and should be enabled where it makes sense. It's valuable both for business scenarios that need to know who made what change and for troubleshooting system issues. If it meets your business needs, take advantage of the feature that lets you set a retention period.

You can monitor your storage usage in Microsoft Power Platform admin center. For more information, see Dataverse analytics.

Key things to look for with capacity

Does the team have a good understanding of the volume of records by entity?

Is there a plan in place to monitor capacity after initial go-live to ensure adequate capacity is provisioned?

API request capacity management

API requests include all requests from connectors, each Power Automate step action, and all Dataverse API usage. A full list of what is counted can be found in Requests limits and allocations. Each license you have for Power Apps, Power Automate, and Dynamics 365 provides an allocation of API requests per 24 hours. Generally, the licensed allocation includes plenty of API requests for normal use of the applications you've licensed. If you regularly exceed your allocations, you can purchase additional API requests through add-on licensing. The most common cause of overage is integrations or custom logic doing bulk processing. These processes can often be adjusted to run more efficiently and avoid exceeding the allocation. Solution architects should pay extra attention to these types of processes to make sure they optimize their API usage as much as possible.
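To spot accounts that exceed their allocation "on a regular basis" rather than once, daily usage can be compared against each account's allocation over several days. This sketch assumes you already have per-user request counts for each 24-hour period. The account names and allocation numbers are placeholders, not actual license entitlements. Check Requests limits and allocations for the real figures.

```python
from collections import Counter


def regular_overage(
    daily_usage: list[dict[str, int]],
    allocation: dict[str, int],
    min_days: int,
) -> list[str]:
    """Accounts that exceeded their 24-hour allocation on at least `min_days` of the given days."""
    days_over: Counter[str] = Counter()
    for day in daily_usage:
        for user, requests in day.items():
            # Accounts with no known allocation count as 0 so they surface for review
            if requests > allocation.get(user, 0):
                days_over[user] += 1
    return sorted(user for user, days in days_over.items() if days >= min_days)


# Placeholder allocations and usage for illustration only
allocation = {"alice": 1_000, "integration": 1_000}
usage = [
    {"alice": 200, "integration": 1_500},
    {"alice": 1_200, "integration": 1_800},
    {"alice": 300, "integration": 2_500},
]
print(regular_overage(usage, allocation, min_days=2))  # ['integration']
```

An integration account that shows up here is a candidate for the kind of processing review described above, before any add-on capacity is purchased.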

In addition to tracking API request allocations, Dataverse implements service protection limits. These limits are designed to ensure consistent availability and performance for everyone using the service. Again, normal usage shouldn't trigger service protection. However, high-volume integrations can occasionally run into errors caused by triggering it. The service protection limits are evaluated using a five-minute sliding window. The usage evaluated is the number of requests sent by a user, the combined execution time of those requests, and the number of concurrent requests by a user.
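A sliding window counts only the requests made in the most recent interval. Each check looks back over the same length of time, rather than waiting for a fixed bucket to reset. The client-side sketch below illustrates the idea for request counts only. It doesn't model execution time or concurrency, and `max_requests` is a hypothetical budget a client might set for itself. It isn't the service's actual limit.

```python
from collections import deque


class SlidingWindowCounter:
    """Allow at most `max_requests` within any `window_seconds`-long period."""

    def __init__(self, window_seconds: float, max_requests: int) -> None:
        self.window = window_seconds
        self.max_requests = max_requests
        self._times: deque[float] = deque()

    def _evict(self, now: float) -> None:
        # Drop requests that have slid out of the window
        while self._times and now - self._times[0] >= self.window:
            self._times.popleft()

    def try_acquire(self, now: float) -> bool:
        """Record a request at time `now` if the window has room; return whether it was allowed."""
        self._evict(now)
        if len(self._times) >= self.max_requests:
            return False
        self._times.append(now)
        return True


# Five-minute window with a hypothetical budget of 2 requests
counter = SlidingWindowCounter(window_seconds=300, max_requests=2)
print([counter.try_acquire(t) for t in (0, 10, 20, 300, 305)])
# [True, True, False, True, False]
```

At t=300 the request from t=0 has left the window, so one slot frees up. At t=305 the requests from t=10 and t=300 still fill the window.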

Service protection limits can't be raised by purchasing more license allocations, so applications should handle them with appropriate retry logic. You can read more about service protection limits, and how to implement retry logic, in Service protection API limits.
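Retry logic for service protection errors generally waits for the interval the service specifies and then tries again. Dataverse signals these errors with HTTP status 429 and a Retry-After header. The sketch below assumes `send` is a hypothetical wrapper around your HTTP client that returns the status code, headers, and body. It falls back to exponential backoff when no usable Retry-After value is present. The `sleep` parameter is injectable, so the logic can be tested without waiting.

```python
import time
from typing import Callable, Mapping, Optional

Response = tuple[int, Mapping[str, str], bytes]


def _retry_after_seconds(headers: Mapping[str, str]) -> Optional[float]:
    """Read Retry-After as a number of seconds; header names are matched case-insensitively."""
    for name, value in headers.items():
        if name.lower() == "retry-after":
            try:
                return float(value)
            except ValueError:
                return None  # for example, an HTTP-date value; fall back to backoff
    return None


def send_with_retry(
    send: Callable[[], Response],
    max_retries: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Response:
    """Call `send` and retry on HTTP 429, honoring Retry-After when present."""
    attempt = 0
    while True:
        status, headers, body = send()
        if status != 429 or attempt >= max_retries:
            return status, headers, body
        delay = _retry_after_seconds(headers)
        if delay is None:
            delay = base_delay * 2 ** attempt  # exponential backoff
        sleep(delay)
        attempt += 1


# Simulated responses: throttled twice, then success
responses = iter([(429, {"Retry-After": "2"}, b""), (429, {}, b""), (200, {}, b"ok")])
waits: list[float] = []
print(send_with_retry(lambda: next(responses), sleep=waits.append)[0], waits)  # 200 [2.0, 2.0]
```

Capping retries keeps a persistently throttled integration from looping forever. The final 429 is returned to the caller, which can log it or reschedule the work.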

Key things to look for with API requests

Has the team identified any potential hot spots for API requests that could cause problems?

Has retry logic been added to all integrations or bulk API work?

Is there a plan in place to monitor API usage and adjust capacity as needed?