Exercise - Implement the Azure OpenAI service

Let's start with the simplest service, OpenAiService. It has only two methods to implement, so we can get basic prompts and completions working right away. We don't implement the Azure Cosmos DB for NoSQL data service until later, so sessions can't yet persist across debugging sessions.

In this exercise, we have a few key requirements:

- Send a question from the user to the AI assistant and ask for a response.

- Send a series of prompts to the AI assistant and ask for a summarization of the conversation.

Ask the AI model a question

First, implement a question-answer conversation by sending a system prompt, a question, and a session ID so the AI model can provide an answer in the context of the current conversation. Make sure you measure the number of tokens it takes to parse the prompt and to return a response (called a completion in this context).
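As a rough sketch of the result shape described above, the stub below returns the completion text together with separate prompt and response token counts, and a caller deconstructs the tuple. `FakeChatCompletionAsync` and its hard-coded values are hypothetical stand-ins for illustration; no Azure OpenAI call is made.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical stand-in for the real service method: no network call is made,
// and the text and token counts below are made-up illustration values.
static Task<(string response, int promptTokens, int responseTokens)> FakeChatCompletionAsync(
    string sessionId, string userPrompt)
{
    return Task.FromResult((
        response: $"(stub answer to: {userPrompt})",
        promptTokens: 12,
        responseTokens: 7));
}

// Deconstruct the tuple so each token count can be tracked separately.
var (response, promptTokens, responseTokens) =
    await FakeChatCompletionAsync("session-1", "What is Azure Cosmos DB?");

int totalTokens = promptTokens + responseTokens;
Console.WriteLine($"{response} | prompt: {promptTokens}, response: {responseTokens}, total: {totalTokens}");
```

Keeping the two counts separate, rather than returning a single total, lets the caller report prompt cost and response cost independently.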

Open the Services/OpenAiService.cs file.

Within the `GetChatCompletionAsync` method, remove any existing placeholder code.

```csharp
public async Task<(string response, int promptTokens, int responseTokens)> GetChatCompletionAsync(string sessionId, string userPrompt)
{
}
```

Create a `ChatRequestSystemMessage` variable named `systemMessage`. This message type carries the System role; use the `_systemPrompt` variable for its content.

```csharp
ChatRequestSystemMessage systemMessage = new(_systemPrompt);
```

Create a `ChatRequestUserMessage` variable named `userMessage`. This message type carries the `ChatRole.User` role; pass the `userPrompt` method parameter as the message's content.

```csharp
ChatRequestUserMessage userMessage = new(userPrompt);
```

Create a new variable named `options` of type `ChatCompletionsOptions`. Add the two message variables to the `Messages` list, set the value of `User` to the `sessionId` method parameter, set `MaxTokens` to `4000`, and set the remaining properties to the recommended values here.

```csharp
ChatCompletionsOptions options = new()
{
    DeploymentName = _modelName,
    Messages = {
        systemMessage,
        userMessage
    },
    User = sessionId,
    MaxTokens = 4000,
    Temperature = 0.3f,
    NucleusSamplingFactor = 0.5f,
    FrequencyPenalty = 0,
    PresencePenalty = 0
};
```

Tip

4096 is the maximum number of tokens for the gpt-35-turbo model. We're just rounding down here to simplify things.

Asynchronously invoke the `GetChatCompletionsAsync` method of the Azure OpenAI client variable (`_client`). Pass in the `options` variable you created. Store the result in a variable named `completions` of type `ChatCompletions`.

```csharp
ChatCompletions completions = await _client.GetChatCompletionsAsync(options);
```

Tip

The `GetChatCompletionsAsync` method returns an object of type `Task<Response<ChatCompletions>>`. The `Response<T>` class contains an implicit conversion to type `T`, allowing you to select a type based on your application's needs. You can store the result as either `Response<ChatCompletions>` to get the full metadata from the response or just `ChatCompletions` if you only care about the content of the result itself.

Finally, return a tuple as the result of the `GetChatCompletionAsync` method with the content of the completion as a string, the number of tokens associated with the prompt, and the number of tokens for the response.

```csharp
return (
    response: completions.Choices[0].Message.Content,
    promptTokens: completions.Usage.PromptTokens,
    responseTokens: completions.Usage.CompletionTokens
);
```

Save the Services/OpenAiService.cs file.
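The implicit conversion that lets the awaited result be stored directly as `ChatCompletions` can be illustrated without the SDK. The sketch below uses a hypothetical `FakeResponse<T>` wrapper and a hypothetical `GetAsync` method that only mimic the pattern; they are not the SDK's `Response<T>` or client.

```csharp
using System;
using System.Threading.Tasks;

// Both assignments await the same kind of call; the declared target type decides
// whether the wrapper is kept or unwrapped through the implicit conversion.
FakeResponse<string> full = await GetAsync();   // keeps the metadata
string contentOnly = await GetAsync();          // implicit conversion to T

Console.WriteLine($"{full.Status}: {full.Value}");
Console.WriteLine(contentOnly);

// Hypothetical async call standing in for a client method.
static Task<FakeResponse<string>> GetAsync() =>
    Task.FromResult(new FakeResponse<string>("hello", 200));

// Hypothetical wrapper that mimics the pattern; not the SDK's Response<T>.
sealed class FakeResponse<T>
{
    public FakeResponse(T value, int status) { Value = value; Status = status; }
    public T Value { get; }
    public int Status { get; }
    public static implicit operator T(FakeResponse<T> response) => response.Value;
}
```

Declaring the variable as the wrapper type keeps the extra metadata available; declaring it as the inner type keeps the calling code shorter when only the content matters.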

Ask the AI model to summarize a conversation

Now, send the AI model a different system prompt, your current conversation, and session ID so the AI model can summarize the conversation in a couple of words.
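The conversation has to reach the model as a single block of text. A minimal sketch of one way to build that text is shown here, assuming the history is available as prompt and completion pairs. The `history` list and its sample content are hypothetical; the application's own session model may differ.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical conversation history as (prompt, completion) pairs.
var history = new List<(string Prompt, string Completion)>
{
    ("Hello", "Hi, how can I help?"),
    ("Suggest a name for my cat", "How about Whiskers?"),
};

// Join every exchange into one block of text for the summarization request.
string conversationText = string.Join(
    Environment.NewLine,
    history.Select(turn => $"{turn.Prompt} {turn.Completion}"));

Console.WriteLine(conversationText);
```

The resulting string is the kind of value that would be passed as the `conversationText` argument of `SummarizeAsync`.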

Within the `SummarizeAsync` method, remove any existing placeholder code.

```csharp
public async Task<string> SummarizeAsync(string sessionId, string conversationText)
{
}
```

Create a `ChatRequestSystemMessage` variable named `systemMessage`. This message type carries the System role; use the `_summarizePrompt` variable for its content.

```csharp
ChatRequestSystemMessage systemMessage = new(_summarizePrompt);
```

Create a `ChatRequestUserMessage` variable named `userMessage`. This message type carries the User role; pass the `conversationText` method parameter as the message's content.

```csharp
ChatRequestUserMessage userMessage = new(conversationText);
```

Create a `ChatCompletionsOptions` variable named `options` with the two message variables in the `Messages` list, `User` set to the `sessionId` method parameter, `MaxTokens` set to `200`, and the remaining properties set to the recommended values here.

```csharp
ChatCompletionsOptions options = new()
{
    DeploymentName = _modelName,
    Messages = {
        systemMessage,
        userMessage
    },
    User = sessionId,
    MaxTokens = 200,
    Temperature = 0.0f,
    NucleusSamplingFactor = 1.0f,
    FrequencyPenalty = 0,
    PresencePenalty = 0
};
```

Invoke `_client.GetChatCompletionsAsync` asynchronously using the `options` variable as a parameter. Store the result in a variable named `completions` of type `ChatCompletions`.

```csharp
ChatCompletions completions = await _client.GetChatCompletionsAsync(options);
```

Return the content of the completion as a string as the result of the `SummarizeAsync` method.

```csharp
return completions.Choices[0].Message.Content;
```

Save the Services/OpenAiService.cs file.

Check your work

At this point, your application should have a thorough enough implementation of the Azure OpenAI service that you can test the application. Remember, you don't have a data store implementation yet, so your conversations aren't persisted between debugging sessions.

Open a new terminal.

Start the application with hot reloads enabled using `dotnet watch`.

```bash
dotnet watch run --non-interactive
```

Tip

The Hot Reload feature is enabled here if you need to make a small correction to the application's code. For more information, see .NET Hot Reload support for ASP.NET Core.

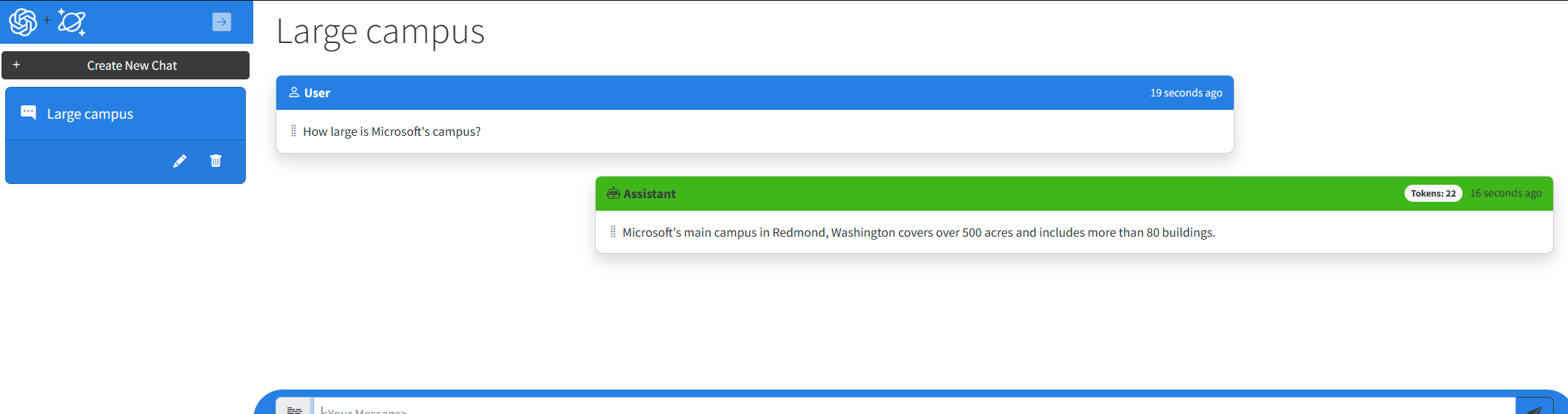

Visual Studio Code launches the in-tool simple browser again with the web application running. In the web application, create a new chat session and ask the AI assistant a question. The AI assistant now responds with a completion created by the model. You should also notice that the token UI fields are now populated with real-world token usage for each completion and prompt.

Close the terminal.