Evaluate responses

When educators write prompts asking for information, generative AI models don't "know" the answer. Instead, they predict the most likely response based on their training data. Even with a well-written prompt, the model might generate an incorrect or fabricated response.

These fabrications can spread misinformation. Copilot aims to base all its responses on reliable sources, but AI can make mistakes, and third-party content on the internet isn't always accurate or reliable. Copilot may sometimes misrepresent the information it finds, so you may see responses that sound convincing but are incomplete, inaccurate, or inappropriate.

Copilot works to avoid showing unexpected offensive content in search results and has taken steps to prevent its chat features from engaging on potentially harmful topics, but educators may still see unexpected results. To provide feedback or report concerns directly to Microsoft, use the feedback features below the response.

When Copilot responds to a prompt, it also provides two key pieces of information: the search terms it used to generate the response and links to its content sources. Educators can use these details to evaluate the response. If the search terms don't reflect the question you intended, start a new prompt with different wording. If the source links aren't reliable, ask Copilot to refine the response using specific, more reliable websites that you provide.

Standard 2.3.b in the ISTE Standards for Educators states that educators establish a learning culture that promotes curiosity and critical examination of online resources and fosters digital literacy and media fluency. Educators who critically evaluate Copilot results through analysis and lateral reading model digital citizenship skills for their learners. Learn more about preparing today's learners for online success in the Digital Citizenship module.

Practice checking sources

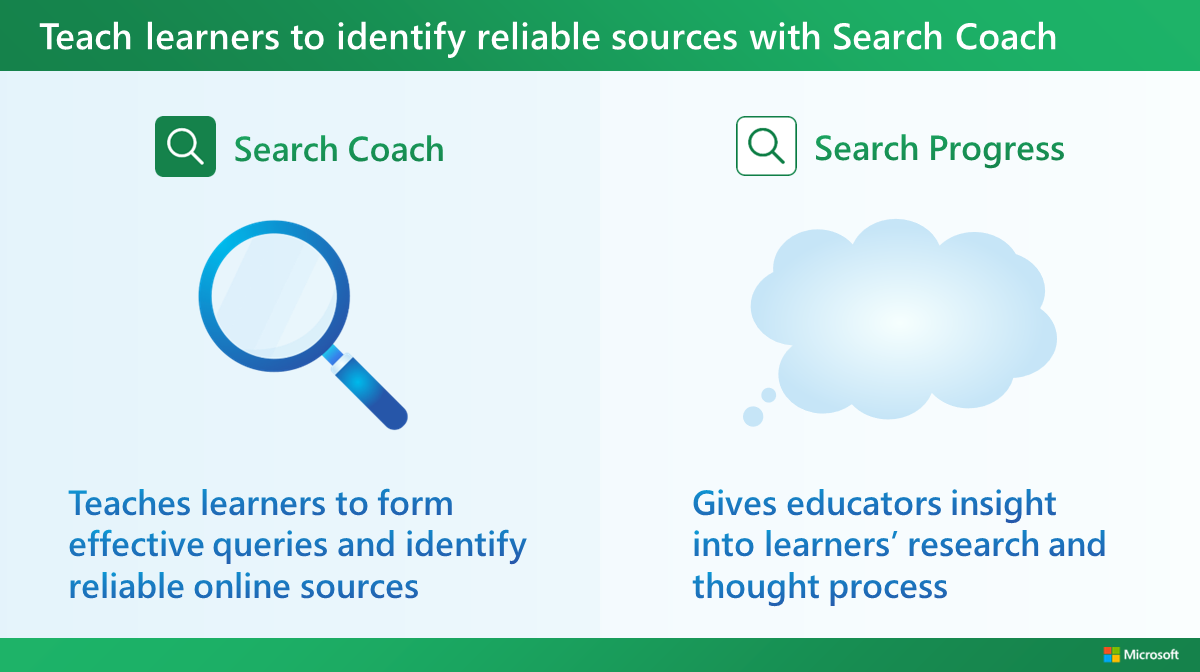

Educators can work with learners to practice these skills using Search Coach and Search Progress, free tools in Microsoft Teams for Education. Search Coach gives learners context and guidance for asking effective questions and discovering reliable sources. Many lesson plans are available to introduce concepts that learners can apply in search engines like Bing. Educators can use Search Progress to track how well learners understand evaluating sources for validity, reliability, accuracy, relevance, and bias.

Teach learners to identify reliable sources with Search Coach & Search Progress (Accessible PDF)

Tips for evaluating accuracy and generating reliable content with Copilot

Here are some proactive steps you can take to evaluate accuracy and generate reliable content:

- Use the More precise conversation style in Copilot if you're looking for concise, straightforward information.

- Read references carefully. Select the source links and verify that the AI model correctly interpreted the source texts.

- Ask Copilot to summarize specific information rather than asking an open-ended question. For example, instead of asking, "What is the best way to start a class discussion?", write a prompt such as, "List five ways to begin a class discussion." Being specific can help the AI model generate more accurate and relevant responses.

- Rephrase a prompt in multiple ways to check the consistency of the responses.

- Pose a variety of questions from different perspectives to see how the responses compare.