Manage and respond to alerts in Microsoft Defender for Cloud Apps

Alerts are the entry points for organizations to understand their cloud environments more deeply. Based on what they learn from alerts, organizations might want to create new policies. For example, suppose you discover that an administrator signed in from Greenland, even though no one in your organization has ever signed in from Greenland before. You can create a policy that automatically suspends an account when someone uses it to sign in from that location.

Monitor alerts

It's a good idea to review all of your alerts and use them to refine your policies. If harmless events are flagged as violations of existing policies, refine those policies so that you receive fewer unnecessary alerts.

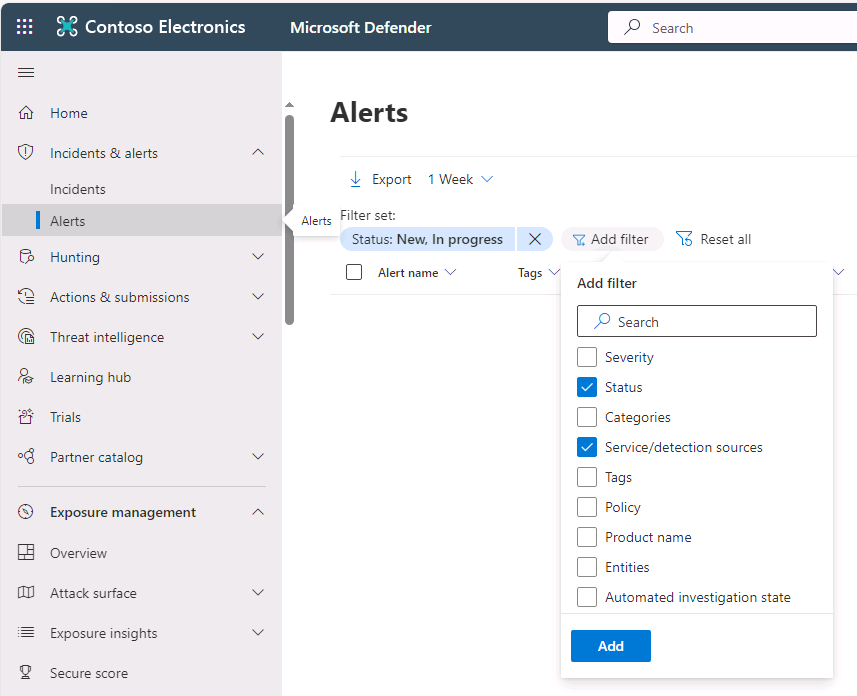

- In the Microsoft Defender portal, in the left-hand navigation pane, select Incidents & alerts to expand the group, and then select Alerts.

- On the Alerts page, select Add filter on the menu bar.

- In the Add filter menu that appears, select any filters specific to your current investigation and then select Add. Select the filters that provide full visibility into any suspicious activity or violation of your established policies. For example, you can:

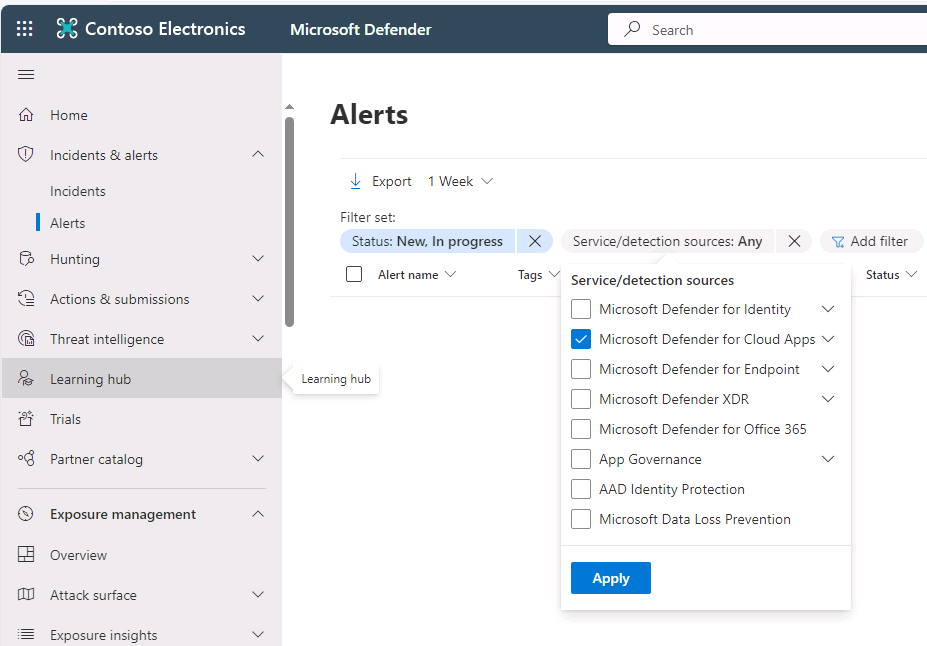

Select the Service/detection sources option and then select Add.

On the Alerts page, select the Service/detection sources: Any filter option on the menu bar.

On the Service/detection sources menu that appears, select Microsoft Defender for Cloud Apps service and then select Apply to see any alerts related to your organization's cloud apps.
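If you prefer to retrieve the same filtered view programmatically, the portal filter can be mirrored with an OData query. The sketch below assumes the Microsoft Graph security API `alerts_v2` endpoint and its `serviceSource` and `severity` properties; it only builds the request URL. Authenticating and sending the request are out of scope, and the helper name `build_alerts_query` is hypothetical.

```python
from urllib.parse import quote

# Assumption: Microsoft Graph security API v1.0 "alerts_v2" endpoint.
GRAPH_ALERTS_URL = "https://graph.microsoft.com/v1.0/security/alerts_v2"


def build_alerts_query(service_source="microsoftDefenderForCloudApps", severity=None):
    """Hypothetical helper: return a Graph URL whose $filter mirrors the
    portal's Service/detection sources filter, optionally narrowed by severity."""
    clauses = [f"serviceSource eq '{service_source}'"]
    if severity is not None:
        clauses.append(f"severity eq '{severity}'")
    # Percent-encode the OData expression; the "$filter" parameter name stays literal.
    return f"{GRAPH_ALERTS_URL}?$filter={quote(' and '.join(clauses))}"


print(build_alerts_query())
print(build_alerts_query(severity="high"))
```

Keeping the filter clauses in a list makes it easy to add further conditions with `and`, much as you would stack filters in the portal.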

The alerts that appear can help you safeguard the security posture you defined for your cloud environment. For each alert, you need to investigate and determine the nature of the violation and the required response.

- You can filter the alerts by Alert type or Severity to process the most important ones first.

- Select a specific alert. Depending on the alert type, you see different actions that you can take before resolving the alert.

- You can filter by app. The apps listed are the ones for which Microsoft Defender for Cloud Apps detected activities.

- There are three types of violations you must deal with when investigating alerts:

  - Serious violations. These require an immediate response. Examples include:

    - For a suspicious activity alert, you want to suspend the account until the user changes their password.

    - For a data leak, you want to restrict permissions or quarantine the file.

    - If Cloud Discovery detects a new app, you want to block access to the service on your proxy or firewall.

  - Questionable violations. These require further investigation. Examples include:

    - You can contact the user or the user's manager about the nature of the activity.

    - Leave the alert open until you have more information.

  - Authorized violations or anomalous behavior. These can result from legitimate use.

    - You can dismiss the alert.

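The filtering and prioritization advice above amounts to building a work queue: keep only the alerts from one source (and optionally one app), then process the most severe first. The following is a minimal local sketch, assuming alerts are plain dictionaries with hypothetical `title`, `source`, `app`, and `severity` fields; it is not how the portal itself is implemented, and the sample alerts are illustrative only.

```python
# Hypothetical ranking; a higher number means "process first".
SEVERITY_RANK = {"high": 3, "medium": 2, "low": 1, "informational": 0}


def triage_queue(alerts, source="Microsoft Defender for Cloud Apps", app=None):
    """Keep alerts from one detection source (and optionally one app),
    ordered from most to least severe."""
    selected = [
        a for a in alerts
        if a["source"] == source and (app is None or a["app"] == app)
    ]
    # sorted() is stable even with reverse=True, so alerts of equal
    # severity keep their original order.
    return sorted(selected, key=lambda a: SEVERITY_RANK[a["severity"]], reverse=True)


# Illustrative sample data (hypothetical alerts).
alerts = [
    {"title": "New location", "source": "Microsoft Defender for Cloud Apps", "app": "Box", "severity": "low"},
    {"title": "Compromised account", "source": "Microsoft Defender for Cloud Apps", "app": "Microsoft 365", "severity": "high"},
    {"title": "Malware detected", "source": "Microsoft Defender for Endpoint", "app": None, "severity": "high"},
    {"title": "File policy violation", "source": "Microsoft Defender for Cloud Apps", "app": "Box", "severity": "medium"},
]

for alert in triage_queue(alerts):
    print(alert["severity"], alert["title"])
```

Passing `app="Box"` narrows the queue to that app, which corresponds to filtering by app in the portal.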

When you dismiss an alert, it's helpful to submit feedback about why you're dismissing it. The Microsoft Defender for Cloud Apps team uses this feedback as an indication of the alert's accuracy and to fine-tune its machine learning models for future alerts. If you select the It's OK to contact me about this alert check box, Microsoft might contact you in select cases for additional information.

You can follow these guidelines in deciding how to categorize the alert:

- If a legitimate activity triggered the alert and it isn't a security issue, the alert is one of these types:

  - Benign positive. The alert is accurate, but the activity is legitimate. You can dismiss the alert and set the reason to Actual severity is lower or Not interesting.

  - False positive. The alert is inaccurate. Dismiss the alert and set the reason to Alert is not accurate.

- If any activity triggered the alert and it's a security issue, the alert is:

  - True positive. The alert relates to an actual risky event that an insider or outsider committed, either maliciously or unintentionally. Resolve the alert after the organization has completed all appropriate actions to remediate the event.

- If there's too much noise to determine the legitimacy and accuracy of an alert, dismiss it and set the reason to Too many similar alerts.
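The categorization guidelines above form a small decision procedure. This sketch encodes them, assuming you have already answered three yes/no questions about the alert; the `Triage` type and `categorize_alert` function are hypothetical names, and the dismissal reason strings are the ones listed in the guidelines.

```python
from typing import NamedTuple, Tuple


class Triage(NamedTuple):
    label: str                # alert category from the guidelines
    action: str               # what to do with the alert
    reasons: Tuple[str, ...]  # acceptable dismissal reasons, if any


def categorize_alert(is_security_issue, is_accurate=True, too_noisy=False):
    """Hypothetical helper that applies the categorization guidelines."""
    if too_noisy:
        # Too much noise to judge legitimacy or accuracy.
        return Triage("Undetermined", "dismiss", ("Too many similar alerts",))
    if is_security_issue:
        # True positive: resolve only after remediation is complete.
        return Triage("True positive", "resolve after remediation", ())
    if is_accurate:
        # Accurate alert, but the activity was legitimate.
        return Triage("Benign positive", "dismiss", ("Actual severity is lower", "Not interesting"))
    # The alert itself was wrong.
    return Triage("False positive", "dismiss", ("Alert is not accurate",))


print(categorize_alert(is_security_issue=False, is_accurate=False))
```

Checking for noise first reflects the last guideline: when you can't judge legitimacy or accuracy, the other questions can't be answered reliably.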

Alert types

The following table lists the alert types and recommends ways an organization can resolve each one.

| Alert type | Description | Recommended resolution |
|---|---|---|
| Activity policy violation | This type of alert is the result of a policy you created. | To work with this type of alert in bulk, Microsoft recommends working in the Policy center to mitigate these alerts. Fine-tune the policy to exclude noisy entities by adding more filters and more granular controls. If the policy is accurate, the alert is warranted and indicates a violation that you want to stop immediately. Consider adding automatic remediation to the policy. |
| File policy violation | This type of alert is the result of a policy you created. | To work with this type of alert in bulk, Microsoft recommends working in the Policy center to mitigate these alerts. Fine-tune the policy to exclude noisy entities by adding more filters and more granular controls. If the policy is accurate, the alert is warranted and indicates a violation that you want to stop immediately. Consider adding automatic remediation to the policy. |
| Compromised account | Microsoft Defender for Cloud Apps triggers this alert when it identifies a compromised account. A compromised account means there's a high probability that someone used the account in an unauthorized way. | Microsoft recommends suspending compromised accounts until you can reach the users and verify that they were the ones who changed their passwords. |
| Inactive account | Microsoft Defender for Cloud Apps triggers this alert when no one has used an account in one of the organization's connected cloud apps in the past 60 days. | Contact the user and the user's manager to determine whether the account is still active. If it isn't, suspend the user and terminate the license for the app. |
| New admin user | Alerts you to changes in your privileged accounts for connected apps. | Confirm that the user requires the new administrator permissions. If they don't, consider revoking the admin privileges to reduce exposure. |
| New admin location | Alerts you to changes in your privileged accounts for connected apps. | Confirm that the sign-in from this anomalous location was legitimate. If it wasn't, consider revoking admin permissions or suspending the account to reduce exposure. |
| New location | An informative alert about access to a connected app from a new location. Microsoft Defender for Cloud Apps triggers this alert only once per country/region. | Investigate the specific user's activity. |
| New discovered service | A Shadow IT alert: Cloud Discovery detected a new app. | To evaluate the app: 1. Assess the risk of the service based on the app catalog. 2. Drill down into the activity to understand usage patterns and prevalence. 3. Decide whether to sanction or unsanction the app. For unsanctioned apps: 1. Consider blocking use in your proxy or firewall. 2. If you have an unsanctioned app and a sanctioned app in the same category, you can export a list of users of the unsanctioned app and then contact them to migrate them to the sanctioned app. |
| Suspicious activity | This alert lets you know that Microsoft Defender for Cloud Apps detected anomalous activity that isn't aligned with the expected activities or users in the organization. | Investigate the behavior and confirm it with the user. This type of alert is a good starting point for learning more about your environment and creating new policies. For example, if someone suddenly uploads a large amount of data to one of your connected apps, you can create a policy to govern that type of anomalous behavior. |
| Use of personal account | This alert lets you know that a new personal account has access to resources in your connected apps. | Remove the user's collaborations in the external account. |
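Two of the detection rules in the table are simple enough to sketch: the 60-day inactivity window and the once-per-country/region limit on New location alerts. The following is a simplified illustration, not Defender for Cloud Apps' actual logic. It assumes activity timestamps are timezone-aware, treats "in the past 60 days" as "more than 60 days since the last activity", and uses an optional seed of known countries/regions as a stand-in for any baseline the service learns. `is_inactive` and `NewLocationDetector` are hypothetical names.

```python
from datetime import datetime, timedelta, timezone

# Threshold taken from the Inactive account row of the table.
INACTIVITY_WINDOW = timedelta(days=60)


def is_inactive(last_activity, now):
    """Hypothetical check: True if the account's last activity is
    more than 60 days before `now`."""
    return now - last_activity > INACTIVITY_WINDOW


class NewLocationDetector:
    """Hypothetical sketch: flag each country/region at most once."""

    def __init__(self, known=()):
        # Assumption: seed with countries/regions already seen in the organization.
        self._seen = set(known)

    def observe(self, country):
        """Return True the first time a country/region appears, False afterward."""
        if country in self._seen:
            return False
        self._seen.add(country)
        return True


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
print(is_inactive(datetime(2024, 3, 1, tzinfo=timezone.utc), now))

detector = NewLocationDetector(known=["US"])
print([detector.observe(c) for c in ["US", "GL", "US", "GL"]])
```

In this example, the first sign-in from Greenland (`GL`) is flagged and later sign-ins from the same place are not, which matches the once-per-country/region behavior described for New location alerts.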