Access your data in Microsoft OneLake

The tables that you link from Dataverse to Fabric appear in Fabric as a lakehouse. These tables are linked to Dataverse through Dataverse shortcuts from Microsoft OneLake, the storage solution for Fabric. You can access this data in several ways.

Access data by using endpoints

When the system establishes the link, it creates a lakehouse in the workspace that you provided. The system generates a unique name for the lakehouse from a combination of factors. It also generates the following two endpoints from the lakehouse:

SQL Analytics endpoint

Default semantic model

These endpoints let users, such as data analysts, start querying data and building reports immediately.

SQL Analytics endpoint

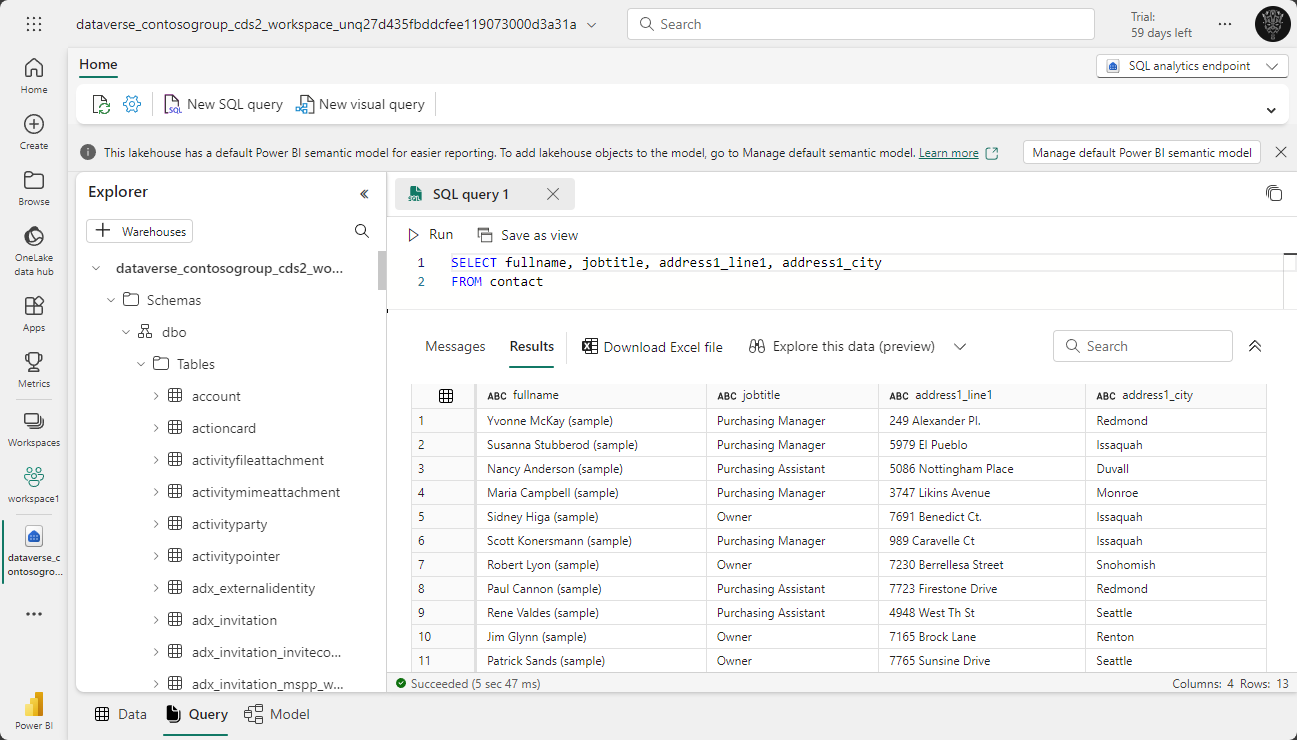

The SQL Analytics endpoint allows you to write queries by using Transact-SQL (T-SQL). You can access it by selecting the SQL Analytics endpoint in the workspace. Data analysts can write queries for reports by combining data from Dataverse and from other sources that are stored on Fabric.
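As a minimal sketch of the kind of query an analyst might write, the following Python helper composes a T-SQL statement that joins a Dataverse table with a table from another source. The `dbo` schema and every table and column name (`account`, `accountid`, `name`, `sales_orders`, `account_id`, `amount`) are assumptions for illustration; substitute the names that appear in your own lakehouse.

```python
def build_revenue_query(dataverse_table: str = "account",
                        other_table: str = "sales_orders") -> str:
    """Compose a T-SQL query that joins Dataverse data with another source.

    All schema, table, and column names are hypothetical placeholders.
    """
    # Accept only simple identifiers, because the names are
    # interpolated directly into the statement text.
    for name in (dataverse_table, other_table):
        if not name.isidentifier():
            raise ValueError(f"Not a simple table name: {name!r}")

    return (
        "SELECT a.name, SUM(o.amount) AS total_amount\n"
        f"FROM dbo.{dataverse_table} AS a\n"
        f"JOIN dbo.{other_table} AS o ON o.account_id = a.accountid\n"
        "GROUP BY a.name\n"
        "ORDER BY total_amount DESC;"
    )


if __name__ == "__main__":
    # Print the statement so that it can be pasted into a query editor.
    print(build_revenue_query())
```

The helper only builds the statement text. Running it against the endpoint is left to whichever SQL client you use.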

Default semantic model

You can use the default semantic model to build reports by using Power BI. You can access it by selecting the default semantic model in the workspace. The system provides options to explore data, create a report from scratch, automatically create a report, and create a paginated report from the semantic model. Data analysts can explore the data and then build reports of their choice by using a visual interface.

Access data by using the lakehouse

Data and analytics engineers can access data directly from the lakehouse by using the following options:

Notebooks

Custom semantic models

Notebooks

Notebooks allow you to write complex code to process and transform your data. For instance, you can use this option to build transformation logic for a data warehouse. Fabric allows you to write code in notebooks by using multiple languages, including Python, Scala, SQL, and R. You can access or create a notebook from within your lakehouse by selecting Open notebook > New notebook on the command bar.
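The following Python sketch illustrates a small transformation step of the kind a notebook might contain. It assumes that the notebook exposes a Spark session as `spark`, that the lakehouse is attached so its tables can be queried by name, and that a linked `account` table with `accountid`, `name`, and `statecode` columns exists. Treat all of these names as placeholders for your own tables.

```python
def load_active_accounts(spark, table: str = "account"):
    """Return active rows from a linked Dataverse table.

    `spark` is expected to be a SparkSession. The table and column
    names are assumptions; in Dataverse, statecode 0 usually means Active.
    """
    query = f"SELECT accountid, name FROM {table} WHERE statecode = 0"
    # spark.sql runs the statement and returns a Spark DataFrame.
    return spark.sql(query)


# Example usage inside a notebook (not run here):
# df = load_active_accounts(spark)
# df.show(10)
```

Passing the session in as a parameter, rather than relying on a global, keeps the function easy to reuse and to exercise outside a notebook.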

Custom semantic models

You can create your own semantic models by using the tables in your lakehouse. For instance, you can build a semantic model for a specific use case by only using the required tables, such as for phone call analysis at a call center. You can create a new semantic model from within your lakehouse by selecting New semantic model on the command bar.

Build apps and automations by using Dataverse virtual tables

To derive insights, you can combine the Dataverse data that's linked in Fabric with data that's ingested into Fabric from other sources. You can then bring these insights from Fabric back into Dataverse by using virtual tables. Apps and automations that you build by using Power Apps and Power Automate can then act on these virtual tables.

Next steps

After exploring how to link Dataverse data to Fabric, your next step is to learn how to manage the links to OneLake.