Windows Azure PaaS Compute Diagnostics Data

When troubleshooting a problem, one of the most important things to know is what diagnostic data is available. If you don’t know where to look for logs or other diagnostic information, you end up resorting to a trial-and-error or shotgun approach to troubleshooting. However, with access to logs you have a fair chance of troubleshooting any problem, even if it isn’t in your domain of expertise. This blog post describes the data available in Windows Azure PaaS compute environments and how to easily gather it, and it begins a series of posts discussing how to troubleshoot issues when using the Azure platform.

In conjunction with this blog post I would highly recommend reading https://blogs.msdn.com/b/kwill/archive/2011/05/05/windows-azure-role-architecture.aspx, which explains the different processes in a PaaS VM and how they interact with each other. Understanding the high-level architecture of something you are trying to troubleshoot will significantly improve your ability to resolve problems.

Troubleshooting Series (along with the major concepts or tools covered in each scenario):

- Windows Azure PaaS Compute Diagnostics Data

- AzureTools - The Diagnostic Utility used by the Windows Azure Developer Support Team

- Troubleshooting Scenario 1 - Role Recycling

  - Using Task Manager to determine which process is failing and which log to look at first

  - Windows Azure Event Logs

- Troubleshooting Scenario 2 - Role Recycling After Running Fine For 2 Weeks

  - WaHostBootstrapper.log

  - Failing startup task

  - OS restarts

- Troubleshooting Scenario 3 - Role Stuck in Busy

  - WaHostBootstrapper.log

  - Failing startup task

  - Modifying a running service

- Troubleshooting Scenario 5 - Internal Server Error 500 in WebRole

  - Browse IIS using DIP

- Troubleshooting Scenario 6 - Role Recycling After Running For Some Time

  - Deep dive on WindowsAzureGuestAgent.exe logs (AppAgentRuntime.log and WaAppAgent.log)

  - DiagnosticStore LocalStorage resource

- Troubleshooting Scenario 7 - Role Recycling

  - Brief look at WaHostBootstrapper and WindowsAzureGuestAgent logs

  - AzureTools

  - WinDBG

  - IntelliTrace

There is a new short Channel 9 video demonstrating some of the log file locations described in this post and the use of the SDP package at https://channel9.msdn.com/Series/DIY-Windows-Azure-Troubleshooting/Windows-Azure-PaaS-Diagnostics-Data.

Diagnostic Data Locations

This list includes the data sources most commonly used when troubleshooting issues in a PaaS VM, roughly ordered by importance (i.e., how frequently the log is used to diagnose issues).

- Windows Azure Event Logs - Event Viewer > Applications and Services Logs > Windows Azure

  - Contains key diagnostic output from the Windows Azure Runtime, including information such as role starts/stops, startup tasks, OnStart start and stop, OnRun start, crashes, recycles, etc.

  - This log is often overlooked because it is under the “Applications and Services Logs” folder in Event Viewer and thus not as visible as the standard Application or System event logs.

  - This one diagnostic source will help you identify the cause of several of the most common reasons Azure roles fail to start correctly: startup task failures and crashes in OnStart or OnRun.

  - Captures crashes, with callstacks, in the Azure runtime host processes that run your role entrypoint code (e.g., WebRole.cs or WorkerRole.cs).

- Application Event Logs - Event Viewer > Windows Logs > Application

  - This is standard troubleshooting for both Azure and on-premise servers. You will often find w3wp.exe related errors in these logs.

- App Agent Runtime Logs - C:\Logs\AppAgentRuntime.log

  - These logs are written by WindowsAzureGuestAgent.exe and contain information about events happening within the guest agent and the VM, such as firewall configuration, role state changes, recycles, reboots, health status changes, role stops/starts, and certificate configuration.

  - This log is useful for getting a quick overview of the events happening to a role over time, since it logs major changes to the role without logging heartbeats.

  - If the guest agent is not able to start the role correctly (e.g., a locked file prevents directory cleanup), you will see it in this log.

- App Agent Heartbeat Logs - C:\Logs\WaAppAgent.log

  - These logs are written by WindowsAzureGuestAgent.exe and contain information about the status of the health probes to the host bootstrapper.

  - The guest agent process is responsible for reporting health status (e.g., Ready, Busy) back to the fabric, so the health status reported in these logs is the same status that you will see in the Management Portal.

  - These logs are typically useful for determining the current state of the role within the VM, as well as what the state was at some time in the past. With a problem description like “My website was down from 10:00am to 11:30am yesterday”, these heartbeat logs are very useful for determining the health status of the role during that time.

- Host Bootstrapper Logs - C:\Resources\WaHostBootstrapper.log

  - This log contains entries for startup tasks (including plugins such as Caching or RDP) and health probes to the host process running your role entrypoint code (e.g., WebRole.cs code running in WaIISHost.exe).

  - A new log file is generated each time the host bootstrapper is restarted (i.e., each time your role is recycled due to a crash, recycle, VM restart, upgrade, etc.). This makes these logs an easy way to determine how often and when your role recycled.

- IIS Logs - C:\Resources\Directory\{DeploymentID}.{Rolename}.DiagnosticStore\LogFiles\Web

  - This is standard troubleshooting for both Azure and on-premise servers.

  - One key problem scenario where these logs are often overlooked is “My website was down from 10:00am to 11:30am yesterday”. The natural tendency is to blame Azure for the outage (“My site has been working fine for 2 weeks, so it must be a problem with Azure!”), but the IIS logs will often indicate otherwise. You may find increased response times immediately before the outage, or non-success status codes being returned from IIS. Either would indicate a problem within the website itself (e.g., in the ASP.NET code running in w3wp.exe) rather than an Azure issue.

- Performance Counters - perfmon, or Windows Azure Diagnostics

  - This is standard troubleshooting for both Azure and on-premise servers.

  - The interesting aspect of these logs in Azure is that, assuming you have set up Windows Azure Diagnostics (WAD) ahead of time, you will often have valuable performance counters for troubleshooting problems that occurred in the past (e.g., "My website was down from 10:00am to 11:30am yesterday").

  - Outside of specific problems where you are gathering specific performance counters, the performance counters gathered by WAD are most commonly used to look for two patterns. The first is regular entries, then a period with no entries, then regular entries resuming, which indicates that the VM was potentially not running. The second is 100% CPU, which usually indicates an infinite loop or some other logic problem in the website code itself.

- HTTP.SYS Logs - D:\Windows\System32\LogFiles\HTTPERR

  - This is standard troubleshooting for both Azure and on-premise servers.

  - Like the IIS Logs, these are often overlooked but very important when troubleshooting a hosted service website that is not responding. Often the cause is IIS being unable to process the volume of incoming requests, and the evidence of this will usually show up in the HTTP.SYS logs.

- IIS Failed Request Log Files - C:\Resources\Directory\{DeploymentID}.{Rolename}.DiagnosticStore\FailedReqLogFiles

  - This is standard troubleshooting for both Azure and on-premise servers.

  - This is not turned on by default in Windows Azure and is not frequently used. However, if you are troubleshooting IIS/ASP.NET specific issues, you should consider turning on Failed Request Tracing (FREB) to get additional details.

- Windows Azure Diagnostics Tables and Configuration - C:\Resources\Directory\{DeploymentID}.{Rolename}.DiagnosticStore\Monitor

  - This is the local on-VM cache of the Windows Azure Diagnostics (WAD) data. WAD captures the data as you have configured it, stores it in custom .TSF files on the VM, and then transfers it to storage based on the scheduled transfer period you have specified.

  - Because the data is stored in the custom .TSF format, the cached WAD data itself is of limited use. However, this folder also contains the diagnostics configuration files, which are useful for troubleshooting issues when Windows Azure Diagnostics itself is not working correctly. Look in the Configuration folder for a file called config.xml, which includes the configuration data for WAD. If WAD is not working correctly, check this file to make sure it reflects the way you expect WAD to be configured.

- Windows Azure Caching Log Files - C:\Resources\Directory\{DeploymentID}.{Rolename}.DiagnosticStore\AzureCaching

  - These logs contain detailed information about Windows Azure role-based caching and can help troubleshoot issues where caching is not working as expected.

- WaIISHost Logs - C:\Resources\Directory\{DeploymentID}.{Rolename}.DiagnosticStore\WaIISHost.log

  - This contains logs from the WaIISHost.exe process, which is where your role entrypoint code (e.g., WebRole.cs) runs for web roles. Most of this information is also included in other logs covered above (e.g., the Windows Azure Event Logs), but you may occasionally find additional useful information here.

- IISConfigurator Logs - C:\Resources\Directory\{DeploymentID}.{Rolename}.DiagnosticStore\IISConfigurator.log

  - This contains information about the IISConfigurator process, which performs the actual IIS configuration of your website according to the model you have defined in the service definition files.

  - This process rarely fails or encounters errors, but if IIS/w3wp.exe does not seem to be set up correctly for your service, this log is the place to check.

- Role Configuration Files - C:\Config\{DeploymentID}.{DeploymentID}.{Rolename}.{Version}.xml

  - This contains information about the configuration for your role, such as settings defined in the ServiceConfiguration.cscfg file, LocalResource directories, DIP and VIP IP addresses and ports, certificate thumbprints, load balancer probes, other instances, etc.

  - Like the Role Model Definition File, this is not a log file containing runtime-generated information, but it can be useful for confirming that your service is being configured the way you expect.

- Role Model Definition File - E:\RoleModel.xml (or F:\RoleModel.xml)

  - This contains information about how your service is defined according to the Azure Runtime. In particular, it contains an entry for every startup task and how that task will be run (e.g., background, environment variables, location). You will also be able to see how your `<sites>` element is defined for a web role.

  - This is not a log file containing runtime-generated information, but it will help you validate that Azure is running your service as you expect. This is often helpful when a developer has one version of the service definition on their development machine, but the build/package server is using a different version of the service definition files.
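The WAD performance counter check described above (regular entries, a period with no entries, then entries resuming) is easy to automate once you have the sample timestamps. The following Python sketch makes several assumptions. First, you have already exported the timestamps of one counter into a list of `datetime` values, for example from the WADPerformanceCountersTable that WAD transfers to storage. Second, `find_sample_gaps`, its `tolerance` parameter, and the sample data are hypothetical illustrations and not part of WAD.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def find_sample_gaps(timestamps: List[datetime],
                     sample_interval: timedelta,
                     tolerance: float = 2.0) -> List[Tuple[datetime, datetime]]:
    """Return (last sample before gap, first sample after gap) pairs.

    A gap is any pair of consecutive samples further apart than
    tolerance * sample_interval. The tolerance absorbs small timing
    jitter between samples (hypothetical default; tune it for your data).
    """
    ordered = sorted(timestamps)
    threshold = sample_interval * tolerance
    gaps = []
    for previous, current in zip(ordered, ordered[1:]):
        if current - previous > threshold:
            gaps.append((previous, current))
    return gaps


if __name__ == "__main__":
    # Hypothetical data: one sample per minute, then nothing for about 90 minutes.
    start = datetime(2014, 11, 23, 10, 0)
    samples = [start + timedelta(minutes=m) for m in (0, 1, 2, 3, 95, 96, 97)]
    for before, after in find_sample_gaps(samples, timedelta(minutes=1)):
        print(f"No samples between {before:%H:%M} and {after:%H:%M}")
```

Run as a script, this prints `No samples between 10:03 and 11:35`. A gap only shows that no samples were recorded. Before concluding that the VM was down, cross-check that time window against the heartbeat logs (WaAppAgent.log) and the host bootstrapper logs.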

* A note about ETL files

If you look in the C:\Logs folder you will find RuntimeEvents_{iteration}.etl and WaAppAgent_{iteration}.etl files. These are ETW traces containing a compilation of the information found in the Windows Azure Event Logs, the guest agent logs, and other logs. They are a very convenient compilation of the most important log data in an Azure VM, but because they are in ETL format, consuming the information requires a few extra steps. If you have a favorite ETW viewing tool, you can skip several of the log files mentioned above and just look at the information in these two ETL files.
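One way to take those extra steps is the built-in Windows tracerpt.exe tool, which can convert an .etl file to CSV, XML, or EVTX. The Python sketch below is meant for ETL files copied off the VM and rests on several assumptions. It assumes `{iteration}` is a plain integer. It groups the two ETL series in iteration order and builds (but does not run) a tracerpt command line for each file. The helper names are hypothetical. Whether every event decodes into readable fields depends on the provider metadata available on the machine where you run tracerpt.

```python
import re
from pathlib import PureWindowsPath
from typing import Dict, List

# Assumption: names look like RuntimeEvents_12.etl or WaAppAgent_3.etl,
# where the iteration is an integer.
ETL_NAME = re.compile(r"^(RuntimeEvents|WaAppAgent)_(\d+)\.etl$")


def group_etl_files(file_names: List[str]) -> Dict[str, List[str]]:
    """Group the two ETL series by prefix, each sorted by numeric iteration."""
    matched = []
    for name in file_names:
        m = ETL_NAME.match(name)
        if m:
            matched.append((m.group(1), int(m.group(2)), name))
    groups: Dict[str, List[str]] = {}
    # Tuples sort by prefix first, then by the integer iteration.
    for prefix, _iteration, name in sorted(matched):
        groups.setdefault(prefix, []).append(name)
    return groups


def tracerpt_command(etl_path: str, output_dir: str) -> List[str]:
    """Build a tracerpt.exe command that writes <name>.csv into output_dir."""
    etl = PureWindowsPath(etl_path)
    csv_path = PureWindowsPath(output_dir) / (etl.stem + ".csv")
    # -o: output file, -of: output format, -y: answer yes to prompts without asking
    return ["tracerpt", str(etl), "-o", str(csv_path), "-of", "CSV", "-y"]


if __name__ == "__main__":
    names = ["WaAppAgent_2.etl", "RuntimeEvents_10.etl", "RuntimeEvents_2.etl", "readme.txt"]
    logs_dir = PureWindowsPath(r"C:\Logs")
    for prefix, files in group_etl_files(names).items():
        for name in files:
            print(tracerpt_command(str(logs_dir / name), r"C:\Temp\EtlCsv"))
```

On a Windows machine you could pass each command list to `subprocess.run(cmd, check=True)` and then open the resulting CSV files in the tool of your choice.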

Gathering The Log Files For Offline Analysis and Preservation

In most circumstances you can analyze all of the log files while you are RDPed onto the VM during a live troubleshooting session, without needing to gather them into one central location. However, in several scenarios you want to easily gather all of the log files and save them off the VM. You may need them for analysis by someone else, or you may want to preserve them for later analysis so that you can redeploy your hosted service and restore your application's functionality.

There are three ways to quickly gather diagnostics logs from a PaaS VM:

- The easiest way is to simply RDP to the VM and run CollectGuestLogs.exe. CollectGuestLogs.exe ships with the Azure Guest Agent, which is present on all PaaS VMs and most IaaS VMs, and it creates a ZIP file of the logs from the VM. For PaaS VMs, this file is located at D:\Packages\GuestAgent\CollectGuestLogs.exe. For IaaS VMs, it is located at C:\WindowsAzure\Packages\CollectGuestLogs.exe. Note that CollectGuestLogs collects IIS logs by default, which can be quite large for long-running web roles. To prevent IIS log collection, run "CollectGuestLogs.exe -Mode:ga". For more information, run "CollectGuestLogs.exe -?".

- If you want to collect logs without having to RDP to the VM, or collect logs from multiple VMs at once, you can run the Azure Log Collector Extension from your local dev machine. For more information see https://azure.microsoft.com/blog/2015/03/09/simplifying-virtual-machine-troubleshooting-using-azure-log-collector/.

- The older way (before CollectGuestLogs existed) is to use the SDP package created by the Azure support teams. See below for instructions on using this package.
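If you run CollectGuestLogs.exe from a script rather than by hand, the first option above can be captured in a small helper. This helper chooses between the PaaS and IaaS paths and optionally adds the `-Mode:ga` switch. This is a hypothetical Python sketch: the paths and the switch come from this post and may differ on newer guest agent versions, so check `CollectGuestLogs.exe -?` on your VM.

```python
import subprocess
from typing import List

# Locations given in this post; newer guest agent versions may differ.
PAAS_COLLECTOR = r"D:\Packages\GuestAgent\CollectGuestLogs.exe"
IAAS_COLLECTOR = r"C:\WindowsAzure\Packages\CollectGuestLogs.exe"


def collect_guest_logs_command(is_paas: bool, include_iis_logs: bool = True) -> List[str]:
    """Build the CollectGuestLogs.exe command line for this kind of VM."""
    exe = PAAS_COLLECTOR if is_paas else IAAS_COLLECTOR
    command = [exe]
    if not include_iis_logs:
        # IIS logs are collected by default and can be large on long-running
        # web roles; -Mode:ga skips them.
        command.append("-Mode:ga")
    return command


def run_collector(is_paas: bool, include_iis_logs: bool = True) -> None:
    """Run the collector on the VM itself (Windows only); it creates a ZIP of the logs."""
    subprocess.run(collect_guest_logs_command(is_paas, include_iis_logs), check=True)
```

Keeping the command construction separate from `run_collector` lets you check which command would run before executing anything on a production VM.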

Using the older SDP package

The Windows Azure Developer support team has created an SDP (Support Diagnostics Platform) script that automatically gathers all of the above information into a .CAB file. This makes it easy to transfer the necessary logs to a support professional for analysis during a support incident. The same SDP package is also available outside of a normal support incident via the following downloads:

- Windows Azure Guest OS Family 2 & 3 (Windows Server 2008 R2 and Windows Server 2012, PowerShell v2) - 2625.CTS_AzurePaaSLogs_global.DiagCab

- Windows Azure Guest OS Family 1 (Windows Server 2008, PowerShell v1) - 5635.CTS_AzurePaaSLogs_en-US_OSFamily1.EXE

* You can find more information about the SDP package at https://support.microsoft.com/kb/2772488.

Obtaining the SDP Package for Windows Azure Guest OS Family 2 & 3

Obtaining and running the SDP Package is easy in Windows Server 2008 R2 or 2012.

- RDP to the Azure VM

- Open PowerShell

- Copy, paste, and run the following script:

```powershell
# Create the download folder and the folder that will receive the results
md c:\Diagnostics
md $env:LocalAppData\ElevatedDiagnostics\1239425890
# Load BITS support and open Explorer on the results folder
Import-Module bitstransfer
explorer $env:LocalAppData\ElevatedDiagnostics\1239425890
# Download the SDP package, then launch it
Start-BitsTransfer https://dsazure.blob.core.windows.net/azuretools/AzurePaaSLogs_global-Windows2008R2_Later.DiagCab c:\Diagnostics\AzurePaaSLogs_global-Windows2008R2_Later.DiagCab
c:\Diagnostics\AzurePaaSLogs_global-Windows2008R2_Later.DiagCab
```

This script will do the following:

- Create the folders C:\Diagnostics and %LocalAppData%\ElevatedDiagnostics\1239425890

- Load the BitsTransfer module

- Open Windows Explorer to the folder that will contain the CAB file after the package has completed running

- Download the SDP package to C:\Diagnostics\AzurePaaSLogs_global-Windows2008R2_Later.DiagCab

- Launch the downloaded .DiagCab package

Obtaining the SDP Package for Windows Azure Guest OS Family 1

If you are on Windows Azure Guest OS Family 1, or you prefer not to download and run the file directly from within your Azure VM, you can download the appropriate file to an on-premise machine. You can then copy it to the Azure VM when you are ready to run it, using standard copy/paste [Ctrl+C, Ctrl+V] of the file between your machine and the Azure VM in the RDP session.

Using the SDP Package

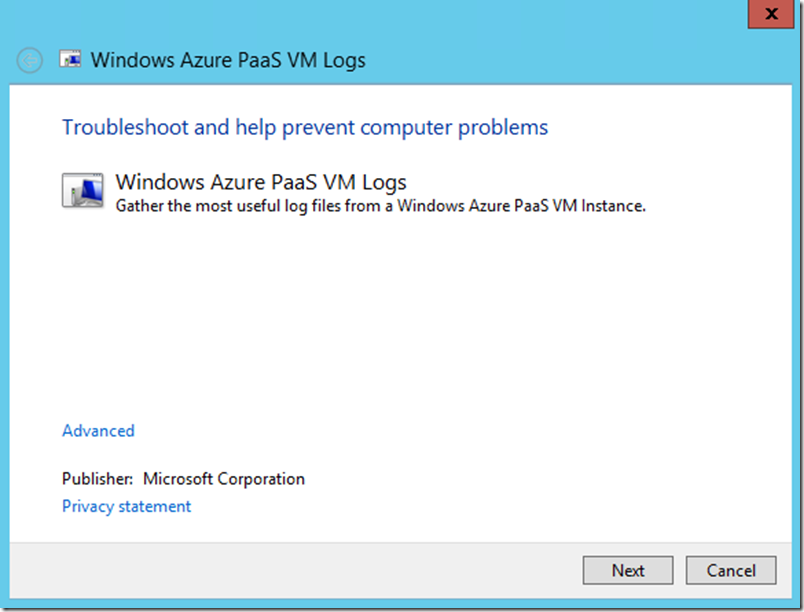

The SDP package will present you with a standard wizard with Next/Cancel buttons.

- The first screen has an ‘Advanced’ option that allows you to check or uncheck the ‘Apply repairs automatically’ option. This option has no impact on this SDP package, since the package only gathers data and does not make any changes or repairs to the Azure VM. Click Next.

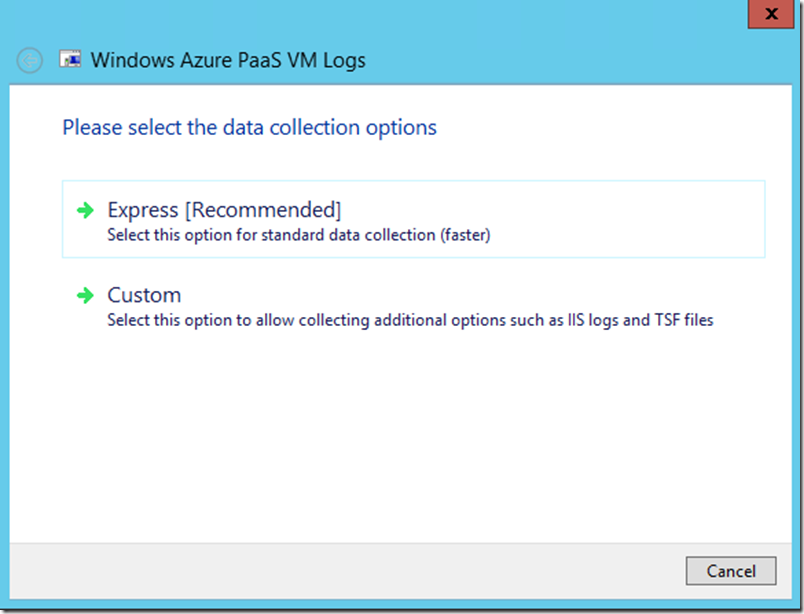

- The next screen gives you the option for ‘Express [Recommended]’ or ‘Custom’. If you choose Custom you will have the option to gather WAD (*.tsf) files and IIS log files. You will typically not gather the WAD *.tsf files since you have no way to analyze the custom format, but depending on the issue you are troubleshooting you may want to gather the IIS log files. Click Next.

- The SDP Package will now gather all of the files into a .CAB file. Depending on how long the VM has been running and how much data is in the various log files this process could take several minutes.

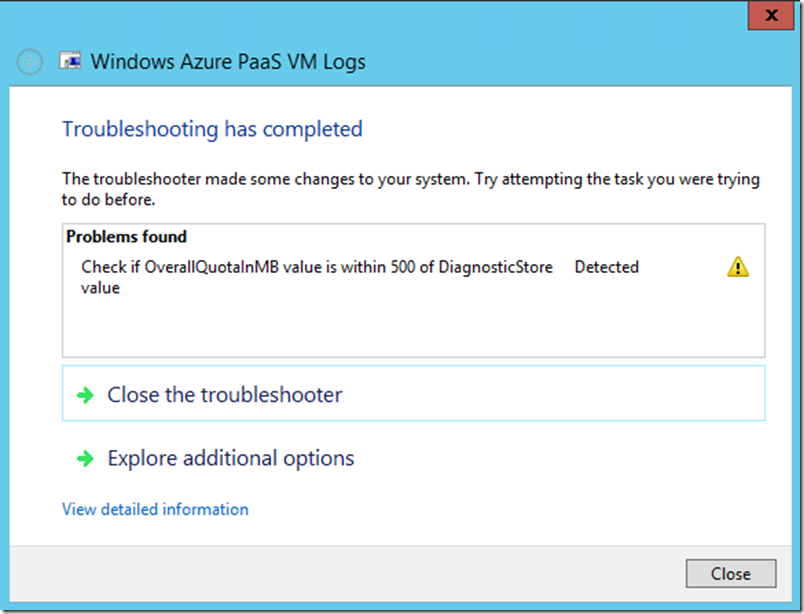

- Once the files have been gathered you will be presented with a screen showing any common problems that have been detected. You can click the ‘Show Additional Information’ button to see a full report with additional details about any detected common problems. The same report is also available within the .CAB file itself, so you can view it later. Click ‘Close’.

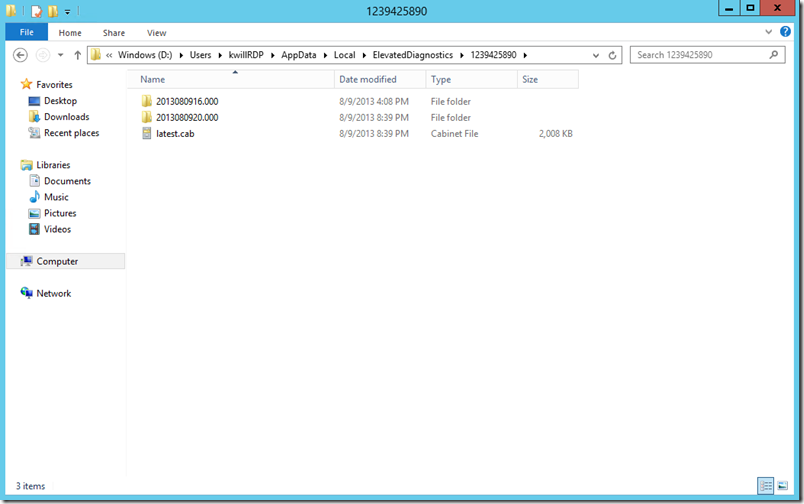

- At this point the CAB file will be available in the %LocalAppData%\ElevatedDiagnostics folder. You will notice a ‘latest.cab’ file along with one or more subfolders. The subfolders contain the timestamped results from every time you run the SDP package, while the latest.cab file contains the results from the most recent execution of the SDP package.

- You can now copy the latest.cab file from the VM to your on-premise machine for preservation or off-line analysis.

Comments

Anonymous

November 23, 2014

Excellent article Kevin! It helps a lot :) Just a little correction in the "Using the SDP Package" session where you mention where the CAB file will be available: %LocalAppData%\ElevatedDiagnostics (It's written "Eleveated" in the post. I've tried here and got an error :p ) Thanks again for the Post Kevin. You Rock!

Anonymous

December 03, 2014

Thanks for catching that typo Leonardo! It has been fixed.

Anonymous

July 08, 2015

This is excellent and still relevant. Thanks for writing this post Kevin.