3 Steps to Build Your First Intelligent App – Conference Buddy

This post is authored by Wilson Lee, Senior Software Engineer at Microsoft.

Imagine yourself at a conference, attending a session with hundreds of other enthusiastic developers. You put away your phone to pay attention to the speaker, who is talking about the latest cutting-edge technologies. As you learn about the topic, you start to gather a list of questions you would like answered right away. But the timing never seems right to ask them. Maybe it's not Q&A time yet. Maybe you are not thrilled to speak up in front of so many fellow attendees. Or maybe, even if you raised your hand or stood in line during the Q&A period, you were not picked. As a result, you did not have the full learning experience that you felt you deserved.

Even as digital transformation sweeps through every business and industry, we can't help but ask – can AI help in the conference experience above? Can today's manual interaction between speaker and attendees be infused with intelligence to create a more satisfying Q&A experience?

The core of Q&A is a conversation between the speaker and attendees, and – as conversational AI tools gain rapid popularity – they offer an opportunity to elevate the Q&A experience. There are several popular chatbot services that allow us to interact in more natural ways. In addition to conversational AI, there are also pre-built AI tools such as cognitive services which help developers bring human-level cognition into their applications and are a great way for most developers to get started on their AI journey.

In this blog post, we wish to introduce Conference Buddy, an end-to-end intelligent application that is infused with both conversational and pre-built AI capabilities. Conference Buddy showcases one simple example of how AI can help facilitate more effective Q&A experiences at conferences and presentations with large audiences.

We offer three simple steps to develop an intelligent application, using Conference Buddy as our example. We show you the overall AI Oriented Architecture used, with a microservices pattern, and then how to develop the intelligence of the application as an abstraction; how to build the user interface for the attendees to ask questions; and how to create data insights for the speaker, so they can effectively view, search and filter attendees' questions while on stage.

[embed]https://www.youtube.com/watch?v=LSlipMoz2vY[/embed]

Sample application code for Conference Buddy can be found here, and an in-depth look into this application is available in the free e-book, Developer's Guide to Building AI Applications, here.

Architecture

We break down the Q&A experience into these components: attendees with questions need a user interface through which to ask them; the speaker needs to view attendees' questions and get insights into that data; and finally, some intelligence is needed to improve the overall effectiveness of the interaction.

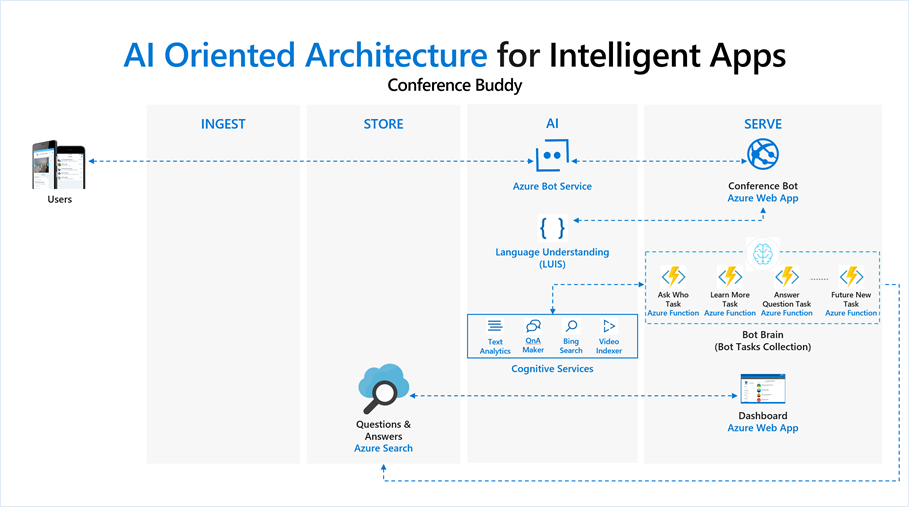

Conference Buddy is designed with what we call an AI Oriented Architecture to incorporate the different components used for intelligence, user interface and data insights. The architecture follows a microservices pattern where the components are loosely coupled. Each component is designed to handle a single responsibility and is fully autonomous. The benefit of this design pattern is that each component can be built, deployed and scaled independently.

Figure 1: AI Oriented Architecture of Conference Buddy.

We build the Conference Buddy in three steps, shown in Figure 1:

- Build the Intelligence: The intelligence is represented by the Bot Brain, an abstraction that encapsulates the business logic of the app with a collection of Bot Tasks. Each bot task is independent and is responsible for applying intelligence to attendees' questions, generating relevant answers automatically, and storing the questions in a data store.

- Build the User Interface: The user interface is represented by the Conference Bot, a chatbot that allows attendees to ask questions and receive relevant answers across a variety of channels.

- Build the Data Insights: Data insights are represented by the Speaker's Dashboard, an intelligent application that allows the speaker and moderators to view, search and filter attendees' questions while on stage. The questions can be projected on a screen or shown on any mobile device.

We explore each step in the sections that follow.

Step 1: Intelligence – Bot Brain

The first step in building the Conference Buddy intelligent application is to start with the Bot Brain. The bot brain is the intelligence abstraction that powers the entire end-to-end application, and it can be called by any other component with valid authentication. The design of the bot brain rests on two concepts: it is a collection of Bot Tasks, each of which applies intelligence to the request and provides a response independently of the others; and it uses a Data Contract as the standard request and response for all bot tasks.

Bot Task

A Bot Task within the Bot Brain is a function of the business logic that can take advantage of applying intelligence to process the request. Each bot task is independent of other bot tasks; and it can be deployed to the same service, or into a cluster of services to be scaled independently from one another. This is one of the ways we implemented microservices in our architecture. If one of the bot tasks returns an error, it is much easier to debug and fix the source of the issue without affecting the other bot tasks or components in the system.
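To make the independence of bot tasks concrete, here is a minimal sketch in Python. It is not the Conference Buddy code: the task functions, the `TASKS` registry and `run_task` are hypothetical names we introduce for illustration, and the tasks are local stubs rather than calls to any Azure service. The sketch shows one task failing while the other still answers.

```python
from typing import Callable, Dict

# Hypothetical task signature: a question in, an answer out.
BotTask = Callable[[str], str]


def ask_who_task(question: str) -> str:
    # Stand-in for a lookup of predefined answers about the speaker.
    return "The speaker's bio would be returned here."


def learn_more_task(question: str) -> str:
    # Stand-in for a task whose backing service is currently failing.
    raise RuntimeError("search service unavailable")


# Each task is registered under its own name and knows nothing about the others.
TASKS: Dict[str, BotTask] = {
    "ask_who": ask_who_task,
    "learn_more": learn_more_task,
}


def run_task(name: str, question: str) -> dict:
    """Run one task and contain any failure to that task alone."""
    try:
        return {"task": name, "ok": True, "answer": TASKS[name](question)}
    except Exception as exc:
        # Report which task failed instead of crashing the caller.
        return {"task": name, "ok": False, "error": str(exc)}


results = [run_task(name, "Who is the speaker?") for name in TASKS]
for result in results:
    print(result)
```

Because the failure is reported per task, the source of an error is easy to locate, and the healthy task keeps serving answers.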

With Conference Buddy, each bot task is deployed as an Azure Function and there are three sample bot tasks:

- Ask Who Task: This task leverages Azure Cognitive Services' QnA Maker to provide predefined answers to the attendee's question if they want to learn more about the speaker.

- Learn More Task: This task leverages Azure Cognitive Services' Video Indexer and Bing Search to automatically answer the attendee's question with the world's knowledge.

- Answer Question Task: This task leverages Azure Cognitive Services' Text Analytics to extract information from the attendees' questions and store the results into Azure Search.

Here are some resources to get started with Azure Functions, QnA Maker, Video Indexer, Bing Search, Text Analytics, and Azure Search.

For Conference Buddy to truly benefit from the microservices architecture, the components cannot be tightly coupled. The user interface and the data insights components are unaware of what the bot tasks require to invoke the intelligent services, nor do any of the bot tasks understand the inner workings of other components. All they need is a standard request and response which both the bot tasks and caller components understand. This is where the Data Contract comes in.

Data Contract

The Data Contract is a standard request and response which all tasks within the Bot Brain and the caller components understand. Without the Data Contract, there would be no abstraction layer at all. The Data Contract acts as the formal agreement between the Bot Brain and its caller components and abstractly describes the data to be exchanged.
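As an illustration of what such a contract might look like, here is a small Python sketch. The class names and every field (`session_id`, `task`, `question`, `answers`, `error`) are our assumptions for this example; the actual Conference Buddy contract may differ. The idea is that both sides share one request shape and one response shape and exchange them as JSON.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class BotRequest:
    # All field names are assumptions made for this sketch.
    session_id: str
    task: str
    question: str


@dataclass
class BotResponse:
    session_id: str
    task: str
    answers: List[str] = field(default_factory=list)
    error: str = ""


def to_json(message) -> str:
    """Serialize a request or response to the shared wire format."""
    return json.dumps(asdict(message), sort_keys=True)


def request_from_json(payload: str) -> BotRequest:
    """Parse a request; unknown or missing fields raise TypeError."""
    return BotRequest(**json.loads(payload))


wire = to_json(BotRequest("session-1", "ask_who", "Who is the speaker?"))
decoded = request_from_json(wire)
print(wire)
```

A caller only needs to know these two shapes. It does not need to know which cognitive service a bot task uses behind the contract.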

Step 2: User Interface – Conference Bot

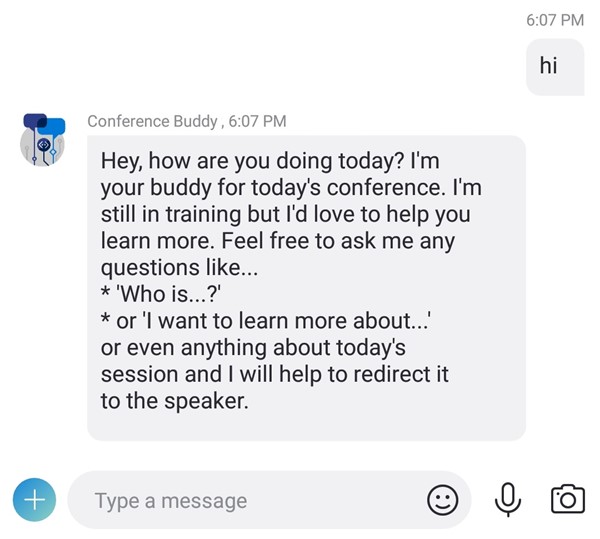

The second step is to build a user interface that attendees can easily use to ask questions. For Conference Buddy, a Conference Bot is built using the Azure Bot Service, which provides the ability to add naturally fluid conversations as part of the Q&A experience. This also allows the attendees to communicate with the conference bot on multiple channels and devices.

The main responsibility of the Conference Bot is to handle messaging. It receives the attendee's question, determines its intent using LUIS for language understanding, invokes the Bot Brain with the request Data Contract, and replies to the attendee by transforming the response Data Contract into the message format of each channel.
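The messaging flow described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the bot's real code: `detect_intent` is a keyword stand-in and does not call LUIS, `call_bot_brain` is a local stub for the remote Bot Brain, and the channel names and formatters are hypothetical.

```python
import html
from typing import Callable, Dict


def detect_intent(text: str) -> str:
    # Keyword stand-in for a language-understanding service; not the LUIS API.
    lowered = text.lower()
    if lowered.startswith("who"):
        return "ask_who"
    if "learn more" in lowered or lowered.startswith("what is"):
        return "learn_more"
    return "answer_question"


def call_bot_brain(request: Dict[str, str]) -> Dict[str, str]:
    # Local stub for the remote Bot Brain call; echoes the chosen task.
    answer = f"[{request['task']}] reply to: {request['question']}"
    return {"task": request["task"], "answer": answer}


# One formatter per channel; the channel names are hypothetical.
FORMATTERS: Dict[str, Callable[[str], str]] = {
    "sms": lambda answer: answer[:160],
    "web": lambda answer: f"<p>{html.escape(answer)}</p>",
}


def handle_message(channel: str, text: str) -> str:
    """Receive a question, pick a task, call the brain, format the reply."""
    request = {"task": detect_intent(text), "question": text}
    response = call_bot_brain(request)
    return FORMATTERS[channel](response["answer"])


reply = handle_message("web", "Who is the speaker?")
print(reply)
```

Keeping channel formatting in the bot, and out of the Bot Brain, is what lets the same response serve every channel.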

Figure 2: Greetings from the Conference Bot, during the attendee's first interaction.

Here are some resources to create a chatbot with Azure Bot Service and LUIS.

Step 3: Data Insights – Speaker's Dashboard

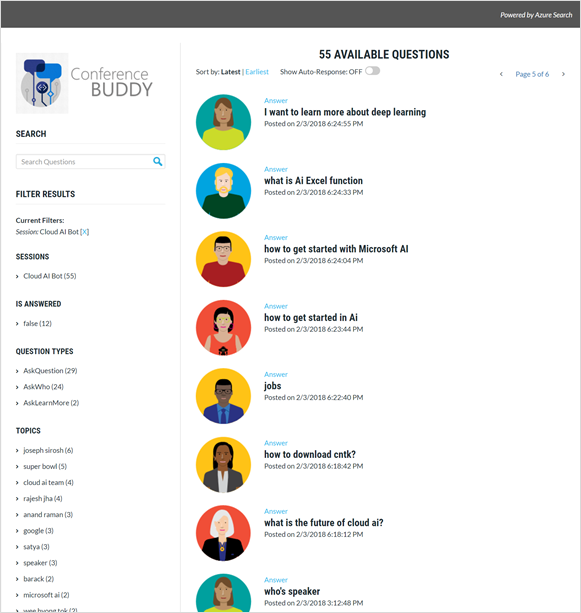

The last step in building the Conference Buddy intelligent application is the data insights component for the speaker and moderators: the Speaker's Dashboard. The speaker and the moderators use the Speaker's Dashboard to view, search, and filter the attendees' questions, which can be projected on stage or shown on their devices.

The Speaker's Dashboard is built as an Azure Web App, which retrieves the questions that the Bot Brain has processed and stored in Azure Search.
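To show the kind of query the dashboard needs, here is a small in-memory sketch in Python. It does not use the Azure Search SDK: the list `QUESTIONS` stands in for the search index, and the `topic` and `sentiment` fields are hypothetical examples of attributes a bot task might have extracted before storing a question.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class StoredQuestion:
    text: str
    topic: str        # hypothetical field, e.g. a key phrase extracted upstream
    sentiment: float  # hypothetical score between 0 and 1


# In-memory stand-in for the search index.
QUESTIONS = [
    StoredQuestion("How do bot tasks scale?", "scaling", 0.8),
    StoredQuestion("Why is the demo slow?", "performance", 0.2),
    StoredQuestion("Can tasks scale independently?", "scaling", 0.6),
]


def search(
    questions: List[StoredQuestion],
    keyword: str = "",
    topic: Optional[str] = None,
) -> List[StoredQuestion]:
    """Keep questions containing the keyword and, if given, matching the topic."""
    keyword = keyword.lower()
    return [
        q for q in questions
        if keyword in q.text.lower() and (topic is None or q.topic == topic)
    ]


scaling = search(QUESTIONS, keyword="scale", topic="scaling")
for question in scaling:
    print(question.text)
```

In the real application, a search service would do the matching and filtering on the server side. The sketch only shows the shape of the view, search and filter operations.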

Figure 3: Speaker's Dashboard that can be projected on stage or shown on mobile devices.

Here is a sample web app with Azure Search .NET and some resources to create an Azure Web App and access Azure Search.

Develop your Intelligent Application

In this blog post, we have shown you an overview of Conference Buddy's AI Oriented Architecture with a microservices pattern; how we abstracted the intelligence of the application into the Bot Brain; how we built a Conference Bot that is mainly responsible for messaging; and how we provide insights into the attendees' questions so that the speaker can view, search, and filter them on stage. But this is just the start. Digital transformation with AI is only scratching the surface. There are endless possibilities for intelligent applications that enable better experiences in many aspects of our lives. We can't wait to see what creative intelligent applications you and other developers will build next.

Wilson

Resources

- Microsoft Channel 9's AI Show, Building an Intelligent Bot: Conference Buddy for this blog post can be found here.

- Deep-dive into Conference Buddy sample application code for this blog post can be found here.

- An in-depth look into Conference Buddy can be found within the free e-book: Developer's Guide to Building AI Applications here.

Acknowledgement

Thanks to Wee Hyong Tok from Microsoft for his guidance with this post.