Create HPC Pack Linux RDMA Cluster in Azure

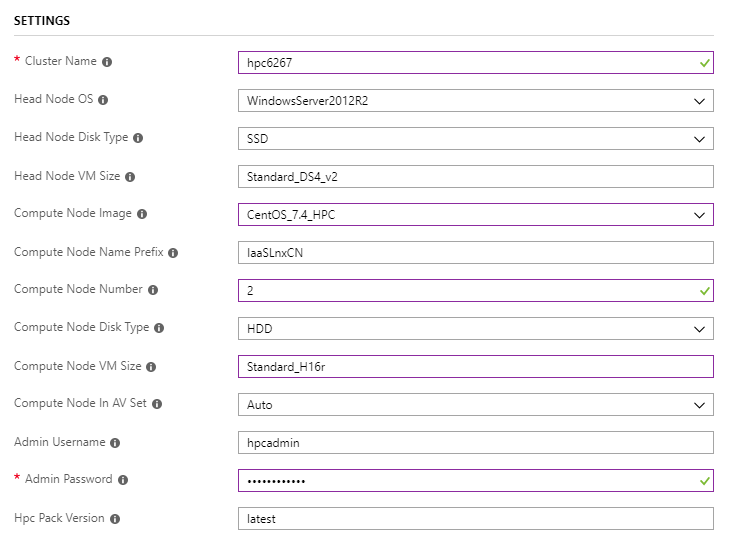

Deploy a cluster with ARM Template

Use the "Single head node cluster for Linux workloads" template to deploy the cluster.

Note that the Compute Node Image should be one with the suffix HPC, and the Compute Node VM Size should be Standard_H16r or Standard_H16mr in the H-series, so that the cluster is RDMA-capable.
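The two template parameters above are the whole RDMA requirement at deployment time. A minimal sketch of a pre-deployment check is shown below. It assumes the image name and VM size are available as plain strings (for example copied from a parameters file). The function name `is_rdma_ready` is hypothetical, and the only accepted sizes are the two listed in this guide.

```bash
#!/bin/bash
# Hypothetical pre-deployment check for the ARM template parameters.
# Returns 0 only when the Compute Node Image name ends in "HPC" and the
# Compute Node VM Size is one of the H-series sizes named in this guide.
is_rdma_ready() {
  local image="$1" size="$2"
  # The image must carry the HPC suffix.
  case "$image" in
    *HPC) ;;
    *) return 1 ;;
  esac
  # The VM size must be one of the RDMA-capable sizes listed above.
  case "$size" in
    Standard_H16r|Standard_H16mr) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `is_rdma_ready CentOS_7.4_HPC Standard_H16r && echo ok` prints `ok`, while a non-HPC image or a non-"r" size makes the function return 1.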

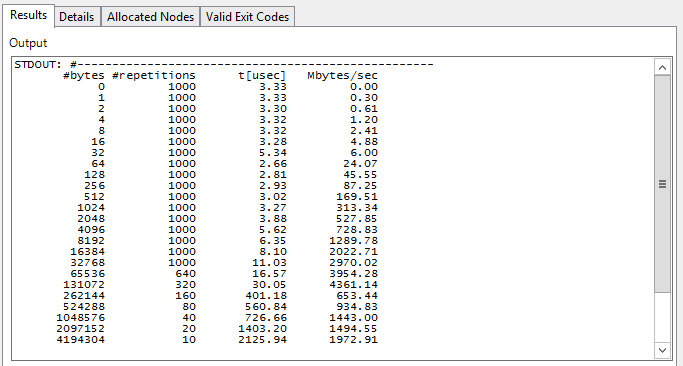

Run Intel MPI Benchmark Pingpong

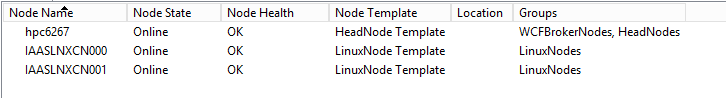

Log on to the head node hpc6267 and bring the nodes online.

Submit a job to run MPI Pingpong among Linux compute nodes

job submit /numnodes:2 "source /opt/intel/impi/`ls /opt/intel/impi`/bin64/mpivars.sh && mpirun -env I_MPI_FABRICS=shm:dapl -env I_MPI_DAPL_PROVIDER=ofa-v2-ib0 -env I_MPI_DYNAMIC_CONNECTION=0 -env I_MPI_FALLBACK_DEVICE=0 -f $CCP_MPI_HOSTFILE -ppn 1 IMB-MPI1 pingpong | tail -n30"

The host file or machine file for an MPI task is generated automatically.

The environment variable $CCP_MPI_HOSTFILE can be used in the task command to get the file name.

The environment variable $CCP_MPI_HOSTFILE_FORMAT can be set to specify the format of the host file or machine file. The default host file format is:

    nodename1
    nodename2
    …
    nodenameN

When $CCP_MPI_HOSTFILE_FORMAT=1, the format is:

    nodename1:4
    nodename2:4
    …
    nodenameN:4

When $CCP_MPI_HOSTFILE_FORMAT=2, the format is:

    nodename1 slots=4
    nodename2 slots=4
    …
    nodenameN slots=4

When $CCP_MPI_HOSTFILE_FORMAT=3, the format is:

    nodename1 4
    nodename2 4
    …
    nodenameN 4
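To make the four formats concrete, the sketch below prints a host file in each style. It makes three assumptions:
- The "4" in the examples is the number of cores allocated on each node.
- Every node gets the same count. Real files generated by HPC Pack reflect the actual allocation.
- Entries are written one per line.

The function `make_hostfile` is hypothetical and only mirrors the formats described above. An empty first argument stands for "$CCP_MPI_HOSTFILE_FORMAT not set".

```bash
#!/bin/bash
# Hypothetical illustration of the host file formats selected by
# CCP_MPI_HOSTFILE_FORMAT. Usage: make_hostfile FORMAT CORES node1 [node2 ...]
# FORMAT "" means the variable is unset (default format).
make_hostfile() {
  local format="$1" cores="$2" node
  shift 2
  for node in "$@"; do
    case "$format" in
      "") echo "$node" ;;                 # default: node name only
      1)  echo "$node:$cores" ;;          # e.g. Intel MPI / MPICH machinefile style
      2)  echo "$node slots=$cores" ;;    # e.g. Open MPI hostfile style
      3)  echo "$node $cores" ;;          # name followed by a count
      *)  echo "unknown format: $format" >&2; return 1 ;;
    esac
  done
}
```

The MPI tasks in the OpenFOAM job later in this guide set CCP_MPI_HOSTFILE_FORMAT=1 and pass the file with `-machinefile`, which corresponds to the `node:count` style.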

Check task result in HPC Pack 2016 Cluster Manager
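The pingpong command above finds Intel MPI with `` `ls /opt/intel/impi` ``. This only works while exactly one version directory exists. After a newer Intel MPI is installed for OpenFOAM, the listing may return several names, and the expanded path would no longer point at a single mpivars.sh.

A minimal sketch of a version-aware lookup follows. It assumes:
- Version directories sit directly under /opt/intel/impi, each with bin64/mpivars.sh.
- GNU `sort -V` is available.

The function name `find_mpivars` is hypothetical. The older version string used in the test is a placeholder, since this guide does not state which version the image ships.

```bash
#!/bin/bash
# Hypothetical helper: print the mpivars.sh path of the highest Intel MPI
# version found under ROOT (default /opt/intel/impi).
find_mpivars() {
  local root="${1:-/opt/intel/impi}" latest
  # One entry per line, sorted as version numbers; keep the highest.
  latest="$(ls -1 "$root" | sort -V | tail -n 1)"
  if [ -z "$latest" ] || [ ! -f "$root/$latest/bin64/mpivars.sh" ]; then
    echo "no mpivars.sh found under $root" >&2
    return 1
  fi
  echo "$root/$latest/bin64/mpivars.sh"
}
```

If the function is kept in a script on a shared location, a task could use `source "$(find_mpivars)"` instead of the backtick `ls` expansion.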

Run OpenFOAM workload

Download and install Intel MPI

Intel MPI is already installed in the Linux image CentOS_7.4_HPC, but a newer version is needed to build OpenFOAM. It can be downloaded from the Intel MPI Library page. Download and silently install Intel MPI with clusrun:

clusrun /nodegroup:LinuxNodes /interleaved "wget https://registrationcenter-download.intel.com/akdlm/irc_nas/tec/13063/l_mpi_2018.3.222.tgz && tar -zxvf l_mpi_2018.3.222.tgz && sed -i -e 's/ACCEPT_EULA=decline/ACCEPT_EULA=accept/g' ./l_mpi_2018.3.222/silent.cfg && ./l_mpi_2018.3.222/install.sh --silent ./l_mpi_2018.3.222/silent.cfg"
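The `sed` step in this command is what makes the silent install possible. A silent install is likely to stop if silent.cfg still contains ACCEPT_EULA=decline.

The sketch below splits that step into a function that also confirms the change took effect. It assumes silent.cfg holds a literal `ACCEPT_EULA=decline` entry, as the command above expects. The function name `accept_intel_eula` is hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: flip ACCEPT_EULA to accept in an Intel MPI silent.cfg
# and fail if the accepted setting is not present afterwards.
accept_intel_eula() {
  local cfg="$1"
  [ -f "$cfg" ] || { echo "missing $cfg" >&2; return 1; }
  sed -i -e 's/ACCEPT_EULA=decline/ACCEPT_EULA=accept/g' "$cfg"
  grep -q 'ACCEPT_EULA=accept' "$cfg"
}
```

Used as `accept_intel_eula ./l_mpi_2018.3.222/silent.cfg && ./l_mpi_2018.3.222/install.sh --silent ./l_mpi_2018.3.222/silent.cfg`, the installer only runs when the EULA setting was actually changed.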

Download and compile OpenFOAM

OpenFOAM packages can be downloaded from the OpenFOAM download page.

Before building OpenFOAM, we need to:
- install zlib-devel and Development Tools on the Linux compute nodes (CentOS);
- change the value of the variable WM_MPLIB from SYSTEMOPENMPI to INTELMPI in the OpenFOAM environment settings file bashrc;
- source both the Intel MPI environment settings file mpivars.sh and the OpenFOAM environment settings file bashrc.

Optionally, the environment variable WM_NCOMPPROCS can be set to specify how many processors to use for compiling OpenFOAM, which can speed up the compilation. Use clusrun to achieve all of the above:

clusrun /nodegroup:LinuxNodes /interleaved "yum install -y zlib-devel && yum groupinstall -y 'Development Tools' && wget https://sourceforge.net/projects/openfoamplus/files/v1806/ThirdParty-v1806.tgz && wget https://sourceforge.net/projects/openfoamplus/files/v1806/OpenFOAM-v1806.tgz && mkdir /opt/OpenFOAM && tar -xzf OpenFOAM-v1806.tgz -C /opt/OpenFOAM && tar -xzf ThirdParty-v1806.tgz -C /opt/OpenFOAM && cd /opt/OpenFOAM/OpenFOAM-v1806/ && sed -i -e 's/WM_MPLIB=SYSTEMOPENMPI/WM_MPLIB=INTELMPI/g' ./etc/bashrc && source /opt/intel/impi/2018.3.222/bin64/mpivars.sh && source ./etc/bashrc && export WM_NCOMPPROCS=$((`grep -c ^processor /proc/cpuinfo`-1)) && ./Allwmake"
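Two parts of the build command are easy to get silently wrong:
- **The WM_MPLIB switch.** If the `sed` pattern does not match, OpenFOAM keeps building against SYSTEMOPENMPI.
- **The compile-process count.** The expression `` `grep -c ^processor /proc/cpuinfo`-1 `` evaluates to 0 on a single-core machine.

The sketch below factors both steps into checkable functions. It assumes the OpenFOAM bashrc contains a literal `WM_MPLIB=SYSTEMOPENMPI` entry, as the command above expects. The names `set_wm_mplib` and `compile_procs` are hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: switch WM_MPLIB to INTELMPI in an OpenFOAM etc/bashrc
# and confirm the new value is present.
set_wm_mplib() {
  local bashrc="$1"
  sed -i -e 's/WM_MPLIB=SYSTEMOPENMPI/WM_MPLIB=INTELMPI/g' "$bashrc"
  grep -q 'WM_MPLIB=INTELMPI' "$bashrc"
}

# Hypothetical helper: one fewer than the processor count in a cpuinfo file,
# but never less than 1.
compile_procs() {
  local cpuinfo="${1:-/proc/cpuinfo}" n
  n=$(grep -c '^processor' "$cpuinfo")
  if [ "$n" -gt 1 ]; then echo $((n - 1)); else echo 1; fi
}
```

With these, the tail of the build command could read `set_wm_mplib ./etc/bashrc && source ./etc/bashrc && export WM_NCOMPPROCS=$(compile_procs) && ./Allwmake`.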

Create share in cluster

Create a folder named openfoam on the head node and share it with Everyone with Read/Write permission.

Create the directory /openfoam and mount the share on the Linux compute nodes with clusrun:

clusrun /nodegroup:LinuxNodes "mkdir /openfoam && mount -t cifs //hpc6267/openfoam /openfoam -o vers=2.1,username=hpcadmin,dir_mode=0777,file_mode=0777,password='********'"

Remember to replace the username and password in the command above when copying it.
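Passing password= on the command line leaves the password visible in the clusrun command. mount.cifs also accepts a credentials=FILE option, where the file holds username= and password= lines.

The sketch below writes such a file with owner-only permissions and builds the same option string with credentials= in place of username= and password=. The function names and the path /root/.openfoam-cred are hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: write a mount.cifs credentials file readable only by
# its owner (umask 077 gives mode 600 on a newly created file).
write_cifs_credentials() {
  local file="$1" user="$2" pass="$3"
  ( umask 077 && printf 'username=%s\npassword=%s\n' "$user" "$pass" > "$file" )
}

# Hypothetical helper: the mount options used above, with credentials=
# replacing username= and password=.
cifs_options() {
  echo "vers=2.1,credentials=$1,dir_mode=0777,file_mode=0777"
}

# Example (run as root on each compute node):
#   write_cifs_credentials /root/.openfoam-cred hpcadmin '********'
#   mount -t cifs //hpc6267/openfoam /openfoam -o "$(cifs_options /root/.openfoam-cred)"
```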

Prepare the environment setting file for running MPI tasks

Create the file settings.sh in the share with the following content:

    #!/bin/bash
    # impi
    source /opt/intel/impi/2018.3.222/bin64/mpivars.sh
    export MPI_ROOT=$I_MPI_ROOT
    export I_MPI_FABRICS=shm:dapl
    export I_MPI_DAPL_PROVIDER=ofa-v2-ib0
    export I_MPI_DYNAMIC_CONNECTION=0
    # openfoam
    source /opt/OpenFOAM/OpenFOAM-v1806/etc/bashrc

Be careful with the line endings if the file is edited on the head node: they should be \n (LF) rather than \r\n (CRLF).
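A file saved with Windows line endings on the head node carries a trailing carriage return on every line. bash then treats that carriage return as part of each command or path.

The sketch below detects and strips CRLF endings on a Linux node. It assumes GNU grep and sed (`dos2unix`, if installed, does the same job). The function names `has_crlf` and `to_lf` are hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: succeed if the file contains any carriage return.
has_crlf() {
  grep -q $'\r' "$1"
}

# Hypothetical helper: strip a trailing carriage return from every line, in place.
to_lf() {
  sed -i 's/\r$//' "$1"
}
```

For example, `has_crlf /openfoam/settings.sh && to_lf /openfoam/settings.sh` fixes the file only when needed.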

Prepare sample data for OpenFOAM job

Copy the sample sloshingTank3D from the OpenFOAM tutorials directory to the share openfoam.

Optionally, change the value of deltaT from 0.05 to 0.5 and the value of writeInterval from 0.05 to 0.5 in /openfoam/sloshingTank3D/system/controlDict to speed up data processing.

Modify the file /openfoam/sloshingTank3D/system/decomposeParDict according to the number of cores to use. For details, refer to the OpenFOAM User Guide: 3.4 Running applications in parallel.

    /*--------------------------------*- C++ -*----------------------------------*\
    | =========                 |                                                 |
    | \\      /  F ield         | OpenFOAM: The Open Source CFD Toolbox           |
    |  \\    /   O peration     | Version:  v1806                                 |
    |   \\  /    A nd           | Web:      www.OpenFOAM.com                      |
    |    \\/     M anipulation  |                                                 |
    \*---------------------------------------------------------------------------*/
    FoamFile
    {
        version     2.0;
        format      ascii;
        class       dictionary;
        object      decomposeParDict;
    }
    // * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * //

    numberOfSubdomains 32;

    method          hierarchical;

    coeffs
    {
        n           (1 1 32);
        //delta       0.001; // default=0.001
        //order       xyz;   // default=xzy
    }

    distributed     no;

    roots           ( );

    // ************************************************************************* //

Prepare the sample data in /openfoam/sloshingTank3D. The following commands can be used to do this manually on a Linux compute node:

    cd /openfoam/sloshingTank3D
    source /openfoam/settings.sh
    source /opt/OpenFOAM/OpenFOAM-v1806/bin/tools/RunFunctions
    m4 ./system/blockMeshDict.m4 > ./system/blockMeshDict
    runApplication blockMesh
    cp ./0/alpha.water.orig ./0/alpha.water
    runApplication setFields

Submit a job to achieve all of the above:

    set CORE_NUMBER=32
    job submit "cp -r /opt/OpenFOAM/OpenFOAM-v1806/tutorials/multiphase/interFoam/laminar/sloshingTank3D /openfoam/ && sed -i 's/deltaT 0.05;/deltaT 0.5;/g' /openfoam/sloshingTank3D/system/controlDict && sed -i 's/writeInterval 0.05;/writeInterval 0.5;/g' /openfoam/sloshingTank3D/system/controlDict && sed -i 's/numberOfSubdomains 16;/numberOfSubdomains %CORE_NUMBER%;/g' /openfoam/sloshingTank3D/system/decomposeParDict && sed -i 's/n (4 2 2);/n (1 1 %CORE_NUMBER%);/g' /openfoam/sloshingTank3D/system/decomposeParDict && cd /openfoam/sloshingTank3D/ && m4 ./system/blockMeshDict.m4 > ./system/blockMeshDict && source /opt/OpenFOAM/OpenFOAM-v1806/bin/tools/RunFunctions && source /opt/OpenFOAM/OpenFOAM-v1806/etc/bashrc && runApplication blockMesh && cp ./0/alpha.water.orig ./0/alpha.water && runApplication setFields"
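The two decomposeParDict `sed` commands only change the file when the text matches exactly. With the hierarchical method, the product of the three entries of n must also equal numberOfSubdomains. A quick check after preparing the data can catch a silent mismatch.

This is a minimal sketch. It assumes both entries appear on single lines in the form `numberOfSubdomains N;` and `n (x y z);`, and it reads only the first match of each. The function name `check_decomposition` is hypothetical.

```bash
#!/bin/bash
# Hypothetical check: for the hierarchical method, nx*ny*nz must equal
# numberOfSubdomains. Returns 0 if consistent, 1 on mismatch, 2 if unparsable.
check_decomposition() {
  local dict="$1" subdomains nx ny nz product
  # First "numberOfSubdomains N;" entry.
  subdomains=$(sed -n 's/^[[:space:]]*numberOfSubdomains[[:space:]]\{1,\}\([0-9]\{1,\}\);.*/\1/p' "$dict" | head -n 1)
  # First "n (x y z);" entry, printed as "x y z".
  read -r nx ny nz <<< "$(sed -n 's/^[[:space:]]*n[[:space:]]*(\([0-9]\{1,\}\)[[:space:]]\{1,\}\([0-9]\{1,\}\)[[:space:]]\{1,\}\([0-9]\{1,\}\));.*/\1 \2 \3/p' "$dict" | head -n 1)"
  if [ -z "$subdomains" ] || [ -z "$nz" ]; then
    echo "could not parse $dict" >&2
    return 2
  fi
  product=$((nx * ny * nz))
  if [ "$product" -ne "$subdomains" ]; then
    echo "n ($nx $ny $nz) gives $product subdomains, expected $subdomains" >&2
    return 1
  fi
  echo "ok: $subdomains subdomains"
}
```

For example, `check_decomposition /openfoam/sloshingTank3D/system/decomposeParDict` should print `ok: 32 subdomains` after the job above runs with CORE_NUMBER=32.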

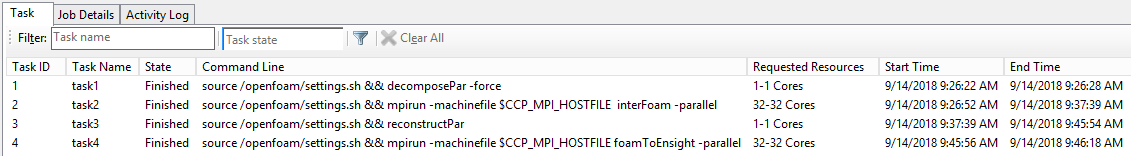

Create a job containing MPI tasks to process the data

Create a job and add 4 tasks with dependencies:

| Task name | Dependent task | Cores | Command | Environment variable |
|---|---|---|---|---|
| task1 | N/A | 1 | source /openfoam/settings.sh && decomposePar -force | N/A |
| task2 | task1 | 32 | source /openfoam/settings.sh && mpirun -machinefile $CCP_MPI_HOSTFILE interFoam -parallel | CCP_MPI_HOSTFILE_FORMAT=1 |
| task3 | task2 | 1 | source /openfoam/settings.sh && reconstructPar | N/A |
| task4 | task3 | 32 | source /openfoam/settings.sh && mpirun -machinefile $CCP_MPI_HOSTFILE foamToEnsight -parallel | CCP_MPI_HOSTFILE_FORMAT=1 |

Set the working directory of each task to /openfoam/sloshingTank3D and its standard output to ${CCP_JOBID}.${CCP_TASKID}.log. Achieve all of the above with these commands:

    set CORE_NUMBER=32
    job new
    job add !! /workdir:/openfoam/sloshingTank3D /name:task1 /stdout:${CCP_JOBID}.${CCP_TASKID}.log "source /openfoam/settings.sh && decomposePar -force"
    job add !! /workdir:/openfoam/sloshingTank3D /name:task2 /stdout:${CCP_JOBID}.${CCP_TASKID}.log /depend:task1 /numcores:%CORE_NUMBER% /env:CCP_MPI_HOSTFILE_FORMAT=1 "source /openfoam/settings.sh && mpirun -machinefile $CCP_MPI_HOSTFILE interFoam -parallel"
    job add !! /workdir:/openfoam/sloshingTank3D /name:task3 /stdout:${CCP_JOBID}.${CCP_TASKID}.log /depend:task2 "source /openfoam/settings.sh && reconstructPar"
    job add !! /workdir:/openfoam/sloshingTank3D /name:task4 /stdout:${CCP_JOBID}.${CCP_TASKID}.log /depend:task3 /numcores:%CORE_NUMBER% /env:CCP_MPI_HOSTFILE_FORMAT=1 "source /openfoam/settings.sh && mpirun -machinefile $CCP_MPI_HOSTFILE foamToEnsight -parallel"
    job submit /id:!!
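The four tasks form a linear chain: decompose, solve in parallel, reconstruct, convert in parallel. When debugging outside the scheduler, the same chain can be run by hand in the case directory on one node.

The sketch below is a hypothetical debugging aid, not part of HPC Pack. It assumes /openfoam/settings.sh has been sourced and that the MPI rank count NP matches numberOfSubdomains in decomposeParDict. With DRY_RUN set, it only prints the commands.

```bash
#!/bin/bash
# Hypothetical debugging aid: run the task1..task4 commands in order on one
# node, stopping at the first failure. DRY_RUN=1 prints instead of running.
run_step() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

run_sloshing_pipeline() {
  local np="${1:-32}"   # must equal numberOfSubdomains
  run_step decomposePar -force || return 1                 # task1
  run_step mpirun -np "$np" interFoam -parallel || return 1 # task2
  run_step reconstructPar || return 1                      # task3
  run_step mpirun -np "$np" foamToEnsight -parallel        # task4
}
```

For example, `DRY_RUN=1 run_sloshing_pipeline 32` lists the four commands without executing them.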

Get result

Check job result in HPC Pack 2016 Cluster Manager
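Besides Cluster Manager, the task logs written to the case directory can be checked from a Linux node. The sketch below makes two assumptions:
- Logs are named <jobid>.<taskid>.log, following the /stdout setting above.
- A successful OpenFOAM application ends its output with a line reading End, as the standard solvers and utilities typically do.

The function name `check_job_logs` is hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: report OK/FAILED for each <jobid>.*.log in DIR,
# based on whether the log contains a line consisting of "End".
# Returns 1 if any log lacks it or if no logs are found.
check_job_logs() {
  local dir="$1" jobid="$2" log found=0 status=0
  for log in "$dir/$jobid".*.log; do
    [ -e "$log" ] || continue   # pattern matched nothing
    found=1
    if grep -q '^End[[:space:]]*$' "$log"; then
      printf '%-7s %s\n' OK "$(basename "$log")"
    else
      printf '%-7s %s\n' FAILED "$(basename "$log")"
      status=1
    fi
  done
  if [ "$found" -eq 0 ]; then
    echo "no logs for job $jobid in $dir" >&2
    return 1
  fi
  return "$status"
}
```

For example, `check_job_logs /openfoam/sloshingTank3D 42` would list the four task logs of a hypothetical job 42.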

The result of the sample sloshingTank3D is generated as the file \\hpc6267\openfoam\sloshingTank3D\EnSight\sloshingTank3D.case, which can be viewed with EnSight.