Test accuracy of a custom speech model

In this article, you learn how to quantitatively measure and improve the accuracy of the base speech to text model or your own custom models. Audio + human-labeled transcript data is required to test accuracy. You should provide from 30 minutes to 5 hours of representative audio.

Important

When testing, the system will perform a transcription. This is important to keep in mind, as pricing varies per service offering and subscription level. Always refer to the official Azure AI services pricing for the latest details.

Create a test

You can test the accuracy of your custom model by creating a test. A test requires a collection of audio files and their corresponding transcriptions. You can compare a custom model's accuracy with a speech to text base model or another custom model. After you get the test results, evaluate the word error rate (WER) compared to speech recognition results.

Follow these steps to create a test:

Sign in to the Speech Studio.

Select Custom speech > Your project name > Test models.

Select Create new test.

Select Evaluate accuracy > Next.

Select one audio + human-labeled transcription dataset, and then select Next. If there aren't any datasets available, cancel the setup, and then go to the Speech datasets menu to upload datasets.

Note

It's important to select an acoustic dataset that's different from the one you used with your model. This approach can provide a more realistic sense of the model's performance.

Select up to two models to evaluate, and then select Next.

Enter the test name and description, and then select Next.

Review the test details, and then select Save and close.

To create a test, use the spx csr evaluation create command. Construct the request parameters according to the following instructions:

- Set the

projectparameter to the ID of an existing project. This parameter is recommended so that you can also view the test in Speech Studio. You can run thespx csr project listcommand to get available projects. - Set the required

model1parameter to the ID of a model that you want to test. - Set the required

model2parameter to the ID of another model that you want to test. If you don't want to compare two models, use the same model for bothmodel1andmodel2. - Set the required

datasetparameter to the ID of a dataset that you want to use for the test. - Set the

languageparameter, otherwise the Speech CLI sets "en-US" by default. This parameter should be the locale of the dataset contents. The locale can't be changed later. The Speech CLIlanguageparameter corresponds to thelocaleproperty in the JSON request and response. - Set the required

nameparameter. This parameter is the name that is displayed in the Speech Studio. The Speech CLInameparameter corresponds to thedisplayNameproperty in the JSON request and response.

Here's an example Speech CLI command that creates a test:

spx csr evaluation create --api-version v3.2 --project 0198f569-cc11-4099-a0e8-9d55bc3d0c52 --dataset 23b6554d-21f9-4df1-89cb-f84510ac8d23 --model1 ff43e922-e3e6-4bf0-8473-55c08fd68048 --model2 13fb305e-09ad-4bce-b3a1-938c9124dda3 --name "My Evaluation" --description "My Evaluation Description"

You should receive a response body in the following format:

{

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/evaluations/dda6e880-6ccd-49dc-b277-137565cbaa38",

"model1": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/models/base/13fb305e-09ad-4bce-b3a1-938c9124dda3"

},

"model2": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/models/base/13fb305e-09ad-4bce-b3a1-938c9124dda3"

},

"dataset": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/datasets/23b6554d-21f9-4df1-89cb-f84510ac8d23"

},

"transcription2": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/transcriptions/b50642a8-febf-43e1-b9d3-e0c90b82a62a"

},

"transcription1": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/transcriptions/b50642a8-febf-43e1-b9d3-e0c90b82a62a"

},

"project": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/projects/0198f569-cc11-4099-a0e8-9d55bc3d0c52"

},

"links": {

"files": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/evaluations/dda6e880-6ccd-49dc-b277-137565cbaa38/files"

},

"properties": {

"wordErrorRate1": -1.0,

"sentenceErrorRate1": -1.0,

"sentenceCount1": -1,

"wordCount1": -1,

"correctWordCount1": -1,

"wordSubstitutionCount1": -1,

"wordDeletionCount1": -1,

"wordInsertionCount1": -1,

"wordErrorRate2": -1.0,

"sentenceErrorRate2": -1.0,

"sentenceCount2": -1,

"wordCount2": -1,

"correctWordCount2": -1,

"wordSubstitutionCount2": -1,

"wordDeletionCount2": -1,

"wordInsertionCount2": -1

},

"lastActionDateTime": "2024-07-14T21:31:14Z",

"status": "NotStarted",

"createdDateTime": "2024-07-14T21:31:14Z",

"locale": "en-US",

"displayName": "My Evaluation",

"description": "My Evaluation Description",

"customProperties": {

"testingKind": "Evaluation"

}

}

The top-level self property in the response body is the evaluation's URI. Use this URI to get details about the project and test results. You also use this URI to update or delete the evaluation.

For Speech CLI help with evaluations, run the following command:

spx help csr evaluation

To create a test, use the Evaluations_Create operation of the Speech to text REST API. Construct the request body according to the following instructions:

- Set the

projectproperty to the URI of an existing project. This property is recommended so that you can also view the test in Speech Studio. You can make a Projects_List request to get available projects. - Set the

testingKindproperty toEvaluationwithincustomProperties. If you don't specifyEvaluation, the test is treated as a quality inspection test. Whether thetestingKindproperty is set toEvaluationorInspection, or not set, you can access the accuracy scores via the API, but not in the Speech Studio. - Set the required

model1property to the URI of a model that you want to test. - Set the required

model2property to the URI of another model that you want to test. If you don't want to compare two models, use the same model for bothmodel1andmodel2. - Set the required

datasetproperty to the URI of a dataset that you want to use for the test. - Set the required

localeproperty. This property should be the locale of the dataset contents. The locale can't be changed later. - Set the required

displayNameproperty. This property is the name that is displayed in the Speech Studio.

Make an HTTP POST request using the URI as shown in the following example. Replace YourSubscriptionKey with your Speech resource key, replace YourServiceRegion with your Speech resource region, and set the request body properties as previously described.

curl -v -X POST -H "Ocp-Apim-Subscription-Key: YourSubscriptionKey" -H "Content-Type: application/json" -d '{

"model1": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/models/13fb305e-09ad-4bce-b3a1-938c9124dda3"

},

"model2": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/models/base/13fb305e-09ad-4bce-b3a1-938c9124dda3"

},

"dataset": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/datasets/23b6554d-21f9-4df1-89cb-f84510ac8d23"

},

"project": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/projects/0198f569-cc11-4099-a0e8-9d55bc3d0c52"

},

"displayName": "My Evaluation",

"description": "My Evaluation Description",

"customProperties": {

"testingKind": "Evaluation"

},

"locale": "en-US"

}' "https://YourServiceRegion.api.cognitive.microsoft.com/speechtotext/v3.2/evaluations"

You should receive a response body in the following format:

{

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/evaluations/dda6e880-6ccd-49dc-b277-137565cbaa38",

"model1": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/models/base/13fb305e-09ad-4bce-b3a1-938c9124dda3"

},

"model2": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/models/base/13fb305e-09ad-4bce-b3a1-938c9124dda3"

},

"dataset": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/datasets/23b6554d-21f9-4df1-89cb-f84510ac8d23"

},

"transcription2": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/transcriptions/b50642a8-febf-43e1-b9d3-e0c90b82a62a"

},

"transcription1": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/transcriptions/b50642a8-febf-43e1-b9d3-e0c90b82a62a"

},

"project": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/projects/0198f569-cc11-4099-a0e8-9d55bc3d0c52"

},

"links": {

"files": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/evaluations/dda6e880-6ccd-49dc-b277-137565cbaa38/files"

},

"properties": {

"wordErrorRate1": -1.0,

"sentenceErrorRate1": -1.0,

"sentenceCount1": -1,

"wordCount1": -1,

"correctWordCount1": -1,

"wordSubstitutionCount1": -1,

"wordDeletionCount1": -1,

"wordInsertionCount1": -1,

"wordErrorRate2": -1.0,

"sentenceErrorRate2": -1.0,

"sentenceCount2": -1,

"wordCount2": -1,

"correctWordCount2": -1,

"wordSubstitutionCount2": -1,

"wordDeletionCount2": -1,

"wordInsertionCount2": -1

},

"lastActionDateTime": "2024-07-14T21:31:14Z",

"status": "NotStarted",

"createdDateTime": "2024-07-14T21:31:14Z",

"locale": "en-US",

"displayName": "My Evaluation",

"description": "My Evaluation Description",

"customProperties": {

"testingKind": "Evaluation"

}

}

The top-level self property in the response body is the evaluation's URI. Use this URI to get details about the evaluation's project and test results. You also use this URI to update or delete the evaluation.

Get test results

You should get the test results and evaluate the word error rate (WER) compared to speech recognition results.

Follow these steps to get test results:

- Sign in to the Speech Studio.

- Select Custom speech > Your project name > Test models.

- Select the link by test name.

- After the test is complete, as indicated by the status set to Succeeded, you should see results that include the WER number for each tested model.

This page lists all the utterances in your dataset and the recognition results, alongside the transcription from the submitted dataset. You can toggle various error types, including insertion, deletion, and substitution. By listening to the audio and comparing recognition results in each column, you can decide which model meets your needs and determine where more training and improvements are required.

To get test results, use the spx csr evaluation status command. Construct the request parameters according to the following instructions:

- Set the required

evaluationparameter to the ID of the evaluation that you want to get test results.

Here's an example Speech CLI command that gets test results:

spx csr evaluation status --api-version v3.2 --evaluation 8bfe6b05-f093-4ab4-be7d-180374b751ca

The word error rates and more details are returned in the response body.

You should receive a response body in the following format:

{

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/evaluations/dda6e880-6ccd-49dc-b277-137565cbaa38",

"model1": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/models/base/13fb305e-09ad-4bce-b3a1-938c9124dda3"

},

"model2": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/models/base/13fb305e-09ad-4bce-b3a1-938c9124dda3"

},

"dataset": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/datasets/23b6554d-21f9-4df1-89cb-f84510ac8d23"

},

"transcription2": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/transcriptions/b50642a8-febf-43e1-b9d3-e0c90b82a62a"

},

"transcription1": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/transcriptions/b50642a8-febf-43e1-b9d3-e0c90b82a62a"

},

"project": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/projects/0198f569-cc11-4099-a0e8-9d55bc3d0c52"

},

"links": {

"files": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/evaluations/dda6e880-6ccd-49dc-b277-137565cbaa38/files"

},

"properties": {

"wordErrorRate1": 0.028900000000000002,

"sentenceErrorRate1": 0.667,

"tokenErrorRate1": 0.12119999999999999,

"sentenceCount1": 3,

"wordCount1": 173,

"correctWordCount1": 170,

"wordSubstitutionCount1": 2,

"wordDeletionCount1": 1,

"wordInsertionCount1": 2,

"tokenCount1": 165,

"correctTokenCount1": 145,

"tokenSubstitutionCount1": 10,

"tokenDeletionCount1": 1,

"tokenInsertionCount1": 9,

"tokenErrors1": {

"punctuation": {

"numberOfEdits": 4,

"percentageOfAllEdits": 20.0

},

"capitalization": {

"numberOfEdits": 2,

"percentageOfAllEdits": 10.0

},

"inverseTextNormalization": {

"numberOfEdits": 1,

"percentageOfAllEdits": 5.0

},

"lexical": {

"numberOfEdits": 12,

"percentageOfAllEdits": 12.0

},

"others": {

"numberOfEdits": 1,

"percentageOfAllEdits": 5.0

}

},

"wordErrorRate2": 0.028900000000000002,

"sentenceErrorRate2": 0.667,

"tokenErrorRate2": 0.12119999999999999,

"sentenceCount2": 3,

"wordCount2": 173,

"correctWordCount2": 170,

"wordSubstitutionCount2": 2,

"wordDeletionCount2": 1,

"wordInsertionCount2": 2,

"tokenCount2": 165,

"correctTokenCount2": 145,

"tokenSubstitutionCount2": 10,

"tokenDeletionCount2": 1,

"tokenInsertionCount2": 9,

"tokenErrors2": {

"punctuation": {

"numberOfEdits": 4,

"percentageOfAllEdits": 20.0

},

"capitalization": {

"numberOfEdits": 2,

"percentageOfAllEdits": 10.0

},

"inverseTextNormalization": {

"numberOfEdits": 1,

"percentageOfAllEdits": 5.0

},

"lexical": {

"numberOfEdits": 12,

"percentageOfAllEdits": 12.0

},

"others": {

"numberOfEdits": 1,

"percentageOfAllEdits": 5.0

}

}

},

"lastActionDateTime": "2024-07-14T21:31:22Z",

"status": "Succeeded",

"createdDateTime": "2024-07-14T21:31:14Z",

"locale": "en-US",

"displayName": "My Evaluation",

"description": "My Evaluation Description",

"customProperties": {

"testingKind": "Evaluation"

}

}

For Speech CLI help with evaluations, run the following command:

spx help csr evaluation

To get test results, start by using the Evaluations_Get operation of the Speech to text REST API.

Make an HTTP GET request using the URI as shown in the following example. Replace YourEvaluationId with your evaluation ID, replace YourSubscriptionKey with your Speech resource key, and replace YourServiceRegion with your Speech resource region.

curl -v -X GET "https://YourServiceRegion.api.cognitive.microsoft.com/speechtotext/v3.2/evaluations/YourEvaluationId" -H "Ocp-Apim-Subscription-Key: YourSubscriptionKey"

The word error rates and more details are returned in the response body.

You should receive a response body in the following format:

{

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/evaluations/dda6e880-6ccd-49dc-b277-137565cbaa38",

"model1": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/models/base/13fb305e-09ad-4bce-b3a1-938c9124dda3"

},

"model2": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/models/base/13fb305e-09ad-4bce-b3a1-938c9124dda3"

},

"dataset": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/datasets/23b6554d-21f9-4df1-89cb-f84510ac8d23"

},

"transcription2": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/transcriptions/b50642a8-febf-43e1-b9d3-e0c90b82a62a"

},

"transcription1": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/transcriptions/b50642a8-febf-43e1-b9d3-e0c90b82a62a"

},

"project": {

"self": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/projects/0198f569-cc11-4099-a0e8-9d55bc3d0c52"

},

"links": {

"files": "https://eastus.api.cognitive.microsoft.com/speechtotext/v3.2/evaluations/dda6e880-6ccd-49dc-b277-137565cbaa38/files"

},

"properties": {

"wordErrorRate1": 0.028900000000000002,

"sentenceErrorRate1": 0.667,

"tokenErrorRate1": 0.12119999999999999,

"sentenceCount1": 3,

"wordCount1": 173,

"correctWordCount1": 170,

"wordSubstitutionCount1": 2,

"wordDeletionCount1": 1,

"wordInsertionCount1": 2,

"tokenCount1": 165,

"correctTokenCount1": 145,

"tokenSubstitutionCount1": 10,

"tokenDeletionCount1": 1,

"tokenInsertionCount1": 9,

"tokenErrors1": {

"punctuation": {

"numberOfEdits": 4,

"percentageOfAllEdits": 20.0

},

"capitalization": {

"numberOfEdits": 2,

"percentageOfAllEdits": 10.0

},

"inverseTextNormalization": {

"numberOfEdits": 1,

"percentageOfAllEdits": 5.0

},

"lexical": {

"numberOfEdits": 12,

"percentageOfAllEdits": 12.0

},

"others": {

"numberOfEdits": 1,

"percentageOfAllEdits": 5.0

}

},

"wordErrorRate2": 0.028900000000000002,

"sentenceErrorRate2": 0.667,

"tokenErrorRate2": 0.12119999999999999,

"sentenceCount2": 3,

"wordCount2": 173,

"correctWordCount2": 170,

"wordSubstitutionCount2": 2,

"wordDeletionCount2": 1,

"wordInsertionCount2": 2,

"tokenCount2": 165,

"correctTokenCount2": 145,

"tokenSubstitutionCount2": 10,

"tokenDeletionCount2": 1,

"tokenInsertionCount2": 9,

"tokenErrors2": {

"punctuation": {

"numberOfEdits": 4,

"percentageOfAllEdits": 20.0

},

"capitalization": {

"numberOfEdits": 2,

"percentageOfAllEdits": 10.0

},

"inverseTextNormalization": {

"numberOfEdits": 1,

"percentageOfAllEdits": 5.0

},

"lexical": {

"numberOfEdits": 12,

"percentageOfAllEdits": 12.0

},

"others": {

"numberOfEdits": 1,

"percentageOfAllEdits": 5.0

}

}

},

"lastActionDateTime": "2024-07-14T21:31:22Z",

"status": "Succeeded",

"createdDateTime": "2024-07-14T21:31:14Z",

"locale": "en-US",

"displayName": "My Evaluation",

"description": "My Evaluation Description",

"customProperties": {

"testingKind": "Evaluation"

}

}

Evaluate word error rate (WER)

The industry standard for measuring model accuracy is word error rate (WER). WER counts the number of incorrect words identified during recognition, and divides the sum by the total number of words provided in the human-labeled transcript (N).

Incorrectly identified words fall into three categories:

- Insertion (I): Words that are incorrectly added in the hypothesis transcript

- Deletion (D): Words that are undetected in the hypothesis transcript

- Substitution (S): Words that were substituted between reference and hypothesis

In the Speech Studio, the quotient is multiplied by 100 and shown as a percentage. The Speech CLI and REST API results aren't multiplied by 100.

$$ WER = {{I+D+S}\over N} \times 100 $$

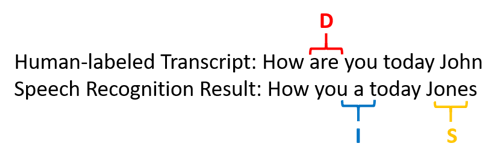

Here's an example that shows incorrectly identified words, when compared to the human-labeled transcript:

The speech recognition result erred as follows:

- Insertion (I): Added the word "a"

- Deletion (D): Deleted the word "are"

- Substitution (S): Substituted the word "Jones" for "John"

The word error rate from the previous example is 60%.

If you want to replicate WER measurements locally, you can use the sclite tool from the NIST Scoring Toolkit (SCTK).

Resolve errors and improve WER

You can use the WER calculation from the machine recognition results to evaluate the quality of the model you're using with your app, tool, or product. A WER of 5-10% is considered to be good quality and is ready to use. A WER of 20% is acceptable, but you might want to consider more training. A WER of 30% or more signals poor quality and requires customization and training.

How the errors are distributed is important. When many deletion errors are encountered, it's usually because of weak audio signal strength. To resolve this issue, you need to collect audio data closer to the source. Insertion errors mean that the audio was recorded in a noisy environment and crosstalk might be present, causing recognition issues. Substitution errors are often encountered when an insufficient sample of domain-specific terms is provided as either human-labeled transcriptions or related text.

By analyzing individual files, you can determine what type of errors exist, and which errors are unique to a specific file. Understanding issues at the file level helps you target improvements.

Evaluate token error rate (TER)

Besides word error rate, you can also use the extended measurement of Token Error Rate (TER) to evaluate quality on the final end-to-end display format. In addition to the lexical format (That will cost $900. instead of that will cost nine hundred dollars), TER takes into account the display format aspects such as punctuation, capitalization, and ITN. Learn more about Display output formatting with speech to text.

TER counts the number of incorrect tokens identified during recognition, and divides the sum by the total number of tokens provided in the human-labeled transcript (N).

$$ TER = {{I+D+S}\over N} \times 100 $$

The formula of TER calculation is also similar to WER. The only difference is that TER is calculated based on the token level instead of word level.

- Insertion (I): Tokens that are incorrectly added in the hypothesis transcript

- Deletion (D): Tokens that are undetected in the hypothesis transcript

- Substitution (S): Tokens that were substituted between reference and hypothesis

In a real-world case, you can analyze both WER and TER results to get the desired improvements.

Note

To measure TER, you need to make sure the audio + transcript testing data includes transcripts with display formatting such as punctuation, capitalization, and ITN.

Example scenario outcomes

Speech recognition scenarios vary by audio quality and language (vocabulary and speaking style). The following table examines four common scenarios:

| Scenario | Audio quality | Vocabulary | Speaking style |

|---|---|---|---|

| Call center | Low, 8 kHz, could be two people on one audio channel, could be compressed | Narrow, unique to domain and products | Conversational, loosely structured |

| Voice assistant, such as Cortana, or a drive-through window | High, 16 kHz | Entity-heavy (song titles, products, locations) | Clearly stated words and phrases |

| Dictation (instant message, notes, search) | High, 16 kHz | Varied | Note-taking |

| Video closed captioning | Varied, including varied microphone use, added music | Varied, from meetings, recited speech, musical lyrics | Read, prepared, or loosely structured |

Different scenarios produce different quality outcomes. The following table examines how content from these four scenarios rates in the WER. The table shows which error types are most common in each scenario. The insertion, substitution, and deletion error rates help you determine what kind of data to add to improve the model.

| Scenario | Speech recognition quality | Insertion errors | Deletion errors | Substitution errors |

|---|---|---|---|---|

| Call center | Medium (< 30% WER) |

Low, except when other people talk in the background | Can be high. Call centers can be noisy, and overlapping speakers can confuse the model | Medium. Products and people's names can cause these errors |

| Voice assistant | High (can be < 10% WER) |

Low | Low | Medium, due to song titles, product names, or locations |

| Dictation | High (can be < 10% WER) |

Low | Low | High |

| Video closed captioning | Depends on video type (can be < 50% WER) | Low | Can be high because of music, noises, microphone quality | Jargon might cause these errors |

Next steps

Palaute

Tulossa pian: Vuoden 2024 aikana poistamme asteittain GitHub Issuesin käytöstä sisällön palautemekanismina ja korvaamme sen uudella palautejärjestelmällä. Lisätietoja on täällä: https://aka.ms/ContentUserFeedback.

Lähetä ja näytä palaute kohteelle