Huomautus

Tämän sivun käyttö edellyttää valtuutusta. Voit yrittää kirjautua sisään tai vaihtaa hakemistoa.

Tämän sivun käyttö edellyttää valtuutusta. Voit yrittää vaihtaa hakemistoa.

Applies to:

Azure SQL Database

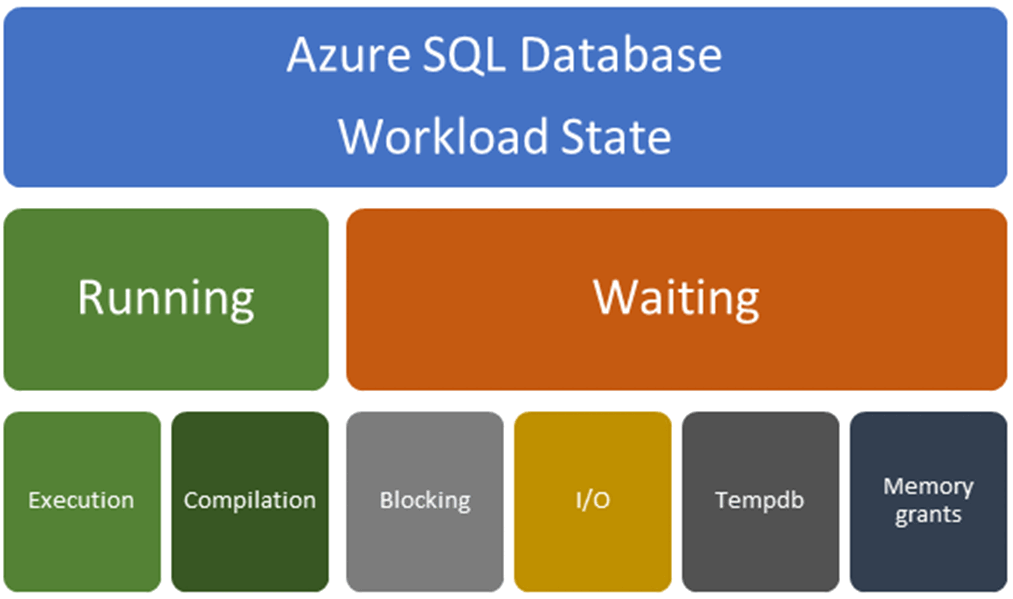

When trying to resolve a performance bottleneck, start by determining whether the bottleneck is occurring while the query is in a running state or a waiting state. Different resolutions apply depending upon this determination. Use the following diagram to help understand the factors that can cause either a running-related problem or a waiting-related problem. Problems and resolutions relating to each type of problem are discussed in this article.

You can use database watcher or dynamic management views to detect these types of performance bottlenecks.

Running-related problems: Running-related problems are generally related to compilation problems resulting in a suboptimal query plan or execution problems related to insufficient or overused resources. Waiting-related problems: Waiting-related problems are generally related to:

- Locks (blocking)

- I/O

- Contention related to

tempdbusage - Memory grant waits

This article is about Azure SQL Database, see also Detectable types of query performance bottlenecks in Azure SQL Managed Instance.

Compilation problems resulting in a suboptimal query plan

A suboptimal plan generated by the SQL Query Optimizer might be the cause of slow query performance. The SQL Query Optimizer might produce a suboptimal plan because of a missing index, stale statistics, an incorrect estimate of the number of rows to be processed, or an inaccurate estimate of the required memory. If you know the query was executed faster in the past or on another database, compare the actual execution plans to see if they're different.

Identify any missing indexes using one of these methods:

- Use database watcher.

- Review recommendations in the Database Advisor for single and pooled databases in Azure SQL Database. You might also choose to enable automatic tuning options for tuning indexes for Azure SQL Database.

- Missing indexes in DMVs and query execution plans. This article shows you how to detect and tune nonclustered indexes using missing index requests.

Try to update statistics or rebuild indexes to get the better plan. Enable automatic plan correction to automatically mitigate these problems.

As an advanced troubleshooting step, use Query Store hints to apply query hints using the Query Store, without making code changes.

This query tuning and hinting example shows the impact of a suboptimal query plan due to a parameterized query, how to detect this condition, and how to use a query hint to resolve.

Try changing the database compatibility level and implementing intelligent query processing. The SQL Query Optimizer might generate a different query plan depending upon the compatibility level for your database. Higher compatibility levels provide more intelligent query processing capabilities.

- For more information on query processing, see Query Processing Architecture Guide.

- To change database compatibility levels and read more about the differences between compatibility levels, see ALTER DATABASE.

- To read more about cardinality estimation, see Cardinality Estimation

Resolve queries with suboptimal query execution plans

The following sections discuss how to resolve queries with suboptimal query execution plan.

Queries that have parameter sensitive plan (PSP) problems

A parameter sensitive plan (PSP) problem happens when the query optimizer generates a query execution plan that's optimal only for a specific parameter value (or set of values) and the cached plan is then not optimal for parameter values that are used in consecutive executions. Plans that aren't optimal can then cause query performance problems and degrade overall workload throughput.

For more information on parameter sniffing and query processing, see the Query-processing architecture guide.

Several workarounds can mitigate PSP problems. Each workaround has associated tradeoffs and drawbacks:

- A new feature introduced with SQL Server 2022 (16.x) is Parameter Sensitive Plan optimization, which attempts to mitigate most suboptimal query plans caused by parameter sensitivity. This is enabled with database compatibility level 160 in Azure SQL Database.

- Use the RECOMPILE query hint at each query execution. This workaround trades compilation time and increased CPU for better plan quality. The

RECOMPILEoption is often not possible for workloads that require a high throughput. - Use the OPTION (OPTIMIZE FOR...) query hint to override the actual parameter value with a typical parameter value that produces a plan that's good enough for most parameter value possibilities. This option requires a good understanding of optimal parameter values and associated plan characteristics.

- Use the OPTION (OPTIMIZE FOR UNKNOWN) query hint to override the actual parameter value and instead use the density vector average. You can also do this by capturing the incoming parameter values in local variables and then using the local variables within the predicates instead of using the parameters themselves. For this fix, the average density must be good enough.

- Disable parameter sniffing entirely by using the DISABLE_PARAMETER_SNIFFING query hint.

- Use the KEEPFIXEDPLAN query hint to prevent recompilations in cache. This workaround assumes that the good-enough common plan is the one in cache already. You can also disable automatic statistics updates to reduce the chances that the good plan will be evicted and a new bad plan will be compiled.

- Force the plan by explicitly using the USE PLAN query hint by rewriting the query and adding the hint in the query text. Or set a specific plan by using Query Store or by enabling automatic tuning.

- Replace the single procedure with a nested set of procedures that can each be used based on conditional logic and the associated parameter values.

- Create dynamic string execution alternatives to a static procedure definition.

To apply query hints, modify the query or use Query Store hints to apply the hint without making code changes.

For more information about resolving PSP problems, see these blog posts:

Compile activity caused by improper parameterization

When a query has literals, either the database engine automatically parameterizes the statement or a user explicitly parameterizes the statement to reduce the number of compilations. A high number of compilations for a query using the same pattern but different literal values can result in high CPU usage. Similarly, if you only partially parameterize a query that continues to have literals, the database engine doesn't parameterize the query further.

Here's an example of a partially parameterized query:

SELECT *

FROM t1 JOIN t2 ON t1.c1 = t2.c1

WHERE t1.c1 = @p1 AND t2.c2 = '961C3970-0E54-4E8E-82B6-5545BE897F8F';

In this example, t1.c1 takes @p1, but t2.c2 continues to take GUID as literal. In this case, if you change the value for c2, the query is treated as a different query, and a new compilation will happen. To reduce compilations in this example, you would also parameterize the GUID.

The following query shows the count of queries by query hash to determine whether a query is properly parameterized:

SELECT TOP 10

q.query_hash

, count (distinct p.query_id ) AS number_of_distinct_query_ids

, min(qt.query_sql_text) AS sampled_query_text

FROM sys.query_store_query_text AS qt

JOIN sys.query_store_query AS q

ON qt.query_text_id = q.query_text_id

JOIN sys.query_store_plan AS p

ON q.query_id = p.query_id

JOIN sys.query_store_runtime_stats AS rs

ON rs.plan_id = p.plan_id

JOIN sys.query_store_runtime_stats_interval AS rsi

ON rsi.runtime_stats_interval_id = rs.runtime_stats_interval_id

WHERE

rsi.start_time >= DATEADD(hour, -2, GETUTCDATE())

AND query_parameterization_type_desc IN ('User', 'None')

GROUP BY q.query_hash

ORDER BY count (distinct p.query_id) DESC;

Factors that affect query plan changes

A query execution plan recompilation might result in a generated query plan that differs from the original cached plan. An existing original plan might be automatically recompiled for various reasons:

- Changes in the schema are referenced by the query

- Data changes to the tables are referenced by the query

- Query context options were changed

A compiled plan might be ejected from the cache for various reasons, such as:

- Instance restarts

- Database-scoped configuration changes

- Memory pressure

- Explicit requests to clear the cache

If you use a RECOMPILE hint, a plan won't be cached.

A recompilation (or fresh compilation after cache eviction) can still result in the generation of a query execution plan that's identical to the original. When the plan changes from the prior or original plan, these explanations are likely:

Changed physical design: For example, newly created indexes more effectively cover the requirements of a query. The new indexes might be used on a new compilation if the query optimizer decides that using that new index is more optimal than using the data structure that was originally selected for the first version of the query execution. Any physical changes to the referenced objects might result in a new plan choice at compile time.

Server resource differences: When a plan in one system differs from the plan in another system, resource availability, such as the number of available processors, can influence which plan gets generated. For example, if one system has more processors, a parallel plan might be chosen. For more information on parallelism in Azure SQL Database, see Configure the max degree of parallelism (MAXDOP) in Azure SQL Database.

Different statistics: The statistics associated with the referenced objects might have changed or might be materially different from the original system's statistics. If the statistics change and a recompilation happens, the query optimizer uses the statistics starting from when they changed. The revised statistics' data distributions and frequencies might differ from those of the original compilation. These changes are used to create cardinality estimates. (Cardinality estimates are the number of rows that are expected to flow through the logical query tree.) Changes to cardinality estimates might lead you to choose different physical operators and associated orders of operations. Even minor changes to statistics can result in a changed query execution plan.

Changed database compatibility level or cardinality estimator version: Changes to the database compatibility level can enable new strategies and features that might result in a different query execution plan. Beyond the database compatibility level, a disabled or enabled trace flag 4199 or a changed state of the database-scoped configuration QUERY_OPTIMIZER_HOTFIXES can also influence query execution plan choices at compile time. Trace flags 9481 (force legacy CE) and 2312 (force default CE) also affect the plan.

Resource limits issues

Slow query performance not related to suboptimal query plans and missing indexes are generally related to insufficient or overused resources. If the query plan is optimal, the query (and the database) might be hitting the resource limits for the database or elastic pool. An example might be excess log write throughput for the service level.

Detecting resource issues using the Azure portal: To see if resource limits are the problem, see SQL Database resource monitoring. For single databases and elastic pools, see Database Advisor performance recommendations and Query Performance Insights.

Detecting resource limits using database watcher.

Detecting resource issues using DMVs:

- The sys.dm_db_resource_stats DMV returns CPU, I/O, and memory consumption for the database. One row exists for every 15-second interval, even if there's no activity in the database. Historical data is maintained for one hour.

- The sys.resource_stats DMV returns CPU usage and storage data for Azure SQL Database. The data is collected and aggregated in five-minute intervals.

- Many individual queries that cumulatively consume high CPU

If you identify the problem as insufficient resource, you can upgrade resources to increase the capacity of your database to absorb the CPU requirements. For more information, see Scale single database resources in Azure SQL Database and Scale elastic pool resources in Azure SQL Database.

Performance problems caused by increased workload volume

An increase in application traffic and workload volume can cause increased CPU usage. But you must be careful to properly diagnose this problem. When you see a high-CPU problem, answer these questions to determine whether the increase is caused by changes to the workload volume:

Are the queries from the application the cause of the high-CPU problem?

For the top CPU-consuming queries that you can identify:

- Were multiple execution plans associated with the same query? If so, why?

- For queries with the same execution plan, were the execution times consistent? Did the execution count increase? If so, the workload increase is likely causing performance problems.

In summary, if the query execution plan didn't execute differently but CPU usage increased along with execution count, the performance problem is likely related to a workload increase.

It's not always easy to identify a workload volume change that's driving a CPU problem. Consider these factors:

Changed resource usage: For example, consider a scenario where CPU usage increased to 80 percent for an extended period of time. CPU usage alone doesn't mean the workload volume changed. Regressions in the query execution plan and changes in data distribution can also contribute to more resource usage even though the application executes the same workload.

The appearance of a new query: An application might drive a new set of queries at different times.

An increase or decrease in the number of requests: This scenario is the most obvious measure of a workload. The number of queries doesn't always correspond to more resource utilization. However, this metric is still a significant signal, assuming other factors are unchanged.

Use database watcher to detect workload increases and plan regressions over time.

- Parallelism: Excessive parallelism can worsen other concurrent workload performance by starving other queries of CPU and worker thread resources. For more information on parallelism in Azure SQL Database, see Configure the max degree of parallelism (MAXDOP) in Azure SQL Database.

Waiting-related problems

Once you have eliminated a suboptimal plan and Waiting-related problems that are related to execution problems, the performance problem is generally the queries are probably waiting for some resource. Waiting-related problems might be caused by:

Blocking:

One query might hold the lock on objects in the database while others try to access the same objects. You can identify blocking queries by using DMVs or database watcher. For more information, see Understand and resolve Azure SQL Database blocking problems.

IO problems

Queries might be waiting for the pages to be written to the data or log files. In this case, check the

INSTANCE_LOG_RATE_GOVERNOR,WRITE_LOG, orPAGEIOLATCH_*wait statistics in the DMV. See using DMVs to identify IO performance issues.Tempdb problems

If the workload uses temporary tables or there are

tempdbspills in the plans, the queries might have a problem withtempdbthroughput. To investigate further, review identify tempdb issues.Memory-related problems

If the workload doesn't have enough memory, the page life expectancy might drop, or the queries might get less memory than they need. In some cases, built-in intelligence in Query Optimizer will fix memory-related problems. See using DMVs to identify memory grant issues. For more information and sample queries, see Troubleshoot out of memory errors with Azure SQL Database. If you encounter out of memory errors, review sys.dm_os_out_of_memory_events.

Methods to show top wait categories

These methods are commonly used to show the top categories of wait types:

- Use database watcher to identify queries with performance degradation due to increased waits.

- Use Query Store to find wait statistics for each query over time. In Query Store, wait types are combined into wait categories. You can find the mapping of wait categories to wait types in sys.query_store_wait_stats.

- Use sys.dm_db_wait_stats to return information about all the waits encountered by threads that executed during a query operation. You can use this aggregated view to diagnose performance problems with Azure SQL Database and also with specific queries and batches. Queries can be waiting on resources, queue waits, or external waits.

- Use sys.dm_os_waiting_tasks to return information about the queue of tasks that are waiting on some resource.

In high-CPU scenarios, Query Store and wait statistics might not reflect CPU usage if:

- High-CPU-consuming queries are still executing.

- The high-CPU-consuming queries were running when a failover happened.

DMVs that track Query Store and wait statistics show results for only successfully completed and timed-out queries. They don't show data for currently executing statements until the statements finish. Use the dynamic management view sys.dm_exec_requests to track currently executing queries and the associated worker time.

Related content

- Configure the max degree of parallelism (MAXDOP) in Azure SQL Database

- Understand and resolve Azure SQL Database blocking problems in Azure SQL Database

- Diagnose and troubleshoot high CPU on Azure SQL Database

- SQL Database monitoring and tuning overview

- Monitoring Microsoft Azure SQL Database performance using dynamic management views

- Tune nonclustered indexes with missing index suggestions

- Resource management in Azure SQL Database

- Resource limits for single databases using the vCore purchasing model

- Resource limits for elastic pools using the vCore purchasing model

- Resource limits for single databases using the DTU purchasing model

- Resource limits for elastic pools using the DTU purchasing model