Integrate Apache Zeppelin with Hive Warehouse Connector in Azure HDInsight

HDInsight Spark clusters include Apache Zeppelin notebooks with different interpreters. In this article, we focus only on the Livy interpreter to access Hive tables from Spark using Hive Warehouse Connector.

Note

This article contains references to a term that Microsoft no longer uses. When the term is removed from the software, we'll remove it from this article.

Prerequisite

Complete the Hive Warehouse Connector setup steps.

Getting started

Use ssh command to connect to your Apache Spark cluster. Edit the following command by replacing CLUSTERNAME with the name of your cluster, and then enter the command:

ssh sshuser@CLUSTERNAME-ssh.azurehdinsight.netFrom your ssh session, execute the following command to note the versions for

hive-warehouse-connector-assemblyandpyspark_hwc:ls /usr/hdp/current/hive_warehouse_connectorSave the output for later use when configuring Apache Zeppelin.

Configure Livy

Following configurations are required to access hive tables from Zeppelin with the Livy interpreter.

Interactive Query Cluster

From a web browser, navigate to

https://LLAPCLUSTERNAME.azurehdinsight.net/#/main/services/HDFS/configswhere LLAPCLUSTERNAME is the name of your Interactive Query cluster.Navigate to Advanced > Custom core-site. Select Add Property... to add the following configurations:

Configuration Value hadoop.proxyuser.livy.groups * hadoop.proxyuser.livy.hosts * Save changes and restart all affected components.

Spark Cluster

From a web browser, navigate to

https://CLUSTERNAME.azurehdinsight.net/#/main/services/SPARK2/configswhere CLUSTERNAME is the name of your Apache Spark cluster.Expand Custom livy2-conf. Select Add Property... to add the following configuration:

Configuration Value livy.file.local-dir-whitelist /usr/hdp/current/hive_warehouse_connector/ Save changes and restart all affected components.

Configure Livy Interpreter in Zeppelin UI (Spark Cluster)

From a web browser, navigate to

https://CLUSTERNAME.azurehdinsight.net/zeppelin/#/interpreter, whereCLUSTERNAMEis the name of your Apache Spark cluster.Navigate to livy2.

Add the following configurations:

Configuration Value livy.spark.hadoop.hive.llap.daemon.service.hosts @llap0 livy.spark.security.credentials.hiveserver2.enabled true livy.spark.sql.hive.llap true livy.spark.yarn.security.credentials.hiveserver2.enabled true livy.superusers livy, zeppelin livy.spark.jars file:///usr/hdp/current/hive_warehouse_connector/hive-warehouse-connector-assembly-VERSION.jar.

Replace VERSION with value you obtained from Getting started, earlier.livy.spark.submit.pyFiles file:///usr/hdp/current/hive_warehouse_connector/pyspark_hwc-VERSION.zip.

Replace VERSION with value you obtained from Getting started, earlier.livy.spark.sql.hive.hiveserver2.jdbc.url Set it to the HiveServer2 Interactive JDBC URL of the Interactive Query cluster. spark.security.credentials.hiveserver2.enabled true For ESP clusters only, add the following configuration:

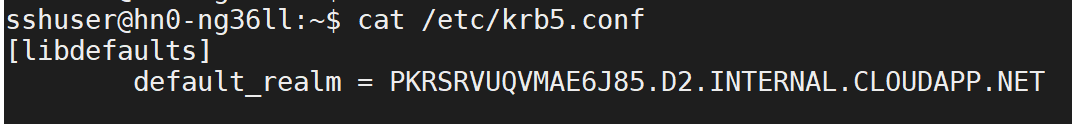

Configuration Value livy.spark.sql.hive.hiveserver2.jdbc.url.principal hive/_HOST@<AAD-Domain>Use ssh command to connect to your Interactive Query cluster. Look for

default_realmparameter in the/etc/krb5.conffile. Replace<AAD-DOMAIN>with this value as an uppercase string, otherwise the credential cannot be found.

Save the changes and restart the Livy interpreter.

If Livy interpreter isn't accessible, modify the shiro.ini file present within Zeppelin component in Ambari. For more information, see Configuring Apache Zeppelin Security.

Running Queries in Zeppelin

Launch a Zeppelin notebook using Livy interpreter and execute the following

%livy2

import com.hortonworks.hwc.HiveWarehouseSession

import com.hortonworks.hwc.HiveWarehouseSession._

import org.apache.spark.sql.SaveMode

# Initialize the hive context

val hive = HiveWarehouseSession.session(spark).build()

# Create a database

hive.createDatabase("hwc_db",true)

hive.setDatabase("hwc_db")

# Create a Hive table

hive.createTable("testers").ifNotExists().column("id", "bigint").column("name", "string").create()

val dataDF = Seq( (1, "foo"), (2, "bar"), (8, "john")).toDF("id", "name")

# Validate writes to the table

dataDF.write.format("com.hortonworks.spark.sql.hive.llap.HiveWarehouseConnector").mode("append").option("table", "hwc_db.testers").save()

# Validate reads

hive.executeQuery("select * from testers").show()

Next steps

Palaute

Tulossa pian: Vuoden 2024 aikana poistamme asteittain GitHub Issuesin käytöstä sisällön palautemekanismina ja korvaamme sen uudella palautejärjestelmällä. Lisätietoja on täällä: https://aka.ms/ContentUserFeedback.

Lähetä ja näytä palaute kohteelle