ONNX and Azure Machine Learning

This article describes how the Open Neural Network Exchange (ONNX) can help optimize the inference of your machine learning models. Inference, or model scoring, is the process of using a deployed model to generate predictions on production data.

Optimizing machine learning models for inference requires you to tune the model and the inference library to make the most of hardware capabilities. This task becomes complex if you want to get optimal performance on different platforms such as cloud, edge, CPU, or GPU, because each platform has different capabilities and characteristics. The complexity increases if you need to run models from various frameworks on different platforms. It can be time-consuming to optimize all the different combinations of frameworks and hardware.

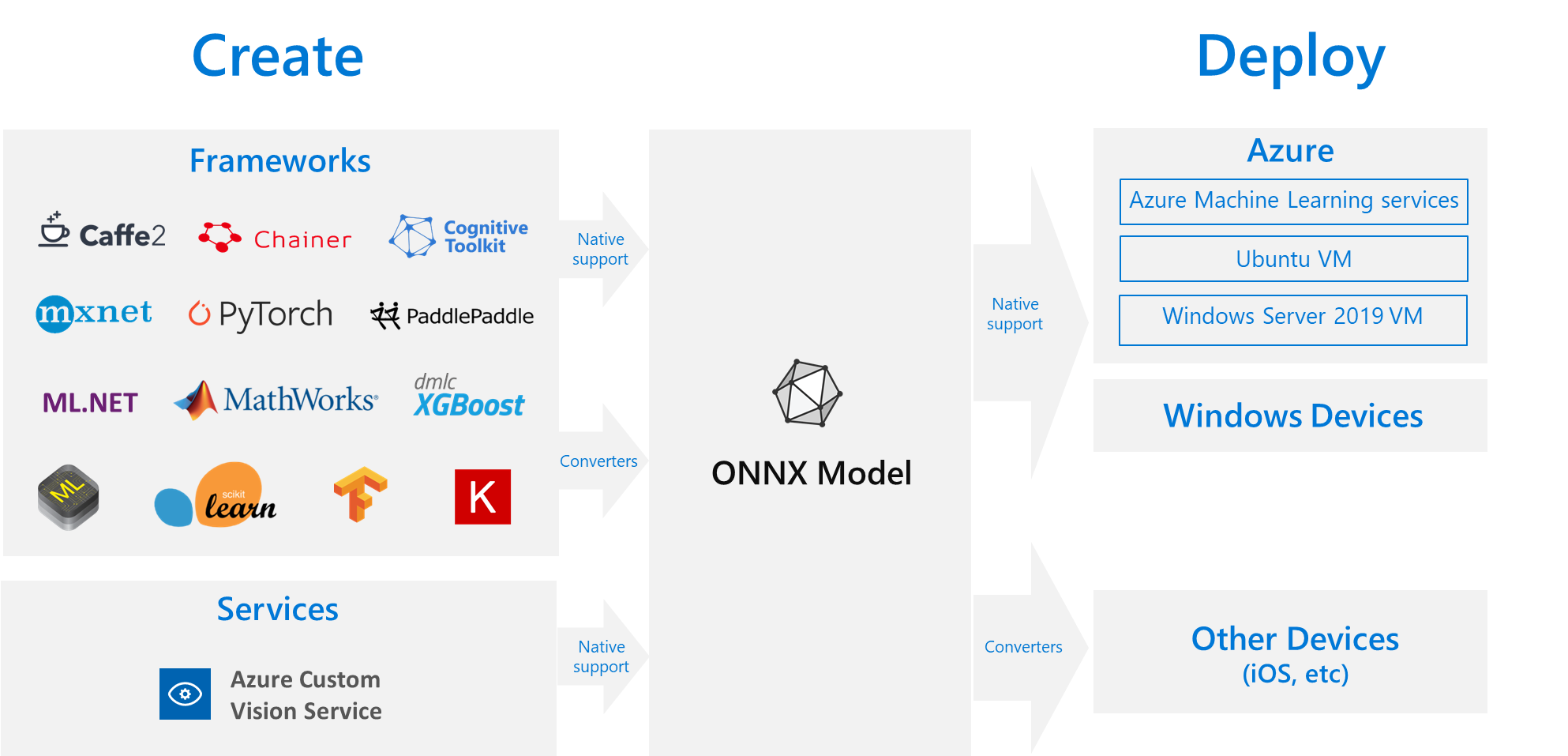

A useful solution is to train your model one time in your preferred framework, and then export or convert it to ONNX so it can run anywhere on the cloud or edge. Microsoft and a community of partners created ONNX as an open standard for representing machine learning models. You can export or convert models from many frameworks to the standard ONNX format. Supported frameworks include TensorFlow, PyTorch, scikit-learn, Keras, Chainer, MXNet, and MATLAB. You can run models in the ONNX format on various platforms and devices.

This ONNX flow diagram shows available frameworks and deployment options.

ONNX Runtime

ONNX Runtime is a high-performance inference engine for deploying ONNX models to production. ONNX Runtime is optimized for both cloud and edge, and works on Linux, Windows, and macOS. ONNX Runtime is written in C++, and it also provides C, Python, C#, Java, and JavaScript (Node.js) APIs for use in those environments.

ONNX Runtime supports both deep neural networks (DNN) and traditional machine learning models, and it integrates with accelerators on different hardware such as TensorRT on Nvidia GPUs, OpenVINO on Intel processors, and DirectML on Windows. By using ONNX Runtime, you can benefit from extensive production-grade optimizations, testing, and ongoing improvements.
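ONNX Runtime exposes these accelerator integrations as execution providers. The following minimal sketch picks providers in priority order from whatever the installed build reports. The helper name `choose_providers` and the preference order are illustrative assumptions, not part of the ONNX Runtime API.

```python
# Execution provider identifiers for the accelerators named above.
PREFERRED_PROVIDERS = (
    "TensorrtExecutionProvider",  # TensorRT on Nvidia GPUs
    "CUDAExecutionProvider",      # CUDA on Nvidia GPUs
    "OpenVINOExecutionProvider",  # OpenVINO on Intel hardware
    "DmlExecutionProvider",       # DirectML on Windows
    "CPUExecutionProvider",       # default CPU fallback
)


def choose_providers(available, preferred=PREFERRED_PROVIDERS):
    """Return the preferred providers that are available, in priority order.

    Hypothetical helper for illustration. It falls back to the CPU provider
    when none of the preferred providers is available.
    """
    chosen = [name for name in preferred if name in available]
    return chosen or ["CPUExecutionProvider"]


if __name__ == "__main__":
    try:
        import onnxruntime
    except ImportError:
        print("onnxruntime is not installed")
    else:
        # Ask the installed build which providers it supports.
        print(choose_providers(onnxruntime.get_available_providers()))
```

You can pass the resulting list as the `providers` argument when you create an `InferenceSession`.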

High-scale Microsoft services such as Bing, Office, and Azure AI use ONNX Runtime. Although performance gains depend on many factors, these Microsoft services report an average 2x performance gain on CPU by using ONNX Runtime. ONNX Runtime runs in Azure Machine Learning and other Microsoft products that support machine learning workloads, including:

- Windows. ONNX Runtime is built into Windows as part of Windows Machine Learning and runs on hundreds of millions of devices.

- Azure SQL. Azure SQL Edge and Azure SQL Managed Instance use ONNX to run native scoring on data.

- ML.NET. For an example, see Tutorial: Detect objects using ONNX in ML.NET.

Ways to obtain ONNX models

You can obtain ONNX models in several ways:

- Train a new ONNX model in Azure Machine Learning or use automated machine learning capabilities.

- Convert an existing model from another format to ONNX. For more information, see ONNX Tutorials.

- Get a pretrained ONNX model from the ONNX Model Zoo.

- Generate a customized ONNX model from Azure AI Custom Vision service.

You can represent many models as ONNX, including image classification, object detection, and text processing models. If you can't convert your model successfully, file a GitHub issue in the repository of the converter you used.
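As one illustration of the conversion route, the following sketch converts a fitted scikit-learn model by using the `skl2onnx` converter package. It assumes that `skl2onnx` is installed. The function name `convert_sklearn_model` and its parameters are hypothetical, and other frameworks use their own converters.

```python
def convert_sklearn_model(model, sample_input, output_path):
    """Convert a fitted scikit-learn model to ONNX and write it to output_path.

    Assumes the optional skl2onnx package is installed and that sample_input
    is a NumPy array with the dtype and column count the model expects.
    """
    from skl2onnx import to_onnx  # imported lazily because it is optional

    # to_onnx infers the model's input signature from the sample rows.
    onnx_model = to_onnx(model, sample_input)

    # The converted model is a protobuf message; serialize it to disk.
    with open(output_path, "wb") as handle:
        handle.write(onnx_model.SerializeToString())
    return output_path
```

A call might look like `convert_sklearn_model(clf, X_train[:1].astype("float32"), "model.onnx")`, where `clf` and `X_train` stand in for your own fitted model and training data.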

ONNX model deployment in Azure

You can deploy, manage, and monitor your ONNX models in Azure Machine Learning. Using a standard MLOps deployment workflow with ONNX Runtime, you can create a REST endpoint hosted in the cloud.
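After an endpoint is deployed, a client calls it over HTTPS. The following sketch builds such a request by using only the Python standard library. The endpoint URI, the key, and the `{"inputs": ...}` payload shape are placeholders and assumptions; the payload must match whatever your scoring script expects.

```python
import json
import urllib.request


def build_scoring_request(scoring_uri, api_key, inputs):
    """Build an HTTP POST request for a deployed scoring endpoint.

    The payload shape ({"inputs": ...}) is an assumption for illustration.
    """
    body = json.dumps({"inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        scoring_uri,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Placeholder values; replace them with your endpoint's URI and key.
request = build_scoring_request(
    "https://example.invalid/score", "<your-key>", {"input1": [[1.0, 2.0, 3.0]]}
)
print(request.get_method(), request.full_url)
# To send the request: urllib.request.urlopen(request).read()
```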

Python packages for ONNX Runtime

Python packages for the CPU and GPU builds of ONNX Runtime are available on PyPI. Be sure to review the system requirements before installation.

To install ONNX Runtime for Python, use one of the following commands:

pip install onnxruntime # CPU build

pip install onnxruntime-gpu # GPU build
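To confirm which build is installed, you can query the package itself. The following sketch is one way to do that; the helper name `describe_onnxruntime` is hypothetical, and it returns a minimal result when the package isn't importable.

```python
def describe_onnxruntime():
    """Report whether ONNX Runtime is importable and, if so, which build it is."""
    try:
        import onnxruntime
    except ImportError:
        return {"installed": False}

    return {
        "installed": True,
        "version": onnxruntime.__version__,
        # Device the build targets, for example "CPU" or "GPU".
        "device": onnxruntime.get_device(),
    }


print(describe_onnxruntime())
```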

To call ONNX Runtime in your Python script, use the following code:

import onnxruntime

session = onnxruntime.InferenceSession("path to model")

The documentation accompanying the model usually tells you the inputs and outputs for using the model. You can also use a visualization tool such as Netron to view the model.

ONNX Runtime lets you query the model metadata, inputs, and outputs, as follows:

session.get_modelmeta()

first_input_name = session.get_inputs()[0].name

first_output_name = session.get_outputs()[0].name
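Each entry that `get_inputs()` and `get_outputs()` return also carries the tensor's element type and shape, which helps when you prepare input data. The following sketch collects those details; the helper name `describe_io` is hypothetical.

```python
def describe_io(session):
    """Return (name, type, shape) tuples for a session's inputs and outputs."""

    def summarize(nodes):
        return [(node.name, node.type, node.shape) for node in nodes]

    return {
        "inputs": summarize(session.get_inputs()),
        "outputs": summarize(session.get_outputs()),
    }
```

For example, `describe_io(session)["inputs"]` lists what the model expects before you build the input map for inference.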

To run inference on your model, call the session's run method and pass in the list of outputs you want returned and a map of the input values. Leave the output list empty if you want all of the outputs. The result is a list of the outputs, in the order requested.

# Request specific outputs by name.
results = session.run(["output1", "output2"], {"input1": indata1, "input2": indata2})

# Pass an empty list to get all of the outputs.
results = session.run([], {"input1": indata1, "input2": indata2})
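Because `run` returns a plain list, it can be convenient to pair each result with its output name. The following sketch shows one way to do that; the helper name `run_named` is hypothetical and isn't part of ONNX Runtime.

```python
def run_named(session, feeds, output_names=None):
    """Run inference and return a dict that maps output names to results.

    When output_names is empty or None, every output of the model is requested.
    """
    if not output_names:
        output_names = [output.name for output in session.get_outputs()]
    values = session.run(output_names, feeds)
    return dict(zip(output_names, values))
```

With this helper, `run_named(session, {"input1": indata1})["output1"]` retrieves a result by name instead of by list position.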

For the complete ONNX Runtime API reference, see the Python API documentation.