Alerts help you detect and address issues before users notice them. They proactively notify you when Azure Monitor data indicates there might be a problem with your infrastructure or application.

You can set an alert on any metric or log data source in the Azure Monitor data platform.

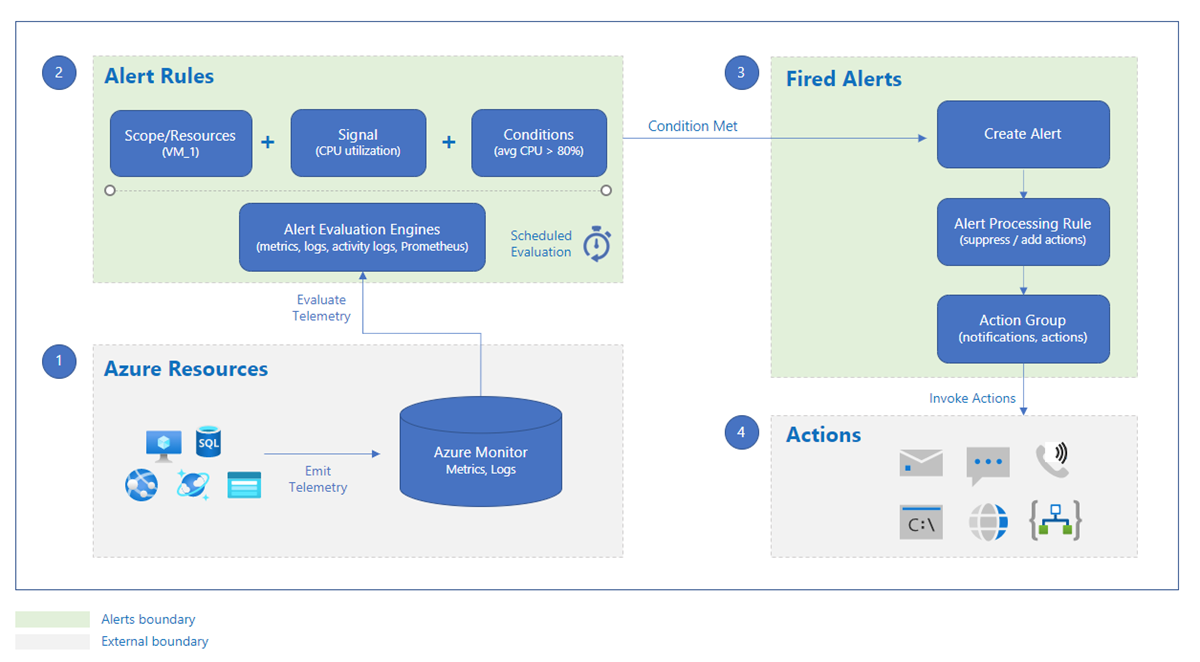

This diagram shows how alerts work.

An alert rule monitors your data and captures a signal that indicates something is happening on the specified resource. The alert rule captures the signal and checks whether it meets the criteria of the condition.

An alert rule combines:

- The resources to monitor.

- The signal or data from the resource.

- Conditions.

An alert is triggered if the conditions of the alert rule are met. The alert initiates the associated action group and updates the state of the alert. If you're monitoring more than one resource, the alert rule condition is evaluated separately for each resource, and alerts are fired separately for each resource.
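To make the per-resource evaluation concrete, here's a minimal Python sketch. The `AlertRule` class, the `evaluate` function, and the sample readings are hypothetical; they only model the behavior described above (one rule, several resources, one alert per resource that meets the condition) and aren't part of any Azure SDK.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class AlertRule:
    """Hypothetical model of an alert rule: resources + signal + condition."""
    name: str
    resources: List[str]                  # resources to monitor
    signal: str                           # signal name, for illustration only
    condition: Callable[[float], bool]    # True when the signal value meets the condition


def evaluate(rule: AlertRule, readings: Dict[str, float]) -> List[str]:
    """Evaluate the condition separately for each resource; one alert per matching resource."""
    fired = []
    for resource in rule.resources:
        value = readings.get(resource)
        # Resources with no reading in this evaluation are skipped.
        if value is not None and rule.condition(value):
            fired.append(f"{rule.name}:{resource}")
    return fired


rule = AlertRule(
    name="high-cpu",
    resources=["vm-a", "vm-b", "vm-c"],
    signal="Percentage CPU",
    condition=lambda value: value > 80.0,
)
print(evaluate(rule, {"vm-a": 91.0, "vm-b": 42.0, "vm-c": 85.5}))
# ['high-cpu:vm-a', 'high-cpu:vm-c']
```

Two resources meet the condition, so two separate alerts fire, even though there is only one rule.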

Alerts are stored for 30 days and are deleted after the 30-day retention period. You can see all alert instances for all of your Azure resources on the Alerts page in the Azure portal.

Alerts consist of:

- Action groups: These groups can trigger notifications to let users know that an alert has been triggered, or start automated workflows. Action groups can include:

  - Notification methods such as email, SMS, and push notifications.

  - Automation runbooks.

  - Azure functions.

  - ITSM incidents.

  - Logic apps.

  - Secure webhooks.

  - Webhooks.

  - Event hubs.

- Alert conditions: These are set by the system. When an alert fires, the alert condition is set to Fired. After the underlying condition that caused the alert to fire clears, the alert condition is set to Resolved.

- User response: The response is set by the user and doesn't change until the user changes it. The user response can be New, Acknowledged, or Closed.

- Alert processing rules: You can use alert processing rules to modify triggered alerts as they're being fired. Alert processing rules can add or suppress action groups, apply filters, or have the rule processed on a predefined schedule.
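The alert condition and the user response are two independent pieces of state. The following hypothetical Python model (not an Azure API; class and method names are illustrative) shows that resolving the condition doesn't change the user response, and changing the user response doesn't change the condition.

```python
from dataclasses import dataclass
from enum import Enum


class AlertCondition(Enum):
    FIRED = "Fired"
    RESOLVED = "Resolved"


class UserResponse(Enum):
    NEW = "New"
    ACKNOWLEDGED = "Acknowledged"
    CLOSED = "Closed"


@dataclass
class AlertInstance:
    """Hypothetical alert instance with system-owned and user-owned state."""
    condition: AlertCondition = AlertCondition.FIRED   # set by the system when the alert fires
    user_response: UserResponse = UserResponse.NEW     # set only by the user

    def underlying_condition_cleared(self) -> None:
        # The system moves the alert condition to Resolved; the user response is untouched.
        self.condition = AlertCondition.RESOLVED

    def set_user_response(self, response: UserResponse) -> None:
        # The user changes the response; the alert condition is untouched.
        self.user_response = response


alert = AlertInstance()
alert.set_user_response(UserResponse.ACKNOWLEDGED)
alert.underlying_condition_cleared()
print(alert.condition.value, alert.user_response.value)  # Resolved Acknowledged
```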

Types of alerts

This table provides a brief description of each alert type. For more information about each alert type and how to choose the one that best suits your needs, see Types of Azure Monitor alerts.

| Alert type | Description |
|---|---|
| Metric alerts | Metric alerts evaluate resource metrics at regular intervals. Metrics can be platform metrics, custom metrics, logs from Azure Monitor converted to metrics, or Application Insights metrics. Metric alerts can also apply multiple conditions and dynamic thresholds. |
| Log search alerts | Log search alerts let users use a Log Analytics query to evaluate resource logs at a predefined frequency. |
| Simple log search alerts | Simple log search alerts let users use a Log Analytics query to evaluate each row individually. |
| Activity log alerts | Activity log alerts are triggered when a new activity log event occurs that matches defined conditions. Resource Health alerts and Service Health alerts are activity log alerts that report on the health of your services and resources. |
| Smart detection alerts | Smart detection on an Application Insights resource automatically warns you of potential performance problems and failure anomalies in your web application. You can migrate smart detection on your Application Insights resource to create alert rules for the different smart detection modules. |
| Prometheus alerts | Prometheus alerts are used to alert on Prometheus metrics stored in Azure Monitor managed service for Prometheus. The alert rules are based on the open-source PromQL query language. |

Alerts and state

Alerts can be stateful or stateless.

- Stateless alerts fire each time the condition is met, even if the alert has already fired.

- Stateful alerts fire when the rule conditions are met, and don't fire again or trigger any more actions until the conditions are resolved.

Each alert rule is evaluated individually. There's no validation to check whether another alert rule is configured for the same conditions. If more than one alert rule is configured for the same conditions, each of those rules fires an alert when the conditions are met.

Alerts are stored for 30 days and are deleted after the 30-day retention period.

Stateless alerts

Stateless alerts fire each time the condition is met. The alert condition for all stateless alerts is always Fired.

- Activity log alerts are stateless.

- The frequency of notifications for stateless metric alerts depends on the alert rule's configured frequency:

  - Alert frequency of less than 5 minutes: While the condition continues to be met, a notification is sent sometime between one and six minutes.

  - Alert frequency of 5 minutes or more: While the condition continues to be met, a notification is sent sometime between the configured frequency and double that frequency. For example, for an alert rule with a frequency of 15 minutes, a notification is sent sometime between 15 and 30 minutes.
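The notification windows described above can be expressed as a small helper. This is a hypothetical sketch that only encodes the two rules in this section; the function name is illustrative and not part of any Azure API.

```python
from datetime import timedelta
from typing import Tuple


def stateless_metric_notification_window(frequency: timedelta) -> Tuple[timedelta, timedelta]:
    """Return the (earliest, latest) time at which a notification is sent while the
    condition of a stateless metric alert keeps being met."""
    if frequency <= timedelta(0):
        raise ValueError("frequency must be positive")
    if frequency < timedelta(minutes=5):
        # Frequency under 5 minutes: sometime between one and six minutes.
        return timedelta(minutes=1), timedelta(minutes=6)
    # Frequency of 5 minutes or more: between the frequency and double the frequency.
    return frequency, 2 * frequency


earliest, latest = stateless_metric_notification_window(timedelta(minutes=15))
print(earliest, latest)  # 0:15:00 0:30:00
```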

Stateful alerts

Stateful alerts fire when the rule conditions are met, and don't fire again or trigger any more actions until the conditions are resolved.

The alert condition for stateful alerts is Fired until it's considered resolved. When an alert is considered resolved, the alert rule sends a resolved notification by using webhooks or email, and the alert condition is set to Resolved.

For stateful alerts, although the alert itself is deleted after 30 days, the alert condition is stored until the alert is resolved. This prevents another alert from firing and lets the resolved notification be sent when the alert is resolved.

See service limits for alert limitations, including limitations for stateful log alerts.

This table describes when a stateful alert is considered resolved:

| Alert type | The alert is resolved when |
|---|---|
| Metric alerts | The alert condition isn't met for three consecutive checks. |
| Log search alerts | The alert condition isn't met for a specific time range. The time range differs based on the frequency of the alert. |
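The following hypothetical Python model sketches the stateful behavior of a metric alert: it fires once, stays quiet while the condition keeps holding, and resolves only after three consecutive checks in which the condition isn't met. The class and return values are illustrative, and the sketch doesn't model log search alerts, whose resolution window depends on frequency.

```python
from typing import Iterable, List, Optional


class StatefulMetricAlert:
    """Hypothetical model of a stateful metric alert rule."""

    RESOLVE_AFTER = 3  # consecutive checks in which the condition isn't met

    def __init__(self) -> None:
        self.fired = False
        self.clear_checks = 0

    def check(self, condition_met: bool) -> Optional[str]:
        """Process one evaluation; return "fired", "resolved", or None (no new event)."""
        if condition_met:
            self.clear_checks = 0          # any match restarts the resolution count
            if not self.fired:
                self.fired = True
                return "fired"             # fire once and run the actions
            return None                    # already fired: no new alert, no new actions
        if self.fired:
            self.clear_checks += 1
            if self.clear_checks >= self.RESOLVE_AFTER:
                self.fired = False
                self.clear_checks = 0
                return "resolved"          # send the resolved notification
        return None


def run(checks: Iterable[bool]) -> List[Optional[str]]:
    alert = StatefulMetricAlert()
    return [alert.check(met) for met in checks]


print(run([True, True, False, False, False, True]))
# ['fired', None, None, None, 'resolved', 'fired']
```

A single check where the condition is met again restarts the count, so the alert only resolves after an uninterrupted run of three clear checks.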

Recommended alert rules

You can enable recommended, ready-to-use alert rules in the Azure portal.

The system compiles a list of recommended alert rules based on:

- The resource provider's knowledge of the signals and thresholds that are important for monitoring the resource.

- Data that tells us what customers commonly alert on for this resource.

Note

Recommended alert rules are available for:

- Virtual machines

- AKS resources

- Log Analytics workspaces

Alerting at scale

You can use any of the following methods to create alert rules at scale. Each choice has advantages and disadvantages that can affect the cost and maintenance of the alert rules.

Metric alerts

You can use one metric alert rule to monitor multiple resources of the same type that exist in the same Azure region. Individual notifications are sent for each monitored resource.

For metric alert rules for Azure services that don't support multiple resources, use automation tools such as the Azure CLI, PowerShell, or Azure Resource Manager (ARM) templates to create the same alert rule for multiple resources. For sample ARM templates, see Resource Manager template samples for metric alert rules in Azure Monitor.
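As an illustration of the automation approach, the following Python sketch builds an ARM template that contains one `Microsoft.Insights/metricAlerts` resource per target resource. The property layout follows the shape used in the Resource Manager samples for metric alerts, but the API version, rule names, metric name, threshold, and the placeholder IDs in angle brackets are assumptions; check them against the official samples before deploying.

```python
import json
from typing import Any, Dict, List


def metric_alert_resource(name: str, target_id: str, metric_name: str,
                          threshold: float, action_group_id: str) -> Dict[str, Any]:
    """One metric alert rule scoped to a single target resource."""
    return {
        "type": "Microsoft.Insights/metricAlerts",
        "apiVersion": "2018-03-01",          # assumption: check the current API version
        "name": name,
        "location": "global",
        "properties": {
            "severity": 3,
            "enabled": True,
            "scopes": [target_id],           # exactly one resource per rule
            "evaluationFrequency": "PT1M",
            "windowSize": "PT5M",
            "criteria": {
                "odata.type": "Microsoft.Azure.Monitor.SingleResourceMultipleMetricCriteria",
                "allOf": [{
                    "criterionType": "StaticThresholdCriterion",
                    "name": "criterion1",
                    "metricName": metric_name,
                    "operator": "GreaterThan",
                    "threshold": threshold,
                    "timeAggregation": "Average",
                }],
            },
            "actions": [{"actionGroupId": action_group_id}],
        },
    }


def build_template(target_ids: List[str], name_prefix: str, metric_name: str,
                   threshold: float, action_group_id: str) -> Dict[str, Any]:
    """Repeat the same rule once per target resource."""
    resources = [
        # Name each rule after the last segment of its target's resource ID.
        metric_alert_resource(f"{name_prefix}-{tid.rsplit('/', 1)[-1]}", tid,
                              metric_name, threshold, action_group_id)
        for tid in target_ids
    ]
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "resources": resources,
    }


# Placeholder IDs (hypothetical): replace with your own resource and action group IDs.
RG = "/subscriptions/<subscription-id>/resourceGroups/<resource-group>"
targets = [f"{RG}/providers/<provider>/<resource-type>/{n}" for n in ("res-a", "res-b")]
template = build_template(targets, "high-usage", "<metric-name>", 80,
                          f"{RG}/providers/Microsoft.Insights/actionGroups/<action-group>")
print(json.dumps(template, indent=2))
```

Generating the rules from code keeps them identical across resources, at the cost of having to maintain one rule per resource.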

Each metric alert rule is billed based on the number of time series it monitors.

Log search alerts

Use log search alert rules to monitor all resources that send data to a Log Analytics workspace. These resources can be from any subscription or region. When you set up your Log Analytics workspace, use data collection rules to collect the data that your log search alert rule requires.

You can also create resource-centric alerts instead of workspace-centric alerts by using the Split by dimensions feature. When you split on the resourceId column, you get one alert per resource that meets the condition.
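Split by dimensions can be thought of as grouping the query results by a column and evaluating the condition once per group. This hypothetical Python sketch (the rows, the `ErrorCount` column, and the threshold are made up) groups rows by `resourceId`, returns the number of resulting time series, which is the quantity that drives billing for split rules, and lists the resources that would each get their own alert.

```python
from collections import defaultdict
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]


def split_by_dimension(rows: List[Row], dimension: str, value_column: str,
                       threshold: float) -> Tuple[int, List[str]]:
    """Group rows by one dimension and evaluate the condition per group.

    Returns the number of time series (one per distinct dimension value) and the
    dimension values whose total exceeds the threshold; each gets its own alert.
    """
    totals: Dict[str, float] = defaultdict(float)
    for row in rows:
        totals[str(row[dimension])] += float(row[value_column])
    fired = sorted(key for key, total in totals.items() if total > threshold)
    return len(totals), fired


rows = [
    {"resourceId": "vm-a", "ErrorCount": 3},
    {"resourceId": "vm-b", "ErrorCount": 1},
    {"resourceId": "vm-a", "ErrorCount": 4},
    {"resourceId": "vm-c", "ErrorCount": 6},
]
series, alerts = split_by_dimension(rows, "resourceId", "ErrorCount", threshold=5)
print(series, alerts)  # 3 ['vm-a', 'vm-c']
```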

Log search alert rules that use splitting by dimensions are billed based on the number of time series created by the dimensions that your query returns. If the data is already collected in a Log Analytics workspace, there's no additional cost.

If you use metric data at scale in the Log Analytics workspace, pricing varies based on data ingestion.

Simple log search alerts

Simple log search alerts are designed to provide a simpler and faster alternative to traditional log search alerts. Unlike traditional log search alerts, which aggregate rows over a defined period, simple log search alerts evaluate each row individually. Simple log search alerts support both Analytics and Basic logs.
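The difference between the two evaluation styles can be sketched in Python. This is a hypothetical model, not how Azure Monitor implements either alert type: the traditional style reduces all rows in a window to one result, while the simple style treats every matching row as its own trigger. The row fields and functions are illustrative.

```python
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]
Predicate = Callable[[Row], bool]


def aggregated_evaluation(rows: List[Row], predicate: Predicate, min_count: int) -> bool:
    """Traditional style (simplified): count matching rows over the whole window,
    then produce a single result for that window."""
    return sum(1 for row in rows if predicate(row)) >= min_count


def per_row_evaluation(rows: List[Row], predicate: Predicate) -> List[Row]:
    """Simple style (simplified): evaluate each row on its own; every match is a trigger."""
    return [row for row in rows if predicate(row)]


def is_error(row: Row) -> bool:
    return row["Level"] == "Error"


window = [
    {"Level": "Error", "Message": "disk full"},
    {"Level": "Info", "Message": "heartbeat"},
    {"Level": "Error", "Message": "timeout"},
]

print(aggregated_evaluation(window, is_error, min_count=3))  # False
print(len(per_row_evaluation(window, is_error)))            # 2
```

With an aggregate threshold of three errors, the window produces no alert, while per-row evaluation surfaces both error rows as soon as they arrive.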

Simple log search alerts use Kusto Query Language (KQL), but the feature is designed to simplify the query process, so you can create alerts without in-depth knowledge of KQL.

Because they evaluate each row individually, simple log search alerts fire faster than traditional log search alerts. Alerts are triggered in near real time, which allows a quicker response to incidents.

Create a simple log search alert.

Using Azure policies for alerting at scale

You can use Azure policies to set up alerts at scale, which makes large-scale alert deployment easier. You can see how this is implemented with Azure Monitor baseline alerts.

Keep in mind that if you use policies to create alert rules, you might take on the increased overhead of maintaining a large set of alert rules.

Azure role-based access control (Azure RBAC) for alerts

You can access, create, or manage alerts only for resources on which you have permissions.

To create an alert rule, you must have:

- Read permission on the target resource of the alert rule.

- Write permission on the resource group in which the alert rule is created. If you create the alert rule from the Azure portal, it's created by default in the same resource group as the target resource.

- Read permission on any action group associated with the alert rule, if applicable.
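The three requirements above can be expressed as a check. This hypothetical Python sketch treats permissions as `(permission, scope)` pairs and approximates RBAC inheritance with a resource-ID prefix match; real Azure role assignments grant the actions defined by roles, so treat this only as a model of the rules listed here. All IDs are made-up examples.

```python
from typing import List, Set, Tuple

Grant = Tuple[str, str]  # (permission, scope), e.g. ("read", "/subscriptions/...")


def covers(grant_scope: str, scope: str) -> bool:
    """Approximate RBAC inheritance: a grant on a scope also applies to child scopes."""
    parent = grant_scope.rstrip("/").lower()   # resource IDs compared case-insensitively
    child = scope.rstrip("/").lower()
    return child == parent or child.startswith(parent + "/")


def missing_permissions(grants: Set[Grant], target_id: str, rule_resource_group: str,
                        action_group_ids: List[str]) -> List[str]:
    """List the requirements for creating an alert rule that the grants don't satisfy."""
    required = [("read", target_id), ("write", rule_resource_group)]
    required += [("read", ag) for ag in action_group_ids]
    return [
        f"{perm} on {scope}"
        for perm, scope in required
        if not any(p == perm and covers(s, scope) for p, s in grants)
    ]


SUB = "/subscriptions/00000000-0000-0000-0000-000000000000"
RG = f"{SUB}/resourceGroups/rg-app"
TARGET = f"{RG}/providers/Microsoft.Compute/virtualMachines/vm-a"
ACTION_GROUP = ("/subscriptions/11111111-1111-1111-1111-111111111111/resourceGroups/rg-ops"
                "/providers/Microsoft.Insights/actionGroups/oncall")

grants = {("read", SUB), ("write", RG)}
# Reports only the missing read permission on ACTION_GROUP, which is in another subscription.
print(missing_permissions(grants, TARGET, RG, [ACTION_GROUP]))
```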

These built-in Azure roles, supported at all Azure Resource Manager scopes, have permissions to access alert information and, in the case of Monitoring Contributor, to create alert rules:

- Monitoring Contributor: can create alerts and use resources within their scope.

- Monitoring Reader: can view alerts and read resources within their scope.

If the target action group or the rule location is in a different scope than the two built-in roles, create a user with the appropriate permissions.

Pricing

For pricing information, see Azure Monitor pricing.