Too much fun at CSUN! Part 3: 8 Way Speaker - Controlling speech output in many different ways for communication (Windows Store app, HTML/js)

A while ago I spent some time with a gentleman who was blind and had MND/ALS. (I described an experimental app I built based on our experiences, at https://herbi.org/WebKeys/WebKeys.htm.) Over time I noticed how the gentleman would support one arm with the other, as he’d reach over the keyboard to press a key to control his screen reader. So this got me thinking about how an app should continue to be usable as a person’s physical abilities change. The person shouldn’t have to learn a new app over time, just because their physical abilities are changing.

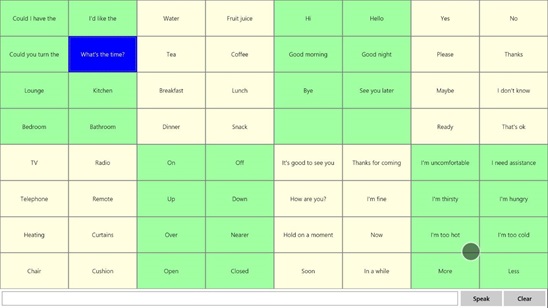

So I set to work building a simple text-to-speech app, with the goal of supporting many different forms of input. The app shows a simple 8-by-8 grid of customizable words and phrases. The grid just contains buttons, with the buttons styled with CSS to customize such things as their default, keyboard focused, mouse-over, and high contrast states. This was all routine stuff.

Try it yourself - Build your own Windows Store app with text-to-speech! Download the “Speech synthesis” sample at https://code.msdn.microsoft.com/windowsapps/Speech-synthesis-sample-6e07b218 and see how easy it is to add text-to-speech to your app.

Try it yourself - Learn how your app can fully respect your customer’s choice of high contrast colors! Download the XAML sample at https://code.msdn.microsoft.com/windowsapps/XAML-high-contrast-style-c0ce936f. Download the CSS sample at https://code.msdn.microsoft.com/windowsapps/high-contrast-b36079d8.

It's easy to add support for touch, mouse and keyboard to your Windows Store apps, and there are lots of code snippets around showing how to do that. So I got these forms of input working in the app first, and while that work was incredibly important, it wasn’t particularly interesting from a technical perspective. (Actually, it’s more interesting than it might seem, given that many eye tracking and head tracking systems will simulate mouse input. So if your app supports mouse input, it might be usable to someone who can only move their head or eyes.)

There were two other forms of input that I was more interested in from a technical perspective. The first was inspired by a question I’d received years earlier. The question was whether I could build a “gesture-to-speech” app, so that a particular person who only had very small movement in his hand could trigger text-to-speech. (The results of this experiment leveraged the Windows gesture recognition functionality, and are at https://herbi.org/HerbiGestures/HerbiGestures.htm.)

So how could users with small movements in their hands use my new app? Well, maybe I just need to make a note of where the pointer (perhaps a finger or mouse) goes down, and how far it moves in some direction. I then amplify that distance by some (configurable) amount to get some further point on the screen, and find what UI element is beneath that further point. Once I’ve got that element, I’ll just set keyboard focus to it, and it’ll stand out to the user. Then a quick tap by the user, and the element gets invoked. I called this form of input in my app “Small movements”, and it works both with small touch movements and small mouse movements.

This is an example of why I find building apps like this such a learning experience. I’d played with pointer event handlers before, but never had to find an element beneath a point. But all the info I need is up at MSDN, and the elementFromPoint() function that I’d never used before did the trick just fine.
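To make the “Small movements” idea a little more concrete, here’s a minimal sketch of how the amplification and the elementFromPoint() lookup might fit together. This isn’t the code from the app. The function names amplifyPoint and focusTargetButton, the bounds parameter, and the clamping to the edge of the app are all my assumptions for illustration; only elementFromPoint() and focus() are real DOM calls.

```javascript
// Hypothetical helper: project a small pointer movement to a farther point.
// start and current are {x, y} in client coordinates; factor is the
// (configurable) amplification applied to the distance moved.
function amplifyPoint(start, current, factor, bounds) {
  const x = start.x + (current.x - start.x) * factor;
  const y = start.y + (current.y - start.y) * factor;
  // Assumption: clamp so the projected point always stays inside the app.
  return {
    x: Math.min(Math.max(x, 0), bounds.width - 1),
    y: Math.min(Math.max(y, 0), bounds.height - 1)
  };
}

// Hypothetical helper: find the button beneath the projected point and give
// it keyboard focus. The document is passed in as `doc` so that the logic
// can be exercised without a real page.
function focusTargetButton(doc, start, current, factor, bounds) {
  const point = amplifyPoint(start, current, factor, bounds);
  const element = doc.elementFromPoint(point.x, point.y);
  if (element && element.tagName === "BUTTON") {
    element.focus();
    return element;
  }
  return null;
}
```

In a page, the start point could be recorded in a pointerdown handler from the event’s clientX and clientY, and focusTargetButton(document, ...) could then be called from a pointermove handler with the current position.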

Figure 1: The 8 Way Speaker app speaking text from a target button near the top left of the app, while the user is interacting with the app through touch near the bottom right of the app.

The other type of input I was particularly interested in was the switch device. When using switch devices, the app will often receive some form of simulated keyboard or mouse input, and so I decided to have my app respond to a press of the Spacebar to enable switch control input. (I can update this to support other types of keyboard or mouse input if someone asks me to.) So all I have to do is react to the Spacebar to control timers to set scanning visuals in the app, (first scanning the rows, and then the buttons in a row). CSS styling made showing the required visuals easy.
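The row-then-button scanning described above can be sketched as a small state machine. Again, this is only an illustration under my own assumptions, not the app’s actual code: createScanner, tick, press and state are hypothetical names, and I’m assuming that the first press starts the row scan, the second press picks a row, and the third press picks a button.

```javascript
// Hypothetical row-then-button scanner for a grid of rows x cols buttons.
function createScanner(rows, cols) {
  let mode = "idle"; // "idle", "rows" or "buttons"
  let row = 0;
  let col = 0;

  return {
    // Called on each timer tick: move the highlight to the next row, or to
    // the next button in the chosen row, wrapping round at the end.
    tick() {
      if (mode === "rows") {
        row = (row + 1) % rows;
      } else if (mode === "buttons") {
        col = (col + 1) % cols;
      }
    },
    // Called on each switch press (for example, a press of the Spacebar).
    // Returns the chosen {row, col} once a button is selected, else null.
    press() {
      if (mode === "idle") {
        mode = "rows";
        row = 0;
        return null;
      }
      if (mode === "rows") {
        mode = "buttons";
        col = 0;
        return null;
      }
      mode = "idle";
      return { row: row, col: col };
    },
    // The current state, which could be used to set CSS classes for the
    // scanning visuals.
    state() {
      return { mode: mode, row: row, col: col };
    }
  };
}
```

In an app, tick() might be driven by setInterval() at the user’s chosen scan rate, and press() by a keydown handler that checks for the Spacebar.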

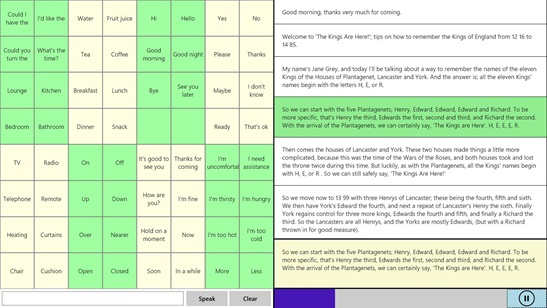

So having added switch device control, I’d had speech output triggered using 8 different forms of input, so I named the app “8 Way Speaker”, and uploaded it to the Store. While I was doing this, I was thinking about a stand-up comedian who has cerebral palsy and who uses a device to output speech for him when delivering his routine. Would it be possible for me to add a “Presentation mode” to my app, such that the app could be used for public speaking, but critically, use all the forms of input I’d explored already, to control the presentation?

The answer turned out to be yes. I added a way for the app to show a set of pre-prepared text passages, and the user could control when the passages are spoken, paused or resumed. In fact, I contacted the stand-up comedian for his advice on what features are most important when using a device to deliver speech when presenting, and I updated my app based on his really helpful thoughts.

As it turned out, adding support for use of touch, mouse, keyboard and the “small movement” feature was straightforward. The most interesting part of this work for me was not so much technical; rather, it was all about the user experience. And this related to whether I could build an efficient user experience around presenting with a switch device. How could a switch device user control the presentation efficiently? That is, how could they pause, resume, restart the current passage, and move to the next passage without having to wait a long time for the scan feedback to reach the element that they want to interact with? So I chose a scan order that makes it really quick to pause and resume the current passage being spoken, and to then scan to restart the passage or move to the next passage. On the one hand, this seems an efficient way for the user to trigger common actions through the switch. On the other hand, if you miss the element you’re interested in, it takes a while for the scan to loop round to it again. So I can well believe this experience can be made more useful in practice, and I’m always looking for feedback on that.
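The trade-off in a scan order can be put into rough numbers. The sketch below is only a back-of-the-envelope illustration: the scan order shown is hypothetical (the app’s real order may well differ), the function names waitTimes and missPenalty are mine, and I’m assuming the first element is highlighted as soon as scanning starts.

```javascript
// Seconds until the scan highlight first reaches each element, assuming the
// first element in the order is highlighted as soon as scanning starts.
function waitTimes(scanOrder, scanRateSeconds) {
  const waits = {};
  scanOrder.forEach(function (name, index) {
    waits[name] = index * scanRateSeconds;
  });
  return waits;
}

// Extra delay if the user misses an element and has to wait for the scan
// to loop all the way round to it again.
function missPenalty(scanOrder, scanRateSeconds) {
  return scanOrder.length * scanRateSeconds;
}

// Hypothetical scan order, with the most common action first.
const order = ["pauseResume", "restart", "next"];
console.log(waitTimes(order, 0.5));
console.log(missPenalty(order, 0.5));
```

Putting the most common action first keeps its wait at zero, but every element added to the scan lengthens the loop, and so increases the cost of a miss.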

Figure 2: Speaking of a presentation in the 8 Way Speaker app being controlled through a switch device.

All in all, it’s been really interesting for me to find that it’s so straightforward to have this Windows Store app controlled through so many different forms of input, and so the app could remain usable as a person’s physical abilities change over time. The app’s being tested now by someone who has cerebral palsy, and I really appreciate their help in making the app more useful to more people.

A note from CSUN

While demo’ing two of my apps which can be controlled through a switch device, I had one app’s scan rate set to 0.5 seconds, and the other’s set to a more leisurely 1 second. I was asked afterwards about whether the speed of the scan is configurable to suit the needs of the user. Both my apps have options to select from a list of scan rates, but I’m not convinced they show a good set of choices. One app only offers rates of 0.5, 1 and 2 seconds, and the other offers a lot more options in 0.5 second increments. So if I get feedback asking for other choices, I’ll update the apps accordingly.

Further posts in this series:

Too much fun at CSUN! Part 1: Demo’ing four assistive technology apps for Windows at CSUN 2015

Too much fun at CSUN! Part 2: Herbi WriteAbout - Handwriting development

Too much fun at CSUN! Part 4: My Way Speaker - Using a switch device to efficiently browse the web

Too much fun at CSUN! Part 5: Herbi Speaks - Creating boards for education or communication